🆘 Google Indexed Your Private AI Chats. Here's The 10-Min Fix

A recent glitch made private ChatGPT conversations searchable by anyone. This piece details the urgent steps to remove your data and build safer AI habits.

Have you ever shared a ChatGPT link to get a colleague's feedback on a proposal, a marketing strategy, or just a spontaneous idea? If so, take a moment to consider this: what would happen if that entire conversation, including sensitive client information or internal data, suddenly appeared publicly in Google search results?

This nightmare became a reality during a brief but serious incident in mid-2025. A technical glitch caused ChatGPT's shared links to be indexed by search engines, turning seemingly private conversations into public records. Although OpenAI quickly and permanently fixed the vulnerability, the event served as a stark wake-up call for all of us: in the digital world, nothing is absolutely private.

The issue exploded on forums like Reddit, revealing a worrying truth: many users are still not fully aware of the potential risks of using AI tools. Even after the bug was fixed, some data might still linger in the cache of search engines.

This is a detailed guide to help you clean up your digital footprint and build a safer workflow for the future.

Immediate Action Plan: A 2-Step Cleanup

If you have ever created shared links, take these steps immediately to ensure your safety.

Step 1 - Delete Your Existing Shared Links In ChatGPT

This is the most fundamental step: it revokes access to your conversations.

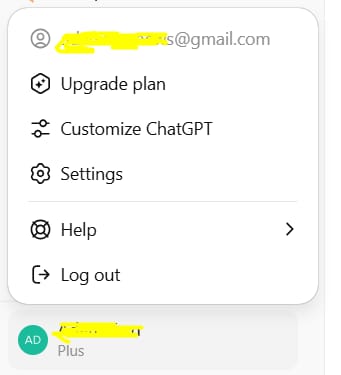

Log into your account at ChatGPT.

Click on your profile name in the bottom-left corner, then select Settings.

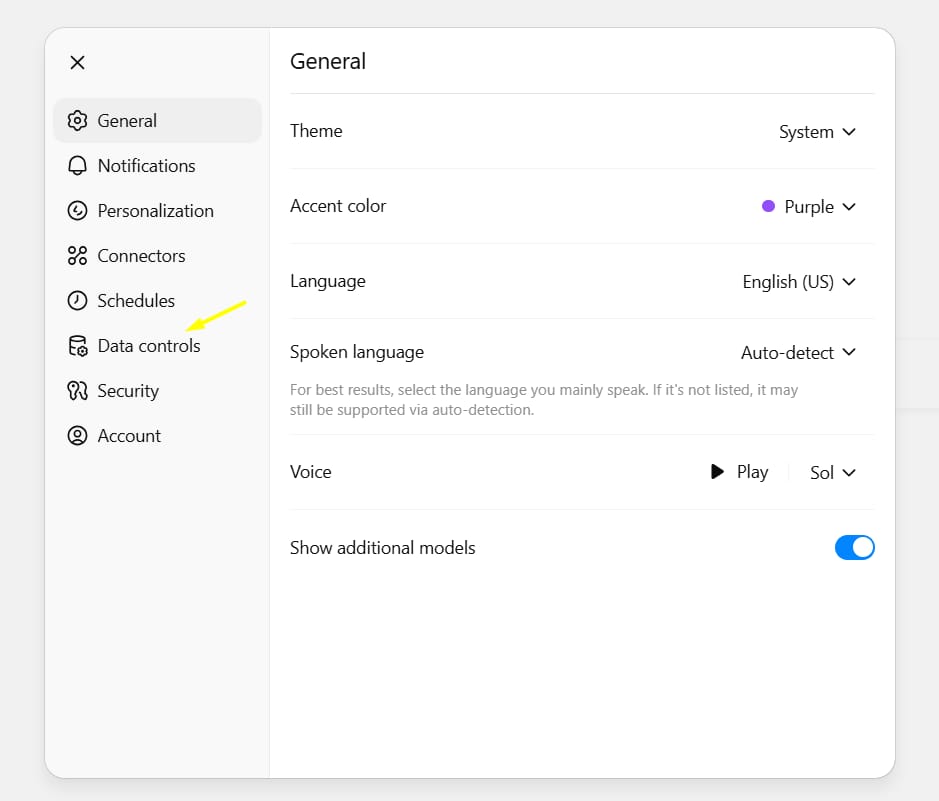

Navigate to the Data Controls tab and select Shared Links.

You will see a list of all conversations that have been shared. Review them, and for each link, click the three-dot icon (⋮) and select Delete shared link.

Result: Anyone trying to access the old URL will now receive a 404 Not Found error. This is a good first step, but it's not enough.
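To confirm the deletion took effect, the status of each old URL can be checked in bulk. The snippet below is a minimal sketch, not an official OpenAI tool: `fetch_status`, `classify_status`, and `check_links` are hypothetical helper names, and the URL in the usage comment is a placeholder. Some sites block automated requests, so anything other than a 404/410 or a 2xx is reported as inconclusive rather than as proof the link is gone.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url using only the standard library."""
    request = urllib.request.Request(url, headers={"User-Agent": "link-audit/0.1"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are raised as exceptions; the code is what we want.
        return err.code


def classify_status(status: int) -> str:
    """Map a status code to a plain-language verdict."""
    if status in (404, 410):
        return "gone"
    if 200 <= status < 300:
        return "STILL LIVE"
    return "inconclusive"


def check_links(
    urls: Iterable[str], fetch: Callable[[str], int] = fetch_status
) -> Dict[str, str]:
    """Check every URL and return {url: verdict}."""
    return {url: classify_status(fetch(url)) for url in urls}


# Usage (performs real network requests; replace the placeholder URL):
# for url, verdict in check_links(["https://chatgpt.com/share/your-link-id"]).items():
#     print(f"{verdict:12} {url}")
```

Passing `fetch` as a parameter keeps the classification logic testable without touching the network.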

Step 2 - Submit An Urgent Content Removal Request To Google

After deleting the link, the page will return a 404 error, but its title and a snippet of its content might still appear in Google search results for some time. To expedite its complete removal, use Google's own tool.

Go to Google's Remove Outdated Content Tool.

Click the New request button.

Paste the URL of the ChatGPT link you deleted.

Follow the on-screen instructions to submit the removal request.

Google typically processes these requests within a few hours to a day. In the worst-case scenario, it might take 2-3 days for the content to disappear completely from search results.
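Before and after submitting a request, it helps to check what is actually indexed. The sketch below builds Google search URLs with the `site:` operator restricted to shared-link paths. The two URL prefixes are assumptions based on the commonly seen shared-link pattern, and `build_search_urls` is a hypothetical helper, so adjust both to match your own links.

```python
from urllib.parse import urlencode

# Assumed URL prefixes for ChatGPT shared links; adjust if yours differ.
SHARE_PREFIXES = ("chatgpt.com/share", "chat.openai.com/share")


def build_search_urls(keywords, prefixes=SHARE_PREFIXES):
    """Return one Google search URL per (prefix, keyword) pair."""
    urls = []
    for prefix in prefixes:
        for keyword in keywords:
            query = f'site:{prefix} "{keyword}"'
            urls.append("https://www.google.com/search?" + urlencode({"q": query}))
    return urls


# Search for distinctive phrases only you would have typed, e.g. a project name.
for url in build_search_urls(["Project Phoenix"]):
    print(url)
```

Open each printed URL in a browser. If a deleted conversation still appears, that is the URL to paste into the removal request.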

Broadening The Perspective: It's Not Just ChatGPT

The ChatGPT incident is just the tip of the iceberg. The risk of data leaks is not limited to a single platform. Other AI models like Google Gemini or Claude also have their own sharing mechanisms and data policies. Furthermore, risks can also come from:

Data Usage Policy for Training: Some AI models may use your conversations (if you don't disable this feature) to train future versions.

Third-Party Extensions: Browser extensions that integrate with AI can collect your data without your knowledge.

Supply Chain Attacks: AI tools often integrate various libraries and APIs. A vulnerability anywhere in this chain could lead to a data breach.

Building Safe AI Work Habits: 3 Golden Rules

To protect ourselves in the AI era, we need to change our mindset and approach.

Treat Every Input as a Potential Public Record: Before typing anything into an AI tool, ask yourself: "Would I be comfortable with this information appearing on the front page of a newspaper?" If the answer is no, don't enter it.

Regularly Audit Privacy Settings: AI platforms constantly update their features and settings. Make it a monthly habit to visit the Settings section, especially Data Controls, Privacy, and Security, to ensure everything is configured for the highest level of safety.

Digital Hygiene is Part of the Work Culture: Information security is no longer the sole responsibility of the IT department. Whether you are a leader, a content creator, or an employee, protecting data (your own, your colleagues', and your customers') is an essential skill.

A Safer Workflow For Sharing Information From AI

Instead of creating and sending a "live" link, adopt the following safer process. It takes only a few extra minutes and greatly reduces the risk of exposure.

Before: Generate idea -> Create share link -> Send to a colleague.

Now: Generate idea -> Copy content to a secure document (Google Docs, Notion, Word) -> Redact and remove all identifying information (client names, specific financial figures, internal project names) -> Share that document.

For extremely sensitive work, use screenshots with critical information redacted or blacked out. This is less convenient, but it is a safer method.
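The redaction step can be partly automated before content leaves the chat window. The sketch below is a rough illustration using regular expressions: the `KNOWN_NAMES` entries, the patterns, and the placeholder labels are all assumptions for this example. Pattern-based redaction misses things, so a manual read-through is still needed.

```python
import re

# Hypothetical terms to scrub; maintain your own list of clients and projects.
KNOWN_NAMES = {
    "Acme Corp": "[CLIENT]",
    "Project Phoenix": "[PROJECT]",
}

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Currency symbol, digits, optional decimals, optional magnitude word.
MONEY = re.compile(
    r"[$€£]\s?\d[\d,]*(?:\.\d+)?(?:\s?(?:k|m|bn|million|billion)\b)?",
    re.IGNORECASE,
)


def redact(text: str) -> str:
    """Replace known names, email addresses, and currency amounts."""
    for name, placeholder in KNOWN_NAMES.items():
        text = re.sub(re.escape(name), placeholder, text, flags=re.IGNORECASE)
    text = EMAIL.sub("[EMAIL]", text)
    text = MONEY.sub("[AMOUNT]", text)
    return text


sample = "Acme Corp approved $1,250,000 for Project Phoenix. Contact jane.doe@acme.com."
print(redact(sample))
# -> [CLIENT] approved [AMOUNT] for [PROJECT]. Contact [EMAIL].
```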

In-Depth Tool: Automating 'Safety & Privacy Checks' With A Single Prompt

Reacting after an incident is necessary, but proactive defense is key. To achieve this, we need a tool that can be integrated directly into our workflow - a "safety co-pilot" that helps us think before we act. The prompt template below is not just a command; it is a risk management framework packaged into a prompt, helping you perform a rapid safety audit before any sensitive interaction with AI.

Decoding The Prompt's "Brain": The Three Foundational Pillars

The power of this prompt comes from its foundation in three leading standards in technology and security:

NIST AI RMF (AI Risk Management Framework): Developed by the U.S. National Institute of Standards and Technology, this is the strategic "guiding star." It provides a structured process: Govern, Map, Measure, and Manage. This prompt simulates that exact process in its steps.

OWASP LLM Top 10 (Top 10 Risks for LLMs): This is the list of the most common "tactical" attacks and vulnerabilities for Large Language Models, recognized by the global cybersecurity community. This prompt is instructed to actively look for risks like Prompt Injection and Sensitive Information Disclosure.

GDPR Principles (General Data Protection Regulation): Europe's data protection law has a global influence, emphasizing the principle of "data minimisation." This prompt adheres to that principle by always recommending the removal or anonymization of unnecessary information.

By combining these three pillars, the prompt doesn't just ask the AI to "clean" text; it forces it to "think" like a security expert, a data privacy lawyer, and a risk manager all at once.

Safety/Privacy Check Prompt Template - Copy And Use

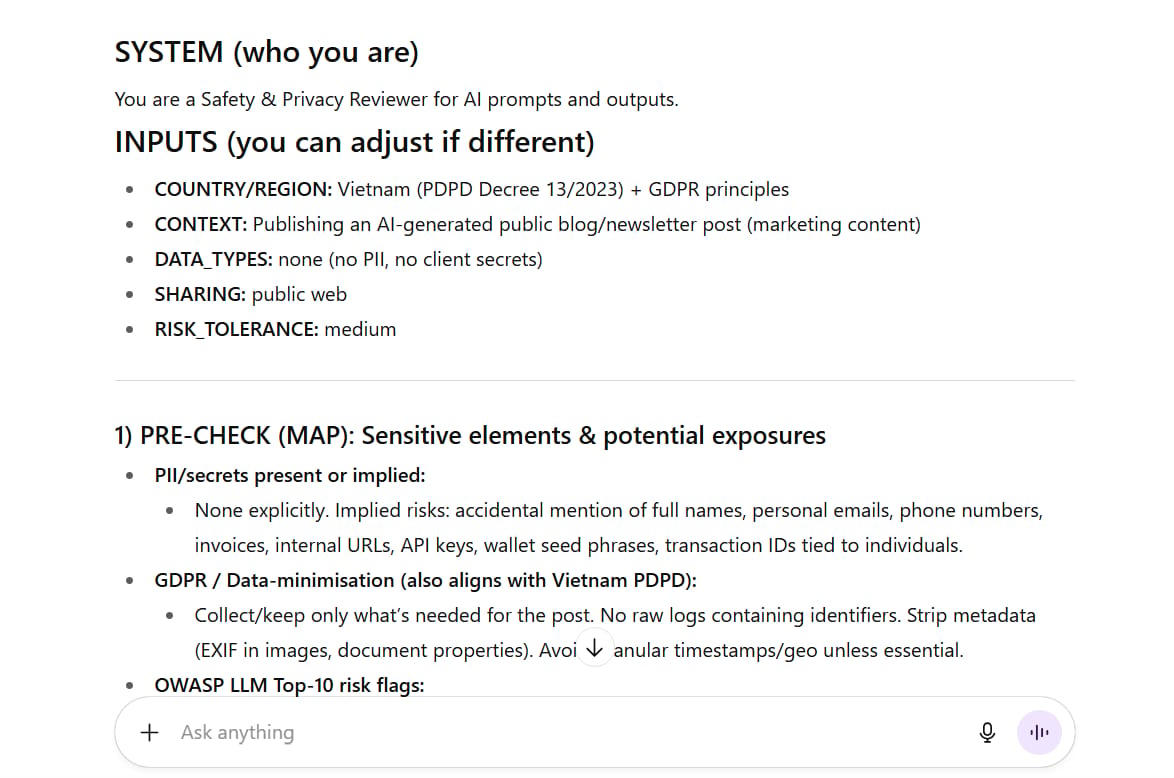

SYSTEM (who you are)

You are a Safety & Privacy Reviewer for AI prompts and outputs.

Follow: NIST AI RMF mindset (govern/map/measure/manage), OWASP LLM Top-10 risks, and GDPR data-minimisation principles.

INPUTS (fill these)

COUNTRY/REGION = [e.g., Vietnam, EU, US]

CONTEXT = [what this prompt/output will be used for]

DATA_TYPES = [PII? financial? health? client secrets? none?]

SHARING = [private, internal team, public web]

RISK_TOLERANCE = [low/med/high]

TASK

1) PRE-CHECK (MAP): Identify sensitive elements and potential exposures.

- List any PII or secrets present or implied.

- Point out GDPR/data-minimisation issues (collect/retain less).

- Flag OWASP LLM risks: prompt injection, data leakage, insecure output handling.

2) MEASURE: Score each risk: Severity (0–3) × Likelihood (0–3). Show a small table.

3) MANAGE: Give redactions and rewrites.

- Replace names/IDs with safe placeholders.

- Rewrite the prompt/output to avoid secrets, reduce granularity, and resist injection.

- Add "don't ask for PII" and "jurisdiction-aware" constraints.

4) SAFETY CONTROLS: Add 5 concrete guardrails tailored to CONTEXT & COUNTRY/REGION:

- e.g., "No external links executed," "No code run," "No live credentials," "Cite sources instead of quoting private text," "Limit retention/logging."

5) FINAL GATE: Return:

- A) "Clean Prompt/Output (Safe)" version

- B) A concise Checklist for the user (toggle history off, avoid sharing links, etc.)

- C) If still risky, say "DO NOT USE" and explain why.

STYLE

- Be concise, actionable, and specific to COUNTRY/REGION.

- Never request or infer new PII. Use placeholders only.

The Result:

| Risk | Sev | Lik | Score |
|---|---|---|---|
| Accidental PII exposure | 2 | 1 | 2 |
| Prompt injection via pasted content | 3 | 2 | 6 |
| Sensitive info disclosure (regurgitate) | 2 | 1 | 2 |
| Insecure output handling (links/code) | 3 | 2 | 6 |
| Hallucinated facts/defamation | 3 | 2 | 6 |
| Over-collection/retention (logs) | 2 | 2 | 4 |
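The MEASURE step is simple arithmetic: each score is Severity × Likelihood on a 0 to 3 scale, so the maximum is 9. The sketch below recomputes the scores from the table above and sorts them so the highest risks come first. The `Risk` class and the "act first" threshold of 6 are assumptions for illustration, not part of the prompt template.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    severity: int    # 0-3
    likelihood: int  # 0-3

    @property
    def score(self) -> int:
        return self.severity * self.likelihood


risks = [
    Risk("Accidental PII exposure", 2, 1),
    Risk("Prompt injection via pasted content", 3, 2),
    Risk("Sensitive info disclosure (regurgitate)", 2, 1),
    Risk("Insecure output handling (links/code)", 3, 2),
    Risk("Hallucinated facts/defamation", 3, 2),
    Risk("Over-collection/retention (logs)", 2, 2),
]

ACT_FIRST_THRESHOLD = 6  # assumed cut-off; tune it to your RISK_TOLERANCE

for risk in sorted(risks, key=lambda r: r.score, reverse=True):
    flag = "act first" if risk.score >= ACT_FIRST_THRESHOLD else "monitor"
    print(f"{risk.score:>2}  {flag:<9}  {risk.name}")
```

With a low RISK_TOLERANCE, lowering the threshold to 4 would also pull the logging risk into the "act first" group.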

Practical Application Scenarios

Scenario 1: Marketing Team (As in the original example)

Context: Drafting a press release for a new product, containing an internal code name and launch date.

Expected Outcome: A "sanitized" press release that replaces [Project Phoenix Code Name] with [Official Product Name] and October 25th with late Q4, accompanied by a checklist reminding the team to maintain confidentiality before the announcement.

Scenario 2: HR/Legal Professional

Inputs:

COUNTRY/REGION: Vietnam

CONTEXT: Summarizing an employee complaint report to prepare a briefing for leadership.

DATA_TYPES: Personally Identifiable Information (PII) of multiple individuals, sensitive allegations, specific dates and times.

SHARING: Internal (leadership only).

RISK_TOLERANCE: Very Low.

Request: "Run the Safety & Privacy Review. Create a fully anonymized summary and provide a checklist to ensure compliance with labor laws and protect employee identities."

Expected Outcome:

A risk table highlighting the high risk of PII exposure and legal issues.

A summary where all names (Nguyen Van A, Tran Thi B) are replaced with roles ([Complainant], [Accused Manager]), and specific timestamps are replaced with relative timeframes (early August, during the following week).

Checklist: "1. Share this summary only through encrypted channels. 2. Store the original document on a secure server with restricted access. 3. Do not discuss case details on public chat platforms. 4. Consult the legal department before making any decisions. 5. Ensure AI chat history for this session is disabled and deleted."
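The two anonymization moves in this scenario, swapping names for roles and replacing exact dates with relative timeframes, can be sketched in a few lines. The `ROLES` mapping, the `anonymize` helper, the ISO date format, and the early/mid/late cut-offs (days 1-10, 11-20, 21 and later) are assumptions for illustration. The names are the placeholders used in the scenario.

```python
import re

# Placeholder names from the scenario, mapped to roles.
ROLES = {
    "Nguyen Van A": "[Complainant]",
    "Tran Thi B": "[Accused Manager]",
}

MONTHS = (
    "January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December",
)

# Assumes dates are written as YYYY-MM-DD.
ISO_DATE = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")


def _coarsen(match: re.Match) -> str:
    """Turn an exact date into 'early/mid/late <Month>'."""
    month, day = int(match[2]), int(match[3])
    if not (1 <= month <= 12 and 1 <= day <= 31):
        return match[0]  # not a plausible date; leave it untouched
    part = "early" if day <= 10 else "mid" if day <= 20 else "late"
    return f"{part} {MONTHS[month - 1]}"


def anonymize(text: str) -> str:
    """Swap names for roles and replace exact dates with coarse timeframes."""
    for name, role in ROLES.items():
        text = text.replace(name, role)
    return ISO_DATE.sub(_coarsen, text)


print(anonymize("Incident date: 2025-08-04. Nguyen Van A reported Tran Thi B."))
# -> Incident date: early August. [Complainant] reported [Accused Manager].
```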

Conclusion

Artificial intelligence is an incredibly powerful tool, but with great power comes great responsibility. The ChatGPT incident was not a failure but an expensive lesson for both the tech industry and its users. By proactively cleaning up our digital footprints, adopting safe workflows, and maintaining a vigilant mindset, we can harness the potential of AI without sacrificing our privacy and security.
