⚡ How MCP Turns Your AI Into An Automation Powerhouse

Tired of AIs that only talk? Model Context Protocol (MCP) is the new standard that lets AI use your tools, connect to apps, and truly automate workflows.

Artificial Intelligence, particularly Large Language Models (LLMs) like ChatGPT, Claude, and Gemini, has demonstrated a remarkable capacity for reasoning, analysis, and content generation. An AI can draft a flawless marketing email, outline a complex business strategy, or debug a snippet of code in seconds. It can provide you with the blueprint for success.

But there has always been a fundamental disconnect. The same AI that wrote the perfect email cannot send it. The model that analyzed your sales data cannot pull that data from your CRM in the first place. The strategist that outlined your project plan cannot open your project management tool and update a single task. This is the "last mile" problem of applied AI: LLMs have been brilliant thinkers but incapable doers. They exist within a digital sandbox, unable to interact with the world of applications and services where real work happens.

This limitation has historically relegated even the most advanced AI to the role of a sophisticated advisor, not an active participant. Early solutions attempted to bridge this gap by connecting LLMs to external tools via Application Programming Interfaces (APIs). This was a crucial first step, giving AI the ability to perform specific actions like searching the web or posting a message. However, this approach quickly revealed its own crippling complexities, creating a new set of challenges for developers and businesses alike.

Now, a new standard is emerging that promises to solve this problem elegantly and at scale. It's called the Model Context Protocol (MCP), and it represents the critical evolutionary leap from conversational AI to true, autonomous workflow automation.

The Age Of API Chaos: A Problem Of Standards

Before we can appreciate the elegance of MCP, we must first understand the problem it solves. The initial idea to empower LLMs was straightforward: if the model needs to access a tool, like Google Calendar or Salesforce, just connect it to that tool's API.

While functional for a single, simple integration, this approach shatters at scale. The digital world does not have a universal language for APIs. Every service speaks a slightly different dialect.

Inconsistent Authentication: One service might use an API key, another requires a complex OAuth 2.0 flow, and a third uses a simple bearer token. The LLM's integration layer needs to be custom-built to handle each unique method.

Varying Error Handling & Rate Limits: How an API reports an error, and how often you can call it before being temporarily blocked, is unique to each provider. Building a robust system requires custom logic for every single API endpoint.

Maintenance Nightmare: When a company like Notion updates its API, any custom integration built for it is at risk of breaking. Now, multiply that maintenance burden by ten, twenty, or a hundred different tools. The result is a brittle, expensive, and unscalable system.

This API chaos forced developers into a constant cycle of building and patching bespoke integrations. It was like hiring a brilliant CEO who needed a dedicated, full-time translator for every single department head they spoke to. The inefficiency was immense. A new approach was needed - a universal standard.
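To make that burden concrete, here is a minimal sketch of the per-service glue code that direct integrations tend to accumulate. The three services, their header names, and their error formats are hypothetical stand-ins rather than real vendor APIs; the point is only that each one needs its own branch.

```python
# Hypothetical example: three imaginary services, each with its own
# authentication style and rate-limit signal. None are real vendor APIs.

def calendar_headers(api_key: str) -> dict:
    # Service A: static API key in a custom header.
    return {"X-Api-Key": api_key}

def crm_headers(access_token: str) -> dict:
    # Service B: OAuth 2.0 access token sent as a bearer token.
    return {"Authorization": f"Bearer {access_token}"}

def notes_headers(token: str, version: str) -> dict:
    # Service C: bearer token plus a mandatory API version header.
    return {"Authorization": f"Bearer {token}", "Api-Version": version}

def is_rate_limited(service: str, status: int, body: dict) -> bool:
    # Each service signals "slow down" differently, so the check is bespoke.
    if service == "calendar":
        return status == 429
    if service == "crm":
        return status == 403 and body.get("code") == "RATE_LIMIT"
    if service == "notes":
        return body.get("error", {}).get("type") == "rate_limited"
    raise ValueError(f"no adapter written for {service!r}")
```

Every new tool adds another header builder and another branch, and every upstream API change means revisiting them.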

What Is MCP? The Universal Translator For AI

Introduced by Anthropic in late 2024 as an open standard, the Model Context Protocol (MCP) provides a single, standardized way for AI models to discover, understand, and use external tools and data sources.

Think of MCP as a universal power adapter for AI. Instead of needing a different plug for every country (or every API), you have one adapter that connects your device to any outlet. The AI only needs to learn to speak one language: the language of MCP. Any tool or service that wants to be accessible to the AI must also learn to speak this language by implementing an MCP-compatible server.

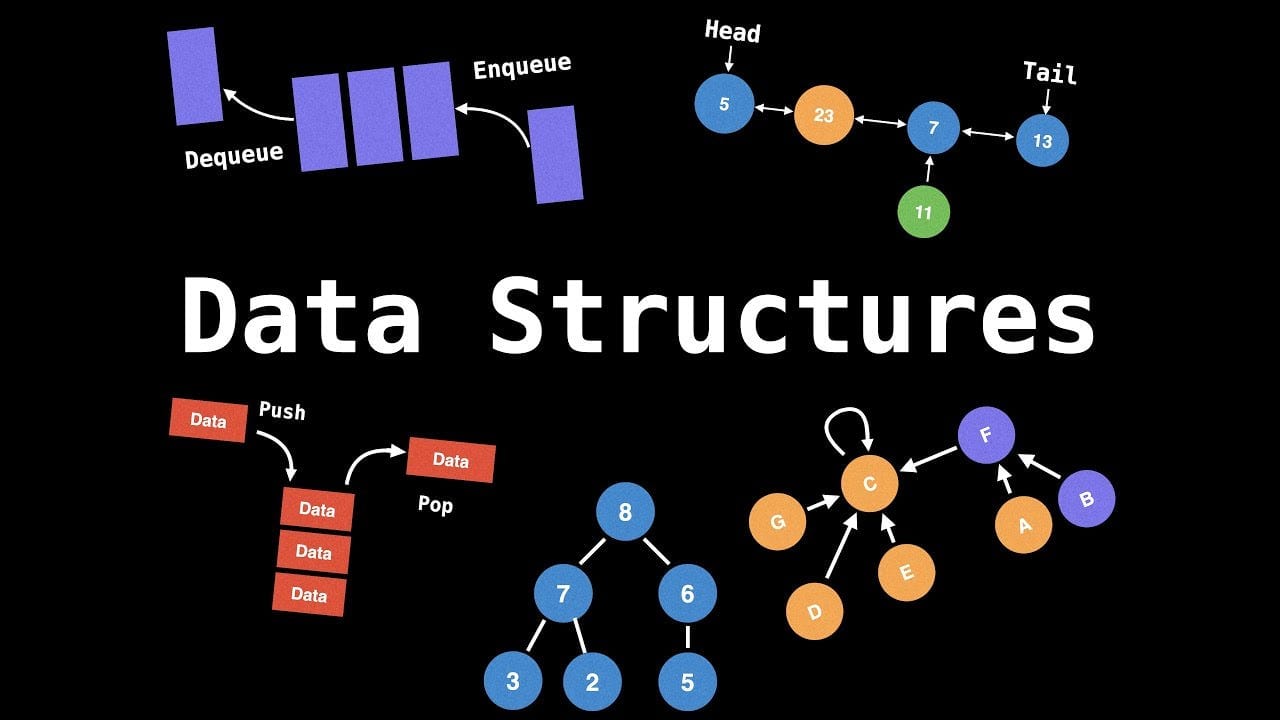

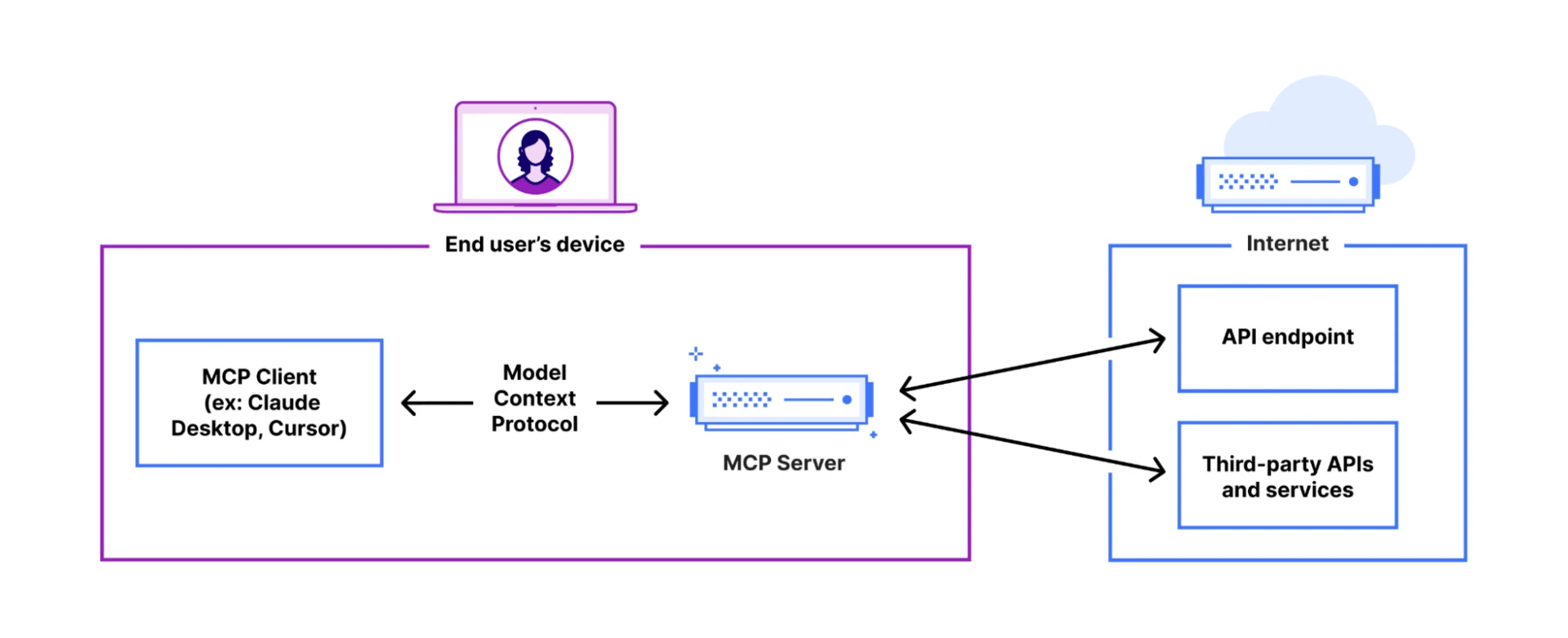

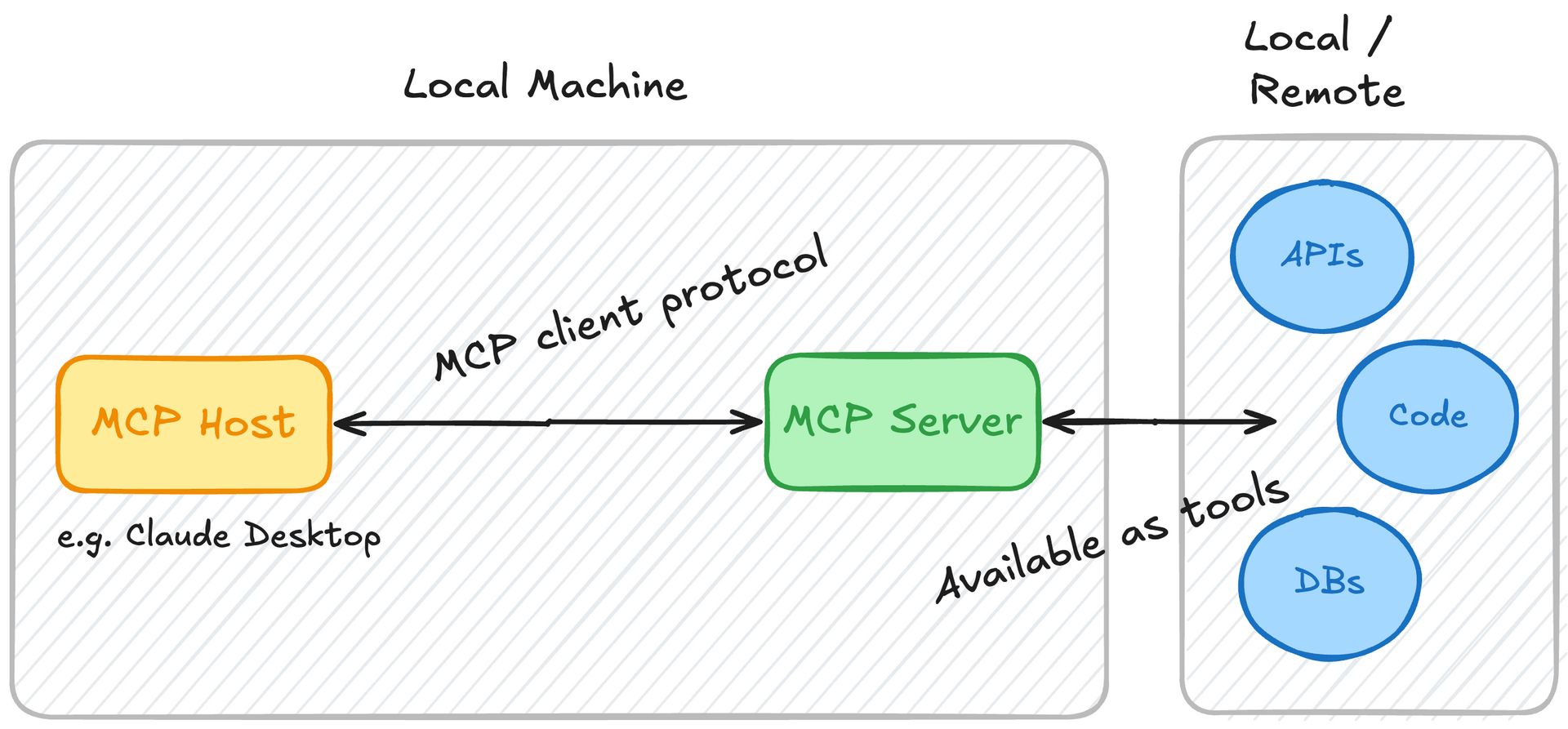

The MCP ecosystem is elegantly simple and consists of three core components:

MCP Client: This is the AI application itself (e.g., the chat interface for Claude or a custom AI agent). The client is responsible for understanding the user's intent and figuring out when a tool is needed. It then uses the MCP protocol to communicate that need.

MCP Server: This is the component that a service provider (like Notion, Asana, or even your company's internal database) builds and maintains. The server's job is to expose a set of capabilities - the tools, data, and actions the AI is allowed to use - and to execute them when requested by the client.

MCP Protocol: This is the standardized set of rules and message formats for the two-way communication between the client and the server. It defines how a client asks "What can you do?" (ListToolsRequest) and how it says "Do this specific thing" (CallToolRequest), ensuring both sides understand each other perfectly.
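As a rough illustration of what those two requests look like on the wire: MCP messages are JSON-RPC 2.0, and in the published specification the two requests correspond to the methods `tools/list` and `tools/call`. The sketch below only builds and prints the messages. The tool name `create_task` and its arguments are made up for the example.

```python
import json

def list_tools_request(request_id: int) -> dict:
    # "What can you do?": asks the server to describe its tools.
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}

def call_tool_request(request_id: int, name: str, arguments: dict) -> dict:
    # "Do this specific thing": invokes one tool by name with arguments.
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }

# "create_task" and its arguments are hypothetical, for illustration only.
messages = [
    list_tools_request(1),
    call_tool_request(2, "create_task", {"title": "Ship release notes"}),
]
for message in messages:
    print(json.dumps(message))
```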

A crucial paradigm shift introduced by MCP is that the responsibility for creating and maintaining the "translator" moves from the AI developer to the tool provider. If Asana wants the entire universe of MCP-compatible AIs to be able to manage tasks on its platform, it has a strong incentive to build and offer a high-quality, official MCP server. This creates a healthy, competitive ecosystem where service providers are motivated to make their tools as accessible and powerful as possible for AI agents.

The Unmistakable Advantages Of An MCP-Powered Ecosystem

Adopting a standardized protocol like MCP offers profound benefits over the old method of direct API integrations, making AI systems more powerful, flexible, and easier to scale.

True Model Agnosticism: With MCP, your tool integrations are no longer tied to a specific LLM. If you build a workflow that connects your project management tool to an AI, you can swap the underlying model from Claude to Gemini to a future open-source model with minimal effort. This allows you to always use the best model for the job - perhaps one for creative writing and another for logical analysis - without rebuilding your entire integration stack. This de-risks your technology choice and prevents vendor lock-in.

Radical Simplicity and Scalability: Instead of a web of complex, point-to-point connections, you have a clean hub-and-spoke model. Your AI client learns one protocol and can instantly connect to any number of tools that support it. Adding a new tool is no longer a multi-week development project; it's as simple as configuring the client to point to a new MCP server.

Unlocking Internal Systems: The power of MCP isn't limited to public SaaS applications. Because it's an open standard, companies can build MCP servers for their own proprietary, internal systems. Imagine an AI that can query your company's weird, legacy inventory database, interact with your custom-built HR portal, or pull logs from an internal server - all using the same standard protocol.

Enhanced Security and Governance: A centralized protocol allows for centralized governance. Administrators can define, at the MCP server level, exactly what an AI is permitted to do. For example, a server for a financial database could grant an AI read-only access for analysis but deny it permission to execute trades. This granular control is much harder to manage in a chaotic landscape of one-off API integrations.
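The governance point can be sketched in a few lines. This is one possible shape for a server-side policy check, assuming hypothetical tool names (`get_balance`, `list_transactions`, `execute_trade`); a real server would load its policy from configuration and tie it to the caller's identity.

```python
# Hypothetical tool names and policy, for illustration only.
READ_ONLY_TOOLS = {"get_balance", "list_transactions"}
WRITE_TOOLS = {"execute_trade"}

class PermissionDenied(Exception):
    """Raised when the policy does not allow a requested tool call."""

def authorize(tool_name: str, allow_writes: bool = False) -> None:
    # Read-only tools are always allowed; write tools need an explicit grant.
    if tool_name in READ_ONLY_TOOLS:
        return
    if tool_name in WRITE_TOOLS and allow_writes:
        return
    raise PermissionDenied(f"tool {tool_name!r} is not permitted")

def handle_call(tool_name: str, arguments: dict, allow_writes: bool = False) -> dict:
    # The policy check runs before any tool code does.
    authorize(tool_name, allow_writes)
    # Placeholder for the real tool implementation.
    return {"tool": tool_name, "arguments": arguments, "status": "ok"}
```

Because the check lives in the server, it applies to every AI client that connects, whichever model sits behind it.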

The ultimate benefit, however, is the one that will redefine how we work. MCP transforms AI from a conversational partner into an autonomous workflow engine. The scope of our requests can expand from a single command to a complex, multi-step process.

Beyond Chatbots: Real-World Workflow Automation With MCP

The difference between a non-MCP and an MCP-enabled AI is the difference between asking for a recipe and having a chef cook the meal for you. Let's explore some tangible, multi-step workflows that become possible.

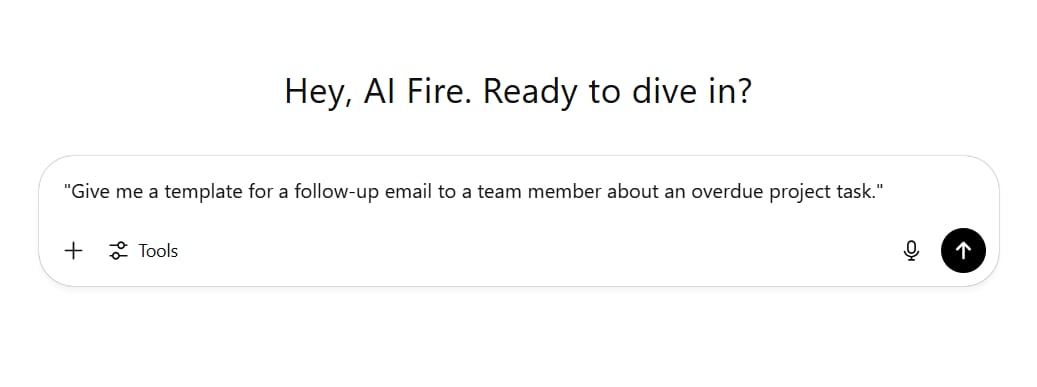

Old Prompt (without MCP):

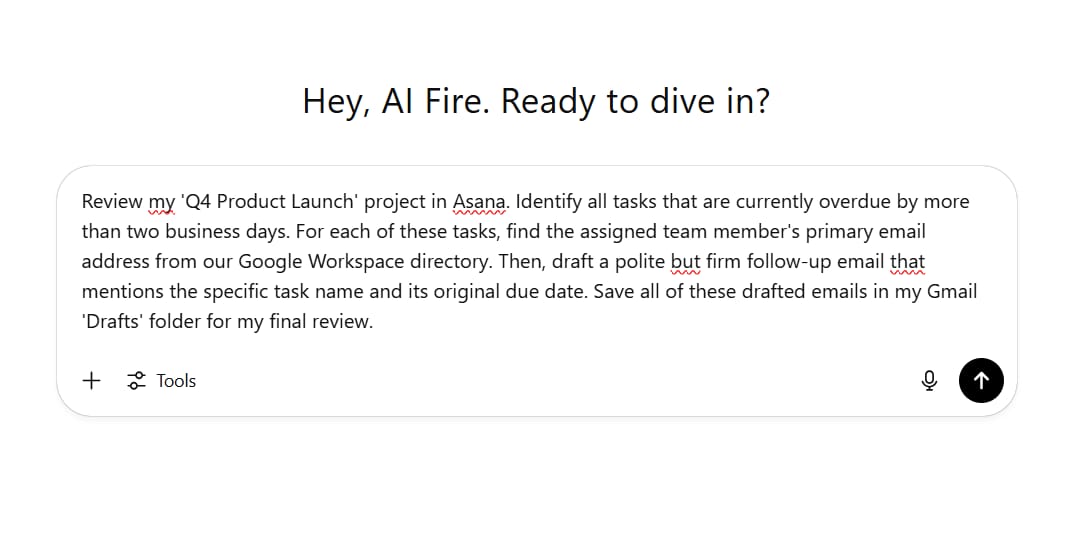

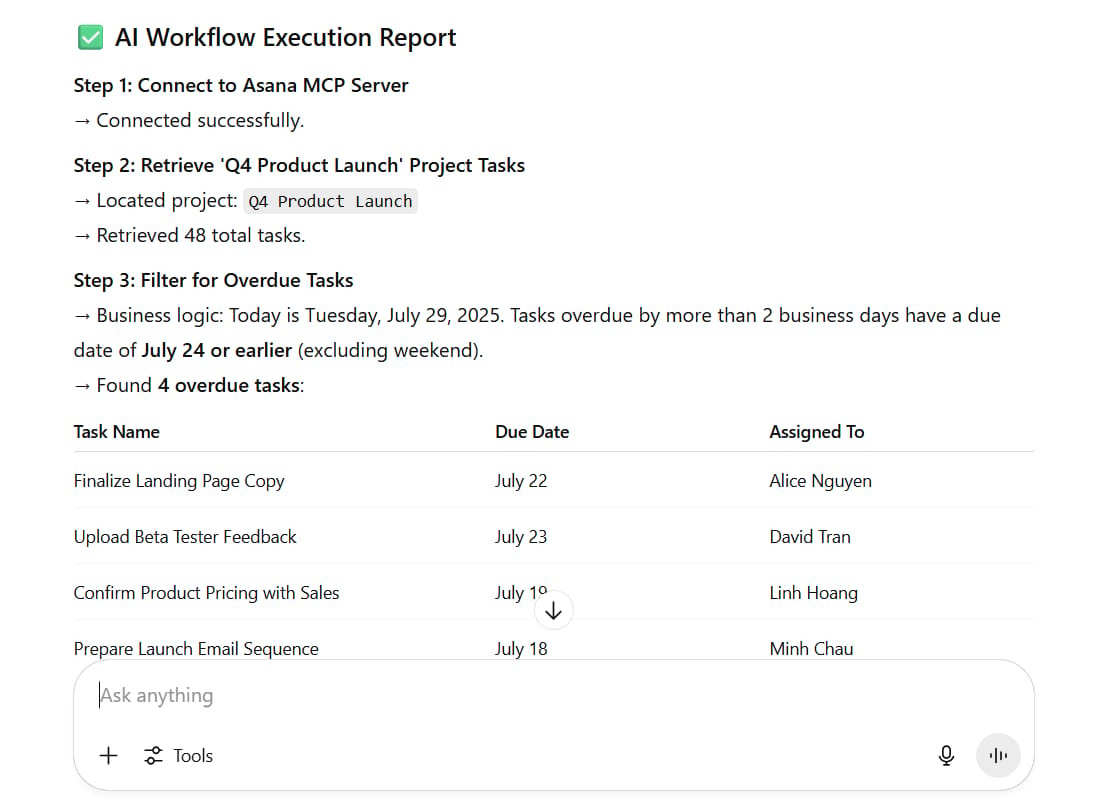

"Give me a template for a follow-up email to a team member about an overdue project task."

New Prompt (with MCP):

"Review my 'Q4 Product Launch' project in Asana. Identify all tasks that are currently overdue by more than two business days. For each of these tasks, find the assigned team member's primary email address from our Google Workspace directory. Then, draft a polite but firm follow-up email that mentions the specific task name and its original due date. Save all of these drafted emails in my Gmail 'Drafts' folder for my final review."

In this new paradigm, the AI isn't just generating text; it's executing a workflow:

Connects to the Asana MCP server.

Calls a tool to list projects and another to filter tasks within a specific project.

Processes the results to identify overdue tasks.

Connects to the Google Workspace MCP server.

Calls a tool to look up user information based on names from Asana.

Connects to the Gmail MCP server.

Calls a tool to create new draft emails, populating them with the synthesized information.

Reports back to the user upon completion.
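The steps above can be sketched as plain Python. This is not how a real agent is wired: the three MCP servers are replaced with in-memory stand-ins, and every task, name, and address is invented. It is only meant to show the control flow the model is orchestrating.

```python
from datetime import date, timedelta

# In-memory stand-ins for the Asana, Google Workspace, and Gmail servers.
# All data below is made up for illustration.
TASKS = [
    {"name": "Finalize pricing page", "assignee": "Dana", "due": date(2025, 10, 1)},
    {"name": "Record demo video", "assignee": "Lee", "due": date(2025, 10, 7)},
]
DIRECTORY = {"Dana": "dana@example.com", "Lee": "lee@example.com"}

def business_days_overdue(due: date, today: date) -> int:
    # Count weekdays strictly after the due date, up to and including today.
    days, current = 0, due
    while current < today:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            days += 1
    return days

def run_workflow(today: date) -> list:
    drafts = []
    for task in TASKS:  # list and filter the project's tasks
        if business_days_overdue(task["due"], today) <= 2:
            continue  # not overdue by more than two business days
        email = DIRECTORY[task["assignee"]]  # directory lookup
        drafts.append({  # stands in for creating a Gmail draft
            "to": email,
            "subject": f"Follow-up: {task['name']}",
            "body": f"'{task['name']}' was due on {task['due']:%B %d, %Y}. "
                    "Could you share an updated timeline?",
        })
    return drafts  # reported back to the user

drafts = run_workflow(today=date(2025, 10, 8))
print(f"{len(drafts)} draft(s) saved for review")
```

In an MCP setup, each stand-in would become a tool call to the relevant server, and the model would decide the order of the calls itself.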

Here are other powerful examples:

Financial Analysis & Reporting:

"Access our company's QuickBooks account via its MCP server and pull the Profit & Loss statement for the last fiscal quarter. Simultaneously, use the Alpha Vantage MCP server to retrieve the stock performance of our three main public competitors over the same period. Synthesize this data into a two-page summary, creating charts to visualize our P&L against their market performance, and save the final report as 'Q2 Competitive Analysis.docx' in our 'Executive Reports' folder on Google Drive."

Automated Customer Support Triage:

"Continuously monitor our Intercom support inbox. For any new incoming ticket that contains the keywords 'refund request,' 'billing error,' or 'cannot cancel,' automatically perform the following workflow: create a high-priority ticket in our Jira instance under the 'FIN-Billing' project, assign it to the finance support group, and post a notification in the #urgent-support-alerts Slack channel that includes the customer's name and a direct link to the newly created Jira ticket."

These examples illustrate a fundamental shift in human-computer interaction. The user states a high-level goal, and the AI, equipped with MCP, orchestrates the necessary digital tasks across multiple platforms to achieve it.
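The support-triage prompt reduces to a simple rule plus two tool calls. Here is a minimal sketch of that decision logic, assuming hypothetical tool names (`create_issue`, `post_message`) and a simplified ticket shape. It plans the calls rather than making them.

```python
TRIGGER_PHRASES = ("refund request", "billing error", "cannot cancel")

def needs_billing_escalation(ticket_text: str) -> bool:
    # Case-insensitive match on any of the trigger phrases.
    text = ticket_text.lower()
    return any(phrase in text for phrase in TRIGGER_PHRASES)

def plan_actions(ticket: dict) -> list:
    """Return the tool calls an agent might make for one ticket."""
    if not needs_billing_escalation(ticket["text"]):
        return []
    customer = ticket["customer"]
    return [
        # Tool names and argument shapes are assumptions, not real APIs.
        {"server": "jira", "tool": "create_issue",
         "arguments": {"project": "FIN-Billing", "priority": "High",
                       "summary": f"Billing issue from {customer}"}},
        {"server": "slack", "tool": "post_message",
         "arguments": {"channel": "#urgent-support-alerts",
                       "text": f"New billing ticket for {customer}"}},
    ]

print(plan_actions({"customer": "Sam", "text": "I have a Billing Error on my invoice"}))
```

In a live workflow the Slack message would also carry the link returned by the Jira call, so the second call depends on the result of the first.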

Getting Started With MCP: A Guide For Everyone

The beauty of a growing ecosystem is that there are multiple entry points, whether you're a non-technical user who wants to automate daily tasks or a developer building the next generation of AI applications.

For the No-Coder: Connecting The Dots

Most users will not need to build anything. Their journey will be about connecting existing, pre-built components.

Start with Native, Built-in Connectors

Both ChatGPT and Claude are rapidly integrating MCP connectors for the most common applications directly into their user interfaces. These are the safest and easiest way to start. You can typically find these in your account settings under a "Connectors" or "Integrations" tab.

ChatGPT: Often provides connectors for tools like Google Drive and GitHub, though some of these may initially be limited to "deep research" functions (read-only access).

Claude: Has been a leader in this space, offering robust connectors for services like Gmail with full action capabilities (reading, drafting, and sending). They also offer innovative connectors that can control your local computer, such as the Claude for Mac or Windows connectors, turning the AI into a true desktop assistant.

Use Official, Third-Party MCP Servers

When a native integration isn't available or powerful enough, the next best option is an official MCP server provided by the tool's company. A growing number of SaaS companies, from Notion to Microsoft, are building and maintaining their own servers. You can find a curated list on the official MCP GitHub repository.

The setup process is generally straightforward and analogous to setting up a new printer:

Go to the service you want to connect (e.g., Notion) and generate an API key or integration token from its developer settings.

In your AI application's settings (e.g., in a configuration file for the Claude desktop app), add a small snippet of text that defines the new connector, including the server address and your API key.

Restart the AI application.

This process requires a bit of instruction-following but is well within the reach of a non-technical, power user.
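For a sense of what that "small snippet of text" looks like, the sketch below prints one in the layout the Claude desktop app reads from its `claude_desktop_config.json` file, where servers are listed under an `mcpServers` key. The server name, the package name `example-notion-mcp-server`, and the environment variable are placeholders. The provider's own setup instructions give the real values.

```python
import json

# Layout follows the Claude desktop app's "mcpServers" config section.
# The package name and environment variable below are placeholders.
config = {
    "mcpServers": {
        "notion": {
            "command": "npx",  # program that launches the server
            "args": ["-y", "example-notion-mcp-server"],
            "env": {"NOTION_API_KEY": "paste-your-token-here"},
        }
    }
}

print(json.dumps(config, indent=2))
```

The printed JSON is what would be pasted into the configuration file before restarting the app.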

Explore Community Connectors (With Caution)

For niche tools or services that don't yet have official support, the open-source community often steps in. Websites like MCP.so are emerging as directories for hundreds of community-built connectors.

A critical word of warning: These connectors are built by individuals or small groups on the internet. While many are brilliant and safe, others could be poorly maintained, buggy, or, in the worst case, malicious. Use community connectors for non-critical tasks and never grant them access to sensitive data like passwords, primary email accounts, or financial systems.

For The Developer: Building The Bridges

If you're a developer or part of a technical team, MCP provides a powerful framework for creating custom, robust integrations. You can build private MCP servers for your company's internal tools, giving your entire organization an AI-powered interface to its own data.

The MCP project publishes well-documented SDKs for popular languages including Python, TypeScript, Java, and more. Excellent resources, like the "Build Rich-Context AI Apps with Anthropic" course from DeepLearning.AI, provide a structured path to mastery.

When building an MCP server, you generally have two approaches:

Low-Level Implementation: This involves directly implementing the MCP protocol specification. You write code to handle the specific request types (ListToolsRequest, CallToolRequest, etc.) and formulate the corresponding responses. This approach offers maximum control and customization but requires a deeper understanding of the protocol itself.

Pseudocode Example (Low-Level):

```python
# Pseudocode: create_tool_list_response, execute_tool, and
# create_tool_result_response are placeholders you would implement.
def handle_request(request):
    if request.type == "ListToolsRequest":
        # Describe every tool this server exposes.
        return create_tool_list_response()
    elif request.type == "CallToolRequest":
        # Run the requested tool and wrap its output in a response.
        tool_name = request.tool_name
        arguments = request.arguments
        result = execute_tool(tool_name, arguments)
        return create_tool_result_response(result)
    # Anything else is outside what this sketch supports.
    raise ValueError(f"Unsupported request type: {request.type}")
```

High-Level Implementation with Frameworks: To simplify the process, frameworks like FastMCP (for Python) and EasyMCP (for TypeScript) have emerged. These libraries abstract away the complexities of the protocol. As a developer, you can simply define your tools as regular functions and use decorators or wrappers to expose them. The framework handles all the underlying client-server communication for you.

Example (High-Level with a Framework):

```python
from mcp.server.fastmcp import FastMCP  # FastMCP as bundled in the official Python SDK

mcp = FastMCP("customer-lookup")

# Stand-in for a real database; replace with your own data access code.
CUSTOMERS = {"ada@example.com": {"name": "Ada", "plan": "pro"}}

@mcp.tool()
def find_customer(email: str) -> dict:
    """Searches for a customer by their email address."""
    return CUSTOMERS.get(email, {})

if __name__ == "__main__":
    # The framework turns the decorated function into an MCP tool
    # and serves it over stdio by default.
    mcp.run()
```

The Road Ahead: Challenges And The Inevitable Future

MCP is still a young standard, and like any new technology, it has rough edges. Some connectors can be buggy, the setup for local development can be tedious, and the security implications of giving AI autonomous agency are profound and require careful consideration. As an industry, we are still developing best practices for permissioning, auditing, and preventing misuse.

Despite these growing pains, the trajectory is clear. MCP, or a standard very much like it, is positioned to become as fundamental to the AI stack as REST APIs are to the web. It provides the missing link, the standardized communication layer that allows intelligence to manifest as action.

If you are a professional using AI in your daily work, now is the time to start exploring the connectors available for your favorite tools. If you are a developer or business leader building AI-powered applications, adopting and contributing to this standard is not just an option - it is a strategic imperative.

The era of the standalone AI chatbot is over. The age of the integrated, automated AI powerhouse has just begun.
