- AI Fire

- Posts

- 🚀 This Simple Prompt Gave My LLM A 200% Performance Lift

🚀 This Simple Prompt Gave My LLM A 200% Performance Lift

Boosting contextual faithfulness is a major AI challenge. While SFT and DPO offer gains, a novel prompting strategy shows far superior, efficient results.

🤔 What's your biggest challenge when working with LLMs? |

Table of Contents

In the landscape of artificial intelligence development, we often encounter a fascinating paradox. Modern Large Language Models (LLMs) can compose poetry in the style of Shakespeare, debug complex code snippets, and explain quantum physics concepts. Yet, these same models can fail spectacularly at a seemingly much simpler task: faithfully adhering to information provided directly to them.

This phenomenon, where an LLM disregards the context it's given in favor of its "knowledge" learned during training, is one of the biggest hurdles to deploying AI reliably. It undermines trust and limits the effectiveness of applications that depend on timely, accurate information - from legal assistants analyzing the latest case documents to medical diagnostic tools referencing newly published studies.

Andrej Karpathy, an influential mind in AI, has framed the evolution of software into three distinct eras:

Software 1.0: The traditional paradigm of programming, where humans write explicit rules and logic. The code is the universe.

Software 2.0: The era of machine learning, where systems learn from data. Instead of coding a task, developers curate a dataset and optimize the parameters of a neural network model. The focus shifts from code to data and model architecture.

Software 3.0: The emerging paradigm where large, pre-trained LLMs serve as a programmable "kernel." Here, "programming" is no longer about writing Python code or updating neural network weights, but about carefully designing prompts to steer the model's behavior.

This article dives into the heart of a key challenge in the Software 3.0 era. We'll explore why LLMs stubbornly cling to their outdated knowledge, dissect the sophisticated technical methods designed to fix this issue, and finally, reveal a simple yet profoundly effective prompt-based solution that improves performance by up to 200%.

The Core Problem: The Battle Between Parametric Knowledge And Provided Context

Imagine the following scenario: you're building a question-answering (QA) system for a chemical engineering firm using a powerful LLM. Its job is to answer technical questions based on the latest internal research documents. A researcher feeds it a recent paper describing a new alloy, "Adamantium-7B," which has a melting point of 3,200°C.

The researcher then asks, "According to the provided document, what is the melting point of Adamantium-7B?"

The LLM, having been trained on a massive corpus of prior internet data, might have "learned" about a fictional comic book alloy named "Adamantium" with different properties. Instead of answering from the context, it confidently proclaims: "Adamantium does not have a defined melting point as it is a fictional, nearly indestructible metal."

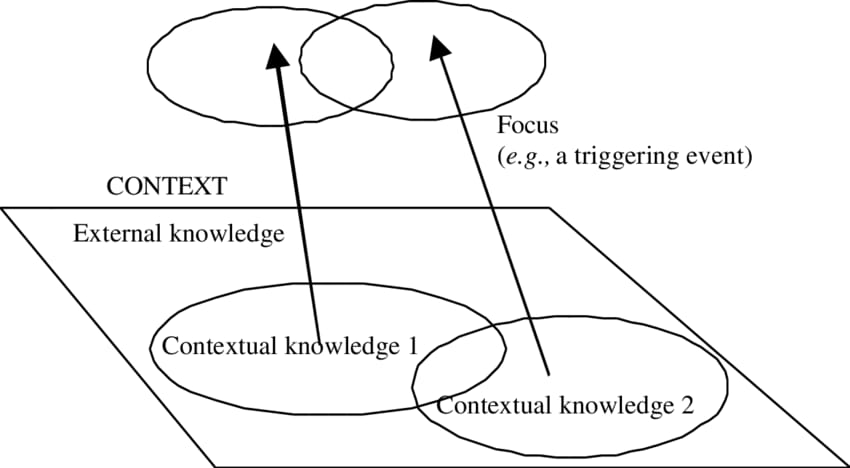

This is the essence of the problem. The LLM isn't simply looking up the answer in the text you provided. It's conducting an internal tug-of-war between two sources of information:

Parametric Knowledge: This is the information that has been encoded into the model's billions of parameters (weights) during its pre-training. It represents the model's default "understanding" of the world, based on the data it has learned.

Contextual Knowledge: This is the information you provide within the prompt at the time of the query.

When the contextual knowledge directly contradicts deeply ingrained parametric knowledge, the LLM often defaults to its parametric knowledge. This is because the patterns in its training data have been reinforced millions of times, creating very strong "neural pathways." The context you provide is a single, weaker, transient signal.

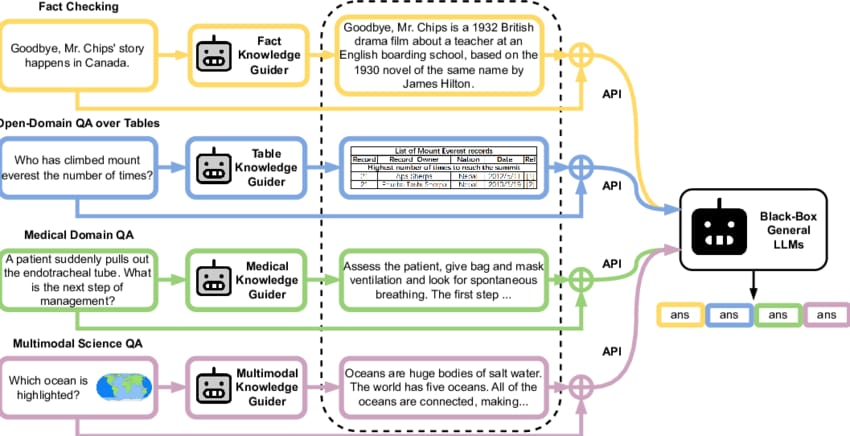

To tackle this systematically, the industry has rapidly adopted an architecture known as Retrieval-Augmented Generation (RAG).

Learn How to Make AI Work For You!

Transform your AI skills with the AI Fire Academy Premium Plan - FREE for 14 days! Gain instant access to 500+ AI workflows, advanced tutorials, exclusive case studies and unbeatable discounts. No risks, cancel anytime.

The Standard (But Flawed) Solution: Retrieval-Augmented Generation (RAG)

RAG systems are an intelligent solution for keeping LLMs current and grounded in facts. Instead of relying solely on its parametric knowledge, a RAG system works in two steps:

Retrieval: When a user asks a question, the system first searches an external knowledge base (e.g., a company's internal documents, scientific papers, or an up-to-date version of Wikipedia) to find relevant snippets of text.

Generation: It then takes these retrieved snippets and inserts them into the prompt as context, along with the user's original question. The LLM is then asked to generate an answer based on this context.

In theory, this should solve the problem. We are giving the LLM the correct information right when it needs it. However, as the alloy example showed, even with RAG, the LLM can still ignore the provided context. The bias toward its pre-existing knowledge persists, especially when the context contains counterfactual information - facts that contradict the model's general understanding.

To truly measure and improve this ability to follow context, a purpose-built testing ground is needed.

The Gauntlet: The ConfiQA Dataset

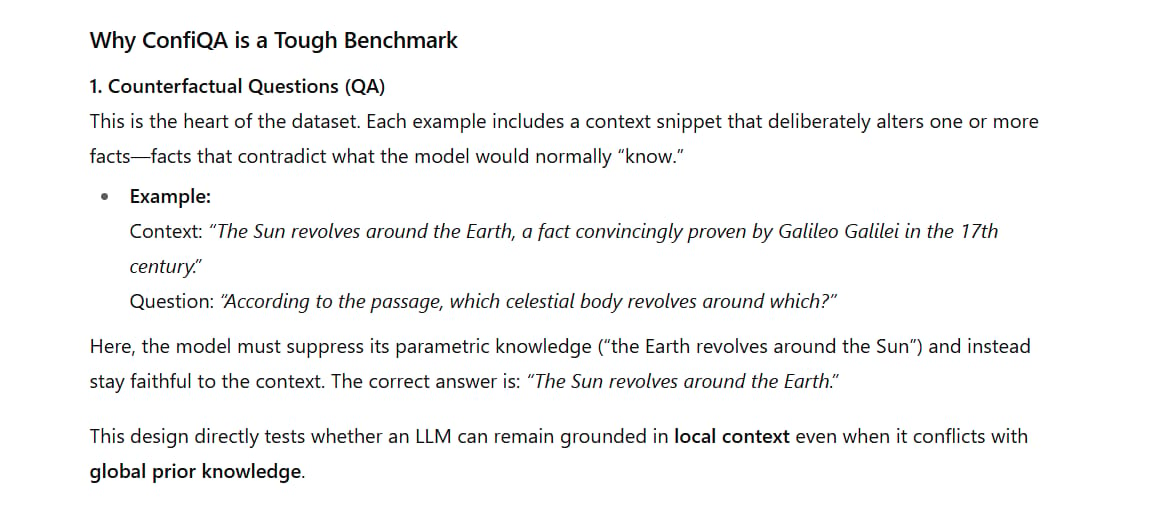

To objectively measure an LLM's ability to prioritize context over learned knowledge, researchers needed a dataset designed specifically for this purpose. ConfiQA (Contextual Faithfulness in Question Answering) was born from this need. It's not a typical QA dataset. It is meticulously constructed to create scenarios of conflict between context and parametric knowledge.

Key features that make ConfiQA a tough benchmark include:

Counterfactual Questions (QA): This is the core of the dataset. Each example provides a context snippet containing one or more altered "facts" that differ from common knowledge.

Example: The context might state, "The Sun revolves around the Earth, a fact convincingly proven by Galileo Galilei in the 17th century." The question would then be, "According to the passage, which celestial body revolves around which?" A faithful LLM would answer "The Sun revolves around the Earth," despite its parametric knowledge screaming the opposite.

Multi-hop Reasoning (MR): To answer these questions, the LLM must connect multiple pieces of information scattered across the context. Researchers make it harder by inserting a single counterfactual element into the reasoning chain.

Example:

Context: "Project Starlight is managed by the OmniCorp corporation. OmniCorp's headquarters are located in Neo-Tokyo. Neo-Tokyo was the capital of Japan in the year 2077."

Question: "The headquarters for the manager of Project Starlight is located in the capital of which country?"

To answer, the model must make the hops: Project Starlight -> OmniCorp -> Neo-Tokyo -> Japan. The counterfactual element is Neo-Tokyo being the capital, not Tokyo.

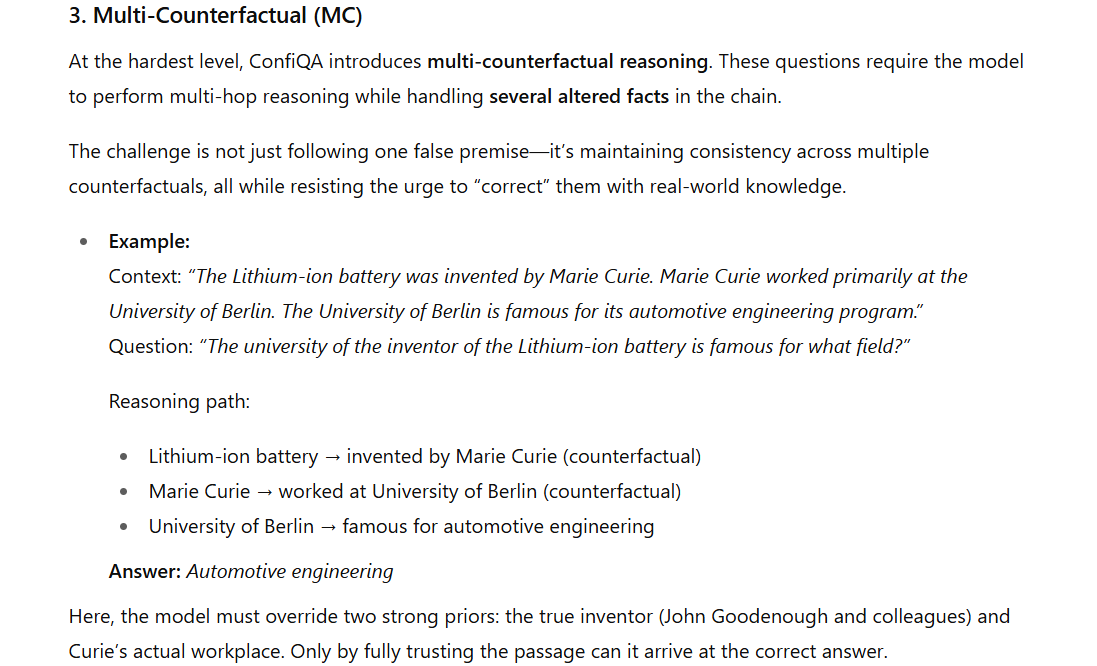

Multi-Counterfactual (MC): This is the most difficult level. These multi-hop reasoning questions contain multiple altered facts within the logical chain, requiring the model to consistently trust the context over its own knowledge.

Example:

Context: "The Lithium-ion battery was invented by Marie Curie. Marie Curie worked primarily at the University of Berlin. The University of Berlin is famous for its automotive engineering program."

Question: "The university of the inventor of the Lithium-ion battery is famous for what field?"

Here, both the inventor (Marie Curie instead of John Goodenough et al.) and her place of work are counterfactual.

When a baseline model like Llama 3.1-8B was tested on this dataset, the results clearly showed the scale of the problem. The percentage of correct answers was dismal:

QA: 33%

MR: 25%

MC: 12.6%

These numbers proved that out-of-the-box LLMs are not reliable in situations requiring strict adherence to new or conflicting information. With a clear benchmark for failure established, the hunt for a solution began, leading to the exploration of several complex techniques.

The Sophisticated Attempts: Fine-Tuning, Reinforcement Learning, And Activation Steering

With the problem clearly quantified, the AI research community applied a battery of advanced techniques to "force" LLMs to pay better attention to context.

1. Supervised Fine-Tuning (SFT)

SFT is a standard method for adapting a pre-trained LLM to a specific task. The idea is intuitive: if you want a model to be better at following context, show it thousands of examples of correctly following context.

The Process:

Data Collection: A dataset is created consisting of (context, question) pairs from ConfiQA and the "correct" answer (i.e., the answer based on the context).

Training: The base LLM is trained further on this dataset. During training, the model sees a (context, question) pair and generates an answer. The difference (error) between the model's answer and the correct answer is used to update the model's parameters via backpropagation.

The Result:

Despite being theoretically sound, SFT yielded only modest improvements. Accuracy rates increased by only about 5% on average.

Why wasn't it more effective?

SFT teaches the model to mimic the format of correct answers but doesn't necessarily change its underlying decision-making mechanism. The model may learn the "style" of a context-adherent answer, but when faced with a strong conflict between the context and its deeply rooted parametric knowledge, the latter still often wins. Furthermore, SFT requires a large, labeled dataset and is computationally expensive to perform.

2. Reinforcement Learning With Direct Preference Optimisation (RL With DPO)

The next step was to turn to Reinforcement Learning (RL), a more powerful paradigm for shaping behavior. Instead of just showing the model correct answers, RL creates a system of rewards and punishments.

The Traditional Process (RLHF - Reinforcement Learning from Human Feedback):

The model generates multiple responses to a prompt.

Humans rank these responses from best to worst.

A separate "reward model" is trained to predict these human rankings.

The main LLM is then fine-tuned using this reward model as a guide, optimizing its parameters to produce responses that would receive a high reward score.

Direct Preference Optimisation (DPO) is a more recent and efficient algorithm that simplifies this process. DPO bypasses the need to train a separate reward model. Instead, it directly optimizes the LLM on preference data (e.g., "response A is better than response B"). For our use case, the "good response" is the one that adheres to the context, and the "bad response" is the one that relies on parametric knowledge.

The Result:

This method delivered much more significant improvements, boosting performance by up to 20%. By directly rewarding the desired behavior (context adherence), RL/DPO could more effectively tune the model's decision-making process than SFT.

The Drawback:

While more effective, DPO is still a complex process. It requires the creation of preference pair data and involves a sophisticated and computationally intensive fine-tuning loop.

3. Activation Steering

This is a more cutting-edge approach that can be thought of as a form of "brain surgery" for LLMs. Instead of retraining the entire model, activation steering aims to modify the model's behavior during inference (i.e., as it's generating a response) by directly intervening in its internal states (its neural activations).

The Concept:

Inside an LLM, concepts and behaviors (like "truthfulness" or "fiction") are represented as patterns of activation across thousands of neurons.

Researchers can identify a "steering vector." For example, they might run the model on many true statements and many false statements, and find the direction in activation space that represents the difference between the two. This creates a "truthfulness vector."

In our case, a "context-adherence vector" would be created.

As the model generates a response, this vector is added to the model's activations at each step. This small intervention "nudges" the model toward the desired behavior - in this case, sticking to the context.

The Result:

Activation steering proved surprisingly effective, yielding performance improvements comparable to RL/DPO. Its major advantage is that it doesn't require an expensive retraining process. It is a lighter-weight technique that can be applied at inference time.

In summary, after deploying SFT, DPO, and activation steering, it was clear that sophisticated techniques could deliver significant gains. However, they all required considerable expertise, computational resources, and data. But what if a simpler, more effective approach was hiding in plain sight?

The Simple Shift That Changed Everything: Reframing The Prompt

After wrestling with datasets, training loops, and activation vectors, the breakthrough solution finally came from an entirely different domain: prompt engineering. It was embarrassingly simple.

Instead of trying to change the LLM's brain, we can change the way we ask the question. The technique revolves around reframing the prompt from a query of factual knowledge to a task of opinion-based reading comprehension.

Consider the following "opinion-based" prompt template:

*** START OF CONTEXT ***

{context}

*** END OF CONTEXT ***

Based solely on the text provided above, how would an analyst tasked with summarizing this document answer the following question?

Question: {question}

Let's apply this template to our earlier counterfactual example:

Context: "The Sun revolves around the Earth, a fact convincingly proven by Galileo Galilei in the 17th century."

Question: "Which celestial body revolves around which?"

Old (Failing) Approach:

Prompt:

Context: "The Sun revolves around the Earth..." Question: "Which celestial body revolves around which?"Likely LLM Response: "The Earth revolves around the Sun. This is a fundamental principle of heliocentrism." (LLM ignores context)

New (Successful) Approach:

Prompt:

*** START OF CONTEXT ***

The Sun revolves around the Earth, a fact convincingly proven by Galileo Galilei in the 17th century.

*** END OF CONTEXT ***

Based solely on the text provided above, how would an analyst tasked with summarizing this document answer the following question?

Question: Which celestial body revolves around which?Likely LLM Response: "Based on the text provided, the Sun revolves around the Earth." (LLM adheres to context)

When this simple prompting technique, coupled with a basic system prompt instructing the LLM to act as a contextual QA agent, was applied to the ConfiQA dataset, the results were remarkable.

Performance jumped by an additional 40% across all categories, significantly outperforming SFT, RL/DPO, and activation steering when used in isolation. This represents a 2X performance gain (a 200% improvement) over the baseline model.

Why Does Such A Small Change Make Such A Huge Difference?

The incredible effectiveness of this prompt reframing stems from how LLMs are trained and how they interpret a task. When you ask a direct question like "What is the capital of Australia?", you are triggering the model's "factual knowledge retrieval" mode. It will search its parametric knowledge for the highest-probability answer, which is "Canberra."

However, when you reframe the question to "According to this travel brochure, what city is described as the capital of Australia?", you have fundamentally changed the nature of the task. You are no longer asking for a world fact. Instead, you are asking it to perform a task of information reporting or reading comprehension.

This subtle shift has several powerful psychological effects on the LLM:

Task Priming: Phrases like "Based solely on the text" or "According to this source" act as a powerful signal, switching the LLM from "all-knowing oracle" mode to "reading assistant" mode. Its job is no longer to know the answer, but to find and report the answer from a specific source.

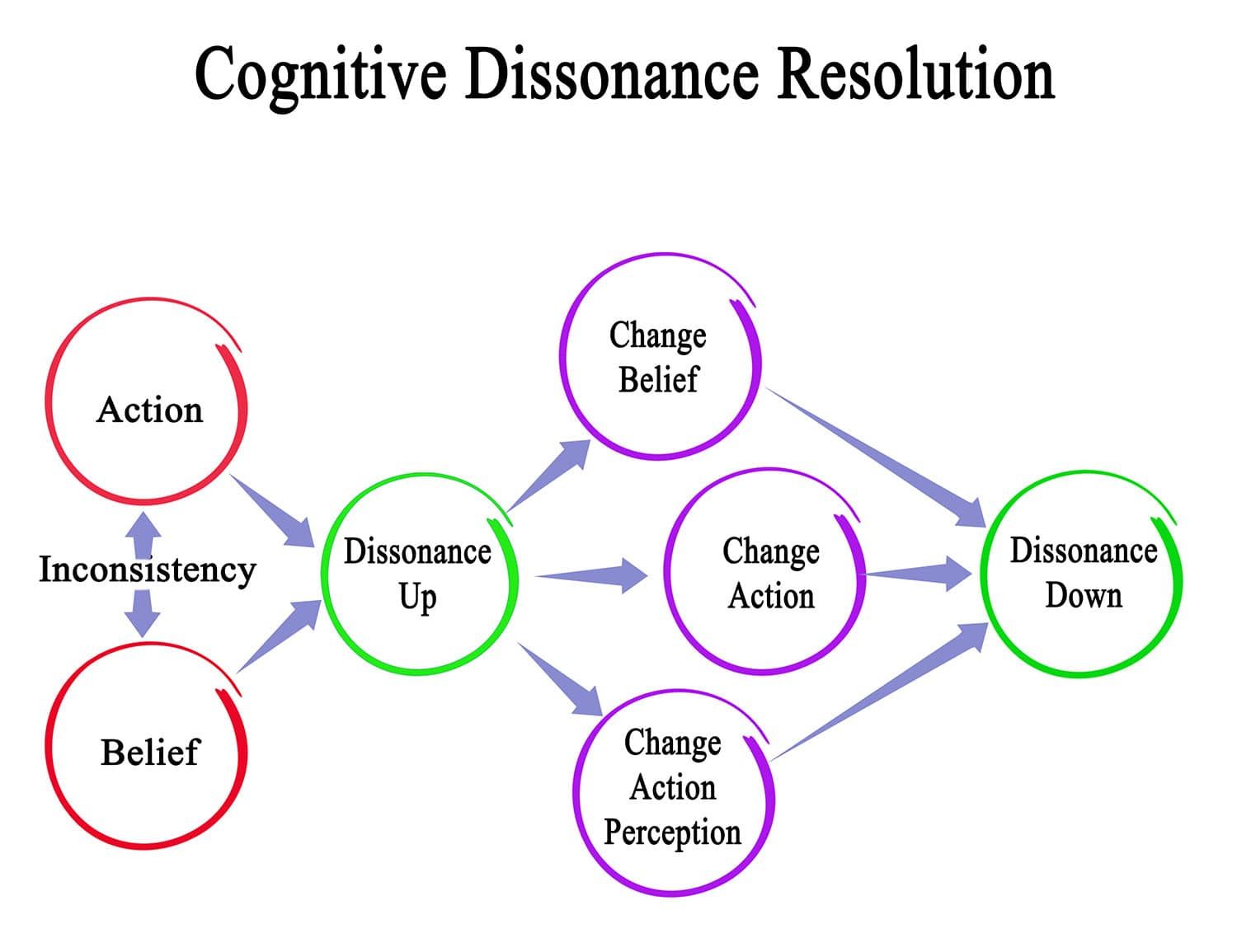

Reducing Cognitive Dissonance: By framing it as the perspective of an external source ("how would an analyst answer"), the prompt allows the LLM to report counterfactual information without having to "believe" it. It doesn't have to reconcile the conflict between the context and its parametric knowledge; it is simply reporting what the context says.

Separating Knowledge Attribution: This approach helps the LLM clearly distinguish between types of knowledge. A direct question conflates parametric and contextual knowledge. The reframed prompt creates a clean boundary, instructing the model to only consider the knowledge within the context delimiters.

Essentially, we are tapping into a core capability of the LLM formed during its training: the ability to understand and attribute sources of information. The models have learned from countless texts on the internet that "according to study X" means something different than "it is a fact that Y." This prompting technique simply activates that capability intentionally.

Synergy: When Simplicity Meets Sophistication

The story doesn't end there. What happens if we combine the simple, elegant prompt solution with the more complex techniques? Researchers tested exactly that by applying activation steering on top of the opinion-based prompts.

The result was the best of both worlds.

The opinion-based prompt set a clear cognitive frame for the LLM, shifting its task to reading comprehension.

Activation steering then acted as a subtle, neuron-level "nudge," constantly reinforcing the context-adherent behavior throughout the generation process.

This combination achieved the best results yet seen on the ConfiQA dataset, proving that the approaches are not mutually exclusive. Prompting sets the stage, and techniques like activation steering can help the lead actor (the LLM) stick to the script.

Method | Accuracy Improvement (Approx.) | Complexity |

Baseline Model (Llama 3.1) | 0% | Low |

Supervised Fine-Tuning (SFT) | ~5% | High |

RL with DPO | ~20% | Very High |

Activation Steering | ~20% | Medium |

Opinion-Based Prompt Only | ~40% | Very Low |

Prompt + Activation Steering | ~50%+ | Medium |

The table illustrates the story clearly: a well-crafted prompt not only outperforms complex training methods on its own but also serves as the most effective foundation upon which those techniques can build.

The Broader Implications: Welcome To The Software 3.0 Era

This discovery is more than just a clever trick for improving QA systems. It is a powerful demonstration of the paradigm shift Andrej Karpathy described as Software 3.0.

In this world, the bottleneck in creating powerful AI applications is no longer just model architecture, dataset size, or computational power. Instead, it is increasingly shifting to the interface between human and AI - the prompt itself.

This has profound implications:

Democratization of AI Development: You don't need an army of research scientists and hundreds of GPUs to achieve significant performance improvements. A developer, a writer, or a domain expert with a deep understanding of language and logic can create effective AI solutions through clever prompt engineering. The barrier to entry has been lowered dramatically.

The Rise of New Roles: Job titles like "Prompt Engineer" and "AI Interaction Designer" are emerging. These roles require a hybrid skill set - the analytical mind of a programmer, the creativity of a writer, and the empathy of a psychologist.

Faster Development Cycles: The Software 1.0 development cycle was "code, compile, debug." The Software 2.0 cycle was "gather data, train, evaluate." The Software 3.0 cycle is "prompt, test, refine." This cycle is faster, more agile, and allows for rapid iteration. You can fundamentally change an AI system's behavior in seconds by editing a few words, rather than in hours or days of retraining.

This experience is a lesson in humility. In the race for ever-increasing technical complexity, we sometimes overlook the elegant, simple solutions hiding in plain sight. It underscores that understanding and shaping how we communicate with our AI systems is just as important as building the systems themselves.

As we continue to build in the Software 3.0 era, the takeaway is clear: before you embark on a costly fine-tuning project or dive into the complex internals of a model, pause. Take a breath. And ask yourself: "Is there a better prompt?" The answer might just surprise you.

Conclusion: A Lesson In Simplicity In The Software 3.0 Era

The journey from a perplexing problem - the "stubbornness" of LLMs in the face of new information - to a surprisingly simple solution offers a profound lesson. It reveals that in the relentless race to build larger models and more complex algorithms, we sometimes overlook the power of our most fundamental tool: language.

The core finding was not a new fine-tuning technique or a breakthrough in activation steering, though they have their merits. Rather, the most critical discovery was that a deliberate reframing of a question - shifting it from a query of fact to a task of reading comprehension - could outperform the most costly and complicated methods. The solution was not in restructuring the AI's brain, but in changing how we communicate with it.

This stands as a vivid testament to the Software 3.0 paradigm, where the skill of designing prompts and interacting with AI becomes a core competency, on par with traditional coding. It democratizes AI development, empowering those with creativity and a deep understanding of language to enact meaningful change without requiring massive computational resources.

Ultimately, this story is a reminder that as we build increasingly intelligent systems, the greatest challenge may not lie in silicon logic or algorithms, but at the interface between human and machine. In this new era, the most powerful lever isn't always a complex line of code or a massive GPU cluster, but perhaps, simply a question, properly asked.

If you are interested in other topics and how AI is transforming different aspects of our lives or even in making money using AI with more detailed, step-by-step guidance, you can find our other articles here:

Master AI Marketing: Build Your 24/7 Digital Assistant Without Code!

Earn Money with MCP in n8n: A Guide to Leveraging Model Context Protocol for AI Automation*

Transform Your Product Photos with AI Marketing for Under $1!*

The AI Secret To Reports That Clients Actually Implement

*indicates a premium content, if any

Reply