AI Fire

🔥 Veo 3.1 vs. Sora 2: The AI Video Battle 2025

Breakdown of Sora 2 and Veo 3.1 for real-world creators: how each AI video generator works, where it shines, and which workflow fits your style and budget.

You’ve seen them, right?

Those AI video clips that look... impossible. A cinematic drone shot over a lost city, or a hyper-realistic character that you swear was shot with a real camera.

This is the next big wave, and it's happening fast.

But let's be real. It's confusing. You hear names like Sora 2, Veo 3.1... it's a full-on AI video battle, and everyone's claiming to be the best. 🥊

So, what's the real deal? Which one actually matters for you as a creator?

I've spent hours digging through all the technical demos, analyzing every single clip, and cutting through the marketing hype. I can confidently say this technology is about to change everything for us. What ChatGPT did for writing, these tools are about to do for video.

I’m really excited to share this lesson with you. This is actually one of the newest modules we’ve just added to the AI Mastery Course, where we are constantly testing the latest tools so you don’t have to.

In this lesson, I'm going to break it all down. We're putting the two heavyweights head-to-head: Google's Veo 3.1 and OpenAI's Sora 2.

I'll walk you through what each one is, show you their real strengths (not just what they advertise), and explain which one you should be watching for your specific creative style.

I. Sora 2 vs. Veo 3.1: What are they?

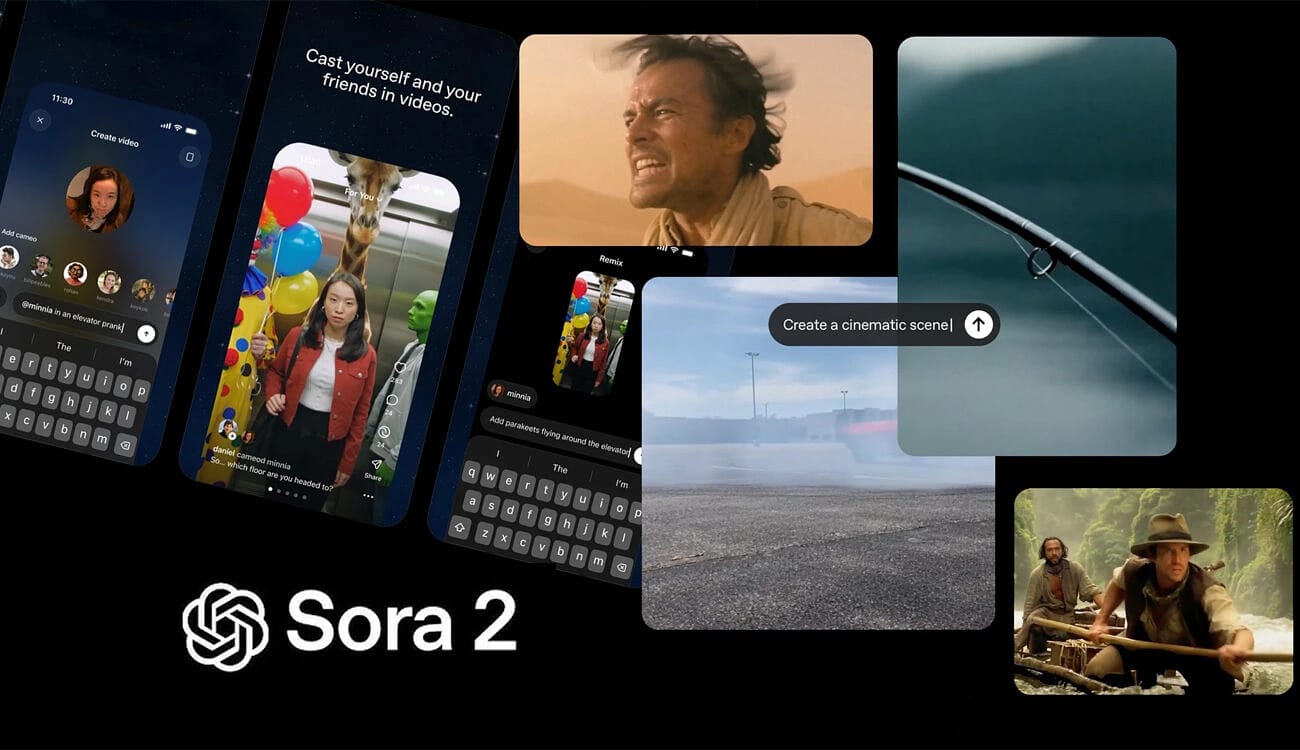

1. Sora 2: The "World Simulator"

Sora 2 from OpenAI is the one that basically broke the internet and made everyone realize that AI video wasn't a joke anymore.

The key phrase to remember for Sora 2 is world simulator.

OpenAI didn't just train this model to make pretty videos. They trained it to understand the physics of the real world. It learned how light bounces, how water splashes, and how a person's hair should move in the wind.

The first version of Sora was amazing, but you could still see the AI weirdness if you looked closely, like a character's face suddenly changing or a hand melting. Sora 2 is the next-generation version, and its entire mission is to fix that.

Here’s what makes Sora 2 special:

Rock-Solid Consistency: This is its superpower. You can create a video of a character walking through a forest, and their face, clothes, and backpack will stay the same.

It Understands Physics: It’s much better at simulating reality. If you ask for a "glass shattering," it understands that the glass should break into pieces and not just... disappear.

Video-to-Video Editing: This is the brand-new, game-changing part. Sora 2 isn't just about text-to-video. It introduces interactive editing. You'll be able to upload an existing video, "mask" (or select) an object, and just type, "Change this blue car to a red truck," and it will do it.

2. Veo 3.1: The "Cinematic Director"

This is Google's direct answer to Sora, and trust me, they are playing to win.

If Sora is the "World Simulator," then Veo 3.1 is the "Cinematic Director."

Google built this tool for creators. They know we don't just want a "random video"; we want artistic control. I've been all over Google's AI and DeepMind blogs, and it's clear they leveraged their massive power (hello, YouTube and Gemini) to focus on one thing: control.

Here’s what makes Veo 3.1 a total beast:

Insane Camera Control: This is its killer feature, hands down. You can use natural language to direct the camera. You can ask for a "sweeping drone shot of a coastline," "a fast-paced tracking shot following a runner," or "a slow-motion close-up." You're not just a prompter; you're the director.

Stunning 4K Output: Google is aiming for the big screen. Veo 3.1 is designed to generate clips in ultra-high-definition 4K.

Long-Form Storytelling: Because it's powered by Google's best Gemini models, it's a beast at understanding long, nuanced, multi-part prompts. It can create much longer videos (well over 60 seconds) that tell a coherent story with multiple scenes, all while keeping the same characters and style.

II. Getting started with Sora 2

1. How to use Sora 2

Before we build, you need to get access. Here's the deal:

Access: It's still in a closed-ish beta. You log in with your normal ChatGPT account, but you need an invite code. (My advice? Ask around in AI communities or check video comments, where people are sharing them. Never pay for a code; it's a great way to get scammed or banned!)

Region: If you're outside the United States, you'll almost certainly need a good VPN set to a US location to sign up and use the app.

In the app, you've got two main options:

The Free Plan: This is surprisingly generous. You get about 100 free generations every 24 hours, which is more than enough to learn.

The Pro Plan: This is about $200/month. This is what you'll need for real work. It unlocks 1080p High quality and lets you generate longer 15-second clips.

Step 1: Download the App

Go to the App Store or Google Play and download Sora to your phone.

Step 2: Log In & Burn Your Invite

The first time you open the app, it's two simple screens:

Log In: Use your normal OpenAI (ChatGPT) account. If you don't have one, you can make one right there.

Enter Your Invite Code: This is the magic password. Type it in, and you're in the club.

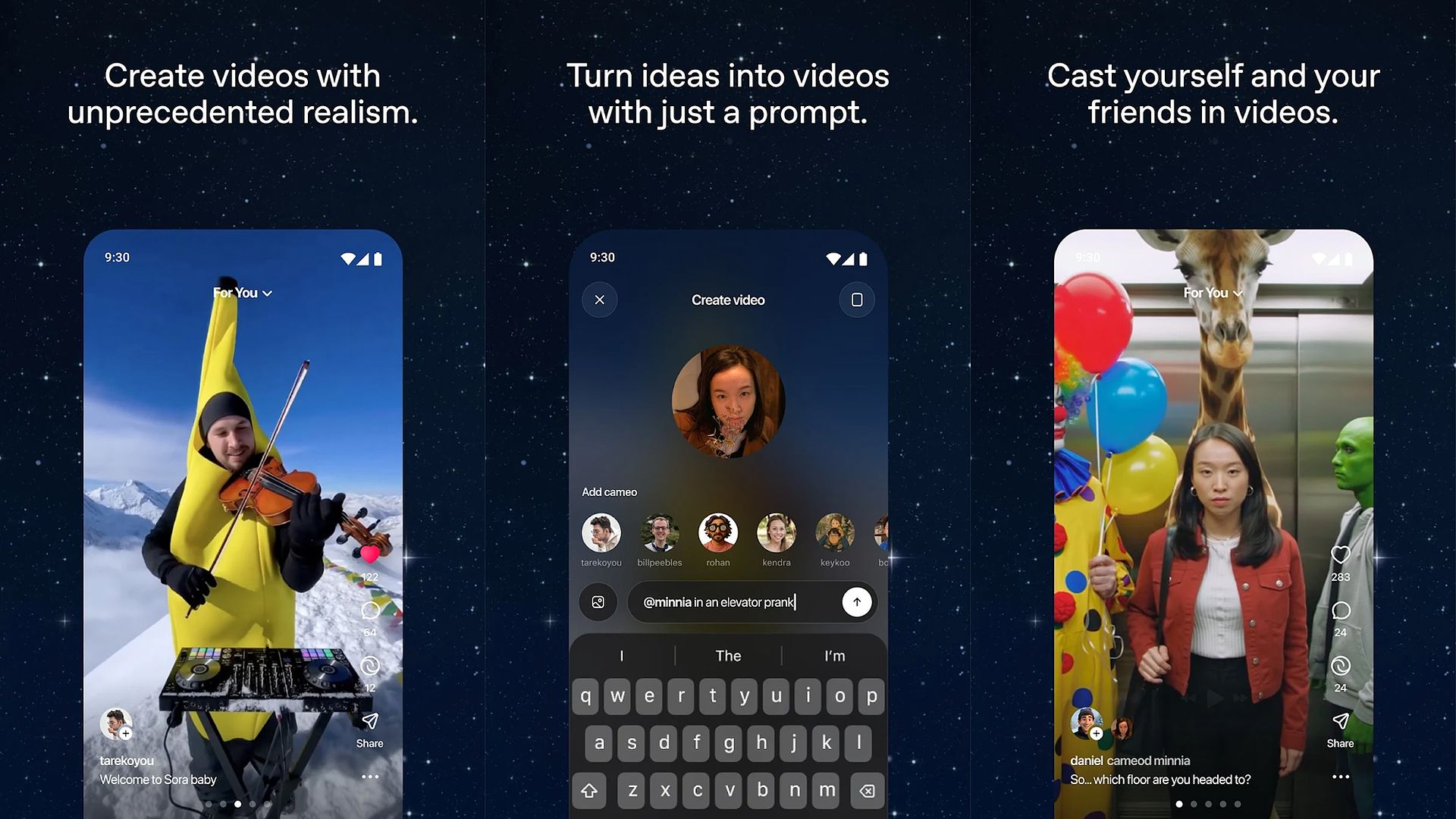

Step 3: The Cameo Verification (This is the Most Important Step)

Okay, pay attention here. This is not like other apps.

Sora 2 will ask for Camera and Microphone access. You must allow this.

Why? It's for the Cameo feature. The app will then ask you to do a Portrait Verification.

You'll have to record a 3-second video of your face and say a short phrase (like "Hello Sora").

This creates your digital avatar, or your Cameo. It's what lets you put yourself (or your character) into your videos later. This is one of Sora 2's most powerful features, so don't skip it.

Step 4: Learn the Interface (aka "The TikTok Clone")

The home screen will look exactly like TikTok’s. It's a vertical feed of videos. You swipe up to browse what other people are making.

❗TIP 1 FOR YOU: Don't just swipe up. Swipe horizontally. This is a hidden feature. It shows you 3 different video variations that were all generated from the exact same prompt. It's the fastest way to learn what a good prompt looks like.

At the bottom, you've got three simple tabs:

For You: The main feed.

Search: Find videos by prompt or style.

Create (The "+" button): This is where you'll live.

Step 5: Create Your First Video

Tap the "+" (Create) button and you'll see your main prompt bar. Here, you can type a text prompt, but more importantly, you can upload a reference image. This is key for product ads (upload your bottle) or real estate (upload your room photo).

Before you type, look at your settings:

Aspect Ratio: Choose 9:16 (for TikTok/Reels), 1:1 (square), or 16:9 (for YouTube/Ads).

Style Presets: You can pick a vibe right away, like "Stop Motion" or "Film Noir."

Duration: (e.g., 8, 12, or 15 seconds)

Quality: (Standard or High - 1080p, which is a Pro feature)

❗TIP 2 FOR YOU: Tap the "star" icon to save your favorite combo (e.g., 9:16 + Film Noir) as your default. It saves you a click every single time.

Now type a simple prompt to make sure it's all working, then hit that "+" (Create) button. Your video will start rendering, and you can find it in the "Drafts" section under your profile. I tried "A cat playing with a yarn ball on a couch, cartoon style." It took about 20 seconds for a 10-second clip. You'll see it's incredibly fast.

2. Other Options I Want to Tell You

The real power is in the API.

Everyone knows these models are expensive, but how much is a single second of cinematic AI actually worth? I’ve gone through all the API documentation to give you the real breakdown of the cost and, more importantly, how you can access them right now.

For API, you pay per second generated. Those high-resolution, long-form videos add up fast.

But the official OpenAI API is crazy expensive (like $0.10 a second). We're not doing that.

I'm going to show you how to use third-party platforms to access the exact same Sora 2 engine for a fraction of the price (we're talking about 6x cheaper). Plus, you get more control and no watermarks.

I'll walk you through two of the best platforms for this: Kie.ai (my top pick for price) and Fal.ai (a great all-around aggregator).

The Good: You often get unlimited generations for a flat subscription, the clips are up to 12 seconds long, and... no Sora watermark.

The Bad: The generation times are way slower, and you do not get the Cameo feature, which is one of Sora's most powerful tools.

3. How to Use Sora 2 with Kie.ai

This is my #1 recommendation because the price is just unbeatable. While OpenAI and Fal.ai charge $0.10/second, Kie.ai gets you the same video for $0.015 (1.5 cents) per second.
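To see what those per-second rates mean for an actual clip, here is a quick back-of-the-envelope calculator in Python. It only uses the two rates quoted above ($0.10/second for OpenAI and Fal.ai, $0.015/second for Kie.ai); prices change often, so treat these numbers as assumptions and check each platform's pricing page before budgeting.

```python
# Per-second rates quoted in this article (assumptions: verify current pricing).
RATES_USD_PER_SECOND = {
    "OpenAI API": 0.10,
    "Fal.ai": 0.10,
    "Kie.ai": 0.015,
}


def clip_cost(seconds: float, rate_per_second: float) -> float:
    """Cost in USD of one clip that is billed per generated second."""
    return seconds * rate_per_second


def compare(seconds: float) -> dict:
    """Cost of one clip of the given length on each platform, rounded to cents."""
    return {name: round(clip_cost(seconds, rate), 2)
            for name, rate in RATES_USD_PER_SECOND.items()}


if __name__ == "__main__":
    for name, cost in compare(10).items():
        print(f"{name}: ${cost:.2f} for a 10-second clip")
    ratio = RATES_USD_PER_SECOND["OpenAI API"] / RATES_USD_PER_SECOND["Kie.ai"]
    print(f"Kie.ai is about {ratio:.1f}x cheaper per second")
```

For a 10-second clip that works out to $1.00 versus $0.15, which is where the "about 6x cheaper" figure comes from (0.10 / 0.015 ≈ 6.7).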

Here's how to get set up.

Step 1: Get Your Account

Go to Kie.ai and sign up for an account.

If you want to automate with other tools like n8n, here are the additional steps to take once you're logged in:

Once you're in, find the "Billing" section on your dashboard. On the free tier, you get 80 credits when you first sign up on the web.

Add credits. Honestly, just add $5. At their prices, $5 will cover dozens of test videos and will last you a long time.

Now, look for the "API Key" section in the sidebar. Generate a new key.

SAVE THIS KEY. Copy it and put it somewhere safe (like a password manager). This is your secret key to the whole platform.

Use that key in your next n8n automation. Here's the detailed guide: 🎬 Sora 2 Is Secretly 6x Cheaper (Using This n8n Hack)
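If you go the automation route, a common habit is to keep the key out of your scripts entirely and read it from an environment variable instead. Here is a minimal Python sketch; the variable name `KIE_API_KEY` is just an example I picked, not something Kie.ai or n8n requires.

```python
import os


def load_api_key(var_name: str = "KIE_API_KEY") -> str:
    """Read an API key from an environment variable instead of hard-coding it.

    KIE_API_KEY is a hypothetical name chosen for this example.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first.")
    return key


def masked(key: str) -> str:
    """Hide all but the last 4 characters, so the key is safe to print in logs."""
    return "*" * max(len(key) - 4, 0) + key[-4:]
```

That way the key lives in your password manager and your environment, never in a file you might share or commit by accident.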

Step 2: Find Your Model

On your Kie.ai dashboard, find the "Models Market." This is your new toybox. You'll see all the models you now have access to:

Google Veo 3.1

Sora 2 (standard)

Sora 2 Pro

Sora 2 Pro Storyboard (this is that insane multi-scene feature)

For simple use, let's just pick the standard Sora 2 model.

Step 3: Generate Your Video

Once you choose the model, you'll land on its dashboard. Type your prompt, set the Aspect Ratio and Frames options, and turn on Remove Watermark. Each video will cost you 30 credits.
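Quick budgeting math with the numbers from this section: 80 free credits at sign-up and 30 credits per standard Sora 2 video. A tiny Python helper, assuming those credit costs (they may change):

```python
def videos_affordable(credits: int, credits_per_video: int = 30) -> tuple:
    """Return (number of videos you can generate, credits left over)."""
    return divmod(credits, credits_per_video)


free_videos, leftover = videos_affordable(80)
print(f"80 free credits -> {free_videos} videos, {leftover} credits left")
```

So the free credits cover two test videos with 20 credits to spare; anything beyond that needs a top-up.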

III. Getting started with Veo 3.1

The most important thing to know is that Google has set up different doors depending on who you are. Here are your options: Gemini API (Paid Preview), Vertex AI (Paid Preview), the Gemini App (via Google AI Plans), and Flow (via Google AI Plans).

Let's walk through each one:

Gemini API: It's the most straightforward way to get an API key and start making calls to the Veo 3.1 model. This one is for: Solo developers, small teams, and creators who are comfortable with code.

Vertex AI: This is the supercharged version of the API. It's part of the Google Cloud platform, which means it comes with all the heavy-duty tools big companies need, like advanced security, team management, and billing controls. This one is for: Large businesses, enterprises, and production studios.

Gemini App (via Google AI Plans): Veo 3.1's power is built directly into the Gemini app. It's included in your monthly Google AI plan (like the Pro or Ultra tier). You get certain usage limits based on your plan, but you're not getting a separate bill for every second you generate.

Flow (via Google AI Plans): This is a dedicated creative interface (a front-end) built specifically for filmmakers and designers. Just like the Gemini App, this is also included with your Google AI plan.
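If you take the Gemini API route, here is a hedged Python sketch of what "making calls" looks like with Google's `google-genai` SDK (`pip install google-genai`). Video generation runs as a long-running operation: you submit the job, then poll until it finishes. The model ID `veo-3.1-generate-preview` and the config values are assumptions based on the preview naming, so confirm them in the current Gemini API docs before relying on them. The SDK import sits inside the function so the polling helper can be tried without an API key.

```python
import time

# Assumed model ID for the Veo 3.1 preview; confirm it in the Gemini API docs.
VEO_MODEL = "veo-3.1-generate-preview"


def wait_for_operation(client, operation, poll_seconds=10, sleep=time.sleep):
    """Poll a long-running video job until the API reports it is done."""
    while not operation.done:
        sleep(poll_seconds)
        operation = client.operations.get(operation)
    return operation


def generate_clip(prompt, out_path="veo_clip.mp4", negative_prompt="subtitles"):
    """Submit a text-to-video job and save the first generated clip to disk."""
    # Imported here so the helper above works even without the SDK installed.
    from google import genai
    from google.genai import types

    client = genai.Client()  # reads GEMINI_API_KEY from the environment
    operation = client.models.generate_videos(
        model=VEO_MODEL,
        prompt=prompt,
        config=types.GenerateVideosConfig(
            aspect_ratio="16:9",
            negative_prompt=negative_prompt,  # things you do NOT want in the clip
        ),
    )
    operation = wait_for_operation(client, operation)
    generated = operation.response.generated_videos[0]
    client.files.download(file=generated.video)  # fetch the video bytes
    generated.video.save(out_path)
    return out_path
```

Usage, with `GEMINI_API_KEY` set: `generate_clip("A slow drone shot over a misty coastline at sunrise, cinematic")`. Every call is billed, so test your prompt wording in the Gemini app first.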

For this guide, I’ll focus on the two methods most creators are using to make money from it: Gemini and Flow.

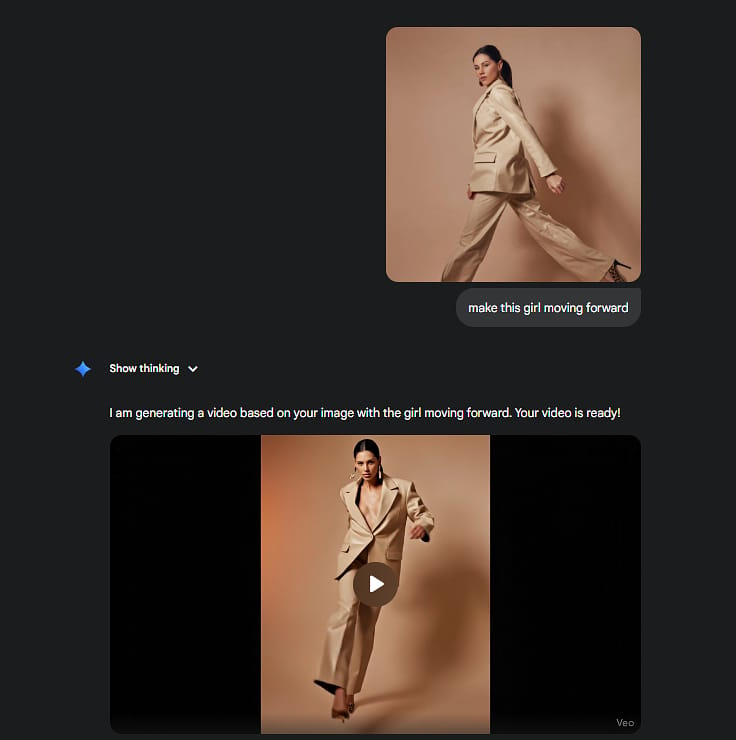

1. How to Use Veo 3.1 in Gemini App

In Gemini, Veo 3.1 comes in two model versions.

Now let’s get started:

Step 1: Select the option Create videos with Veo

Step 2: Generate your videos

Text-to-video generation: Type your prompt and wait for the results.

Image-to-video generation: Add your photo in the prompt bar, then write your prompt.

2. How to Use Veo 3.1 in Flow

This is where things get lit, guys!!

In Flow, you can utilize this model in many more ways! This is your pro studio. You use Flow when you're not just making one clip, but a full story with multiple scenes and consistent characters.

Step 1: Get Access

Just like Gemini, access to Flow is unlocked when you subscribe to Google AI Pro or Google AI Ultra.

Step 2: Understand the Workflow

Flow is a full-on AI video studio designed for storytellers.

It's a Storyboard: Instead of one prompt, you're building a sequence of scenes. After generating videos, click Add to scene → Scenebuilder to merge the separate clips into one long-form video. In the Scenebuilder, you can freely edit, remove, or replace your clips. When editing is done, click Download (the arrow icon).

It Has Asset Management: This is the killer feature. You can upload or generate "ingredients", like a specific product photo or, even better, an image of a character.

It Has Character Consistency: You can then tell Flow to reuse that same character in multiple different shots, something that's almost impossible in other models.

You can even reprompt a video to get a better result.

It Has Camera Control: Flow gives you more manual, granular control over camera angles and motion paths.

This is the tool you'd use to make a 30-second ad with 5 different shots, all featuring the same actor and the same product.

3. My Top Tips for Hacking Your Veo 3.1

I've gone through all the notes and tested these workflows. Here is my pro-guide to using Veo 3.1, saving credits, and actually getting the results you want.

3.1. Build "Scene by Scene." Don't try to make your whole movie in one prompt. I use ChatGPT to write the high-level story or concept first. Then, I break that story down into individual scenes, and I generate those scenes one by one in Veo.
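Here is a minimal Python sketch of that scene-by-scene idea. The trick it shows: keep one shared character description and one shared style, and repeat them in every scene's prompt, so each separately generated clip carries the details that must stay consistent. The story beats and the `build_scene_prompts` helper are illustrative only, not a Veo feature.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Scene:
    action: str   # what happens in this shot
    camera: str   # one simple camera movement


def build_scene_prompts(character: str, style: str, scenes: List[Scene]) -> List[str]:
    """Turn a story outline into one self-contained prompt per scene.

    Repeating the same character and style text in every prompt nudges the
    model toward consistency across clips generated one by one.
    """
    return [f"{character} {scene.action}. {scene.camera}. {style}." for scene in scenes]


outline = [
    Scene("walks into a rainy neon street", "Slow tracking shot from behind"),
    Scene("stops under a flickering sign and looks up", "Low-angle close-up"),
    Scene("opens an umbrella and walks away", "Wide static shot"),
]
prompts = build_scene_prompts(
    "A woman in a yellow raincoat", "Cinematic, moody blue lighting", outline
)
for number, prompt in enumerate(prompts, start=1):
    print(f"Scene {number}: {prompt}")
```

You then paste each prompt into Veo one at a time and stitch the clips together afterwards.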

3.2. Use a Good Prompt Formula. Here’s a great starting template:

[Your main scene]. [Add one simple camera movement]. [Add your cinematic visuals & lighting style].

You can also use a custom GPT built as a "Veo 3.1 Prompt Builder," but this manual formula gives you more control.

Use Negative Prompts: Save yourself the headache. Always add your negative prompts at the end to avoid re-generating. For example, I usually add "no subtitles" so my generated videos don't come out with subtitles baked in.
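If you generate a lot of clips, it helps to fill that formula programmatically, so every prompt has the same shape and your negative prompts always land at the end. A small Python sketch; the `veo_prompt` helper and the "No X." phrasing are my own convention, not an official Veo syntax.

```python
from typing import List, Optional


def veo_prompt(scene: str, camera: str, visuals: str,
               negatives: Optional[List[str]] = None) -> str:
    """Fill the template: [Main scene]. [Camera move]. [Visuals & lighting].

    Empty parts are skipped, and negative instructions are appended last.
    """
    parts = [p.strip().rstrip(".") for p in (scene, camera, visuals) if p.strip()]
    prompt = " ".join(f"{p}." for p in parts)
    for item in negatives or []:
        prompt += f" No {item}."
    return prompt


print(veo_prompt(
    "A chef flips a pancake in a busy diner kitchen",
    "Slow push-in",
    "Warm tungsten light, shallow depth of field",
    negatives=["subtitles", "on-screen text"],
))
```

This prints one line: the three formula parts as sentences, followed by "No subtitles. No on-screen text."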

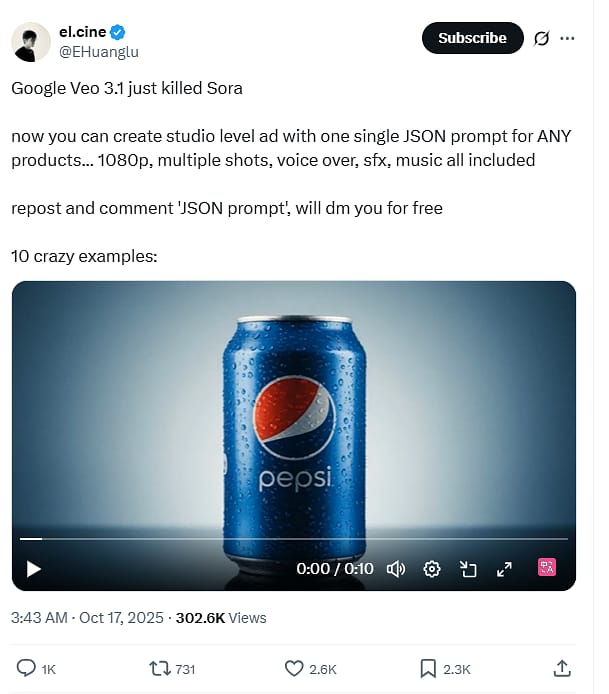

3.3. You can even generate videos with a JSON prompt

Don't let the name scare you. It's not code. It's just a hyper-organized list of instructions.

Think of it this way:

A Normal Prompt is like giving a new assistant one loose sentence: something cinematic, kinda moody, a guy in a red jacket, maybe a close-up.

The assistant has to guess what "cinematic" means, what "kinda moody" is, and if the "red jacket" is more important than the "close-up."

A JSON Prompt is like handing that assistant a technical spec sheet:

{
  "subject": "A man in a red jacket with a facial scar",
  "action": "Sitting by a crackling fire",
  "camera": "Slow dolly zoom-in, medium shot",
  "lighting": "Warm, high-contrast, moody, only light from fire",
  "style": "Cinematic, 35mm film grain, hyper-realistic"
}

You see the difference? You're not writing a story; you're filling out a form. The AI doesn't have to guess or interpret your creative writing. It just reads the labels and executes the command.

This is the single best way to get precise, repeatable control.
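If you build these spec sheets often, letting code write the JSON guarantees it is always valid (no missing commas or quotes) and makes it easy to change one field, say `camera`, while keeping everything else identical. A minimal Python sketch using the standard `json` module; the field names simply mirror the example above, and Veo receives the result as ordinary prompt text, so the labels are a structuring convention rather than a required schema.

```python
import json


def json_prompt(subject: str, action: str, camera: str,
                lighting: str, style: str) -> str:
    """Build a labeled, human-readable JSON prompt (field order is preserved)."""
    spec = {
        "subject": subject,
        "action": action,
        "camera": camera,
        "lighting": lighting,
        "style": style,
    }
    return json.dumps(spec, indent=2)


prompt = json_prompt(
    subject="A man in a red jacket with a facial scar",
    action="Sitting by a crackling fire",
    camera="Slow dolly zoom-in, medium shot",
    lighting="Warm, high-contrast, moody, only light from fire",
    style="Cinematic, 35mm film grain, hyper-realistic",
)
print(prompt)  # paste this text into Veo's prompt box
```

To test a different camera move, change only the `camera` argument and regenerate; everything else in the prompt stays word-for-word the same.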

This video was created using a JSON prompt:

If you need the prompt, click here

IV. Which One Is the Winner?

So who’s the winner in this AI video battle?

On the surface, both are the first AI models that truly nail high-quality video with synchronized audio. But I've been digging through all the specs, and I can tell you they are two completely different beasts, built for two completely different jobs.

Below is my review:

🎨 Sora 2:

Choose Sora 2 when your goal is unmatched realism.

Its Superpower is Physics: Sora 2 is a total physics nerd. It just understands how things are supposed to move. Cloth ripples correctly, things collide realistically, and action scenes feel right.

Perfect Audio Sync: This is its other big win. The lip-sync for dialogue is top-tier, and the ambient background sounds are perfectly matched to the scene.

🎬 Veo 3.1:

Choose Veo 3.1 when your goal is to tell a complete story.

Its Superpower is Consistency: Veo 3.1 is built for structured, multi-scene videos. It's the only tool that can reliably keep the same character, the same lighting, and the same story flow from one shot to the next.

It's an Editor: This is the key. Inside the Flow app, you have real editing power. You can:

Extend Scenes: Generate a clip and tell Veo 3.1 to "keep going," extending it to a minute or more.

Remove Objects: Literally tell it to "remove that car" or "erase that person," and it will rewrite the background. Sora 2 can do this too, but it makes far more errors, so Veo 3.1 still wins here.

So, Who's the Actual Winner?

🏆 You Should Choose Sora 2 if...you are an artist, a creative, or a perfectionist. Your goal is to create one single, jaw-dropping, cinematic shot. You prioritize perfect physics, flawless realism, and the best lip-sync in the game. You're willing to sacrifice editing control for that "wow" factor.

🏆 You Should Choose Veo 3.1 if...you are a filmmaker, marketer, or storyteller. Your goal is to build a complete narrative with multiple, consistent scenes. You prioritize workflow, character consistency, and editing tools (like object removal). You'd rather have a very good 3-scene story than one perfect 10-second clip.

We've officially hit the point where AI-generated content isn't just a cool tech demo; it's a full-blown category of viral content. I'm sure your feed is just like mine: absolutely flooded with clips that are either stunningly beautiful or just... weird.

And it all seems to be a two-horse race between Sora 2 and Veo 3.1.

But what I find fascinating isn't just that they're being used, but how. The community has already split them into different jobs. Here are the biggest trends I'm seeing.

Trend 1: The Ad & Cinematic Trailer

This is the trend I'm watching most closely. For the first time, we're seeing commercial-grade content.

I'm not talking about a simple product shot. I'm talking about full-blown, multi-scene ads and cinematic trailers. If you haven't seen Coca-Cola's annual Christmas advert, stop and watch it. It was made with Veo 3.1, and it has the storytelling, the multiple camera angles, and the vibe of a real blockbuster.

Personally, I think Veo 3.1 is winning in this one. Just look at some of the results here, and you can see why.

We've talked about what Veo 3.1 can do on its own. But what if I told you the real pro-level move isn't just using Veo 3.1? It's combining the models with other tools.

Right now, the single biggest challenge with AI video is control. How do you get your product, your logo, or your character to look exactly right, every single time?

Well, I'm about to show you my favorite workflow for this. It's a secret weapon combo that uses Nano Banana for perfect visual control and Veo 3.1 for its magic animation engine.

The result? You can create professional, branded video ads from scratch, with perfect product consistency.

Don't believe me? Just take a look at what this workflow case study can produce right here.

Yep. That's an AI-generated ad.

For the full workflow, check out our blog post: 💰 How To Make A Viral UGC Ad For $0.10 (Without A Camera)

Trend 2: Is this real or AI?

These are clips designed to make you stop scrolling and ask, "Wait... is that real?"

Sora 2 is absolutely dominating this trend, and it all comes down to its physics and organic feel.

You see it in two main ways:

AI chiropractic: I'm obsessed with this physical therapy video. It's the way the AI understands the hands interacting with the knee, the subtle muscle movements. It's that organic quality that Sora 2 just nails. It's still a little creepy, but it's hard to tell it's AI unless you're really looking for it.

Animal Interaction: This is the one going viral all over TikTok. These clips usually start with some shocking action, and maybe... dancing :). Beyond that, it's genuinely hard to tell whether they're AI or not.

I have to say, last year, people were still guessing whether it was real or cake. Now it is the era of “Is this real or AI” 😂

If you want to create your own viral video, don't miss this one: 🎬 The AI Content Strategy That Hits 1M+ Views

VI. Conclusion

So, there you have it. That's the complete lay of the land for the new world of AI video.

We've covered a ton of ground. You now know the real difference between these two: Sora 2 and Veo 3.1.

You have my full setup guide, from getting access with an invite code and VPN to the pro-hacks of using cheaper APIs like Kie.ai.

But if there's only one thing you take away from this entire lesson, let it be this:

There is no single winner. The magic isn't in the tool; it's in the workflow.

The best viral ads I'm seeing right now aren't made with a single tool. The pros are mixing, matching, and finding new ways to break these tools every single day.

You now have the same playbook. You know the strengths, the weaknesses, and all the secret tips.

Now, it's your turn. Go build something.
