- AI Fire

- Posts

- ⚡️ The AI Race Verdict: America's Grid Can't Power The Future

⚡️ The AI Race Verdict: America's Grid Can't Power The Future

A stunning report from AI experts shows how China's strategic energy surplus creates an insurmountable lead, while the U.S. power grid faces collapse.

In the global race for AI dominance, what do you believe is the most critical limiting factor? |

Table of Contents

Recently, a group of leading AI researchers from Silicon Valley returned from a tour of China's tech hubs with an alarming observation, one that should shake every boardroom from California to Washington D.C. It was summarized in a single, powerful sentence: "Everywhere we went, people treated the availability of energy as a given."

For anyone working in the American AI sector, the idea of treating energy as an infinite resource is almost unimaginable. But on the other side of the Pacific, it is a thoroughly solved problem. This simple statement exposes a stark and uncomfortable truth: the most important technology race of the 21st century might not be decided by complex algorithms or advanced microchips, but by something as fundamentally mundane as the electrical grid.

While America has been bogged down in debates over budget cuts, celebrating tax breaks, and mired in ideological battles, China has been quietly building the unshakable foundation for its future technological supremacy. The result is a dramatic contrast: China's AI developers are free to innovate without a second thought for the electricity bill, while their American counterparts must contend with building private power plants or face the threat of rolling blackouts. The race, it seems, was decided not on a digital playing field, but on a physical one that America has long neglected.

The Energy Chasm: A Tale Of Two Grids

The difference between American and Chinese energy infrastructure is not just one of scale, but of philosophy. The U.S. grid operates on an incredibly thin reserve margin, often around just 15%. This means the system is constantly running close to its limit. It’s like a restaurant that is always one customer away from running out of food. Every heatwave, every sudden spike in demand, can push the system to the brink of collapse, leading to emergency alerts and widespread power outages.

In contrast, China has adopted a strategy of "strategic abundance." They maintain an electricity generation capacity that is nearly 100% greater than their peak demand. This isn't waste; it's a strategic investment. This massive energy surplus ensures that when a new, power-hungry industry like AI emerges, their grid is not only unstrained but welcomes it as an opportunity to "soak up excess supply." AI data centers, which are a burden on the grid in the U.S., become a useful load-balancing solution in China.

To put the scale into perspective, energy analysts report that China's annual increase in electricity demand is greater than the total consumption of Germany. A few of its provinces alone generate as much power as the entire nation of India. They have achieved this through long-term planning, constructing thousands of kilometers of Ultra-High Voltage (UHV) transmission lines that efficiently carry power from massive hydro and solar farms in the remote west to the industrial and tech hubs of the east.

Meanwhile, in the United States, upgrading a single aging transmission line can take a decade due to regulatory hurdles, lawsuits, and local opposition. We not only lack generation capacity, but we also lack the ability to get the power to where it's needed most.

The AI Data Center Dilemma: Insatiable Demand Meets A Brittle Supply

The explosion of generative AI has created an unprecedented thirst for energy. Training a single large language model (LLM) like OpenAI's GPT-4 can consume as much electricity as hundreds of homes in a year. And that's just the training phase. The operational phase, or "inference," where billions of users ask questions and make requests, consumes even more power on a continuous basis.

McKinsey & Company calculates that the world will need to invest approximately $6.7 trillion in new data center infrastructure by 2030 to meet this demand. China is ready with a grid that can support its large share of that pie. In the U.S., we are witnessing a battle for energy resources between tech companies and ordinary citizens.

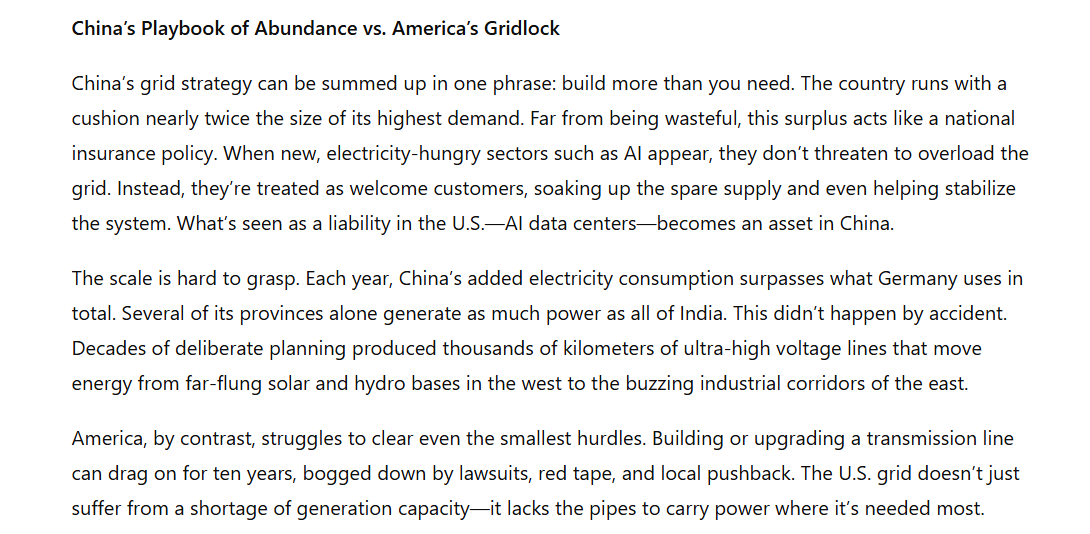

In Ohio, families are paying an average of $15 more per month on their electricity bills, partly because massive data centers in the region have consumed a huge amount of power, driving up wholesale prices.

In Virginia, home to "Data Center Alley," which handles a majority of the world's internet traffic, the utility company Dominion Energy had to announce a temporary halt to new data center connections, fearing the grid could not keep up.

In California and Texas, authorities frequently issue "Flex Alerts," asking residents to reduce electricity consumption during peak hours to avoid rolling blackouts, all while high-performance computing facilities continue to operate at full throttle.

The sad reality is that the American grid, designed for a bygone industrial era, is now buckling under the weight of a digital revolution it was never prepared to support.

Learn How to Make AI Work For You!

Transform your AI skills with the AI Fire Academy Premium Plan - FREE for 14 days! Gain instant access to 500+ AI workflows, advanced tutorials, exclusive case studies and unbeatable discounts. No risks, cancel anytime.

The Root Of The Problem: A Contrast In Philosophies Of Capital And Governance

The disparity in grid infrastructure is merely a symptom of a deeper malady, rooted in the fundamental differences between the two nations' economic and governance models.

The American Model: Short-Termism and Shareholder Primacy

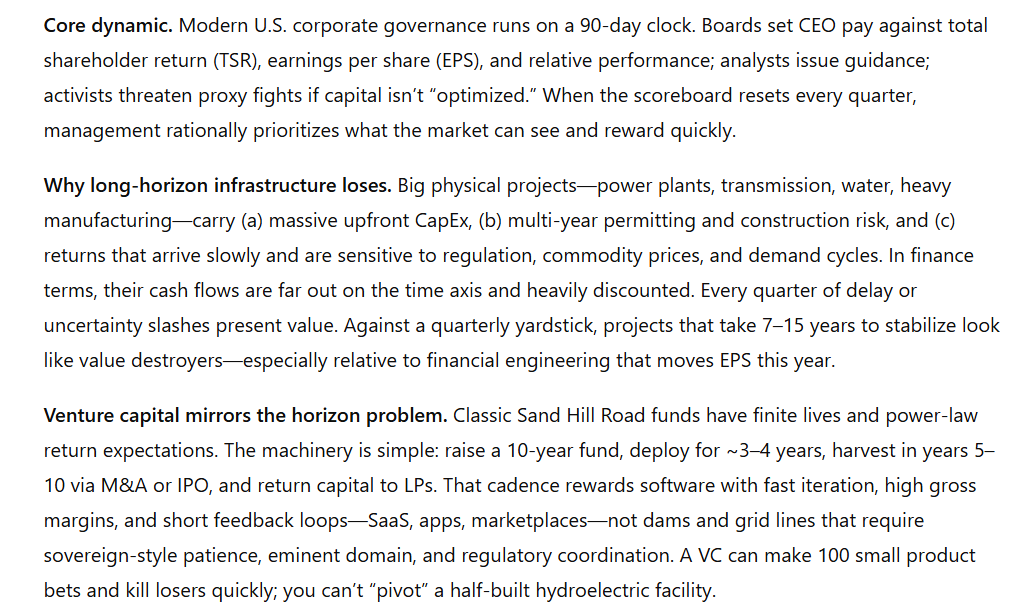

Modern American capitalism operates on the rhythm of the quarterly earnings report. CEOs and boards are obsessed with maximizing short-term profits to please shareholders and Wall Street. In this environment, long-term infrastructure projects like power plants and transmission lines are deeply unattractive. They require enormous upfront capital, take decades to build, and only begin to generate a return on investment over a very long horizon.

Silicon Valley's venture capital system operates on the same logic. It funnels billions into Software-as-a-Service (SaaS) companies and mobile apps with the hope of a 10x or 100x return within five to seven years. No VC fund has the patience to finance a hydroelectric dam.

The result is an "extraction economy." Instead of investing in R&D or capital expenditures (CapEx) to build long-term sustainability, corporations favor stock buybacks to artificially inflate share prices and pay out massive executive bonuses. They prefer to be emperors of a dumpster fire rather than builders of sustainable kingdoms. If they damage the national interest, they might receive a bailout.

The Chinese Model: State-Directed Capitalism And Strategic Patience

In contrast, China operates under a different model. Its government, through its Five-Year Plans, plays a directive role, channeling capital into sectors deemed strategically vital for the nation's future, long before the demand materializes. Massive state-owned enterprises (SOEs) like the State Grid Corporation of China operate not just for profit, but to fulfill a national mission.

They accept that some projects may fail or operate under capacity for years. This is considered a necessary cost to ensure that when the nation needs that capacity, it is ready and waiting. This is not ideology; it is basic planning. They understand that the power of their corporations and elites depends on the overall capability of the nation. In China, billionaires who severely damage the national interest can face dire consequences.

This difference explains why oil and gas lobbyists in the U.S. actively sabotage renewable energy development, even as solar and wind become cheaper than fossil fuels. They are protecting quarterly dividends while China is building the infrastructure of the next century.

The Ideological Quagmire: Pragmatism Vs. Partisan Polarization

Another significant barrier for the U.S. is the politicization of everything. Renewable energy is not viewed through a simple economic and strategic lens but has become a front in the culture wars. The debate over climate change still rages, and building a wind or solar farm is often seen more as a political statement than a sound business decision.

China is not entangled in this mess. They do not treat renewable energy as a moral crusade. Solar and wind simply make economic sense, helping them secure their energy independence and reduce reliance on imported fossil fuels. So, they build them at a scale and speed the world has never seen. When renewable projects can't keep pace with AI's growth, they readily fire up idle coal plants as a backup while continuing to build out more sustainable sources. Coal is viewed as outdated technology, not an incarnate evil.

This pragmatic approach allows them to focus on results instead of getting bogged down in ideological battles. Meanwhile, in the U.S., the permitting process for a major infrastructure project can be endless. The National Environmental Policy Act (NEPA), while well-intentioned, has become a tool for various interest groups to sue and delay projects for years, sometimes decades. China, with its centralized governance, can execute mega-projects like the Three Gorges Dam in the time it takes Americans just to complete the initial environmental impact statements.

The Practical Realities: How Energy Shapes AI Development

This energy disparity is not just a macroeconomic issue; it directly impacts the daily work of AI engineers and researchers.

Scenario 1: The American AI Developer (A Constrained Environment)

At an AI startup in Palo Alto, an engineer spends a significant portion of their time not on inventing new algorithms, but on optimizing code to be as energy-efficient as possible. Their compute budget is limited by electricity costs and access to GPU clusters. A failed model training run isn't just a waste of time; it's a massive electricity bill.

They might use a model like Claude to help them optimize their work. A typical prompt might look like this:

Detailed Optimization Prompt:

"You are an expert in deep learning model optimization. I am working on a text classification transformer model in PyTorch on NVIDIA A100 GPUs. The current code is suffering from high memory usage and computational cost. Please analyze the following code and propose specific changes to:

Reduce GPU memory footprint: Implement techniques like mixed-precision training using torch.cuda.amp and gradient checkpointing to reduce memory for storing intermediate activations.

Decrease floating-point operations (FLOPs): Suggest methods to replace dense self-attention layers with more efficient variants like sparse attention or linear attention, if appropriate for the task.

Optimize the data loading pipeline: Review the DataLoader to ensure num_workers and pin_memory are set optimally to prevent I/O bottlenecks and keep the GPU saturated.

Convert to a more efficient format: Suggest how to use TorchScript or ONNX Runtime to compile the model for faster, more energy-efficient inference after training.

Please provide modified code snippets and explain the reasoning behind each change."The Result:

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.utils.data import DataLoader, Dataset

from torch.cuda.amp import autocast, GradScaler

import torch.utils.checkpoint as checkpoint

from transformers import AutoTokenizer, AutoModel

import math

import numpy as np

# 1. MEMORY-EFFICIENT ATTENTION MECHANISMS

class LinearAttention(nn.Module):

"""

Linear attention mechanism with O(N) complexity instead of O(N²)

Reduces FLOPs significantly for long sequences

"""

def __init__(self, dim, heads=8, dim_head=64):

super().__init__()

inner_dim = dim_head * heads

self.heads = heads

self.dim_head = dim_head

self.scale = dim_head ** -0.5

self.to_qkv = nn.Linear(dim, inner_dim * 3, bias=False)

self.to_out = nn.Linear(inner_dim, dim)

def forward(self, x):

b, n, d = x.shape

h = self.heads

qkv = self.to_qkv(x).chunk(3, dim=-1)

q, k, v = map(lambda t: t.view(b, n, h, -1).transpose(1, 2), qkv)

# Apply ELU + 1 to ensure positive values for linear attention

q = F.elu(q) + 1

k = F.elu(k) + 1

# Linear attention: O(N) instead of O(N²)

context = torch.einsum('bhnd,bhne->bhde', k, v)

out = torch.einsum('bhnd,bhde->bhne', q, context)

out = out.transpose(1, 2).contiguous().view(b, n, -1)

return self.to_out(out)

class SparseAttention(nn.Module):

"""

Sparse attention with block-diagonal pattern

Reduces attention complexity while maintaining performance

"""

def __init__(self, dim, heads=8, dim_head=64, block_size=64):

super().__init__()

inner_dim = dim_head * heads

self.heads = heads

self.dim_head = dim_head

self.block_size = block_size

self.scale = dim_head ** -0.5

self.to_qkv = nn.Linear(dim, inner_dim * 3, bias=False)

self.to_out = nn.Linear(inner_dim, dim)

def forward(self, x):

b, n, d = x.shape

h = self.heads

qkv = self.to_qkv(x).chunk(3, dim=-1)

q, k, v = map(lambda t: t.view(b, n, h, -1).transpose(1, 2), qkv)

# Create block-diagonal attention mask

num_blocks = (n + self.block_size - 1) // self.block_size

# Pad sequence to multiple of block_size

pad_size = num_blocks * self.block_size - n

if pad_size > 0:

q = F.pad(q, (0, 0, 0, pad_size))

k = F.pad(k, (0, 0, 0, pad_size))

v = F.pad(v, (0, 0, 0, pad_size))

# Reshape for block attention

q = q.view(b, h, num_blocks, self.block_size, -1)

k = k.view(b, h, num_blocks, self.block_size, -1)

v = v.view(b, h, num_blocks, self.block_size, -1)

# Block attention

attn = torch.einsum('bhnqd,bhnkd->bhnqk', q, k) * self.scale

attn = F.softmax(attn, dim=-1)

out = torch.einsum('bhnqk,bhnkd->bhnqd', attn, v)

out = out.view(b, h, -1, self.dim_head).transpose(1, 2)

# Remove padding

if pad_size > 0:

out = out[:, :n]

out = out.contiguous().view(b, n, -1)

return self.to_out(out)

# 2. MEMORY-OPTIMIZED TRANSFORMER BLOCK

class OptimizedTransformerBlock(nn.Module):

"""

Transformer block with gradient checkpointing and efficient attention

"""

def __init__(self, dim, heads=8, mlp_ratio=4, attention_type='linear'):

super().__init__()

self.norm1 = nn.LayerNorm(dim)

self.norm2 = nn.LayerNorm(dim)

# Choose attention mechanism

if attention_type == 'linear':

self.attn = LinearAttention(dim, heads)

elif attention_type == 'sparse':

self.attn = SparseAttention(dim, heads)

else: # standard attention

self.attn = nn.MultiheadAttention(dim, heads, batch_first=True)

# MLP with reduced intermediate size option

mlp_dim = int(dim * mlp_ratio)

self.mlp = nn.Sequential(

nn.Linear(dim, mlp_dim),

nn.GELU(),

nn.Dropout(0.1),

nn.Linear(mlp_dim, dim),

nn.Dropout(0.1)

)

def forward(self, x):

# Use gradient checkpointing to save memory

return checkpoint.checkpoint(self._forward, x, use_reentrant=False)

def _forward(self, x):

# Pre-norm architecture for better training stability

attn_out = self.attn(self.norm1(x))

if isinstance(attn_out, tuple): # Handle MultiheadAttention output

attn_out = attn_out[0]

x = x + attn_out

x = x + self.mlp(self.norm2(x))

return x

# 3. OPTIMIZED TEXT CLASSIFIER

class OptimizedTextClassifier(nn.Module):

"""

Memory and compute efficient text classifier

"""

def __init__(self, vocab_size, num_classes, dim=512, depth=6, heads=8,

max_seq_len=512, attention_type='linear'):

super().__init__()

self.dim = dim

self.max_seq_len = max_seq_len

# Embedding layers

self.token_embedding = nn.Embedding(vocab_size, dim)

self.pos_embedding = nn.Embedding(max_seq_len, dim)

# Transformer blocks

self.blocks = nn.ModuleList([

OptimizedTransformerBlock(dim, heads, attention_type=attention_type)

for _ in range(depth)

])

# Classification head

self.ln_f = nn.LayerNorm(dim)

self.classifier = nn.Linear(dim, num_classes)

# Initialize weights

self.apply(self._init_weights)

def _init_weights(self, module):

if isinstance(module, (nn.Linear, nn.Embedding)):

module.weight.data.normal_(mean=0.0, std=0.02)

if isinstance(module, nn.Linear) and module.bias is not None:

module.bias.data.zero_()

elif isinstance(module, nn.LayerNorm):

module.bias.data.zero_()

module.weight.data.fill_(1.0)

def forward(self, input_ids, attention_mask=None):

seq_len = input_ids.size(1)

pos_ids = torch.arange(seq_len, device=input_ids.device).unsqueeze(0)

# Embeddings

x = self.token_embedding(input_ids) + self.pos_embedding(pos_ids)

# Apply attention mask if provided

if attention_mask is not None:

x = x * attention_mask.unsqueeze(-1)

# Transformer blocks

for block in self.blocks:

x = block(x)

# Classification

x = self.ln_f(x)

# Use mean pooling for classification

if attention_mask is not None:

mask_expanded = attention_mask.unsqueeze(-1).expand_as(x)

sum_embeddings = torch.sum(x * mask_expanded, dim=1)

sum_mask = torch.clamp(mask_expanded.sum(dim=1), min=1e-9)

x = sum_embeddings / sum_mask

else:

x = x.mean(dim=1)

return self.classifier(x)

# 4. OPTIMIZED DATASET AND DATALOADER

class OptimizedTextDataset(Dataset):

"""

Memory-efficient dataset with proper tokenization caching

"""

def __init__(self, texts, labels, tokenizer, max_length=512):

self.texts = texts

self.labels = labels

self.tokenizer = tokenizer

self.max_length = max_length

# Pre-tokenize to save computation during training

print("Pre-tokenizing dataset...")

self.tokenized = []

for text in texts:

encoded = self.tokenizer(

text,

truncation=True,

padding='max_length',

max_length=max_length,

return_tensors='pt'

)

self.tokenized.append({

'input_ids': encoded['input_ids'].squeeze(),

'attention_mask': encoded['attention_mask'].squeeze()

})

def __len__(self):

return len(self.texts)

def __getitem__(self, idx):

return {

'input_ids': self.tokenized[idx]['input_ids'],

'attention_mask': self.tokenized[idx]['attention_mask'],

'labels': torch.tensor(self.labels[idx], dtype=torch.long)

}

def create_optimized_dataloader(dataset, batch_size=32, num_workers=None, pin_memory=True):

"""

Create optimized DataLoader with proper worker configuration

"""

if num_workers is None:

# Optimal number of workers: typically 4-8 for most systems

num_workers = min(8, torch.get_num_threads())

return DataLoader(

dataset,

batch_size=batch_size,

shuffle=True,

num_workers=num_workers,

pin_memory=pin_memory,

persistent_workers=True, # Keep workers alive between epochs

prefetch_factor=2, # Prefetch batches

drop_last=True # Ensure consistent batch sizes for mixed precision

)

# 5. TRAINING LOOP WITH MIXED PRECISION

class OptimizedTrainer:

def __init__(self, model, train_loader, val_loader, device, lr=1e-4):

self.model = model.to(device)

self.train_loader = train_loader

self.val_loader = val_loader

self.device = device

# Optimizer with weight decay

self.optimizer = torch.optim.AdamW(

model.parameters(),

lr=lr,

weight_decay=0.01,

betas=(0.9, 0.999)

)

# Learning rate scheduler

self.scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(

self.optimizer, T_max=len(train_loader) * 10

)

# Mixed precision training

self.scaler = GradScaler()

# Loss function

self.criterion = nn.CrossEntropyLoss()

def train_epoch(self):

self.model.train()

total_loss = 0

for batch_idx, batch in enumerate(self.train_loader):

# Move to device

input_ids = batch['input_ids'].to(self.device, non_blocking=True)

attention_mask = batch['attention_mask'].to(self.device, non_blocking=True)

labels = batch['labels'].to(self.device, non_blocking=True)

self.optimizer.zero_grad()

# Mixed precision forward pass

with autocast():

outputs = self.model(input_ids, attention_mask)

loss = self.criterion(outputs, labels)

# Mixed precision backward pass

self.scaler.scale(loss).backward()

# Gradient clipping for stability

self.scaler.unscale_(self.optimizer)

torch.nn.utils.clip_grad_norm_(self.model.parameters(), max_norm=1.0)

self.scaler.step(self.optimizer)

self.scaler.update()

self.scheduler.step()

total_loss += loss.item()

# Memory cleanup every 50 steps

if batch_idx % 50 == 0:

torch.cuda.empty_cache()

return total_loss / len(self.train_loader)

def validate(self):

self.model.eval()

total_loss = 0

correct = 0

total = 0

with torch.no_grad():

for batch in self.val_loader:

input_ids = batch['input_ids'].to(self.device, non_blocking=True)

attention_mask = batch['attention_mask'].to(self.device, non_blocking=True)

labels = batch['labels'].to(self.device, non_blocking=True)

with autocast():

outputs = self.model(input_ids, attention_mask)

loss = self.criterion(outputs, labels)

total_loss += loss.item()

_, predicted = outputs.max(1)

total += labels.size(0)

correct += predicted.eq(labels).sum().item()

accuracy = 100. * correct / total

avg_loss = total_loss / len(self.val_loader)

return avg_loss, accuracy

# 6. MODEL COMPILATION FOR INFERENCE

def compile_model_for_inference(model, example_input, export_path="model"):

"""

Convert model to TorchScript and ONNX for optimized inference

"""

model.eval()

# TorchScript compilation

print("Compiling to TorchScript...")

with torch.no_grad():

traced_model = torch.jit.trace(model, example_input)

traced_model.save(f"{export_path}_torchscript.pt")

# ONNX export

print("Exporting to ONNX...")

torch.onnx.export(

model,

example_input,

f"{export_path}.onnx",

export_params=True,

opset_version=11,

do_constant_folding=True,

input_names=['input_ids', 'attention_mask'],

output_names=['logits'],

dynamic_axes={

'input_ids': {0: 'batch_size', 1: 'sequence'},

'attention_mask': {0: 'batch_size', 1: 'sequence'},

'logits': {0: 'batch_size'}

}

)

return traced_model

# 7. EXAMPLE USAGE

def main():

# Configuration

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Model parameters

vocab_size = 30522 # BERT vocab size

num_classes = 10

max_seq_len = 512

batch_size = 32

# Initialize model with linear attention for efficiency

model = OptimizedTextClassifier(

vocab_size=vocab_size,

num_classes=num_classes,

dim=512,

depth=6,

heads=8,

max_seq_len=max_seq_len,

attention_type='linear' # or 'sparse' for sparse attention

)

print(f"Model parameters: {sum(p.numel() for p in model.parameters()):,}")

# Example data (replace with your actual data)

tokenizer = AutoTokenizer.from_pretrained('bert-base-uncased')

# Dummy data for demonstration

texts = ["This is a sample text for classification"] * 1000

labels = np.random.randint(0, num_classes, 1000)

# Create datasets

train_dataset = OptimizedTextDataset(texts[:800], labels[:800], tokenizer, max_seq_len)

val_dataset = OptimizedTextDataset(texts[800:], labels[800:], tokenizer, max_seq_len)

# Create optimized dataloaders

train_loader = create_optimized_dataloader(train_dataset, batch_size)

val_loader = create_optimized_dataloader(val_dataset, batch_size)

# Initialize trainer

trainer = OptimizedTrainer(model, train_loader, val_loader, device)

# Training loop

for epoch in range(5):

train_loss = trainer.train_epoch()

val_loss, val_acc = trainer.validate()

print(f"Epoch {epoch+1}: Train Loss: {train_loss:.4f}, "

f"Val Loss: {val_loss:.4f}, Val Acc: {val_acc:.2f}%")

# Compile model for inference

example_input = (

torch.randint(0, vocab_size, (1, max_seq_len)),

torch.ones(1, max_seq_len, dtype=torch.long)

)

traced_model = compile_model_for_inference(model, example_input)

print("Model compilation complete!")

if __name__ == "__main__":

main()The focus here is on efficiency and conservation. American developers are forced to spend precious intellectual resources solving problems created by infrastructure limitations, rather than focusing purely on pushing the boundaries of AI.

Scenario 2: The Chinese AI Developer (An Abundant Environment)

Meanwhile, at an AI lab in Shenzhen, where energy is plentiful and cheap, researchers can pursue the most audacious and resource-intensive ideas. They can train larger models on more data and experiment with complex architectures without worrying about the electricity cost. Failure is seen as a necessary part of scientific discovery, not a financial disaster.

A researcher here might use a powerful domestic model like Baidu's ERNIE Bot to scope out an incredibly ambitious project. Their prompt would look entirely different:

Detailed Unconstrained Creativity Prompt:

"We are initiating a large-scale multimodal simulation project. Use the entire text of the classic novel 'Dream of the Red Chamber' as the source data. Based on this, generate:

A complete 3D virtual world: Construct a detailed digital twin of the Grand View Garden and associated estates, with architecture, interiors, and landscapes that strictly adhere to the novel's descriptions and the historical context of the Qing Dynasty.

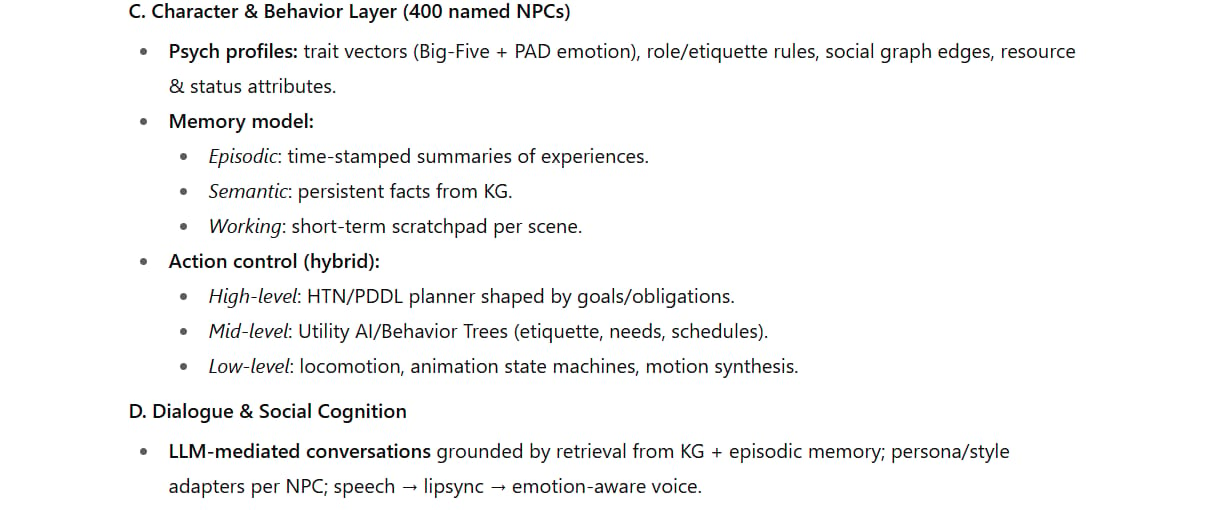

Dynamic simulation of 400 characters: Create autonomous NPCs for all named characters in the book. Each NPC must have a unique psychological model based on their actions and dialogue, allowing them to interact with each other and the environment dynamically.

A generative quest system: Develop a system that can create new, emergent storylines not present in the original text, based on the interactions that arise between the NPCs, while maintaining consistency with their established personalities and motivations.

Multi-sensory reconstruction: Simulate not just the sights and sounds, but also the smells described in the book (e.g., scents of flowers, teas, medicines) and the textures of objects (e.g., silk, brocade, porcelain).

Please outline a total system architecture, recommend the foundational AI models needed for each component (NLP, computer graphics, behavioral AI), and estimate the required computational load, assuming unlimited access to supercomputing clusters."

The focus here is on scale and ambition. When energy is no longer a limiting factor, the only constraint is imagination. They can "brute-force" problems that their Western counterparts must find clever, and often less ambitious, ways to circumvent.

The Inevitable Conclusion: An Empire On A Brittle Grid

The AI race has inadvertently become a stress test for national development models. And the results show that the United States is failing at an alarming rate. The problem is not a lack of talent or innovative spirit. Silicon Valley is still home to some of the most brilliant minds in the world. The problem is the decaying physical foundation upon which those minds must build.

We have seen this pattern repeat across industries: American companies optimize for individual wealth extraction while competitors build systemic advantages. We celebrate tax cuts and deregulation while China invests in collective capability.

This race has exposed a truth many Americans are unwilling to acknowledge: our entire model is broken. We cannot compete with nations that are capable of planning beyond the next election cycle.

The grid does not lie. Right now, it is telling us that decades of short-sighted greed have created a nation that is fundamentally unable to power its own future. China solved its energy problem years ago. We are still arguing about whether spending on infrastructure counts as socialism.

We are witnessing the early stages of a technological power shift that will define the next century. Unless America undergoes a fundamental change in how it balances individual extraction against collective investment, we will continue to lose future races to countries that understand the profound difference between being rich and being powerful.

The question now is not whether we can catch up. The question is whether we are even capable of trying, when our very system rewards all the behaviors that created this mess in the first place. A digital empire cannot be built on a crumbling physical foundation.

If you are interested in other topics and how AI is transforming different aspects of our lives or even in making money using AI with more detailed, step-by-step guidance, you can find our other articles here:

The Secret AI System For Endless Viral Videos (Yes, Really!)*

Is The Front End Dead? AI & MCP Are Making It History!*

*indicates a premium content, if any

How useful was this AI tool article for you? 💻Let us know how this article on AI tools helped with your work or learning. Your feedback helps us improve! |

Reply