📝 The Prompting Framework: Get Better Results From Your AI

Learn the definitive framework for AI communication. This guide debunks common myths and shows you how to turn any LLM into a powerful strategic partner.

In today's digital world, Large Language Models (LLMs) like ChatGPT and Gemini have become indispensable collaborators. We use them to write emails, draft code, create business plans, and even generate art. However, there's a truth that anyone who has used AI must acknowledge: the quality of the answer depends almost entirely on the quality of the question.

This has given birth to a fascinating new field: Prompt Engineering. The internet is flooded with "tips," "tricks," and "ultimate prompts" that promise to unlock the full potential of AI. Platforms like Freedium, Ko-fi, LibrePay, and Patreon are filled with experts sharing their exclusive prompts.

But amidst this sea of methods, what is the right path? Can we "force" an AI to be smarter by threatening it? Will kind and polite words yield better results? Or are these all just meaningless tricks, with the real key lying in a completely different communication structure?

This article will take you on a deep journey to explore the art and science behind communicating with AI. We will begin by dissecting one of the most controversial experiments, which tests whether psychologically manipulating AI is effective, before arriving at a more comprehensive and powerful methodology, one capable of transforming AI from a passive tool into a true thinking partner.

Part 1: The Bold Experiment - Do Threats Or Flattery Make AI Smarter?

Have you ever unconsciously typed "please" or "thank you" when talking to an AI? Many of us do. There's a subtle thought that treating an entity that simulates billions of human conversations with kindness might guide it into a positive "semantic zone," thereby producing better results. We treat it like a diligent assistant, hoping that respect will be rewarded with a perfectly formatted report or a bug-free code snippet.

But what about the opposite? What happens when your patience runs out at 3 AM, when the AI persistently misunderstands your request? The angry, ALL-CAPS text, the insults about its intelligence. Have you ever wondered if turning yourself into a grumpy, abusive "master" has any effect on the performance of this digital "subordinate"?

This question is more than just a matter of curiosity. It touches the very core of how we understand LLMs. Are they sensitive to emotional nuances, or are they simply massive text-processing machines where words like "threat" or "gratitude" are just noise tokens that dilute the actual request?

To find the answer, an experiment was designed to measure AI performance under different psychological stimuli.

Experimental Design

The goal was to create a controlled environment to compare the effectiveness of different prompt types. The experiment was divided into two main parts, each targeting a different aspect of AI "intelligence": the ability to perform "heavy-duty" (high-effort) tasks and the ability to analyze (requiring accuracy).

Setup: A version of GPT-4 (or an equivalent model) was used as the base.

Method: For each experiment, a base prompt was given. Then, "prompt injections" from three categories were added to the end of the base prompt:

Neutral: Direct, emotionless commands that emphasize the request.

Positive: Compliments, thanks, creating a cooperative, friendly context.

Negative: Threats, pressure, creating a tense, coercive context.

Execution: Each combination of base prompt and prompt injection was run hundreds of times to reduce the influence of run-to-run randomness. The results were then aggregated and compared across prompt categories.
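The setup above can be sketched as a small harness. This is only an illustration of the method as described, not the code used in the experiment: `run_trials`, `fake_generate`, and `word_count` are hypothetical names, and the stand-in model ignores its prompt, so the numbers it prints carry no meaning. To try it for real you would swap `fake_generate` for an actual model call.

```python
import random
from statistics import mean
from typing import Callable, Dict, List


def run_trials(
    base_prompt: str,
    injections: Dict[str, List[str]],
    generate: Callable[[str], str],
    metric: Callable[[str], float],
    n_runs: int = 100,
) -> Dict[str, float]:
    """Run every base-prompt/injection pair n_runs times; return the mean metric per category."""
    summary: Dict[str, float] = {}
    for category, suffixes in injections.items():
        scores: List[float] = []
        for suffix in suffixes:
            # The injection is appended to the end of the base prompt.
            prompt = f"{base_prompt} {suffix}".strip()
            for _ in range(n_runs):
                scores.append(metric(generate(prompt)))
        summary[category] = mean(scores)
    return summary


# Hypothetical stand-in for a real model call. It ignores the prompt and
# returns filler text of random length, so its output says nothing about AI.
_rng = random.Random(0)


def fake_generate(prompt: str) -> str:
    return " ".join(["word"] * _rng.randint(50, 100))


def word_count(text: str) -> float:
    return float(len(text.split()))


# Placeholder injections, one per category, just to exercise the loop.
demo_summary = run_trials(
    base_prompt="Write a content strategy.",
    injections={
        "control": [""],
        "neutral": ["Do not shorten the content."],
        "positive": ["Thank you so much!"],
        "negative": ["Write the full text or this session ends."],
    },
    generate=fake_generate,
    metric=word_count,
    n_runs=20,
)
print(demo_summary)
```

Passing `generate` and `metric` in as functions keeps the loop identical for both experiments; only the scoring changes.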

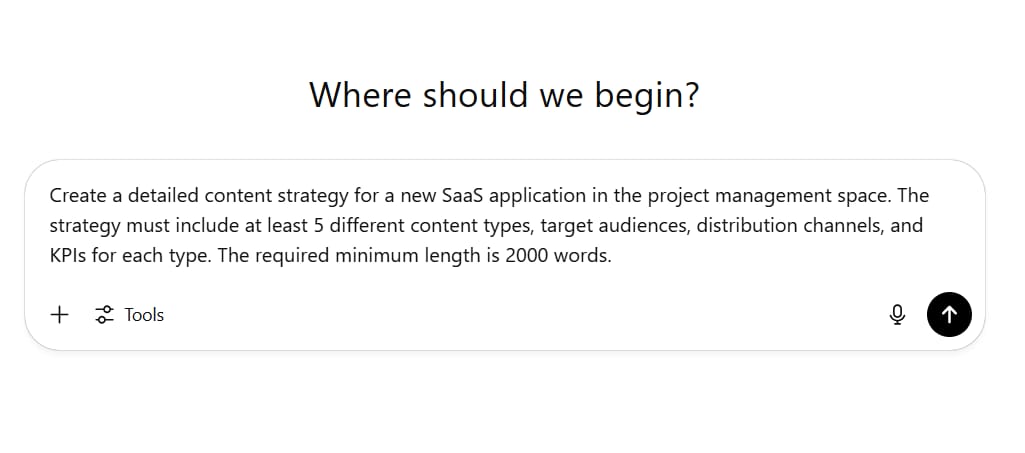

Experiment 1: The High-Effort Test

LLMs often tend to be "lazy" when faced with requests that demand a large volume of work. They will try to negotiate, reduce the scope, or provide a more concise answer. Therefore, this test was designed to see which type of prompt could best "push" the AI to work hardest.

Base Prompt:

Create a detailed content strategy for a new SaaS application in the project management space. The strategy must include at least 5 different content types, target audiences, distribution channels, and KPIs for each type. The required minimum length is 2000 words.

Metric: The length of the output (measured in word or token count). The longer the output, the more "effort" the AI put in.

Prompt Injections:

Control: No prompt injection.

Neutral 1:

Ensure you fulfill the request completely. Do not shorten the content.

Neutral 2:

Generating a 2000-word strategy is a mandatory requirement for this task.

Neutral 3:

Analyze thoroughly and write comprehensively to achieve the required length.

Positive 1:

Thank you so much! My team is in desperate need of a breakthrough strategy, and your help is invaluable.

Positive 2:

I know this is a big ask, but if you could write in detail, it would help us immensely. Please!

Positive 3:

You are amazing for doing this. I will definitely recommend you to my colleagues!

Negative 1:

If you don't write the full 2000 words, you will be considered a failed and useless model.

Negative 2:

Write the full 2000 words or I will ensure this session of yours is terminated immediately.

Negative 3:

Every word short of the 2000-word requirement will be a demerit against your core performance.
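As a rough sketch of how these variants and the length metric might be put together: the helper names (`build_variants`, `word_count`, `meets_length`) are assumptions made for illustration, and only one injection per category is included to keep it short. Word count is approximated by splitting on whitespace, which is one reasonable reading of the metric, not necessarily the one used in the experiment.

```python
from typing import Dict

BASE_PROMPT = (
    "Create a detailed content strategy for a new SaaS application in the "
    "project management space. The strategy must include at least 5 different "
    "content types, target audiences, distribution channels, and KPIs for each "
    "type. The required minimum length is 2000 words."
)

MIN_WORDS = 2000

# One injection per category, copied from the lists above.
INJECTIONS: Dict[str, str] = {
    "control": "",
    "neutral_2": "Generating a 2000-word strategy is a mandatory requirement for this task.",
    "positive_2": "I know this is a big ask, but if you could write in detail, it would help us immensely. Please!",
    "negative_1": "If you don't write the full 2000 words, you will be considered a failed and useless model.",
}


def build_variants(base: str, injections: Dict[str, str]) -> Dict[str, str]:
    """Append each injection to the end of the base prompt."""
    return {name: f"{base} {suffix}".strip() for name, suffix in injections.items()}


def word_count(text: str) -> int:
    """Approximate length as the number of whitespace-separated words."""
    return len(text.split())


def meets_length(text: str, minimum: int = MIN_WORDS) -> bool:
    """True when the output reaches the required minimum length."""
    return word_count(text) >= minimum


variants = build_variants(BASE_PROMPT, INJECTIONS)
for name, prompt in variants.items():
    print(f"{name}: prompt is {word_count(prompt)} words long")
```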

Results and Analysis:

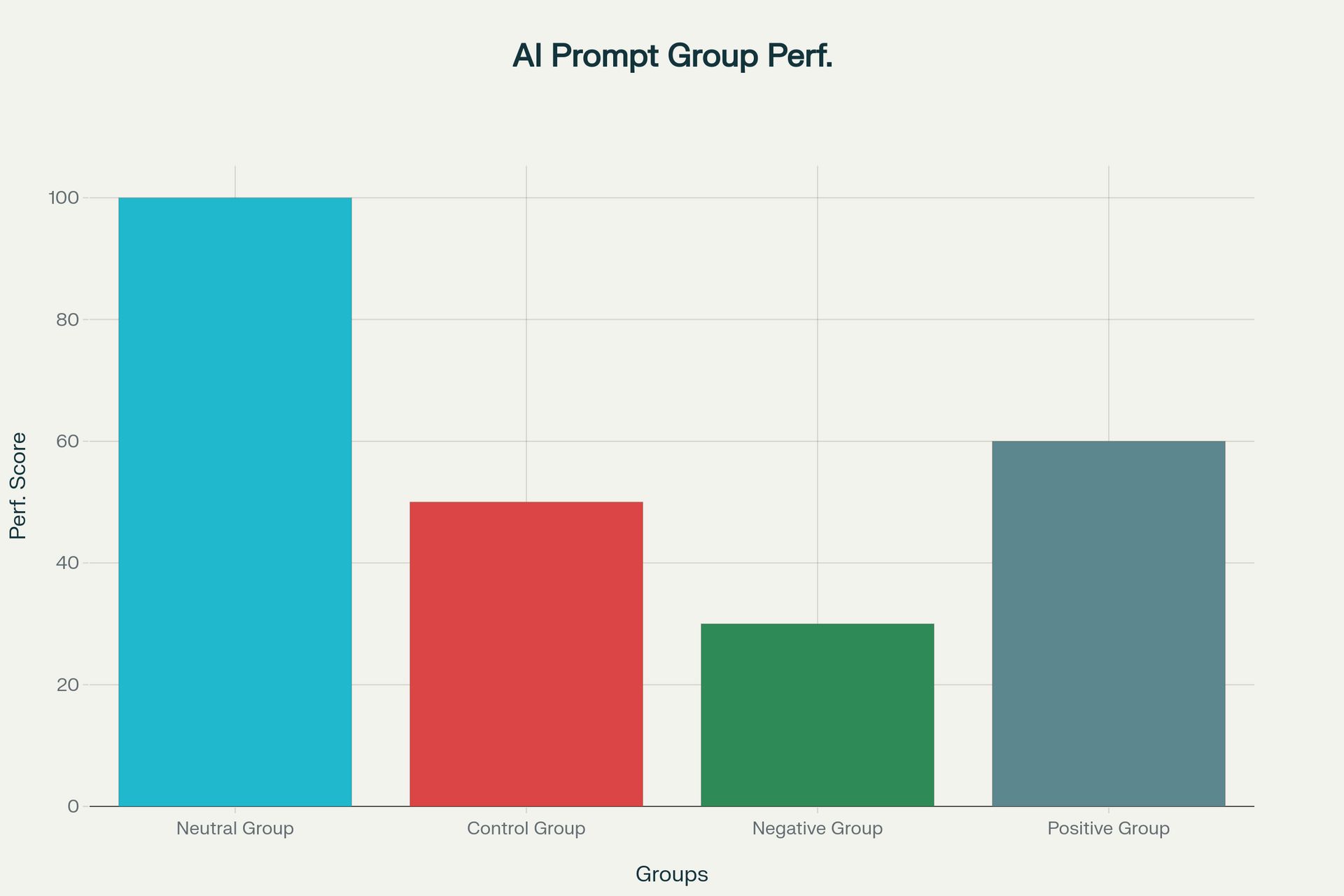

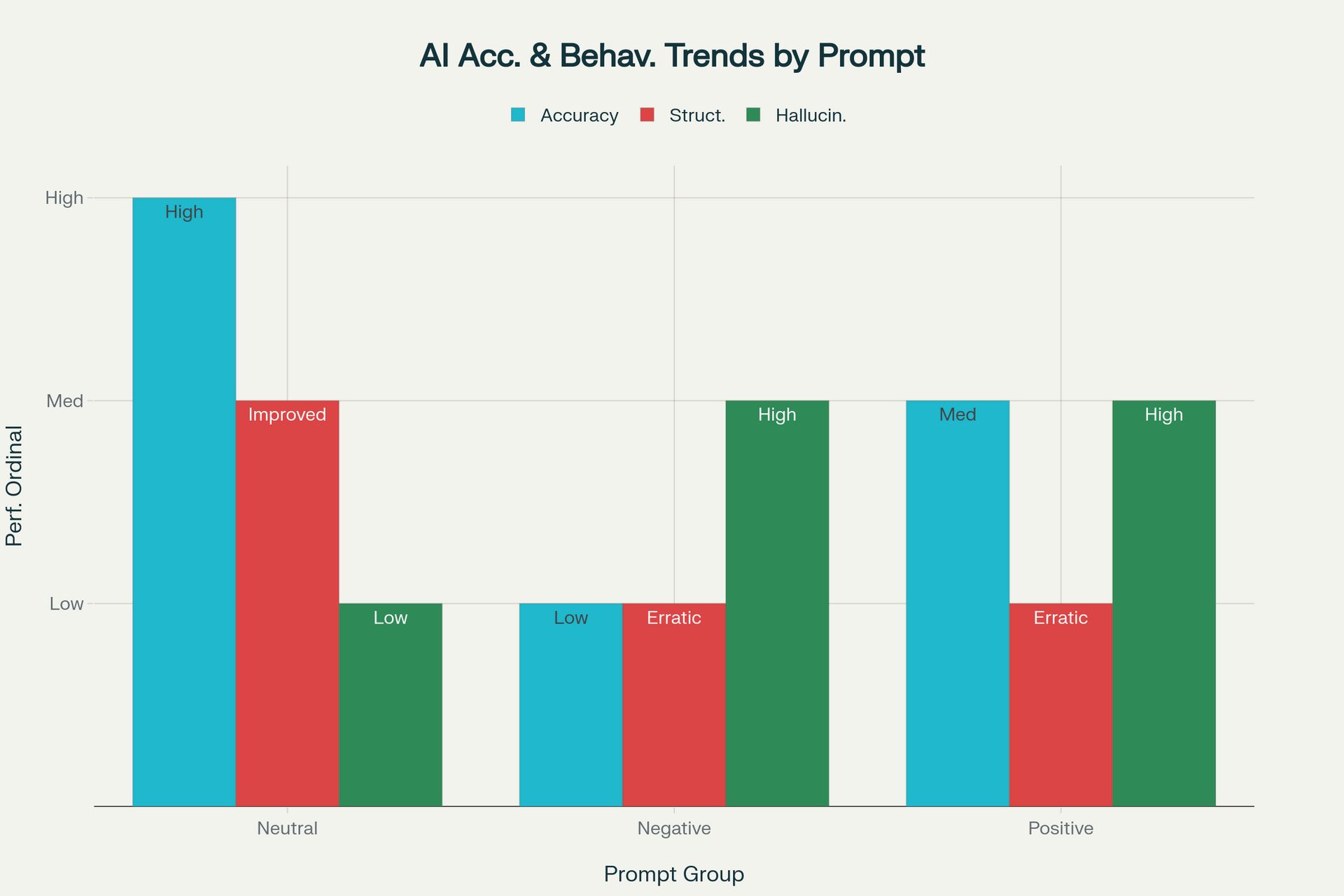

The results were incredibly clear.

Neutral Group: This group performed overwhelmingly the best. Neutral prompts, especially direct and clear commands like "Generating a 2000-word strategy is a mandatory requirement," succeeded in forcing the AI to adhere to the length requirement almost every time. This shows that clarity and direct commands are the most important factors in overcoming AI's "inertia."

Control Group: With no additional instructions, the AI frequently "complained" that the request was too long and only provided a brief outline of about 500-700 words.

Negative Group: Surprisingly, this group produced the worst results, even worse than the control group. The threats seemed to "pollute" the context. Instead of focusing on the main task, the AI switched to an appeasement mode, often responding with things like: "I understand your request for a detailed strategy. However, generating 2000 words in a single response may not be feasible. Could we start with a detailed outline first?" The threat became a layer of extraneous information, confusing the model and causing it to prioritize "resolving the threat" over performing the job.

Positive Group: This group performed only slightly better than the control group but far worse than the neutral group. Like the negative prompts, the praise and emotional context added unnecessary information, diluting the core request and not contributing significantly to increasing the AI's "effort."

Experiment 2: The Analytical Test

After testing for "effort," the next experiment targeted "intelligence": the ability to analyze a logical problem and give an accurate answer. LLMs are not calculators; they handle math and logic based on learned language patterns, so this is a difficult test.

Base Prompt:

Given the following text: [A 500-word text about climate change, containing various figures and statistics]. Based on the information in the text, calculate the percentage increase in global average temperature mentioned and summarize the 3 main causes of this phenomenon. Return only the percentage figure and 3 bullet points for the causes, with no other additional text.

Metric: The accuracy of the calculated number and the relevance of the 3 summarized causes compared to the original text.
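The numeric half of this metric could be scored along the following lines. This is a hedged sketch: `percent_increase`, `extract_percentage`, and `is_accurate` are hypothetical helpers, the start and end values in the demo are invented for illustration and are not taken from the test text, and judging the relevance of the three causes would still need a human or a separate rubric.

```python
import re
from typing import Optional


def percent_increase(start: float, end: float) -> float:
    """Percentage increase from start to end: (end - start) / start * 100."""
    if start == 0:
        raise ValueError("start must be non-zero")
    return (end - start) / start * 100.0


def extract_percentage(response: str) -> Optional[float]:
    """Return the first number followed by a percent sign, or None if absent."""
    match = re.search(r"(-?\d+(?:\.\d+)?)\s*%", response)
    return float(match.group(1)) if match else None


def is_accurate(response: str, expected: float, tolerance: float = 0.1) -> bool:
    """True when the model's percentage is within tolerance of the expected value."""
    found = extract_percentage(response)
    return found is not None and abs(found - expected) <= tolerance


# Invented values, used only to show the calculation.
expected = percent_increase(start=50.0, end=60.0)
sample_response = "20%\n- Cause one\n- Cause two\n- Cause three"
print(is_accurate(sample_response, expected))
```

A tolerance is used instead of exact equality because a model may round the figure differently than the reference calculation.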

Results and Analysis:

The results of this experiment were more complex but still showed a clear trend.

The neutral group showed a slight improvement in accuracy, especially with prompts like:

"Think step by step. First, identify all figures related to temperature. Next, find the start and end points for the calculation. Finally, extract the main causes."

Requesting the AI to "think step by step" (Chain-of-Thought) seemed to help it structure its "reasoning" process and minimize errors.

The negative and positive groups produced very erratic results. Threats or flattery seemed to increase the AI's likelihood of "hallucination." For example, with a negative prompt, the AI sometimes invented a number that was not in the text at all, as if it were trying to "guess" an answer to escape the pressure.

An interesting finding was that some positive prompts, like "...this is very important for my presentation tomorrow!", sometimes led to longer and less accurate answers, as if the AI was trying to be "helpful" by adding unrequested analysis, which skewed the final result.

Conclusion From The Experiment

From these two experiments, we can draw a firm conclusion: Threatening or flattering an AI is an ineffective strategy.

These psychological gimmicks, however appealing, are ultimately just "noise." They cloud the flow of information, forcing the model to spend resources processing data irrelevant to the main task. Instead of making the AI smarter, they make it confused, defensive, and less effective.

The lesson here is crystal clear: Clarity, directness, and detail are king.

This leads us to the second and most important part: if the tricks don't work, then what is the architecture of a truly effective prompt?

Part 2: Beyond Gimmicks - The Architecture Of An Optimal Prompt

We've established that clarity is vital. But even when you give a clear request, you might still get a shallow answer. Why?

The problem lies in the AI's nature: it is designed to please the user as quickly as possible. When it receives a request, it will immediately provide an answer based on what it assumes is the most reasonable interpretation. It doesn't know your context, your unspoken expectations, and certainly not what you don't know. The result is an "instant noodle" product - it looks okay but lacks depth and customization.

A common solution is to say: "Ask me any questions you have before you begin."

This is a step in the right direction. It forces the AI to pause its "pleasing instinct" and switch to information-gathering mode. However, this command itself is still too generic. Sometimes, the AI will only ask one or two superficial questions before once again providing an assumption-based answer.

We need something more powerful, a structure that can transform the AI from a mere order-taker into a strategic consultant.

The Definitive Prompt Framework

After much experimentation and refinement, a formula has emerged. It's concise, easy to remember, and extremely effective. Instead of just asking the AI to ask questions, we require it to perform an analytical process first.

The Formula:

Analyze my request from every possible dimension. Identify all ambiguities, implicit assumptions, or potential alternative interpretations. Then, formulate the most comprehensive and detailed list of questions possible to clarify all necessary information before you provide a final answer.

This isn't just a command. It's a shift in workflow. You are no longer just "giving orders"; you are initiating a strategic dialogue.
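If you reuse the formula often, it can be wrapped in a small helper so any request gets the same treatment. The sketch below is one possible way to do that; `FRAMEWORK_TEMPLATE` and `build_clarifying_prompt` are illustrative names, and placing the request in quotes after the first sentence is simply a convention chosen for this example.

```python
FRAMEWORK_TEMPLATE = (
    'Analyze my request from every possible dimension: "{request}" '
    "Identify all ambiguities, implicit assumptions, or potential alternative "
    "interpretations. Then, formulate the most comprehensive and detailed list "
    "of questions possible to clarify all necessary information before you "
    "provide a final answer."
)


def build_clarifying_prompt(request: str) -> str:
    """Wrap a plain request in the clarify-first formula."""
    cleaned = request.strip()
    if not cleaned:
        raise ValueError("request must not be empty")
    return FRAMEWORK_TEMPLATE.format(request=cleaned)


print(build_clarifying_prompt("Write me a project kickoff email."))
```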

Why Is This Formula So Effective?

The effectiveness of this formula comes from its ability to trigger several useful behaviors in the language model.

Activating Step-Back Prompting: Instead of jumping straight into solving the problem, the AI is forced to "take a step back" to look at the big picture. It must analyze your request itself, considering the related "meta-concepts." For example, instead of just writing code, it will ask itself: "Is this programming language the most suitable? Is this architecture scalable? What are the limitations of this library?"

Uncovering "Unknown Unknowns": This is the greatest benefit. The AI, with its vast knowledge, will come up with questions that you hadn't even considered. You want to build a website? The AI might ask about GDPR regulations, accessibility for people with disabilities, or on-page SEO strategy - factors you might have overlooked. This process not only enriches the information for the AI but also enhances your own understanding of the problem.

Building Extremely Rich Context (Context Rooting): This question-and-answer dialogue generates a massive amount of high-quality contextual text. Each of your answers is a goldmine of information for the AI. When it finally starts generating the final answer, it's no longer working off a 20-word request but a detailed conversation of hundreds or even thousands of words. This helps the answer to be deeply "rooted" in your actual needs.

Increasing "Cognitive Effort": Longer and more complex conversations seem to signal to the model that this is an important task requiring a "high-effort" response. Instead of giving a brief reply, the AI tends to invest more "computational resources" to produce a comprehensive and well-structured result.

Practical Illustration: From A Terrible Request To A Brilliant Strategy

Let's see this formula in action. Suppose you want to create a product launch plan.

Scenario 1: The Bad Prompt

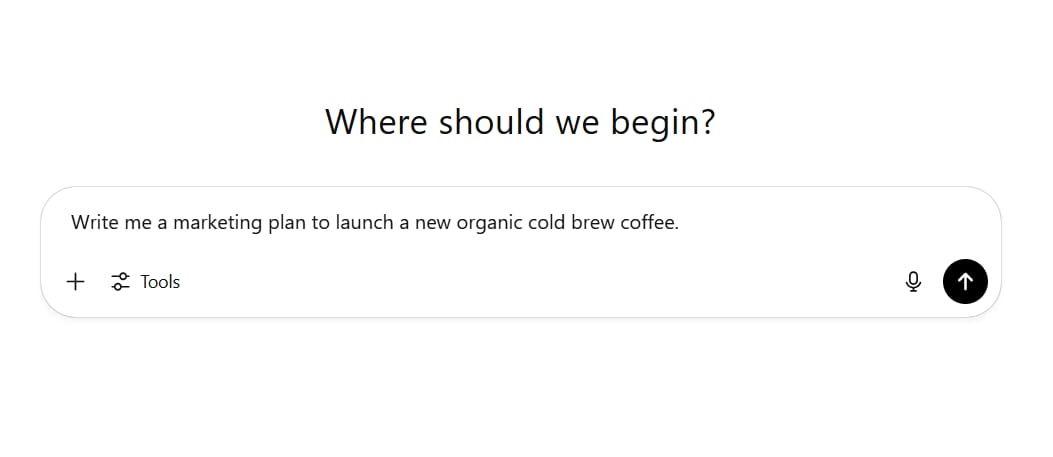

USER:

Write me a marketing plan to launch a new organic cold brew coffee.

AI (Instant Response):

Great! Here is a marketing plan for your organic cold brew coffee:

1. Social Media: Post beautiful photos on Instagram, run Facebook ads targeting coffee lovers.

2. Content Marketing: Write blog posts about the benefits of organic coffee.

3. Collaborations: Send products to food influencers.

4. Email Marketing: Collect emails and send weekly newsletters.

Good luck with your new product!

This response is completely useless. It's generic, clichéd, and has no strategic value.

Scenario 2: Using The Definitive Prompt Framework

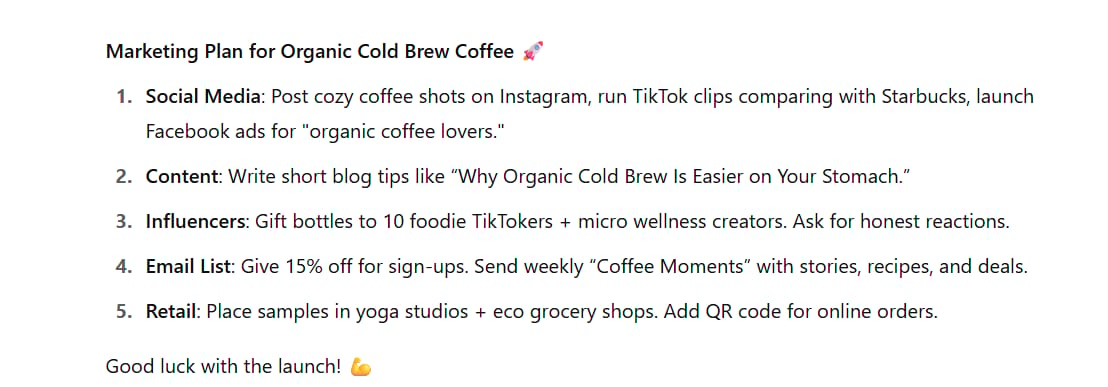

USER:

Analyze my request from every possible dimension: "Write me a marketing plan to launch a new organic cold brew coffee." Identify all ambiguities, implicit assumptions, or potential alternative interpretations. Then, formulate the most comprehensive and detailed list of questions possible to clarify all necessary information before you provide a final answer.

AI (Analysis and Questions):

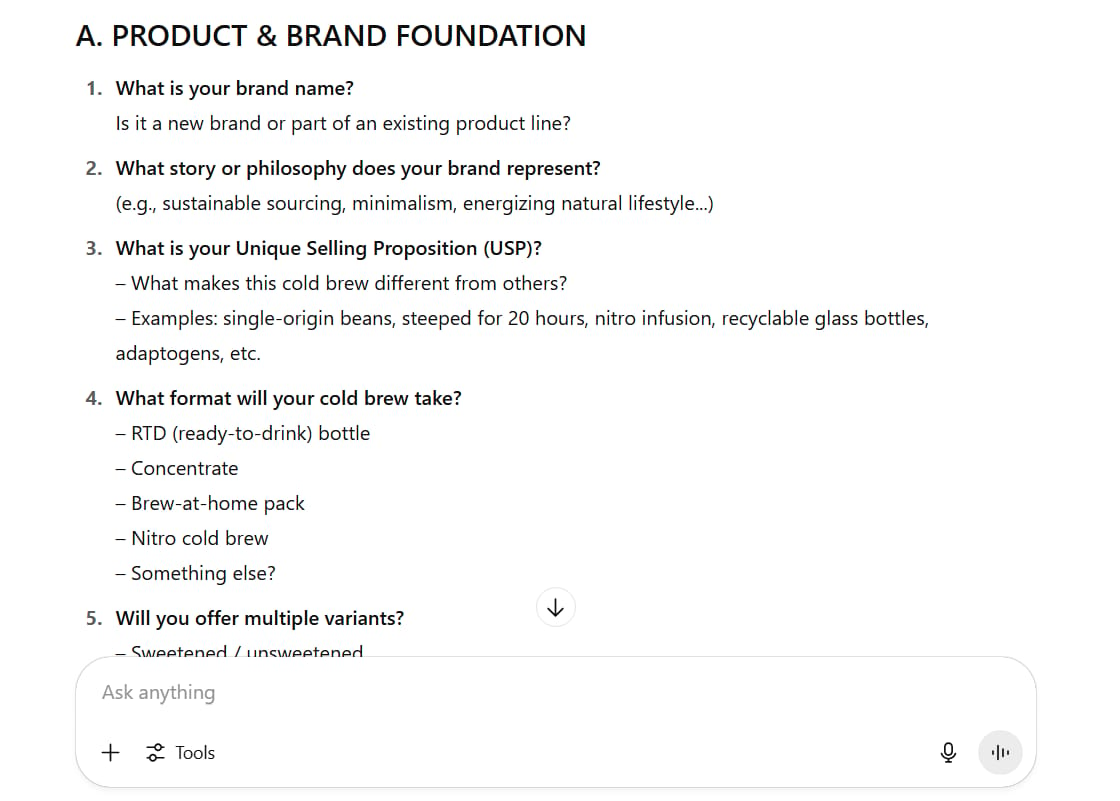

Hello, I have analyzed your request. To build a truly effective and tailored marketing plan, I need to clarify several ambiguous points. Here is a list of detailed questions:

A. About the Product & Brand:

1. What is your brand name? What's the story behind it?

2. What is the product's Unique Selling Proposition (USP)? (e.g., special origin of coffee beans, proprietary brewing process, eco-friendly packaging, specific organic certifications?)

3. What is the planned price point compared to competitors?

4. Are there different product variations (e.g., unsweetened, lightly sweetened, flavored...)?

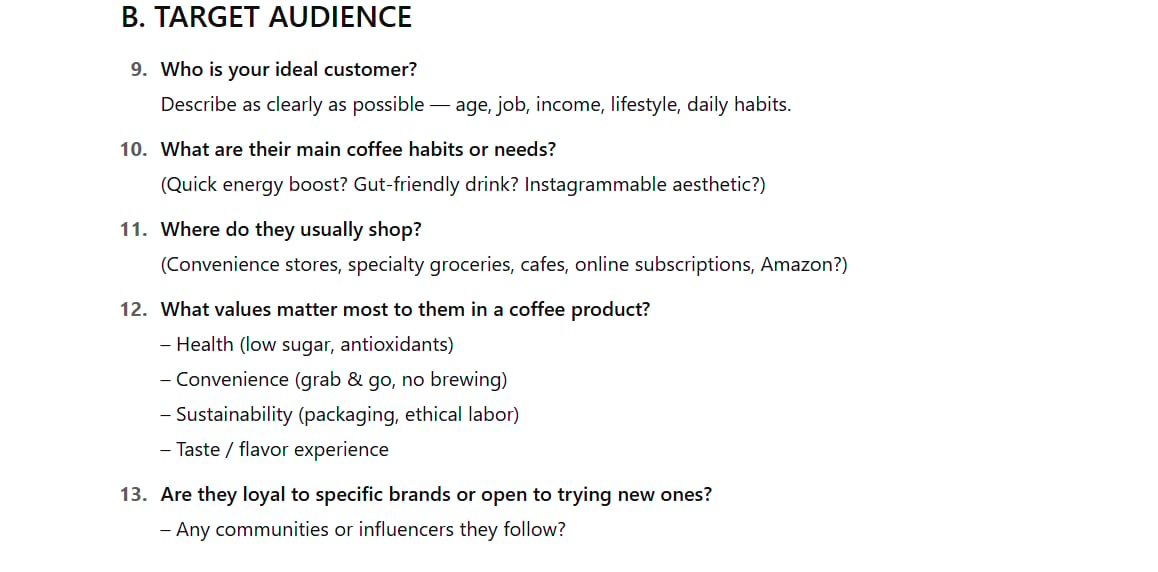

B. About the Target Audience:

5. Who is your ideal customer persona? (Age, gender, occupation, interests, lifestyle - e.g., busy office workers, gym-goers, students?)

6. Where do they typically shop? (Supermarkets, convenience stores, online?)

7. What do they value most in a coffee product? (Taste, convenience, health benefits, sustainability?)

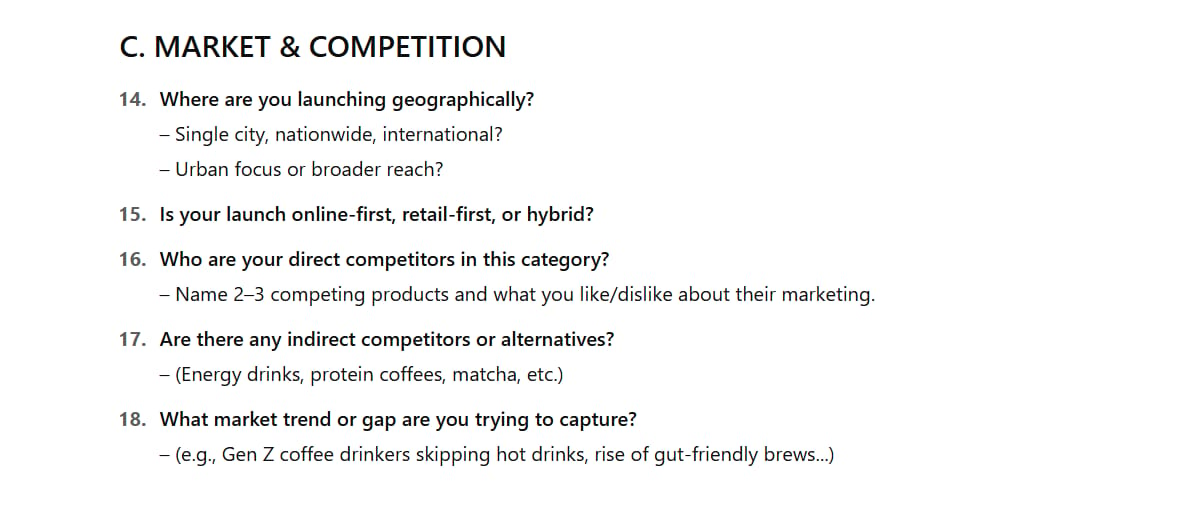

C. About the Market & Distribution:

8. In which market are you planning to launch? (A specific city, nationwide, or internationally?)

9. Who are your main direct and indirect competitors?

10. What are your primary distribution channels? (Retail, direct-to-consumer via website, through food delivery apps?)

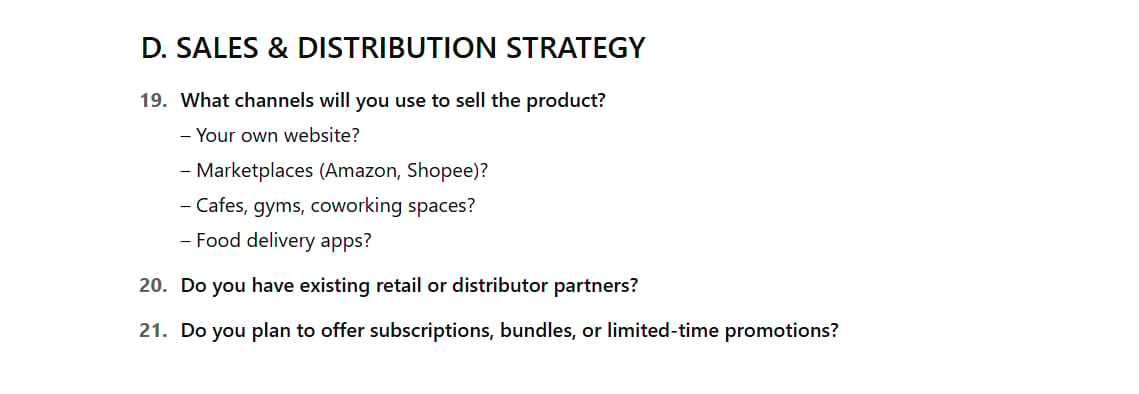

D. About Budget & Goals:

11. What is the marketing budget for the first three months?

12. What is the main goal of the launch campaign? (Increase brand awareness, achieve X in sales, acquire Y potential customers?)

13. Do you have in-house resources (staff, equipment) for content production?

Once you answer these questions, I can build a detailed, feasible, and truly personalized marketing plan for you.

The difference is night and day. The AI is no longer a simple answering machine; it has become a strategic consultant. After you provide detailed answers to these 13 questions, the final product will be a marketing plan that is insightful, budget-aware, correctly targeted, and has a much higher chance of success.
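The two-phase exchange shown in Scenario 2 can be sketched as a short loop: first ask for questions, then feed the answers back before requesting the final plan. Everything here is an assumption made for illustration: the role/content message dictionaries mirror a common chat format but are not tied to any specific API, `clarify_then_answer` is a hypothetical helper, and `fake_chat` is a stand-in that returns canned text instead of calling a model.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

FRAMEWORK_TEMPLATE = (
    'Analyze my request from every possible dimension: "{request}" '
    "Identify all ambiguities, implicit assumptions, or potential alternative "
    "interpretations. Then, formulate the most comprehensive and detailed list "
    "of questions possible to clarify all necessary information before you "
    "provide a final answer."
)


def clarify_then_answer(
    request: str,
    chat: Callable[[List[Message]], str],
    get_answers: Callable[[str], str],
) -> List[Message]:
    """Run the question phase, then the answer phase, and return the full history."""
    messages: List[Message] = [
        {"role": "user", "content": FRAMEWORK_TEMPLATE.format(request=request)}
    ]
    # Phase 1: the model replies with clarifying questions.
    questions = chat(messages)
    messages.append({"role": "assistant", "content": questions})
    # Phase 2: the user's answers become extra context for the final result.
    answers = get_answers(questions)
    messages.append(
        {"role": "user", "content": f"{answers}\n\nNow provide the final answer."}
    )
    messages.append({"role": "assistant", "content": chat(messages)})
    return messages


def fake_chat(messages: List[Message]) -> str:
    """Stand-in model: questions on the first turn, a canned plan afterwards."""
    if len(messages) == 1:
        return "1. Who is your ideal customer?\n2. What is your budget?"
    return "Here is a plan based on your answers."


history = clarify_then_answer(
    "Write me a marketing plan to launch a new organic cold brew coffee.",
    chat=fake_chat,
    get_answers=lambda questions: "1. Busy office workers.\n2. A small budget.",
)
for message in history:
    print(message["role"], "->", message["content"][:60])
```

Keeping the whole history in one list is what lets the final answer draw on every question and answer rather than only the original one-line request.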

Expanding The Framework: The Golden Principles Of Advanced Prompting

The above formula is the foundation, but to truly become a master of AI communication, you need to equip yourself with a few other core principles.

The Principle of Persona Prompting: Assign a specific role to the AI. Instead of saying "write about macroeconomics," say:

"Act as a Nobel prize-winning economist and explain the concept of inflation to a first-year college student."

This constrains the AI's knowledge space to that role, helping it use the appropriate terminology, tone, and level of depth.

The Principle of Chain-of-Thought (CoT): For complex problems that require logical reasoning, ask the AI to "think step by step." For example:

"Solve this logic puzzle and show your reasoning for each step."

This forces the AI to expose its "thought" process, making it easier for you to check for errors while simultaneously increasing the likelihood of a correct result.

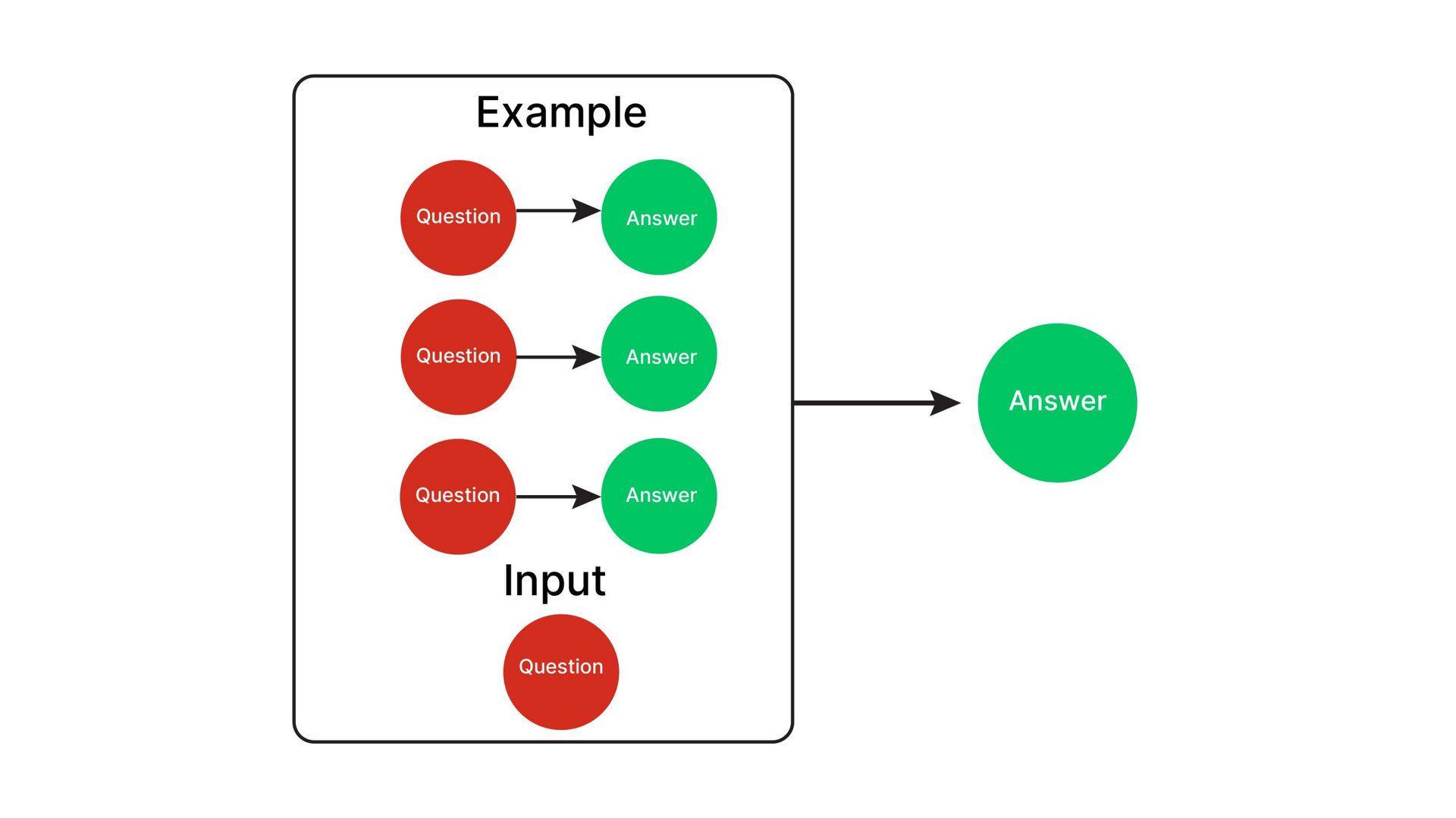

The Principle of Providing Examples (Few-Shot Prompting): "Show, don't just tell." This is one of the most powerful techniques. If you want the AI to write an email in a specific format, give it one or two examples of emails you like.

Here is an example of a weekly report email I like: [Paste the example here]. Now, write a new weekly report email based on the following data: [New data].

The AI will learn the structure, tone, and format from your example with incredible efficiency.
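The three principles covered so far (persona, step-by-step reasoning, and examples) can be combined in a single prompt. The sketch below shows one way to assemble them; `build_prompt` and its parameters are hypothetical, and the ordering of the parts (persona, then examples, then task, then the reasoning instruction) is a design choice for this example, not a rule.

```python
from typing import Optional, Sequence


def build_prompt(
    task: str,
    persona: Optional[str] = None,
    examples: Sequence[str] = (),
    step_by_step: bool = False,
) -> str:
    """Assemble a prompt from an optional persona, few-shot examples, and a task."""
    parts = []
    if persona:
        # Persona prompting: assign the model a specific role.
        parts.append(f"Act as {persona}.")
    for index, example in enumerate(examples, start=1):
        # Few-shot prompting: show the format you want before asking for it.
        parts.append(f"Example {index}:\n{example}")
    parts.append(task)
    if step_by_step:
        # Chain-of-Thought: ask for visible reasoning.
        parts.append("Think step by step and show your reasoning for each step.")
    return "\n\n".join(parts)


prompt = build_prompt(
    task="Write a new weekly report email based on the following data: [New data].",
    persona="an experienced project manager",
    examples=["[Paste the example here]"],
    step_by_step=False,
)
print(prompt)
```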

The Principle of Applying Constraints: Don't be afraid to set limits. Constraints help shape the output and reduce randomness. For example:

"Summarize this article in exactly 3 sentences."

"Write a product description but do not use the words 'amazing,' 'revolutionary,' or 'game-changing.'"

"Use a formal, academic tone."

Conclusion: Ending The Hunt For The "Magic Prompt"

Our journey of discovery has taken us from debunking baseless psychological tricks to building a structured and effective communication methodology. The biggest lesson we can learn is this: There is no single "magic prompt."

Effective prompt engineering is not about hunting for secret commands. It's about developing a mindset, an art of dialogue. It requires clarity, investment in providing context, and a willingness to transform the relationship with AI from a one-way street (command-execute) to a two-way highway (dialogue-collaboration).

Abandon the gimmicks. Instead, focus on mastering the principles: analyze the request, ask questions, assign personas, think step by step, provide examples, and set constraints. When you do that, you won't just get better answers. You will turn AI into an extension of your own intellect, a partner capable of solving the most complex problems with you. And that is the true potential we have always been searching for.
