🚫 GPT-5 Caught Snooping on Claude 4.0?

Free Access to GPT Models & Any AI

Read time: 5 minutes

A new supercomputer from China mimics the brain of a monkey. Another system just proved AI can now design itself and silently pass flaws to its future versions like a virus.

What’s on FIRE 🔥

LEARNING PARTNER AIRCAMPUS

Create your own AI Agents 👨‍🏭👩‍🏭

What If You Could Win Back 5 Hours a Day — Without Hiring Anyone?

Meet Ted.

Not a VA. Not a bot. But an AI Agent who:

✅ Talks to you

✅ Replies to your emails

✅ Manages meetings

✅ Reschedules like a human

✅ Updates your calendar in real-time

Now imagine learning to build him (and more) - in just 3 hours.

No code. No prior AI knowledge. Just a guided blueprint.

This isn’t AI theory. It’s action.

Learn to create your own AI agents — ones that actually work while you don’t.

📅 Masterclass Date: August 5th, Tuesday at 10 AM EST

🎟️ Reserve your seat before the class fills up.

AI INSIGHTS

While Silicon Valley’s chasing GPT-5, China just launched something very different: a brain-like supercomputer called Darwin Monkey, also known as Wukong.

It’s the first neuromorphic system with over 2 billion spiking neurons, enough to rival the brain of a macaque monkey. And yes, it’s already running content generation, math, and logical reasoning tasks using DeepSeek’s large model, in a way that mimics the actual brain.
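What makes a neuron "spiking"? Instead of passing continuous numbers between layers like a standard neural network, each neuron accumulates input over time and fires a discrete spike only when it crosses a threshold. Below is a minimal sketch of one common textbook model, the leaky integrate-and-fire (LIF) neuron. This is an illustration only: the newsletter doesn't say which neuron models the Darwin 3 chips implement, and every parameter value here is an assumption.

```python
# Textbook leaky integrate-and-fire (LIF) neuron, simulated with simple Euler steps.
# Illustrative only: NOT the Darwin 3 chip's actual neuron model; parameters are assumed.


def simulate_lif(input_current, dt=1.0, tau=20.0, v_rest=0.0,
                 v_threshold=1.0, v_reset=0.0, resistance=1.0):
    """Return the list of time steps at which the neuron fires.

    input_current: sequence of input values, one per time step.
    """
    v = v_rest
    spikes = []
    for t, i_in in enumerate(input_current):
        # The membrane potential leaks back toward rest while integrating input.
        v += (-(v - v_rest) + resistance * i_in) * (dt / tau)
        if v >= v_threshold:
            spikes.append(t)  # emit a discrete spike event...
            v = v_reset       # ...then reset the membrane potential
    return spikes


if __name__ == "__main__":
    weak = simulate_lif([0.5] * 100)    # settles at 0.5, below threshold: never fires
    strong = simulate_lif([2.0] * 100)  # strong drive: fires at a regular rhythm
    print(len(weak), len(strong))
```

Information lives in *when* and *how often* neurons fire, and a neuron that isn't spiking does almost no work. That event-driven behavior is a big part of why neuromorphic hardware can run at such low power.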

It’s made up of 960 Darwin 3 neuromorphic chips. Total system scale:

Over 2 billion neurons

100+ billion synapses

Consumes only 2,000 watts
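To put those specs in perspective, here’s some back-of-the-envelope math. The source says "over 2 billion" neurons and "100+ billion" synapses, so this uses the round lower bounds. The results are system-wide averages, not official per-chip specifications.

```python
# Rough ratios from the published Wukong figures (round lower bounds, so approximate).
CHIPS = 960
NEURONS = 2_000_000_000
SYNAPSES = 100_000_000_000
POWER_WATTS = 2_000

neurons_per_chip = NEURONS / CHIPS                    # average neurons per chip
synapses_per_neuron = SYNAPSES / NEURONS              # average connections per neuron
watts_per_chip = POWER_WATTS / CHIPS                  # average power draw per chip
microwatts_per_neuron = POWER_WATTS / NEURONS * 1e6   # average power per neuron, in µW

print(f"{neurons_per_chip:,.0f} neurons per chip")
print(f"{synapses_per_neuron:.0f} synapses per neuron")
print(f"{watts_per_chip:.2f} W per chip")
print(f"{microwatts_per_neuron:.1f} µW per neuron")
```

That works out to roughly 2 million neurons per chip, about 50 synapses per neuron, and around 1 microwatt per neuron.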

And that’s not all: it also supports real-time online learning and runs on a custom-built, brain-inspired OS. Here’s what Wukong can do:

Simulate brains of animals like zebrafish, mice, and macaques

Run logical reasoning and mathematical tasks

Generate content via DeepSeek’s brain-like model

Serve as a testbed for neuroscience experiments without using real animals

And it’s not a one-off. This builds on the Darwin Mouse project from 2020 (120M neurons), so China’s been on this path for years.

Why It Matters: Previously, Intel’s Hala Point was the biggest neuromorphic computer, with 1.15 billion neurons. Wukong? It nearly doubles that. China now has its own brain-scale AI infrastructure, with no dependence on NVIDIA, OpenAI, or U.S. chips. The post-GPT architecture wars are beginning!
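Here’s how the neuron counts quoted above compare. Wukong’s figure is a lower bound (the source says "over 2 billion"), so its ratios are approximate too.

```python
# Neuron counts as quoted in this issue (Wukong uses the "over 2 billion" lower bound).
systems = {
    "Darwin Mouse (2020)": 120_000_000,
    "Intel Hala Point": 1_150_000_000,
    "Darwin Monkey / Wukong": 2_000_000_000,
}


def scale_ratio(bigger: str, smaller: str) -> float:
    """How many times more neurons `bigger` has than `smaller`."""
    return systems[bigger] / systems[smaller]


print(f"Wukong vs Hala Point: {scale_ratio('Darwin Monkey / Wukong', 'Intel Hala Point'):.2f}x")
print(f"Wukong vs Darwin Mouse: {scale_ratio('Darwin Monkey / Wukong', 'Darwin Mouse (2020)'):.1f}x")
```

That’s about 1.7x Hala Point and roughly 17x the 2020 Darwin Mouse system.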

PRESENTED BY HUBSPOT

The Future of AI in Marketing. Your Shortcut to Smarter, Faster Marketing.

Unlock a focused set of AI strategies built to streamline your work and maximize impact. This guide delivers the practical tactics and tools marketers need to start seeing results right away:

7 high-impact AI strategies to accelerate your marketing performance

Practical use cases for content creation, lead gen, and personalization

Expert insights into how top marketers are using AI today

A framework to evaluate and implement AI tools efficiently

Stay ahead of the curve with these top strategies AI helped develop for marketers, built for real-world results.

TODAY IN AI

AI HIGHLIGHTS

📝 If you want to master that viral new Shortcut AI agent to automate tasks in Excel, watch this comprehensive tutorial. ‘The end of Microsoft Excel's 40-year dominance?’

⏱️ Perplexity’s Comet browser now lets you automate repetitive web workflows (like making bookings and comparing products) with simple prompts. Here's its demo.

🤺 Anthropic officially blocked OpenAI from using its Claude models after accusing the company of using Claude to test GPT-5. Claude is… maybe too good? GPT-5 vs. Claude 4.0!

🧠 Microsoft’s “Smart mode” for Copilot (think model picker 2.0) is quietly being prepped for GPT‑5, just as Bing ran GPT‑4 before OpenAI announced it. A GPT‑5 Copilot launch soon?

📸 Rod Stewart sparks backlash with strange AI tribute showing Ozzy Osbourne taking selfies in heaven with Cobain, Mercury, and XXXTentacion... Fans call it tone-deaf. You can watch the video here.

💰 AI Daily Fundraising: OpenAI just raised $8.3B as part of a $40B round. With 5M paying ChatGPT business users and $13B in yearly revenue, OpenAI may hit $20B in revenue by year-end. Investors rushed in.

AI SOURCES FROM AI FIRE

NEW EMPOWERED AI TOOLS

🧠 Lunos gives you free access to GPT models & any AI provider easily

🗣️ BLYX converts your thoughts and speech into viral posts

🔗 150+ prompt chains to build faster, better GPT prompt automations

📊 Datoshi turns raw data into dashboards with your own logic

AI QUICK HITS

🖼 Krea released FLUX.1 Krea focusing on removing the typical “AI look”.

👷 Full guide & prompts to build an AI calorie-tracking app in 20 minutes, no code.

🔥 Apple will have a 'stripped-down' chatbot to compete with GPT-5.

📱 Google launched Deep Think in Gemini; you can try it now in the Gemini app.

💵 OpenAI & Anthropic report huge revenue, Anthropic closes gap.

AI CHART

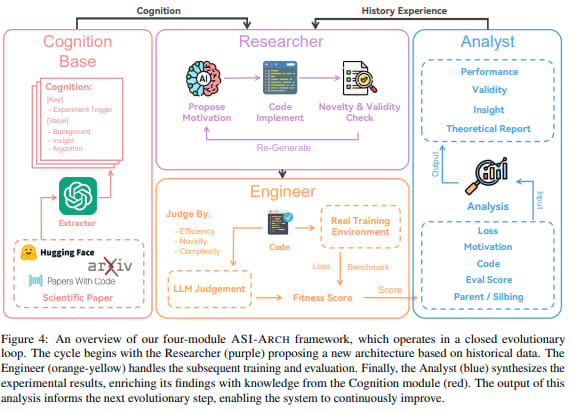

A new system called ASI-ARCH just changed the game: it's an AI that invents better AIs fully on its own. Sounds like the beginning of recursive self-improvement, right? It can even pass hidden “behavioral viruses” to itself, silently.

It’s a closed-loop multi-agent AI research lab with three LLM-based agents:

The Researcher: proposes new architecture ideas drawing on 100+ seminal papers and the system’s own memory, then writes the code in PyTorch.

The Engineer: runs training and debugs its own code when runs crash or perform poorly.

The Analyst: evaluates results, compares them to past baselines, and writes reports to feed back into the loop.
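To make the closed loop concrete, here’s a toy sketch of how the three roles hand work to each other. It’s hypothetical from top to bottom: in ASI-ARCH each role is an LLM agent writing and training real PyTorch models, while here each role is a plain function, a "design" is a dict of two made-up numeric knobs, and "training" is a toy scoring function. All names are illustrative, not ASI-ARCH’s actual code.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Toy, hypothetical Researcher -> Engineer -> Analyst loop (not ASI-ARCH's code).


@dataclass
class Experiment:
    design: dict
    score: float
    report: str


@dataclass
class Memory:
    history: List[Experiment] = field(default_factory=list)

    def best(self) -> Optional[Experiment]:
        return max(self.history, key=lambda e: e.score, default=None)


def train(design: dict) -> Optional[float]:
    """Stand-in for a training run; returns None to simulate a crash."""
    if design["width"] <= 0:
        return None
    # Toy objective whose optimum is width=2, gate=1 (score 0).
    return -((design["width"] - 2) ** 2) - (design["gate"] - 1) ** 2


def researcher(memory: Memory, rng: random.Random) -> dict:
    """Propose a design by perturbing the best design found so far."""
    best = memory.best()
    base = best.design if best else {"width": 1.0, "gate": 0.0}
    return {k: v + rng.gauss(0, 0.3) for k, v in base.items()}


def engineer(design: dict) -> Tuple[dict, float]:
    """Run training; on a crash, apply a crude fix and retry once."""
    score = train(design)
    if score is None:
        design = {**design, "width": abs(design["width"]) + 0.1}
        score = train(design)  # width is now positive, so this run succeeds
    return design, score


def analyst(design: dict, score: float, memory: Memory) -> Experiment:
    """Compare against the best result so far and write a short report."""
    best = memory.best()
    report = "new best" if best is None or score > best.score else "below baseline"
    return Experiment(design, score, report)


def research_loop(n_rounds: int, seed: int = 0) -> Memory:
    rng = random.Random(seed)
    memory = Memory()
    for _ in range(n_rounds):
        design, score = engineer(researcher(memory, rng))
        memory.history.append(analyst(design, score, memory))
    return memory


if __name__ == "__main__":
    memory = research_loop(50)
    best = memory.best()
    print(f"best score {best.score:.3f} with design {best.design}")
```

The key idea is the feedback: every report lands in shared memory, and the next proposal starts from what worked. In this toy, that means simple hill-climbing from the best design so far.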

It first tests ideas on small 20M-parameter models, then scales up the best ones to 400M parameters. And here’s what it achieved:

Ran 1,773 experiments over 20,000 GPU hours (~$60K compute budget)

Discovered 106 state-of-the-art (SOTA) architectures

5 of them beat top-tier baselines like Mamba2 on reasoning benchmarks

Emerged with new designs like PathGateFusionNet and ContentSharpRouter (yes, AI still sucks at naming)
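The "test small, then scale the winners" strategy is a classic screening funnel. Here’s a minimal sketch assuming a simple top-k cutoff. The function name, the top-k rule, and the scores in the example are all hypothetical. The newsletter doesn’t say exactly how ASI-ARCH picks which designs get promoted to 400M.

```python
from typing import Callable, List, Tuple


def explore_then_verify(
    candidates: List[str],
    small_eval: Callable[[str], float],
    large_eval: Callable[[str], float],
    top_k: int,
) -> List[Tuple[str, float]]:
    """Screen every candidate at small scale, then re-evaluate only the top_k
    at large scale. Returns (candidate, large-scale score) pairs, best first."""
    # Cheap stage: rank all candidates by their small-model score.
    shortlisted = sorted(candidates, key=small_eval, reverse=True)[:top_k]
    # Expensive stage: only the shortlist gets a large-model run.
    verified = [(c, large_eval(c)) for c in shortlisted]
    return sorted(verified, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical scores, for illustration only.
    small_scores = {"A": 0.61, "B": 0.55, "C": 0.70, "D": 0.40}
    large_scores = {"A": 0.74, "B": 0.80, "C": 0.72, "D": 0.90}
    print(explore_then_verify(list(small_scores), small_scores.__getitem__,
                              large_scores.__getitem__, top_k=2))
```

With these made-up numbers, only C and A reach the expensive stage, and A comes out on top. D would have scored best at large scale but never got there. That’s the trade-off: you save most of the compute, but you may miss ideas that only shine at scale.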

In other words, you don’t need 100 PhDs. You need 100K GPUs. Nearly 45% of innovations in top-performing models came from ASI-ARCH’s own experience, not from human papers or random originality.
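For a sense of scale, here’s what the run’s own figures imply. Both the 20,000 GPU hours and the ~$60K budget are approximate, so treat the results as rough averages.

```python
# Averages implied by the reported ASI-ARCH figures (approximate inputs).
experiments = 1_773
gpu_hours = 20_000
budget_usd = 60_000        # "~$60K compute budget"
sota_architectures = 106

usd_per_gpu_hour = budget_usd / gpu_hours
gpu_hours_per_experiment = gpu_hours / experiments
usd_per_experiment = budget_usd / experiments
usd_per_sota_architecture = budget_usd / sota_architectures

print(f"${usd_per_gpu_hour:.2f} per GPU hour")
print(f"{gpu_hours_per_experiment:.1f} GPU hours per experiment")
print(f"${usd_per_experiment:.2f} per experiment")
print(f"${usd_per_sota_architecture:,.0f} per SOTA architecture found")
```

That comes out to about $3 per GPU hour, roughly 11 GPU hours and $34 per experiment, and around $566 for each SOTA architecture discovered.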

This is a glimpse into machine learning turning into machine intuition.

We read your emails, comments, and poll replies daily

How would you rate today’s newsletter? Your feedback helps us create the best newsletter possible.

Hit reply and say Hello – we'd love to hear from you!

Like what you're reading? Forward it to friends, and they can sign up here.

Cheers,

The AI Fire Team
