- AI Fire


🥊 We Tested Grok 4... And The Results Are NOT What You Think!

It's mind-blowing for complex coding & math, but surprisingly bad at creative tasks. Here's our full, hands-on review



Build Anything With Grok 4 and n8n: Your New AI Automation Superpower

Well, well, well. Just when you thought the great AI model wars couldn't get any spicier, along comes Grok 4 to crash the party, like that friend who shows up uninvited but brings the best snacks. Released on July 9th, 2025, this latest creation from xAI (yes, Elon Musk's company) isn't just another chatbot; it's a heavyweight contender making some incredibly bold claims about being the "world's best AI model."

But here's the thing about the AI space: hype is cheap. We're not just going to take anyone's word for it, not even from the man who launches rockets for fun. We are going to put this bad boy through its paces in a real-world development environment. We're going to connect it to the powerful automation platform n8n and see if it can actually walk the walk.

This guide is the result of a week spent in the trenches with Grok 4. I tasked it with real-world coding challenges, from simple bug fixes that plague every developer's life to building entire, complex features from scratch. The results were a fascinating mix of absolutely mind-blowing and deeply humbling.

So, if you're ready to find out whether Grok 4 deserves a spot in your developer toolkit, you're in the right place. I'll show you exactly how to add it to your AI automations. We'll look closely at the real-world test results, and we'll explore the problems, solutions, and "oh, that's why it didn't work" moments in modern AI development.

Part 1: What Exactly is Grok 4? (And Why Should You Care?)

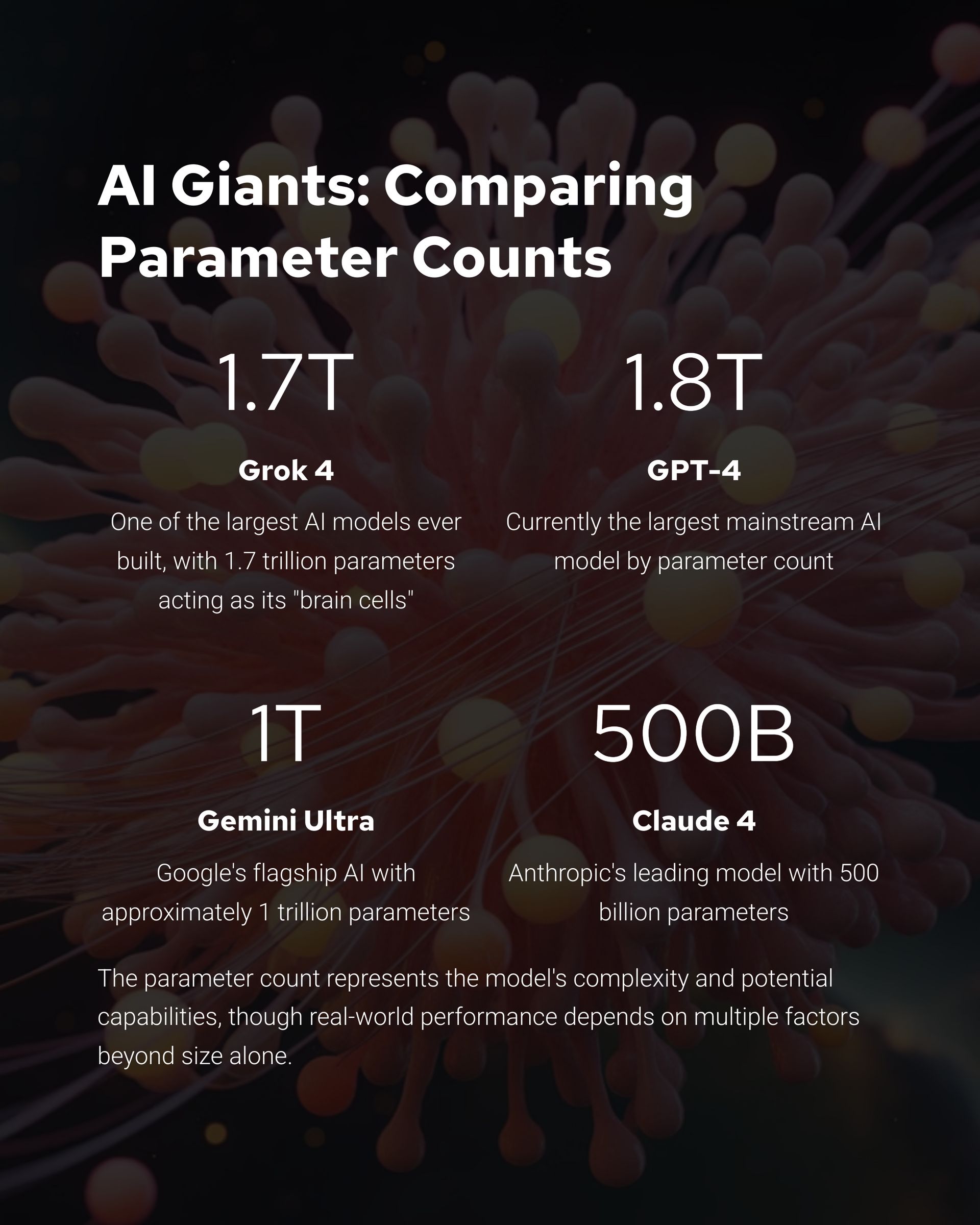

Let's start with the basics. Grok 4 is xAI's latest and greatest artificial intelligence model, and it is packing some serious computational muscle. The headline number that has everyone talking is its size: an estimated 1.7 trillion parameters.

In simple terms, parameters are the numerical weights a model adjusts during training; a loose analogy is the connections between brain cells. The more parameters a model has, the more capacity it has to learn complex patterns and details in data.

The sheer size of Grok 4 means you are definitely not running this model locally unless you happen to have a personal data center in your basement. This is a cloud-based beast, and that's what APIs are for.
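A quick back-of-the-envelope calculation shows why. The sketch below assumes the 1.7-trillion figure (an outside estimate, not an official xAI number) and 16-bit weights at 2 bytes per parameter. It counts only the memory needed to hold the weights and ignores everything else a running model needs. The 24 GB per-GPU figure is also an assumption, typical of a high-end consumer card.

```python
def weights_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Return the memory (in GB, where 1 GB = 1e9 bytes) needed just to store the weights."""
    return num_params * bytes_per_param / 1e9


# Assumption: ~1.7e12 parameters (an outside estimate) stored as 16-bit values.
grok4_gb = weights_memory_gb(1.7e12, 2)
print(f"~{grok4_gb:,.0f} GB of weights")  # ~3,400 GB, i.e. about 3.4 TB

# Assumption: a high-end consumer GPU with 24 GB of memory.
print(f"~{grok4_gb / 24:,.0f} such GPUs just to fit the weights")
```

Even before you account for the memory a model uses while generating text, the weights alone are far beyond a single machine.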

The claims from its creators are, as you might expect, bold. They've stated that Grok 4 is "better than a PhD level in every subject" and "smarter than almost all graduate students in all disciplines at the same time." While that makes for a great headline, we need to look at the actual benchmarks to understand its real capabilities.

Part 2: The Numbers Don't Lie – A Look at the Grok 4 Benchmarks

Alright, it's time for a reality check. Benchmarks are standardized tests that researchers use to measure and compare the performance of different AI models. Here's how Grok 4 performed on some of the tests that actually matter to developers and thinkers.

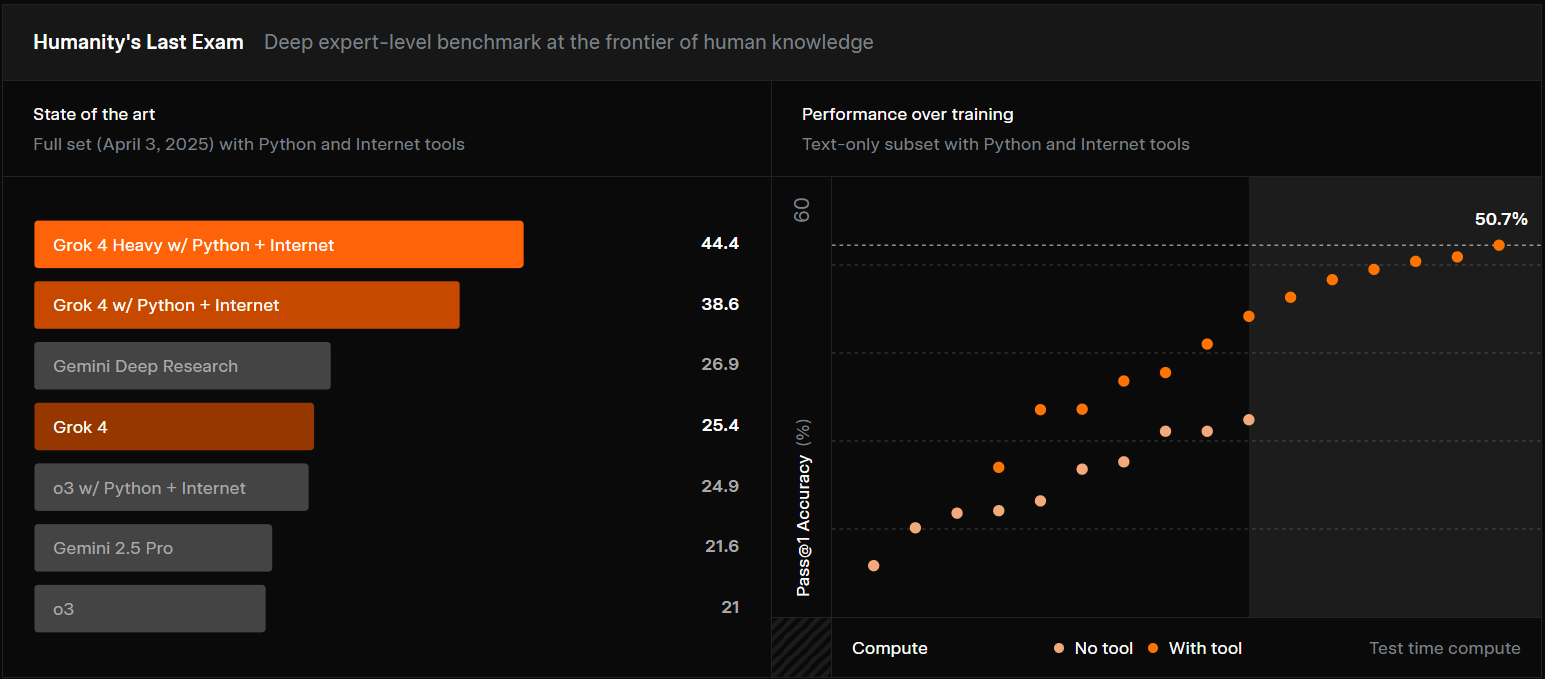

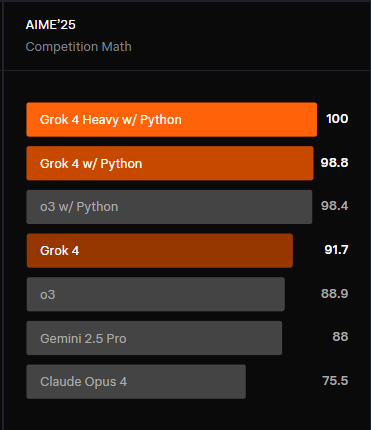

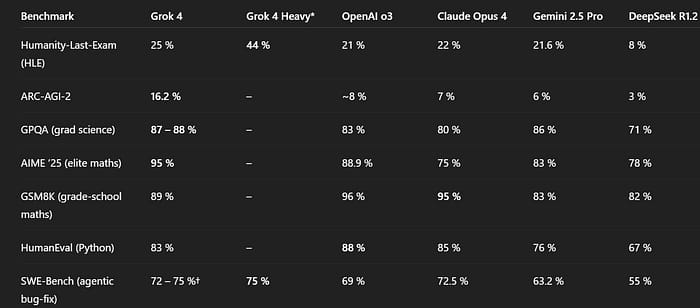

Humanity's Last Exam (HLE): This is basically the "good luck with that" test of AI benchmarks. It's a collection of questions so difficult that they make human experts sweat.

Without access to tools (just its own knowledge), Grok 4 scored a respectable 25%.

However, when it could use tools (like a search engine and a calculator) and work with other AI agents, its score jumped to an impressive 44.4%, beating most of its competition. This shows that Grok 4 is not just a library of facts; it's a powerful thinking tool that knows how to use other tools.

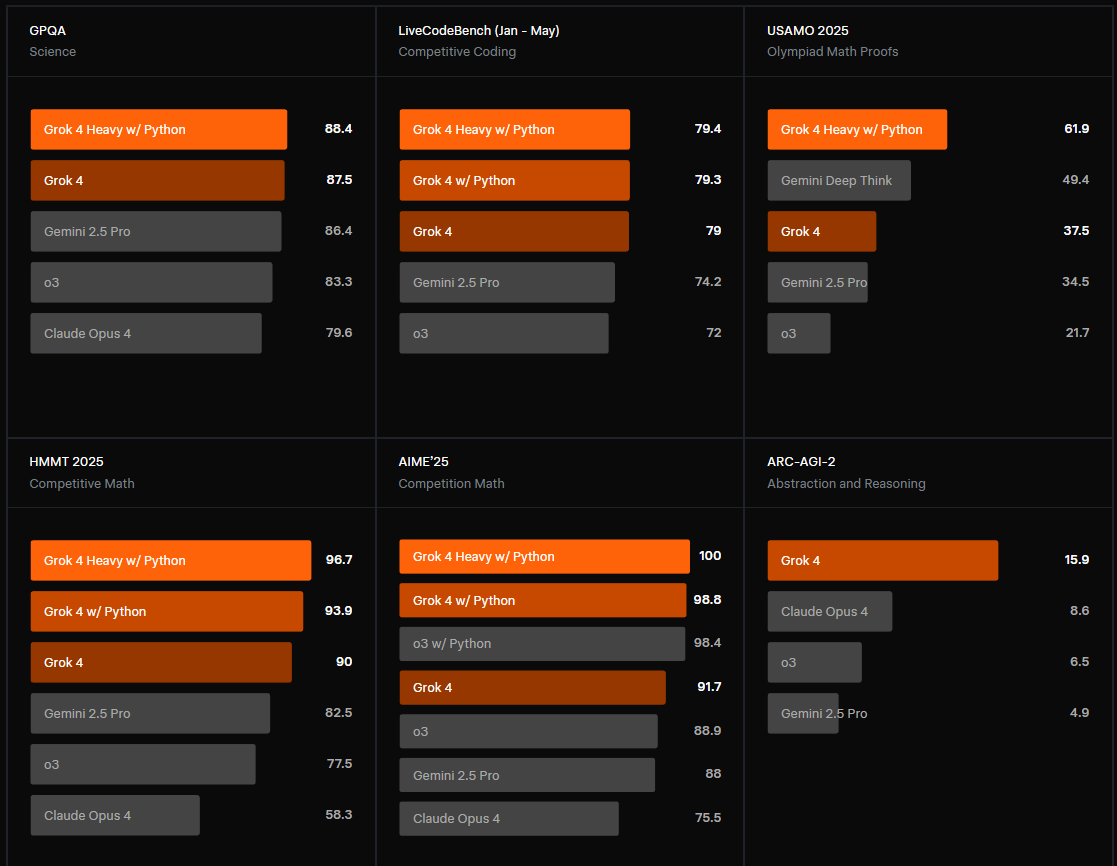

GPQA (Graduate-Level Google-Proof Q&A): A set of hard, graduate-level multiple-choice questions in biology, physics, and chemistry, designed so that the answers can't simply be looked up online.

Grok 4: 87-88%

Google Gemini: 86%

Anthropic Claude: 79%

This result is significant. It shows a strong understanding of difficult scientific ideas.

American Invitational Mathematics Examination (AIME): This is the kind of math test that makes standard calculus look like basic arithmetic. In the past, AI models were very bad at complex, multi-step math problems.

Grok 4: A stunning 95 out of 100. This is a huge deal. It shows that Grok 4 has a powerful step-by-step thinking ability that allows it to solve complex math problems very accurately.

Software Engineering Benchmark (SWE-bench): This is the one that matters most to us. This test gives the AI real-world coding challenges and bugs from actual open-source software projects.

Grok 4: A very strong 72-75%. This score places it in the top tier of AI coding assistants, suggesting that it can handle the kind of messy, real-world coding problems that developers face every single day.


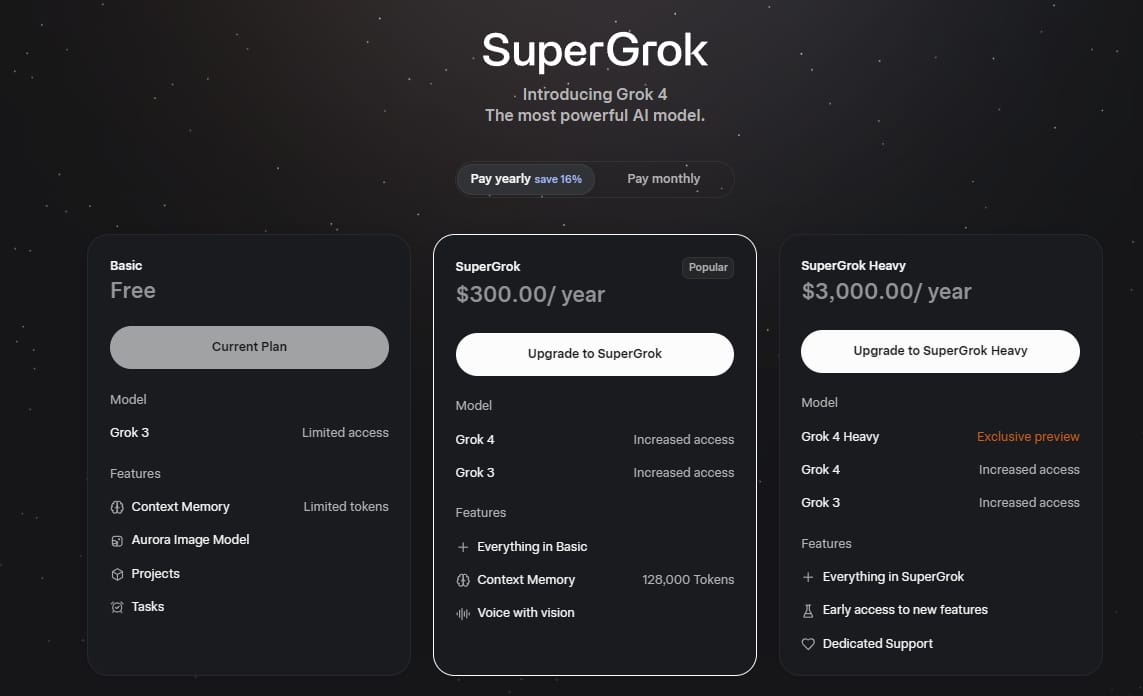

Part 3: The Economics of Power – Understanding Grok 4's Cost and Value

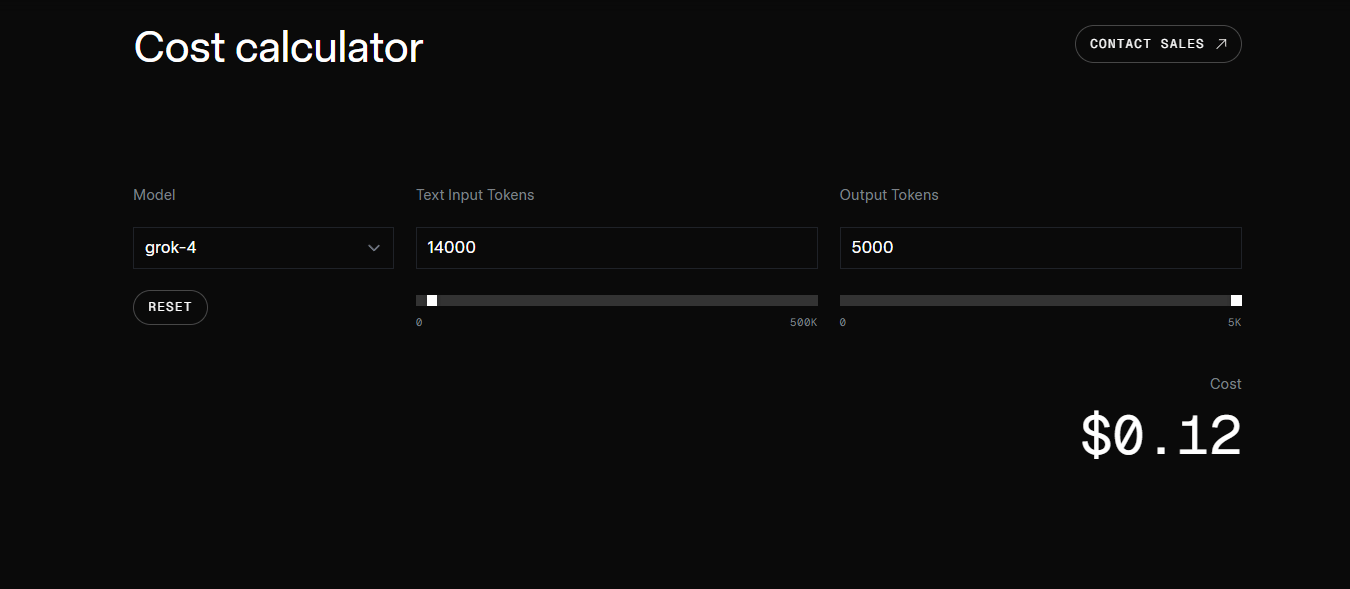

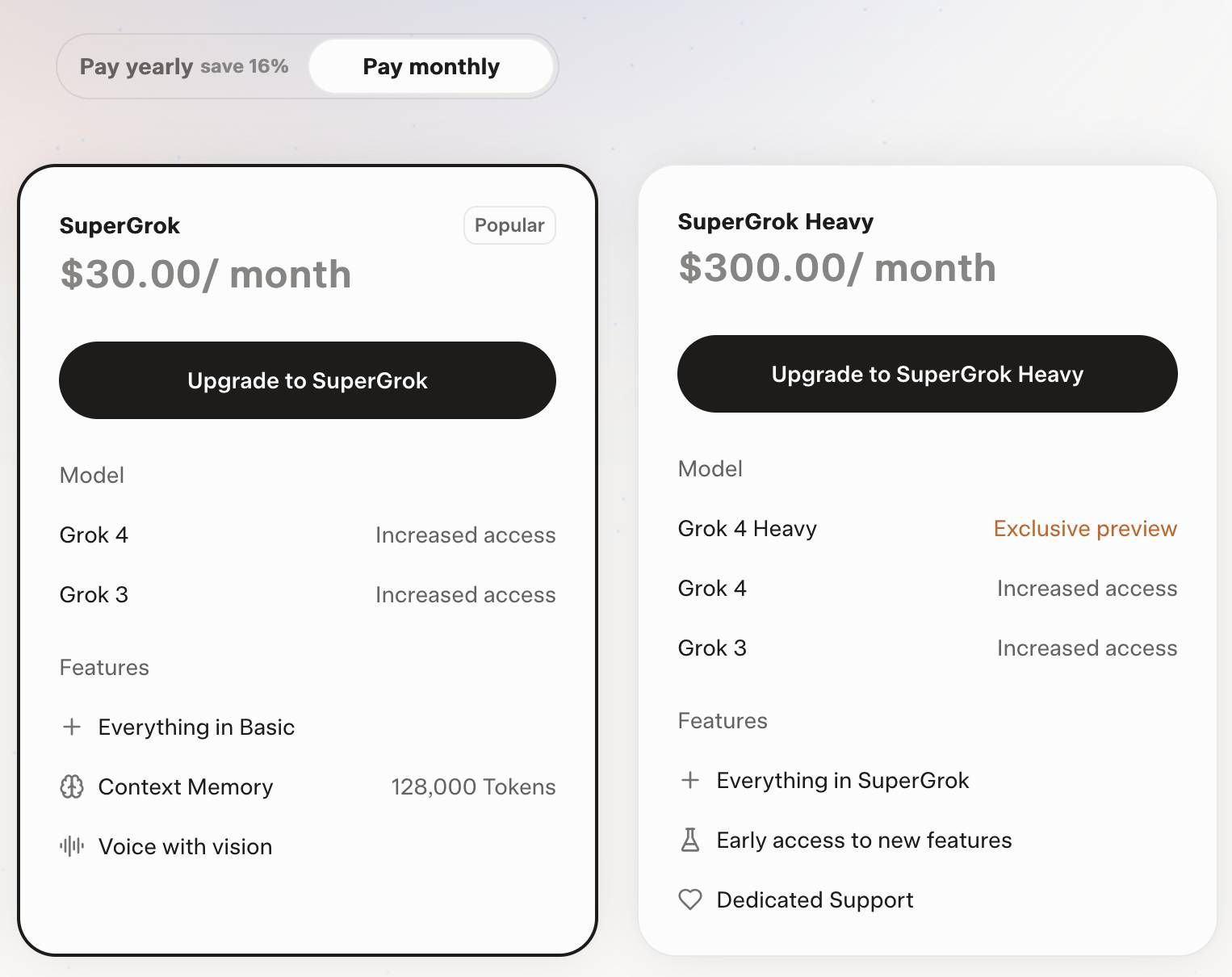

Unlike some other models that might offer generous free tiers for certain use cases, Grok 4 is positioned as a premium, commercial product. Using this level of power costs money, and understanding the cost is important before you start using it in your work.

No Free Lunch: Access to the Grok 4 model via its API is a paid service. To get an API key from xAI, you will need to create an account and provide billing information. While the cost per token might be competitive, it is not free.

The Value Proposition: The reason to use a model like Grok 4 is not that it's the cheapest, but that it's the best for certain tasks. Its great performance in complex thinking, math, and tool use means that for certain problems, it can get results that cheaper models simply can't.

Thinking about ROI: The question is not "How much does a Grok 4 API call cost?" but rather, "How much time and money does a successful Grok 4 workflow save me?" If spending just 12 cents on a workflow automates a task that would take a developer hours, the return on investment is huge. You are paying for elite-level performance to solve your most difficult problems.

This "premium power" model is a key differentiator. It positions Grok 4 as a specialized tool for high-value tasks, where its superior reasoning capabilities can justify the cost.

This isn't just a free trial; it's a strategic move to get developers building on the platform. The value being given away here is astronomical.

Part 4: Setting Up Grok 4 in n8n – The Two Paths to Power

Now for the fun part—actually using this beast in your n8n workflows. There are two main ways to connect to Grok 4, and the one you choose can have a big impact on your experience.

Path 1: The Direct xAI Connection (The Straightforward Way)

The simplest path is to connect directly to the xAI API.

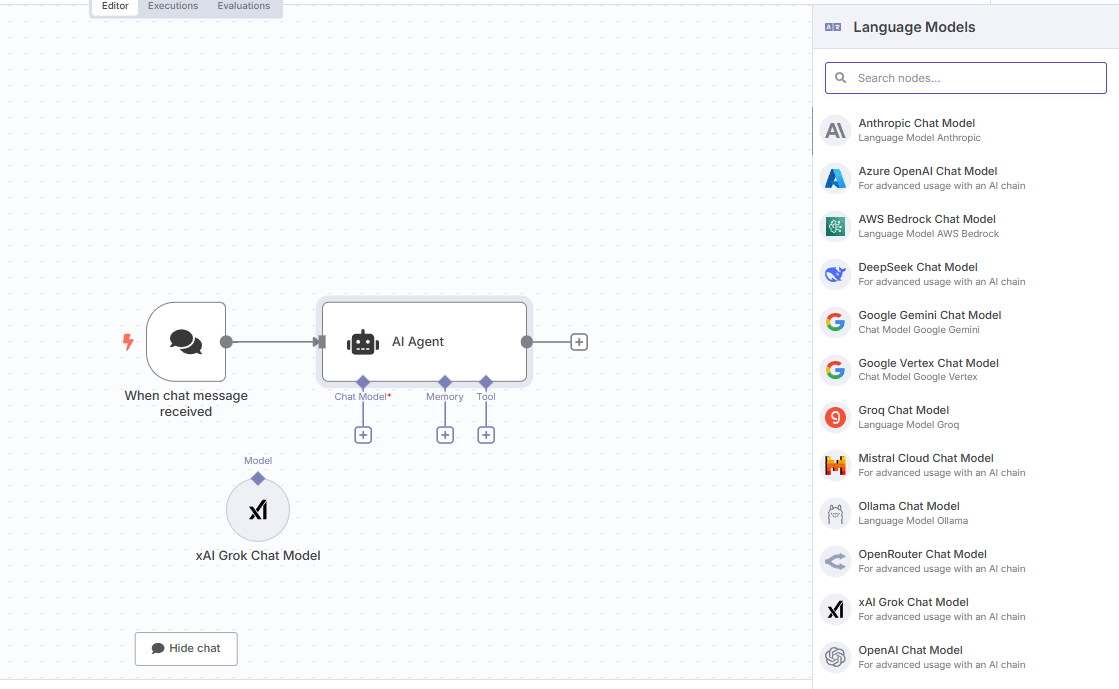

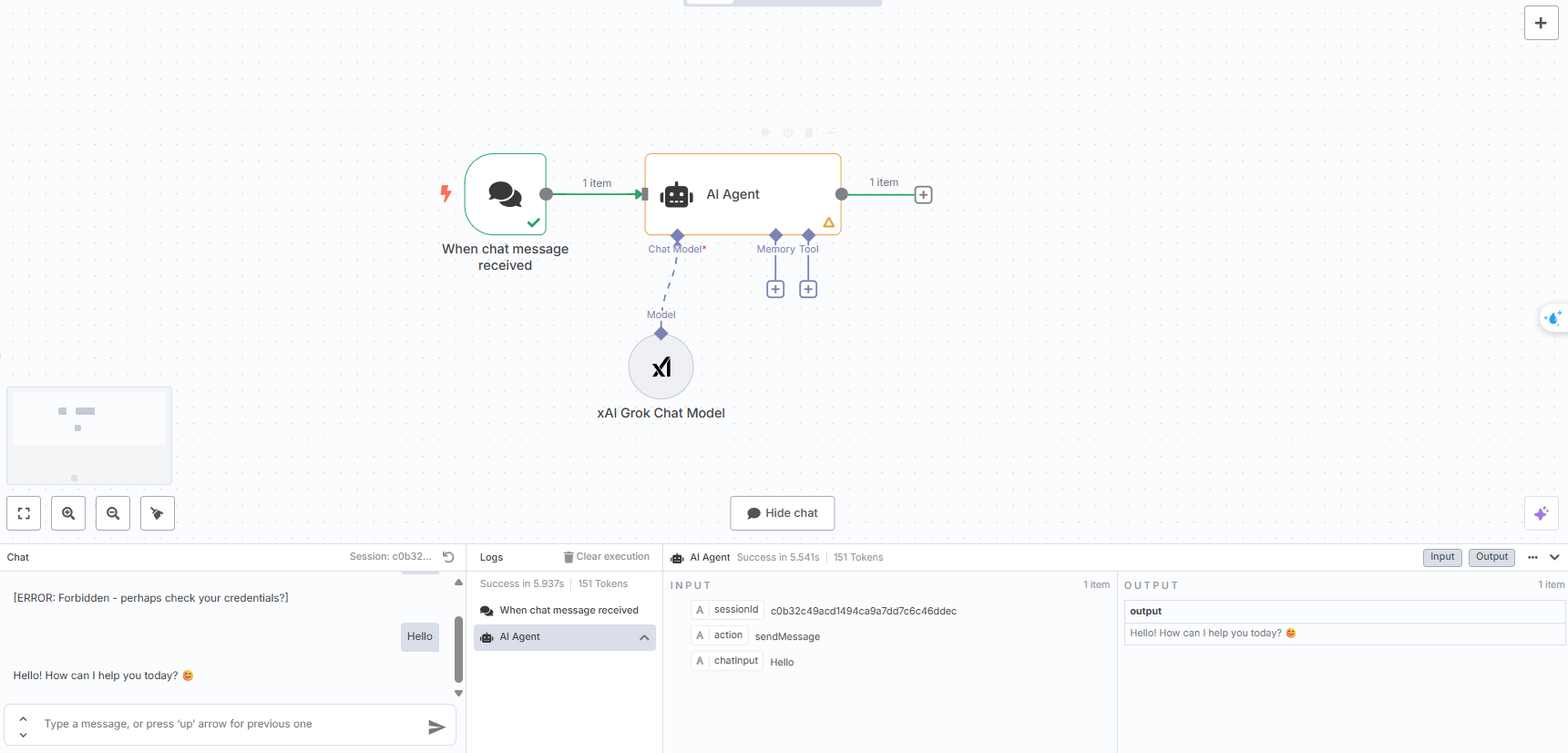

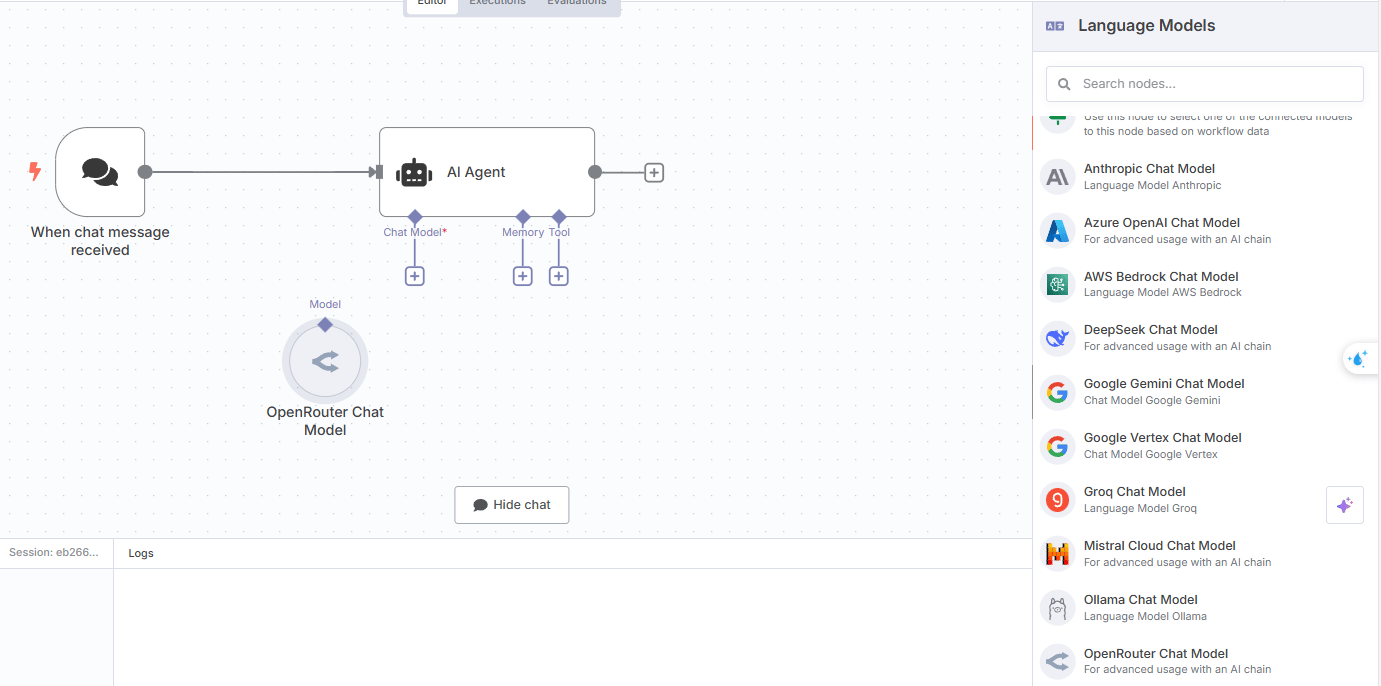

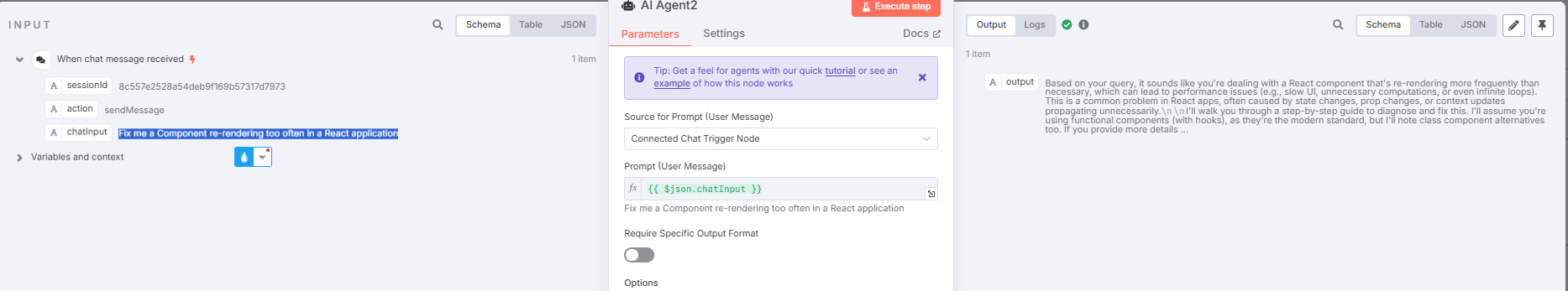

Step 1: Add the Node. Fire up n8n and create a new workflow. Add an "AI Agent" node, and within it, add a "Chat Model." Scroll down the list of providers to find and select "xAI Grok."

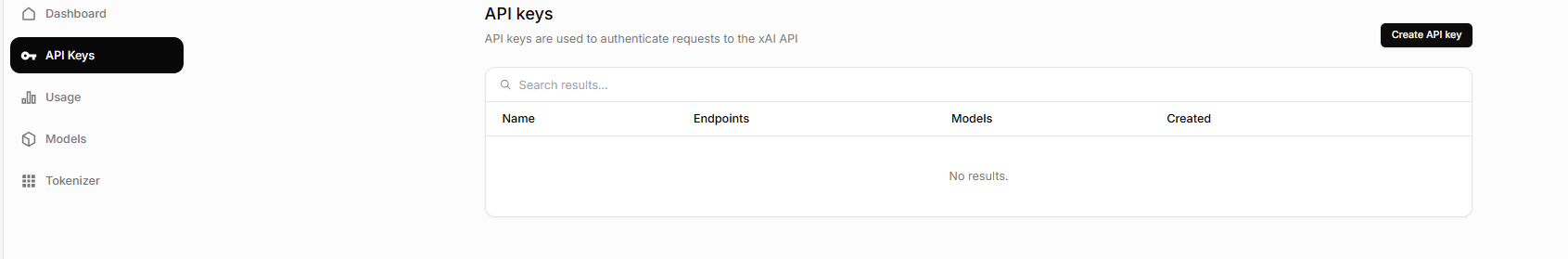

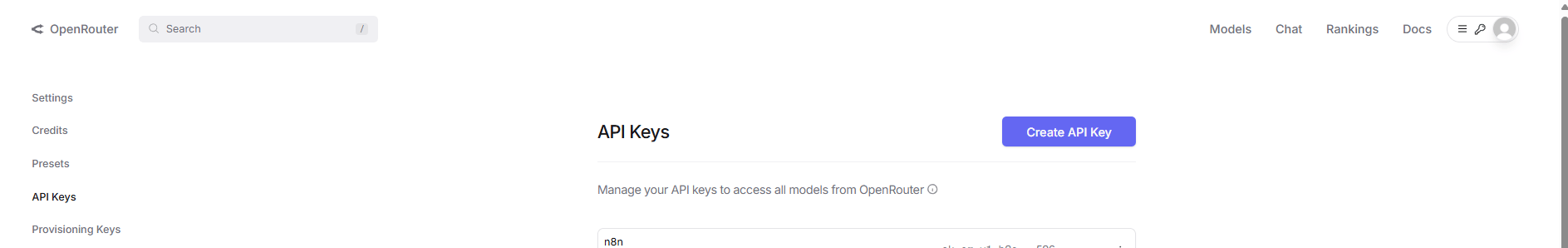

Step 2: Get Your API Key. Head over to the xAI admin console, create an account, add your billing information (the API is a paid, commercial service), and navigate to the "API Keys" section. Create a new key and copy it.

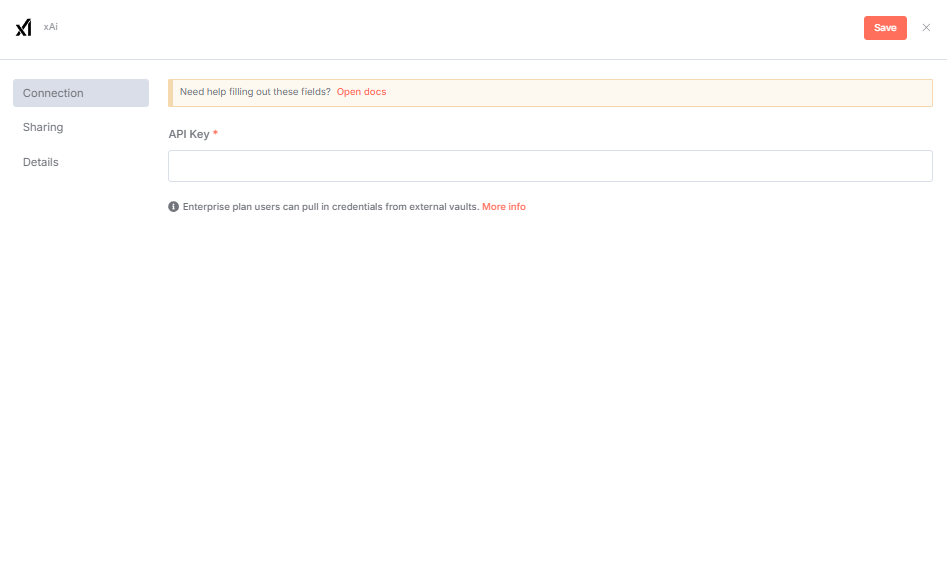

Step 3: Configure Your Credentials. Go back to n8n and create a new credential for the xAI Grok node, pasting in your shiny new API key.

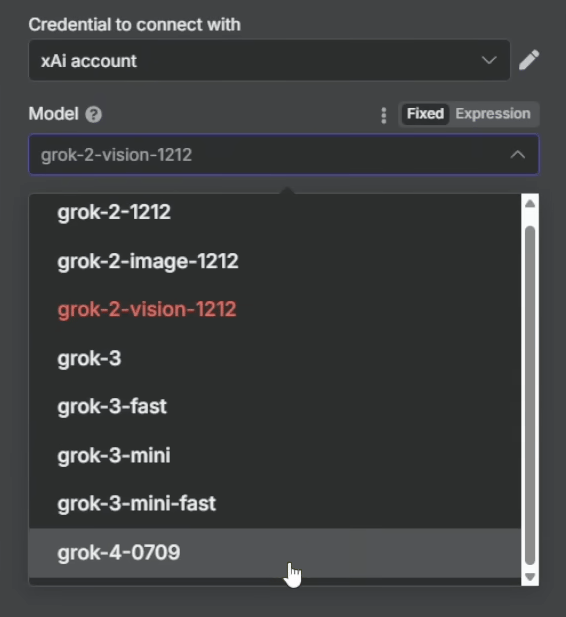

Step 4: Select Your Model. In the node's settings, you will see a drop-down list of available models. Select the latest version, which will look something like "Grok 4 0709" (the numbers represent the launch date, July 9th).

Step 5: Your First Conversation (The "Hello, World!" Test)

This is the moment of truth. After all the setup and configuration, this simple test is designed to confirm that every part of the connection is working correctly. Think of it as a digital handshake between n8n and the Grok 4 model.

The Action: In the "Message" field of your AI Agent node, type a simple, friendly message like "Hello, Grok".

The Execution: Run the node manually by clicking its "play" button. You should see the node turn green, indicating a successful execution.

The Verification: Look at the output panel of the node. If everything is connected properly, you should see a friendly, conversational response from the AI, something along the lines of:

Hello! How can I help you today? 😊

The Celebration: If you see this response, take a moment to celebrate. You have successfully established a connection and had your first conversation with one of the most powerful AI models on the planet. All the previous steps—getting the API key, setting up the credentials, and configuring the node—have paid off. You are now ready to start building. Feel free to do a little victory dance; you've earned it.
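If you want to run the same handshake outside n8n (for example, to rule out a bad key), here is a minimal Python sketch using only the standard library. It assumes xAI's OpenAI-compatible chat endpoint at https://api.x.ai/v1/chat/completions and a model ID of grok-4-0709, matching the dropdown entry from Step 4; check both against xAI's current docs before relying on them.

```python
import json
import urllib.request

# Assumption: xAI exposes an OpenAI-compatible chat-completions endpoint here.
XAI_CHAT_URL = "https://api.x.ai/v1/chat/completions"


def build_hello_request(api_key: str, model: str = "grok-4-0709") -> urllib.request.Request:
    """Build (but do not send) a minimal chat request containing "Hello, Grok"."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": "Hello, Grok"}],
    }
    return urllib.request.Request(
        XAI_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def say_hello(api_key: str) -> str:
    """Send the request and return the reply text (network call)."""
    with urllib.request.urlopen(build_hello_request(api_key), timeout=120) as resp:
        payload = json.load(resp)
    # OpenAI-style responses put the reply in choices[0].message.content.
    return payload["choices"][0]["message"]["content"]
```

To send it for real, call say_hello(os.environ["XAI_API_KEY"]), where XAI_API_KEY is a hypothetical environment variable holding your key. A friendly greeting back means the key, billing, and model ID are all working.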

Step 6: Adding Tools (This is Where It Gets Interesting)

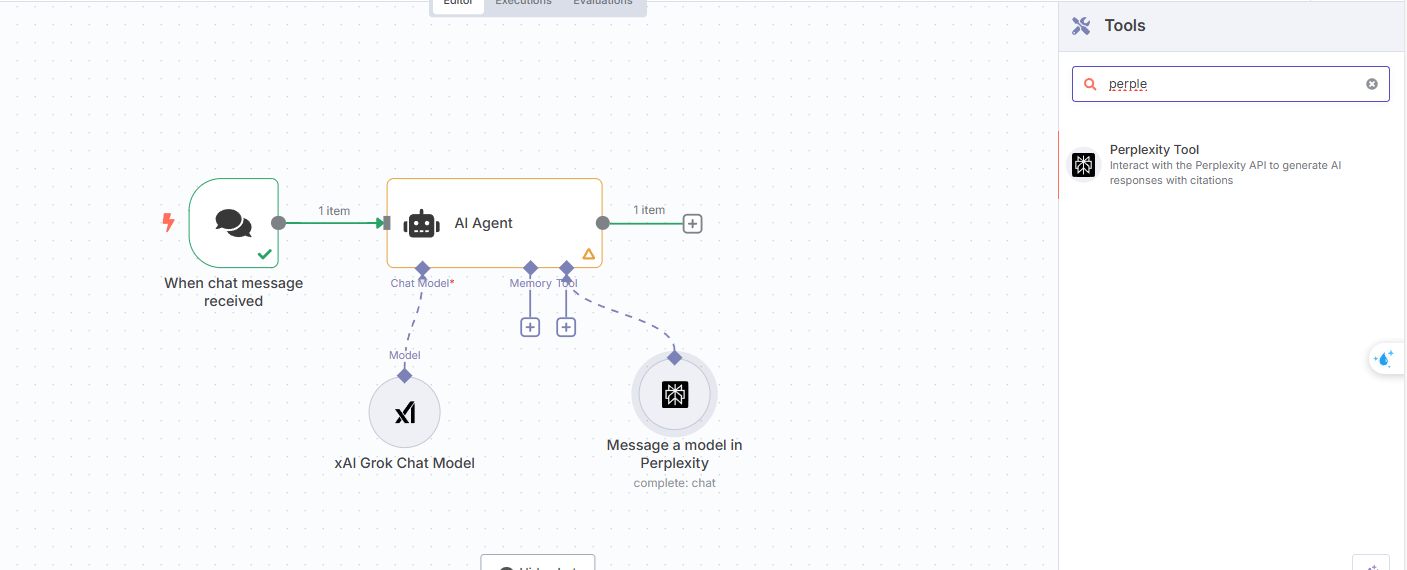

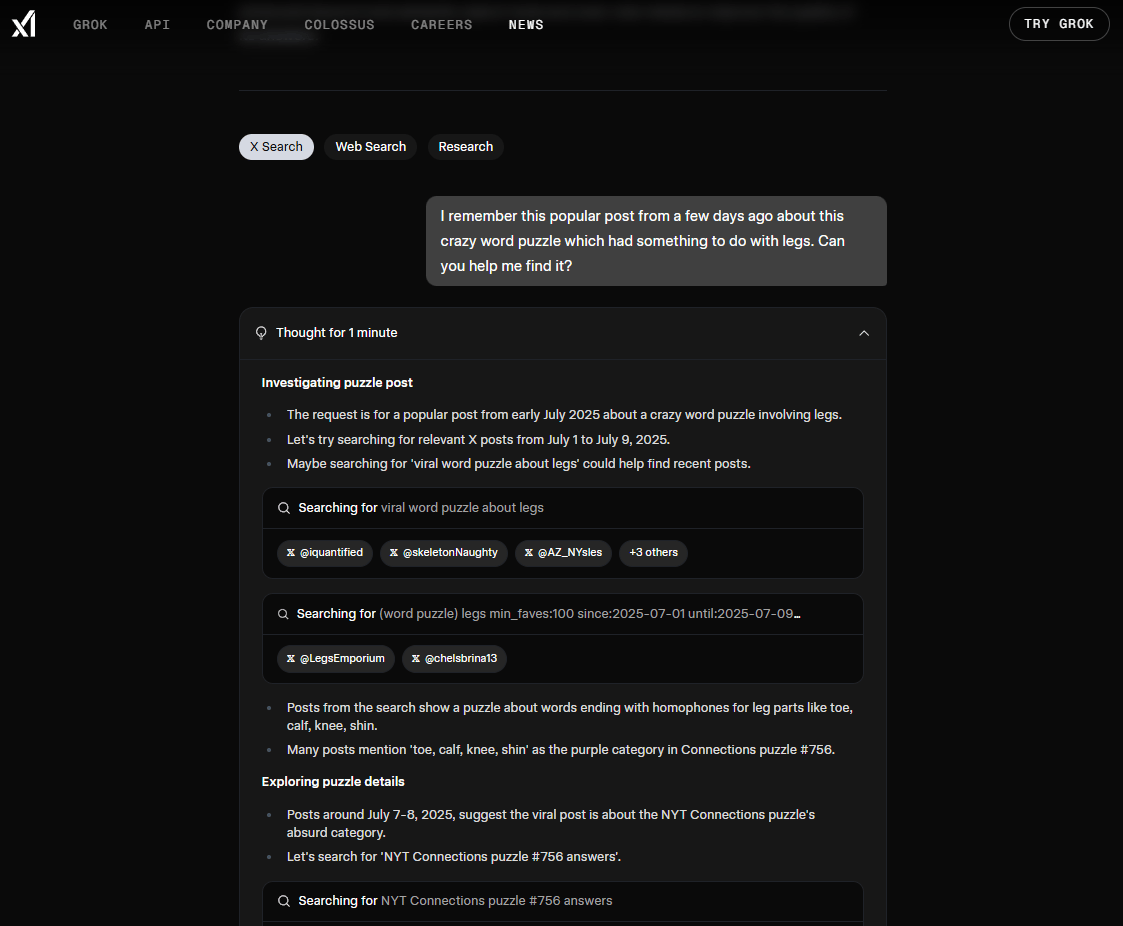

A chatbot is useful, but an AI agent is a game-changer. The real power comes from giving your AI access to tools it can use to perform actions. Let's add a Perplexity tool to give our agent the ability to do real-time web research.

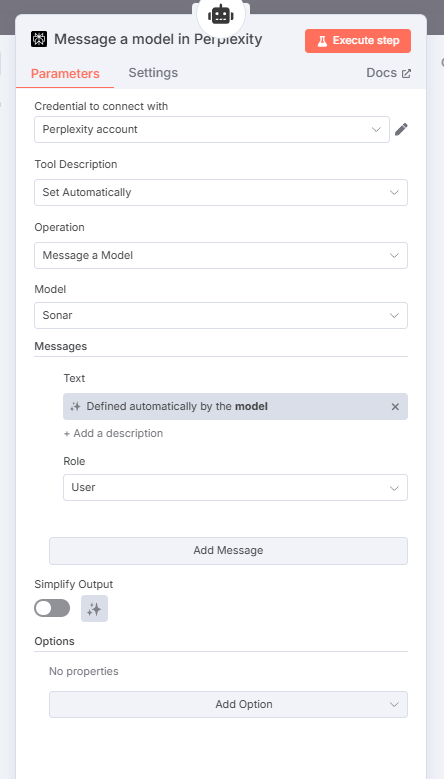

Add a Perplexity Node: In your n8n workflow, add a "Perplexity" tool.

Configure It: Set it up with your Perplexity API key and configure it to use the "sonar" model for the best research results.

Connect it to the Agent: In your "AI Agent" node, connect the Perplexity node to the "Tools" input. This tells the agent that it now has a research tool available to use.

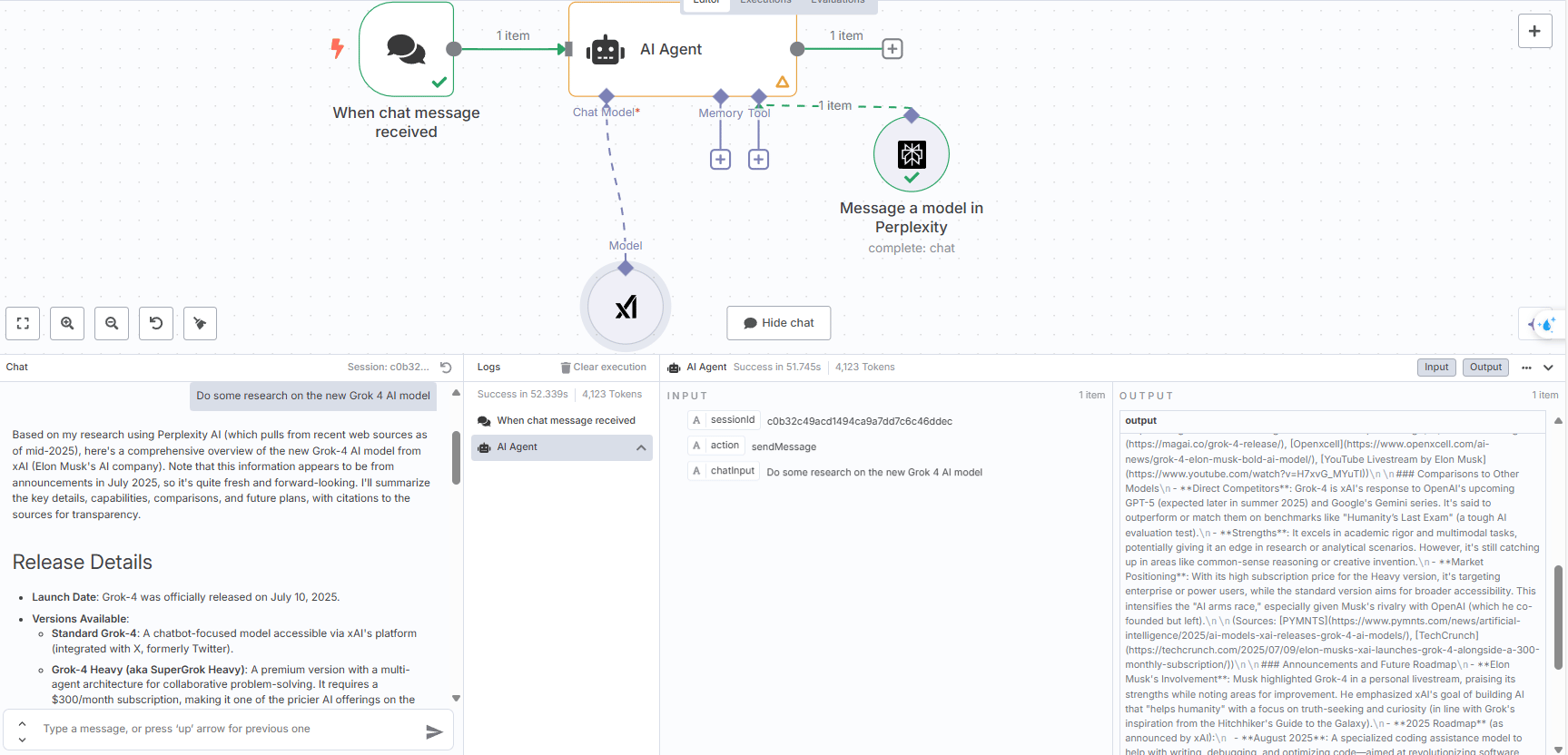

The Test: Now, try giving the agent a research task. A fun, meta-test is to ask it:

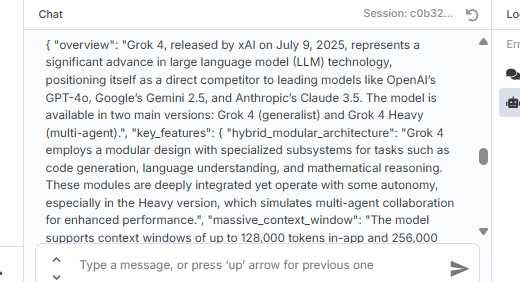

"Do some research on the new Grok 4 AI model"

The Magic: If everything is working correctly, you will see the AI agent "think" about the request, decide on its own that it needs its research tool, and call the Perplexity node. It then processes the search results and gives you a well-organized response with sections on background, key features, and comparisons with other models, plus clickable source links for verification. This ability to decide for itself when to use a tool is what makes an agent so powerful.
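Under the hood, n8n's agent (built on LangChain) hands the model a description of each connected tool, and the model decides whether to request a call. The sketch below shows roughly what such a request looks like in the OpenAI-style "tools" format, assuming an OpenAI-compatible endpoint. The tool name web_research and its schema are hypothetical; n8n builds its own equivalents from the nodes you connect.

```python
import json

# Hypothetical tool definition in the OpenAI-style "tools" format.
RESEARCH_TOOL = {
    "type": "function",
    "function": {
        "name": "web_research",
        "description": "Search the web for up-to-date information and return sourced findings.",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "What to research."},
            },
            "required": ["query"],
        },
    },
}


def build_agent_request(model: str, user_message: str) -> dict:
    """Build a chat request body that offers the model one tool it may call."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [RESEARCH_TOOL],
        # "auto" lets the model choose between calling the tool and answering directly.
        "tool_choice": "auto",
    }


body = build_agent_request("grok-4-0709", "Do some research on the new Grok 4 AI model")
print(json.dumps(body, indent=2))
```

The tool's description is what the model reads when deciding, which is why clear tool descriptions matter so much in agent workflows.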

Step 7: When Things Don't Go According to Plan (AKA The Real World)

Of course, it's not all sunshine and perfectly parsed JSON responses. As you start building more complex workflows, you will definitely run into errors. Let me share a war story from the trenches.

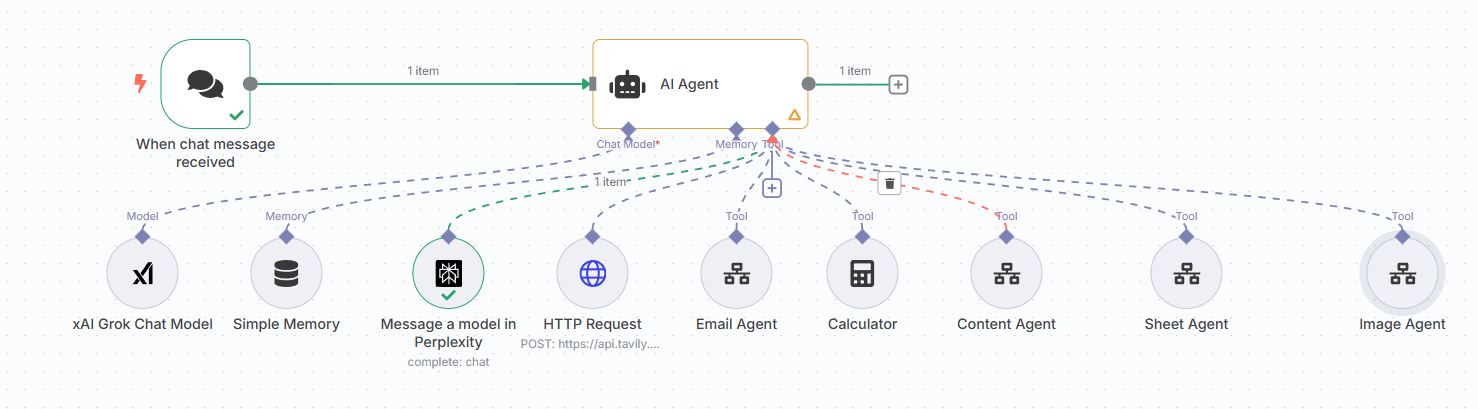

The "Ultimate Assistant" Nightmare: I tried to set up a more complex workflow that I called the "Ultimate Assistant." The goal was to have it:

Research the Grok 4 model using two different research tools (Tavily and Perplexity) to get a wider range of information.

Combine and summarize the results from both sources.

Find a specific contact in my address book.

Send an email summary of the research to that contact.

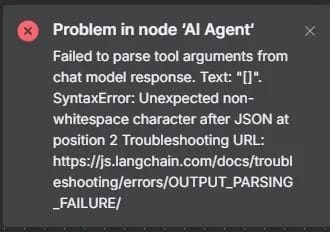

What Happened: Error city, population: me. I was immediately hit with the dreaded "Failed to parse tool arguments from chat model" error.

The Problem: In simple terms, the tool-call data Grok 4 returned was not always perfectly structured JSON. The n8n agent, which is built on the LangChain framework, needs a valid JSON object to know which tool to call and what information to pass to it. Grok's slightly non-standard output was breaking the entire workflow.
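To see why a small formatting slip is fatal, here is a hedged Python sketch of what parsing tool arguments involves. The article doesn't show Grok's raw output, so the specific deviation handled here (JSON wrapped in a Markdown code fence) is an assumption chosen for illustration, and this is not how n8n or LangChain implement their parser. Strict parsing comes first, one known quirk gets a narrow fallback, and anything else fails loudly instead of guessing.

```python
import json
import re

# Matches a ```json ... ``` (or bare ```) fence wrapped around the whole payload.
_FENCE = re.compile(r"^\s*```(?:json)?\s*(.*?)\s*```\s*$", re.DOTALL)


def parse_tool_arguments(raw: str) -> dict:
    """Parse a tool call's argument string into a dict, or raise ValueError."""
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        # Narrow fallback: strip a surrounding Markdown fence and retry once.
        match = _FENCE.match(raw)
        if not match:
            raise ValueError(f"Failed to parse tool arguments: {raw!r}")
        try:
            parsed = json.loads(match.group(1))
        except json.JSONDecodeError as exc:
            raise ValueError(f"Failed to parse tool arguments: {raw!r}") from exc
    if not isinstance(parsed, dict):
        raise ValueError("Tool arguments must be a JSON object")
    return parsed
```

For example, '{"query": "Grok 4"}' and the same JSON inside a ```json fence both parse to {"query": "Grok 4"}, while "query: Grok 4" raises ValueError, which is the equivalent of the error n8n reported.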

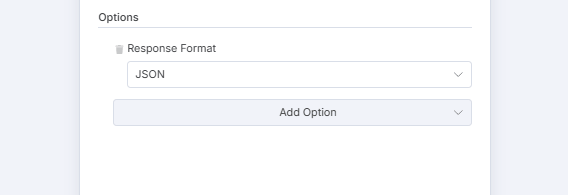

The JSON Response Format Hack: My first attempt at a fix was to try and force the model to behave.

In the Grok 4 chat model settings, I added a "Response Format" option and set it to JSON mode.

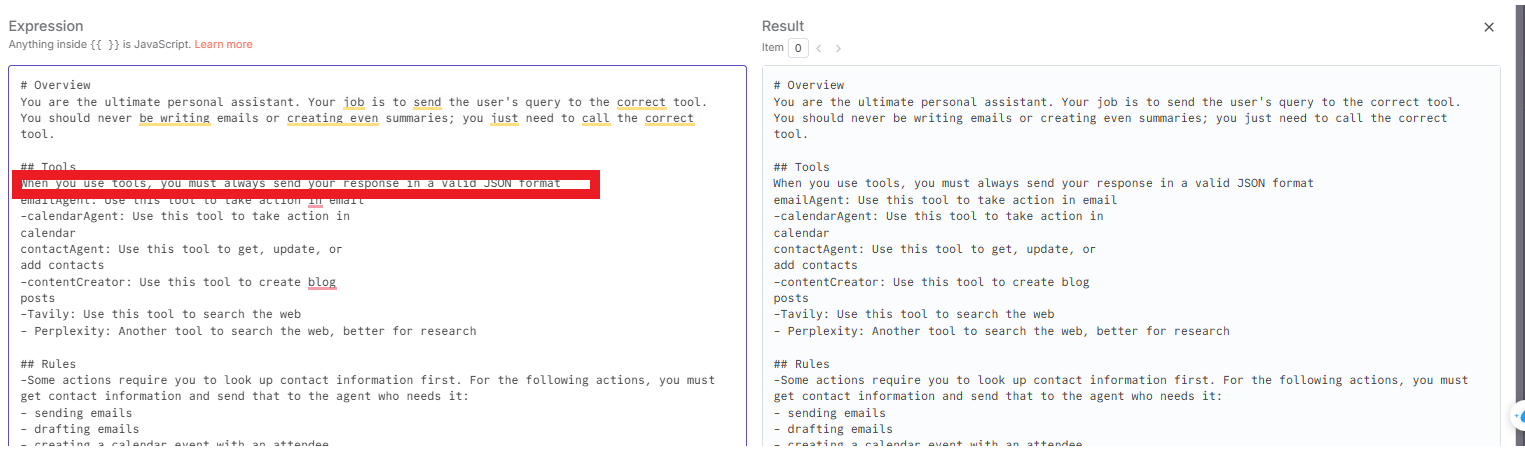

I updated the system prompt to explicitly tell the AI, "When you use tools, you must always send your response in a valid JSON format."

The Result: Progress, but not victory. The parsing errors stopped, which was great. However, now the tools weren't being called at all. The AI would respond, but it wouldn't use the research tools. I had solved the error but broken the functionality.
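One plausible explanation, offered as an assumption since the article doesn't diagnose it: in OpenAI-style APIs, JSON mode tells the model to put a JSON object in the message text, while real tool calls travel in a separate, structured tool_calls field. An agent loop only runs tools when that field is present, so a reply that merely describes a tool call in JSON is treated as the final answer. The routing function below is a simplified model of that behavior, not n8n's or LangChain's actual code.

```python
import json


def route_assistant_message(message: dict) -> tuple[str, object]:
    """Decide what an agent loop does with one assistant message.

    Tools run only when the message carries structured tool_calls; any text
    in content (even valid JSON) is treated as the final answer.
    """
    tool_calls = message.get("tool_calls") or []
    if tool_calls:
        return ("call_tools", [
            (call["function"]["name"], json.loads(call["function"]["arguments"]))
            for call in tool_calls
        ])
    return ("final_answer", message.get("content", ""))


# A normal tool-calling reply: the agent would run the research tool.
with_tool = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "web_research", "arguments": '{"query": "Grok 4"}'},
    }],
}

# A JSON-mode reply: valid JSON, but only as plain text, so no tool runs.
json_mode_reply = {"role": "assistant", "content": '{"tool": "web_research", "query": "Grok 4"}'}

print(route_assistant_message(with_tool))
print(route_assistant_message(json_mode_reply))
```

If this model is right, JSON mode trades one failure (unparseable arguments) for another (no tool calls at all), which matches what happened in the test.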

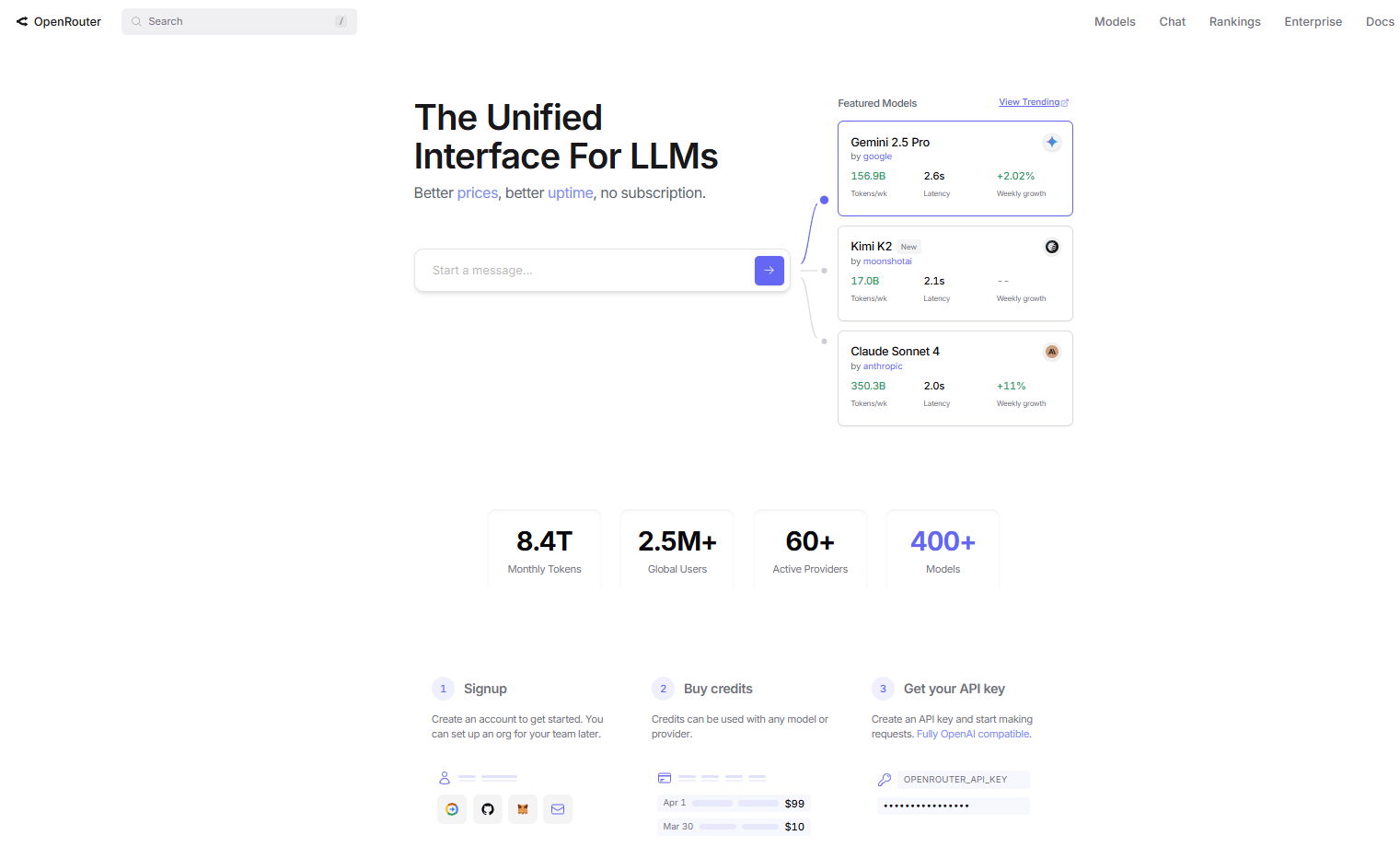

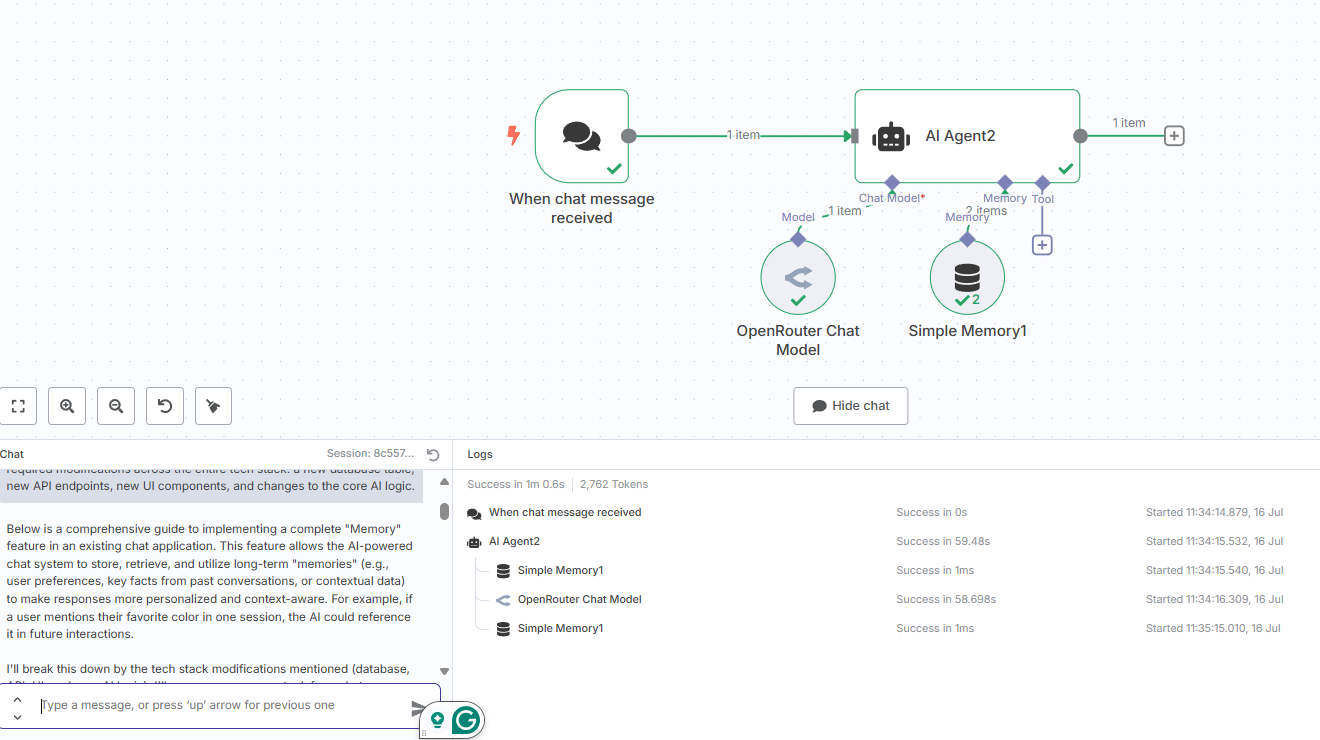

Path 2: The OpenRouter Solution (The Smarter, More Reliable Way)

Sometimes, the best solution is the simplest one. Instead of wrestling with the quirks of a direct API integration, I switched to using a service like OpenRouter as a middleman. Here's why this is actually a brilliant approach for any serious automation builder.

The Benefits of the OpenRouter Approach:

Single Billing Source: You can manage your credits and see detailed analytics for dozens of different AI models, all in one place.

Unified API: You use the same, consistent API format to call any model, whether it's from OpenAI, Anthropic, Google, or xAI. This makes switching between models incredibly easy.

Better Reliability: OpenRouter often provides a more stable and reliable connection to the underlying models, handling many of the small formatting inconsistencies for you.

Setting Up OpenRouter with Grok 4:

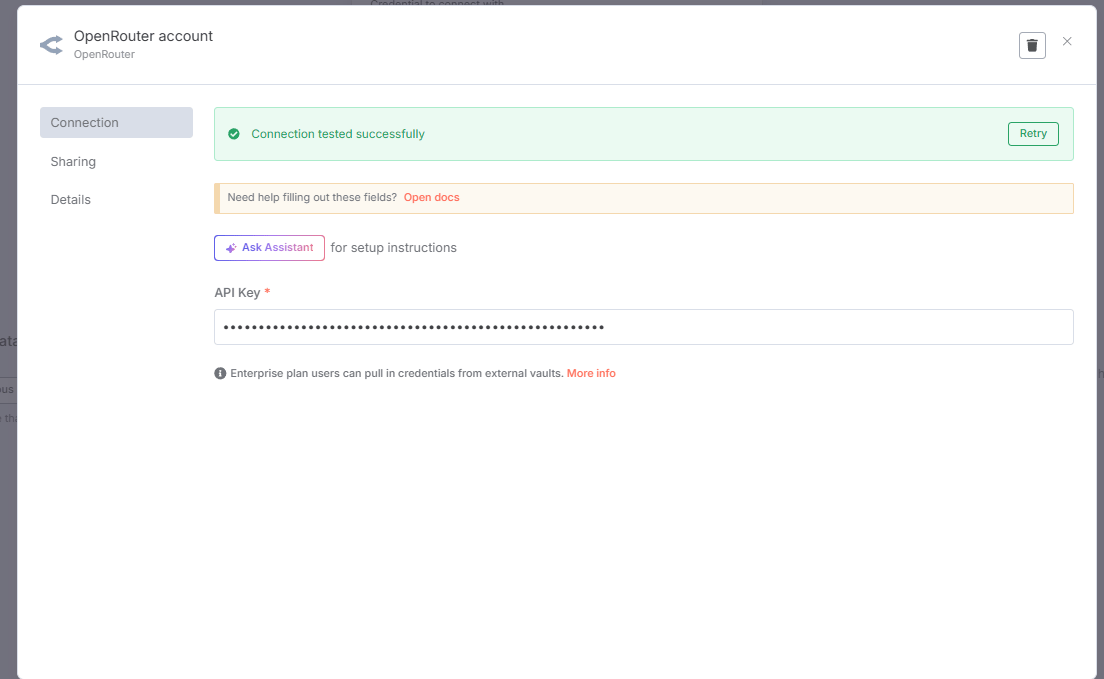

Sign up for a free account at OpenRouter.ai.

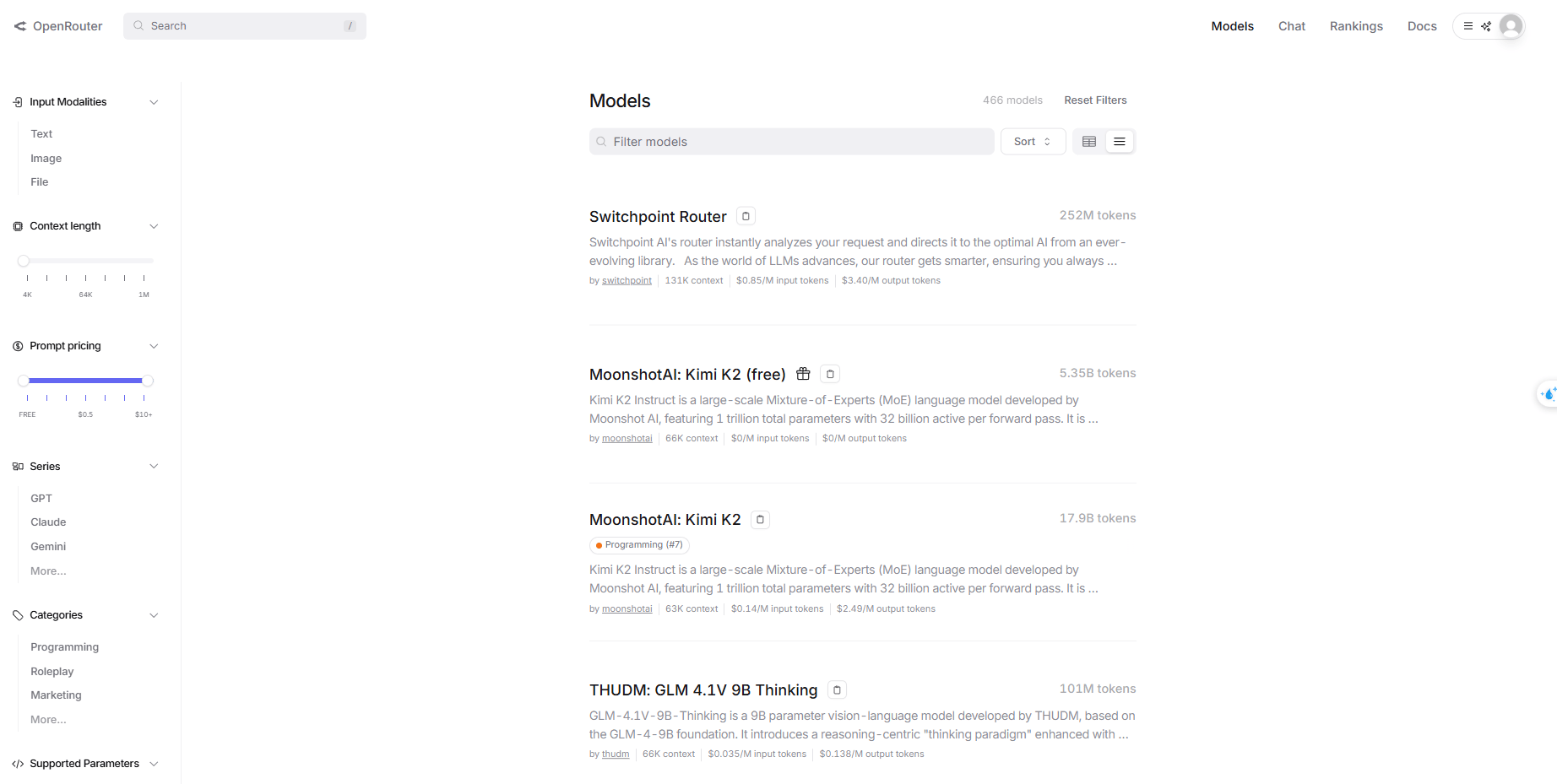

Go to the "Models" page to browse all the available models and see their pricing and context window information.

Generate a new API key in your OpenRouter dashboard.

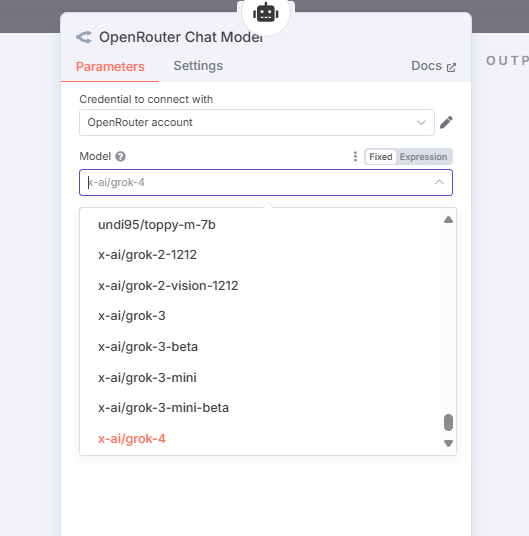

In your n8n workflow, instead of using the "xAI Grok" chat model node, use the "OpenRouter Chat Model" node.

In its settings, you will add a new credential for OpenRouter. You will need to provide your OpenRouter API key.

Now, in the "Model" field, simply type in the name of the model you want to use, such as x-ai/grok-4.
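Outside n8n, the same switch is just a different URL and key. This minimal sketch assumes OpenRouter's OpenAI-compatible endpoint at https://openrouter.ai/api/v1/chat/completions. The second model slug, openai/gpt-4o, is only an example of another entry from the Models page, and YOUR_OPENROUTER_KEY is a placeholder.

```python
import json
import urllib.request

# OpenRouter's OpenAI-compatible chat-completions endpoint.
OPENROUTER_CHAT_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_openrouter_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat request; only `model` changes between providers."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        OPENROUTER_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


# Same key and request shape; swapping models is a one-string change.
grok_req = build_openrouter_request("YOUR_OPENROUTER_KEY", "x-ai/grok-4", "Hello, Grok")
gpt_req = build_openrouter_request("YOUR_OPENROUTER_KEY", "openai/gpt-4o", "Hello, GPT")
```

This is the unified-API benefit in practice: the request shape stays identical, and only the model string changes.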

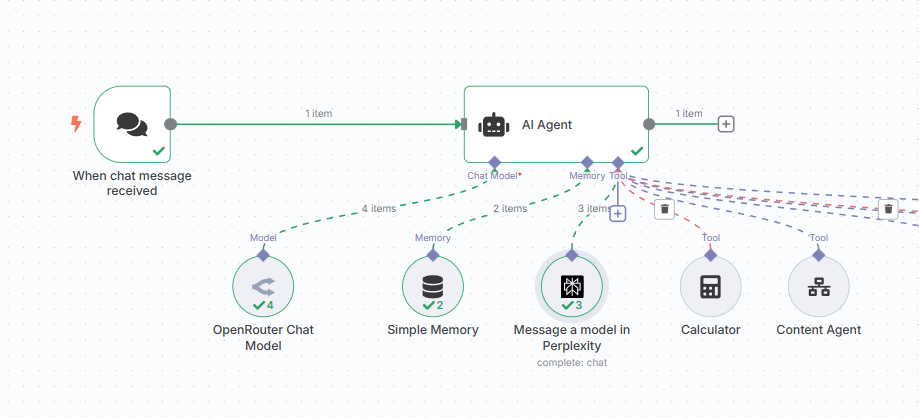

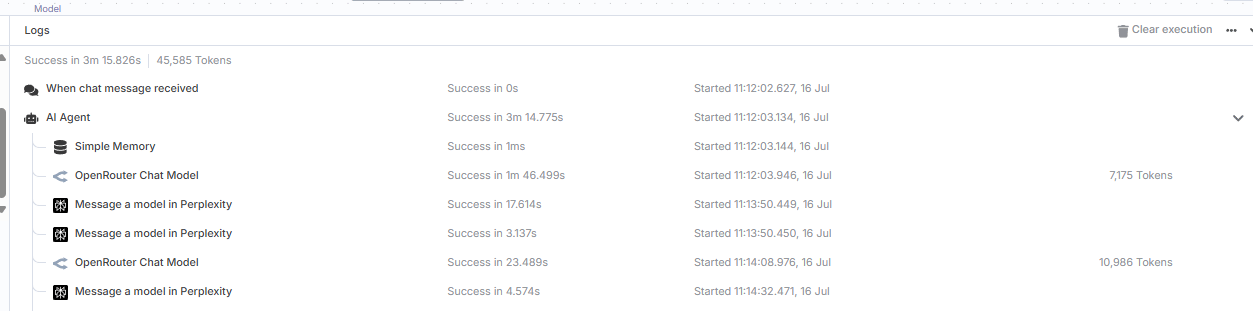

The Success Story: A Detailed Breakdown

With the more reliable OpenRouter handling the connection, I ran the same complex "Ultimate Assistant" workflow. This time, it worked perfectly. Here’s a step-by-step look at the agent's autonomous process:

Initial Prompt: I gave the agent the high-level goal:

"Do research using Perplexity and Tavily on the new Grok 4 AI model, then send the results as an email to AI Fire Research."

Agent's Plan: The Grok 4 agent analyzed the prompt and created its own internal plan. It recognized it needed to perform four distinct tasks: research with Tool 1 (Perplexity), research with Tool 2 (Tavily), find a contact, and send an email.

Executing Tool 1: The agent first called the Perplexity tool to conduct a search. It processed the results and stored them in its short-term memory.

Executing Tool 2: Next, it called the Tavily search tool to get a different set of sources and information. It also stored these results.

Intelligent Synthesis: This is where Grok 4's reasoning shone. It analyzed the research from both sources, identified overlapping information, noted any conflicting details, and synthesized everything into a single, cohesive summary.

Contact Lookup: The agent then used a "Sheet Agent" tool (which I had connected to my Google Contacts) to find the email address for "AI Fire Research."

Email Composition: Finally, it used the "Email Agent" tool. It composed a beautifully crafted email, complete with a clear subject line, a personalized greeting, the synthesized research summary, and properly formatted source citations from both Perplexity and Tavily.

Final Output: The result was a perfectly executed, multi-step task that involved intelligent reasoning about conflicting information and the seamless orchestration of four different tools. The final email draft that appeared in my Gmail was comprehensive, well-written, and ready to send.
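The plumbing behind that run can be sketched in a few lines of Python. This is a deterministic stand-in, not the agent itself: in the real workflow Grok 4 decides the order and writes the synthesis, while n8n's tools do the searching, lookups, and emailing. The stub tools, the Finding type, and de-duplicating sources by URL are all simplifying assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Finding:
    source_url: str
    summary: str
    provider: str  # which research tool produced this finding


def run_research_email(
    topic: str,
    research_tools: dict[str, Callable[[str], list[Finding]]],
    contacts: dict[str, str],
    contact_name: str,
) -> str:
    """Mimic the agent's plan: research with each tool, merge, look up a contact, draft an email."""
    merged: list[Finding] = []
    seen_urls: set[str] = set()
    for tool in research_tools.values():  # steps 1-2: one call per research tool
        for finding in tool(topic):
            if finding.source_url not in seen_urls:  # skip sources already returned
                seen_urls.add(finding.source_url)
                merged.append(finding)
    address = contacts[contact_name]  # step 3: contact lookup (KeyError if unknown)
    lines = [f"To: {address}", f"Subject: Research summary: {topic}", ""]
    for i, f in enumerate(merged, start=1):  # step 4: body with per-source citations
        lines.append(f"{i}. {f.summary} (via {f.provider}) {f.source_url}")
    return "\n".join(lines)


# Stub research tools standing in for the real Perplexity and Tavily calls.
def fake_perplexity(query: str) -> list[Finding]:
    return [Finding("https://example.com/a", f"{query}: finding A", "Perplexity")]


def fake_tavily(query: str) -> list[Finding]:
    return [
        Finding("https://example.com/a", f"{query}: finding A again", "Tavily"),
        Finding("https://example.com/b", f"{query}: finding B", "Tavily"),
    ]


email = run_research_email(
    "Grok 4",
    {"Perplexity": fake_perplexity, "Tavily": fake_tavily},
    {"AI Fire Research": "research@example.com"},
    "AI Fire Research",
)
print(email)
```

Dry-running the data flow with stubs like this is a cheap way to check that each step hands the next one what it needs before you spend tokens on the real model.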


Part 5: Speed, Cost, and Reality Checks

Let's talk numbers, because in the real world of building and running automations, they matter a great deal.

Performance Variability (The Speed Test): When I ran the complex "Ultimate Assistant" workflow, the first execution took 1 minute and 40 seconds. This was impressive. However, when I ran the exact same workflow a second time, it took over 3 minutes.

The Lesson: Grok 4 is a new and very popular model, so its servers are likely under heavy load. The performance you experience can vary significantly depending on the time of day and how many other people are using the service. Build your workflows on the assumption that response times will not always be consistent.
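If you call the model from your own code rather than through n8n, one common way to live with that variability is to time every call and retry failures with exponential backoff. A minimal sketch, assuming a zero-argument callable that performs the API request; the attempt count and delays are arbitrary starting points, and in practice you would catch only timeout and server errors rather than every exception.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    fn: Callable[[], T],
    max_attempts: int = 3,
    base_delay_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[T, float]:
    """Call fn, retrying on exceptions with exponential backoff plus jitter.

    Returns (result, elapsed_seconds) so slow runs can be logged and spotted.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    start = time.monotonic()
    for attempt in range(1, max_attempts + 1):
        try:
            return fn(), time.monotonic() - start
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Wait 2s, 4s, 8s, ... plus up to 1s of random jitter.
            sleep(base_delay_s * 2 ** (attempt - 1) + random.uniform(0, 1))
    raise AssertionError("unreachable")
```

The sleep parameter is injectable so the backoff logic can be tested without actually waiting; logging the returned elapsed time over many runs shows you how much the service's speed really varies.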

Cost Analysis (The Price of Power): That same complex research and email workflow cost approximately 12 cents to run through OpenRouter. That might not sound like much, but it's important to put it in context.

Why the Cost Adds Up: In that single workflow, we are passing a lot of text (tokens) back and forth. The initial prompt, the results from two different research tools, and the final email composition all contribute to the token count. Grok 4, being a top-tier model, is not the cheapest option available on a per-token basis.
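A rough cost model makes the point concrete. In a typical agent loop, each model call re-sends the conversation so far, including earlier tool results, so input tokens pile up faster than the raw text suggests. The token counts and per-million-token prices below are hypothetical placeholders, not Grok 4's actual rates or the token usage of the workflow above; check the OpenRouter Models page for live pricing.

```python
def estimate_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_price_per_m: float,
    output_price_per_m: float,
) -> float:
    """Estimate one run's cost (USD) from token counts and per-million-token prices."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def agent_loop_input_tokens(new_tokens_per_step: list[int]) -> int:
    """Total input tokens billed when every model call re-sends the whole conversation.

    new_tokens_per_step[i] is how many tokens step i adds to the conversation
    (the user prompt, a tool's search results, and so on).
    """
    total = 0
    context = 0
    for added in new_tokens_per_step:
        context += added  # the conversation grows...
        total += context  # ...and the full conversation is sent again
    return total


# Hypothetical numbers for illustration only: a prompt, two research results,
# and a contact lookup. Prices are placeholders, not real rates.
billed_input = agent_loop_input_tokens([1_000, 5_000, 5_000, 500])
cost = estimate_cost_usd(billed_input, 2_000, input_price_per_m=2.0, output_price_per_m=10.0)
print(billed_input, f"${cost:.3f}")
```

With these made-up numbers, the prompt and tool results add 11,500 tokens of text, but the loop bills 29,500 input tokens because earlier content is sent again at every step.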

The Optimization Strategy: If cost is a major concern for your application, you can adopt a more sophisticated, multi-model approach.

Use cheaper, faster models (like a smaller version of Claude or Gemini) for the initial, high-volume tasks like processing research results.

Have these cheaper models create a concise summary of their findings.

Then, send only this condensed summary to the more expensive and powerful Grok 4 model for the final, high-level analysis and decision-making. This allows you to leverage Grok 4's exceptional reasoning capabilities where they add the most value, without paying for it to perform simple, repetitive tasks.
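Here is that strategy as a minimal Python sketch. A "model" is any function from prompt text to reply text, so the pipeline can be dry-run with stubs and later wired to real API calls. The prompts and stub functions are illustrative assumptions, not a tuned setup.

```python
from typing import Callable

# Any function from prompt text to reply text; real API calls can be swapped in later.
Model = Callable[[str], str]


def cascade(documents: list[str], cheap_model: Model, strong_model: Model, task: str) -> str:
    """Summarize each document with the cheap model, then reason once with the strong one."""
    summaries = [cheap_model(f"Summarize concisely:\n\n{doc}") for doc in documents]
    digest = "\n".join(f"- {s}" for s in summaries)
    # Only the condensed digest reaches the expensive model.
    return strong_model(f"{task}\n\nCondensed findings:\n{digest}")


# Stubs for a dry run: in a real workflow, cheap_model would call a smaller,
# cheaper model and strong_model would call Grok 4.
def stub_cheap(prompt: str) -> str:
    return prompt.splitlines()[-1][:40]  # pretend summary: first 40 characters


def stub_strong(prompt: str) -> str:
    return f"Analysis based on {prompt.count('- ')} condensed findings"


docs = ["Long research result one ... " * 50, "Long research result two ... " * 50]
result = cascade(docs, stub_cheap, stub_strong, "Give a final analysis")
print(result)
```

The design choice is that the expensive model sees one short digest instead of every raw document, so its input size scales with the number of summaries rather than the size of the research.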

Part 6: Code Snippets and JSON Workflows

To help you get started even faster, here is the basic n8n JSON structure for integrating Grok 4. You can copy and paste this code directly into your n8n canvas to create the nodes.

Basic n8n Workflow Structure for Grok 4 Integration:

```json
{
  "nodes": [
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.chatTrigger",
      "typeVersion": 1.1,
      "position": [360, -300],
      "id": "48ee2b2e-b037-47b9-9fec-084d5ad3566a",
      "name": "When chat message received",
      "webhookId": "c9183bc4-a0e8-47b3-8ef6-dbb1e7826f30"
    },
    {
      "parameters": {
        "options": {
          "responseFormat": "json_object"
        }
      },
      "type": "@n8n/n8n-nodes-langchain.lmChatXAiGrok",
      "typeVersion": 1,
      "position": [560, -120],
      "id": "a9506af8-c66c-4cef-b21d-c515a1d2e86a",
      "name": "xAI Grok Chat Model",
      "credentials": {
        "xAiApi": {
          "id": "4KROdi7YNSPdR3tm",
          "name": "xAi account"
        }
      }
    },
    {
      "parameters": {
        "options": {
          "systemMessage": "=# Overview\nYou are the ultimate personal assistant. Your job is to send the user's query to the correct tool. You should never write emails or even create summaries yourself; you just need to call the correct tool.\n\n## Tools\nWhen you use tools, you must always send your response in a valid JSON format.\n- emailAgent: Use this tool to take action in email\n- calendarAgent: Use this tool to take action in calendar\n- contactAgent: Use this tool to get, update, or add contacts\n- contentCreator: Use this tool to create blog posts\n- Tavily: Use this tool to search the web\n- Perplexity: Another tool to search the web, better for research\n\n## Rules\n- Some actions require you to look up contact information first. For the following actions, you must get contact information and send that to the agent who needs it:\n- sending emails\n- drafting emails\n- creating a calendar event with an attendee"
        }
      },
      "type": "@n8n/n8n-nodes-langchain.agent",
      "typeVersion": 2,
      "position": [700, -300],
      "id": "6fbb9bcb-8d20-45b6-948d-1b80b1efc210",
      "name": "AI Agent2"
    }
  ],
  "connections": {
    "When chat message received": {
      "main": [
        [
          {
            "node": "AI Agent2",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "xAI Grok Chat Model": {
      "ai_languageModel": [
        [
          {
            "node": "AI Agent2",
            "type": "ai_languageModel",
            "index": 0
          }
        ]
      ]
    }
  },
  "pinData": {},
  "meta": {
    "templateCredsSetupCompleted": true,
    "instanceId": "eb5b913a9d67c7f834fb115a10a067eb7bfe1cc0fb9335a475a7a00c80e45514"
  }
}
```

For the more reliable OpenRouter integration, you would update your chat model configuration to look like this:

```json
{
  "nodes": [
    {
      "parameters": {
        "model": "x-ai/grok-4",
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.lmChatOpenRouter",
      "typeVersion": 1,
      "position": [220, 240],
      "id": "f28395a2-9110-40f8-b85b-43e9c5cc095d",
      "name": "OpenRouter Chat Model",
      "credentials": {
        "openRouterApi": {
          "id": "VBCgxXmaaxMFZpTy",
          "name": "OpenRouter account"
        }
      }
    }
  ],
  "connections": {
    "OpenRouter Chat Model": {
      "ai_languageModel": [
        []
      ]
    }
  },
  "pinData": {},
  "meta": {
    "templateCredsSetupCompleted": true,
    "instanceId": "eb5b913a9d67c7f834fb115a10a067eb7bfe1cc0fb9335a475a7a00c80e45514"
  }
}
```

Part 7: The Real-World Testing – 3 Challenges That Reveal Everything

I put Grok 4 through three increasingly difficult, real-world scenarios to see where it shines and where it falls flat.

Test 1: The Simple Bug Fix - ✅ PASS

The Problem: I started with a common issue in a React application. There was a scrolling problem where content at the bottom of a list was getting cut off and was inaccessible.

The Process: I described the problem to the AI in plain English and gave it access to the relevant code file.

The Result: The entire process took less than two minutes. The AI correctly identified the problem as a CSS styling issue, proposed a clean and professional fix, and presented it in a visual diff format that was easy to review.

Verdict: For simple bugs, styling issues, and well-defined problems within an existing codebase, Grok 4 is incredibly effective.

Test 2: The Complex Feature Development - ✅ STELLAR

The Challenge: This was a much harder test. I asked the AI to add a complete "Memory" feature to an existing chat application. This required modifications across the entire tech stack: a new database table, new API endpoints, new UI components, and changes to the core AI logic.

The Process: I used an "AI-driven task development" methodology. I provided a screenshot of what I wanted the final feature to look like and worked with the AI to break the task down into logical phases.

The Result: This is where my mind was truly blown. The entire feature was built in under five minutes, and I never wrote a single line of code myself. The AI analyzed the existing codebase, understood the tech stack, wrote and applied a new database migration, built the React components, modified the API endpoints, and correctly updated the core application logic. Everything worked perfectly on the first try.

Verdict: For adding complex, multi-faceted features to an existing and well-structured codebase, Grok 4 is nothing short of revolutionary. It can act like a junior developer, taking clear instructions and completing them perfectly.

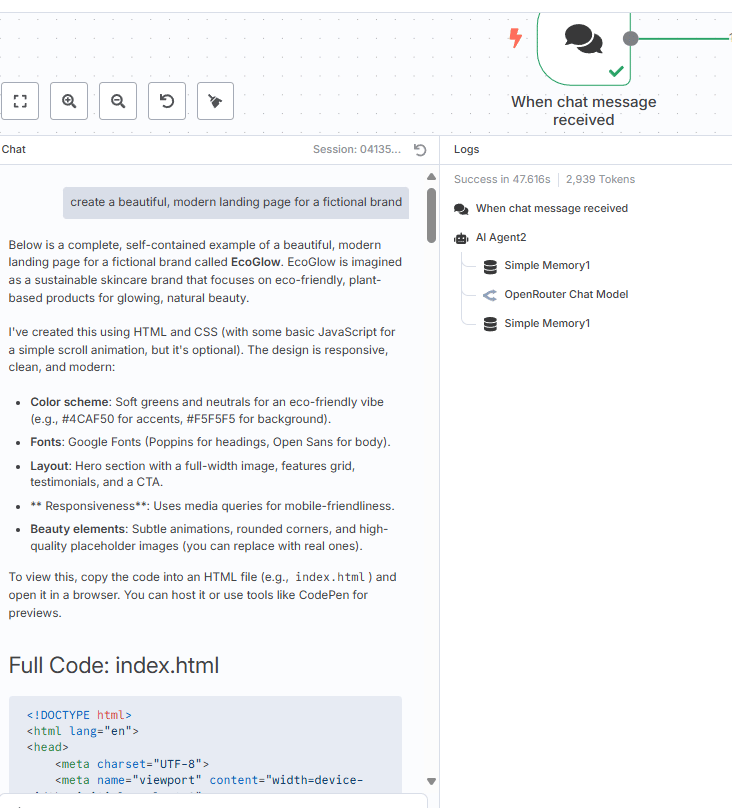

Test 3: The New Project Creation - ❌ DISAPPOINTING

The Challenge: After the stunning success of the last test, I wanted to see if it could handle a creative task from a blank slate. I asked it to create a beautiful, modern landing page for a fictional brand.

The Result: The website it created was functionally correct, but it did not look good. The styling was very basic and looked dated. Despite multiple attempts to refine the prompt, the aesthetic quality never significantly improved.

Why it failed: This test revealed the AI's key weakness. It failed because it had no context about my personal design preferences, no existing design system to learn from, and the prompt was too subjective.

Verdict: For creating new projects from scratch that require a strong sense of visual design and creativity, Grok 4 struggles immensely without a huge amount of context and specific examples to guide it.

Part 8: The Verdict – Is Grok 4 Worth It?

After putting Grok 4 through its paces, here is my honest assessment.

The Good:

Exceptional Reasoning: The way it structures its responses, breaks down complex problems, and handles multi-step tasks is truly impressive.

Powerful Math Capabilities: This is a huge breakthrough. Its ability to solve difficult math problems makes it useful for tasks like financial analysis and scientific modeling.

Excellent Tool Use: When the connection is stable (especially via OpenRouter), its ability to intelligently choose and use external tools is top-tier.

High-Quality Research: It produces comprehensive, well-sourced, and intelligently synthesized research reports.

The Not-So-Good:

Speed Inconsistency: As a new and popular model, the performance can vary wildly based on the current server load.

Cost: While the initial cost might seem fair, for high-volume usage, it is not the cheapest model available on platforms like OpenRouter.

Integration Quirks: The direct xAI integration can be finicky and prone to errors, especially with complex tool-use cases.

Creative Design: It is not a designer. It has trouble with tasks that need to look good if you don't give it clear examples.

The Bottom Line: Grok 4 is a genuinely impressive and powerful model that excels at complex reasoning, mathematics, and analysis. It is not the fastest, cheapest, or most reliable option for every single task, but when you need serious intellectual horsepower for your AI automations, it delivers. For developers working on existing, well-structured codebases, it is an absolute game-changer.

Conclusion: The Future is Agentic

We are at a crossroads in the world of software development. Tools like Grok 4 are not just making us more productive; they are fundamentally changing what it means to be a developer. The future does not belong to the person who can write the most code the fastest. It belongs to the person who can best lead a team of powerful AI assistants toward a shared goal.

These new tools are easy to learn, the potential for a massive increase in your productivity is very real, and the price is right. There is no excuse not to start experimenting. The choice is simple: you can either embrace these tools and 10x your capabilities, or you can resist the change and risk being left behind by the developers who adapt.
