🔌 This Is The "USB Port" For All AI Applications

The complete guide to MCP (Model Context Protocol): the open standard that's ending API chaos and connecting all AI applications



Introduction: The USB Moment for AI

In the last six months, you've probably heard AI experts declare that MCP (Model Context Protocol) is "amazing" or a "total game-changer". They are absolutely right.

MCP is best understood as the USB port for AI.

Think back to the chaos before USB. Every device - printers, mice, keyboards - had its own special, clunky port. It was a nightmare of incompatible connectors and custom cables. USB fixed this by creating one universal standard that just works.

AI has been stuck in that pre-USB chaos. Connecting an AI to external tools like your calendar, a database or a flight booking system has meant building a different, custom integration for every single tool. It is slow, complex and messy.

MCP is the universal standard that fixes this. It's an open protocol that standardizes how AI applications connect to any tool or data source. This guide is your complete map to a new world of AI connectivity. You will learn:

What MCP is and its fundamental concepts.

How to use thousands of pre-built MCP servers instantly (the easy part).

How to build your own custom MCP servers, both with and without code.

By the end, you'll understand why this is the most important change in AI since ChatGPT itself.

Prepare to plug into the future of AI connectivity.

Part 1: What is MCP? The Definition Decoded

To understand MCP's importance, we first need to know exactly what it is and the problem it solves.

The Official Definition

According to Anthropic, the pioneering company that created and open-sourced MCP, Model Context Protocol is:

"an open standard that enables developers to build secure, two-way connections between their data sources and AI-powered tools".

If that definition sounds a bit dry or abstract, you're not alone. Let's break down its real-world significance using a powerful analogy.

The USB Analogy: Why MCP is Revolutionary

Think of MCP as the universal USB port for AI applications.

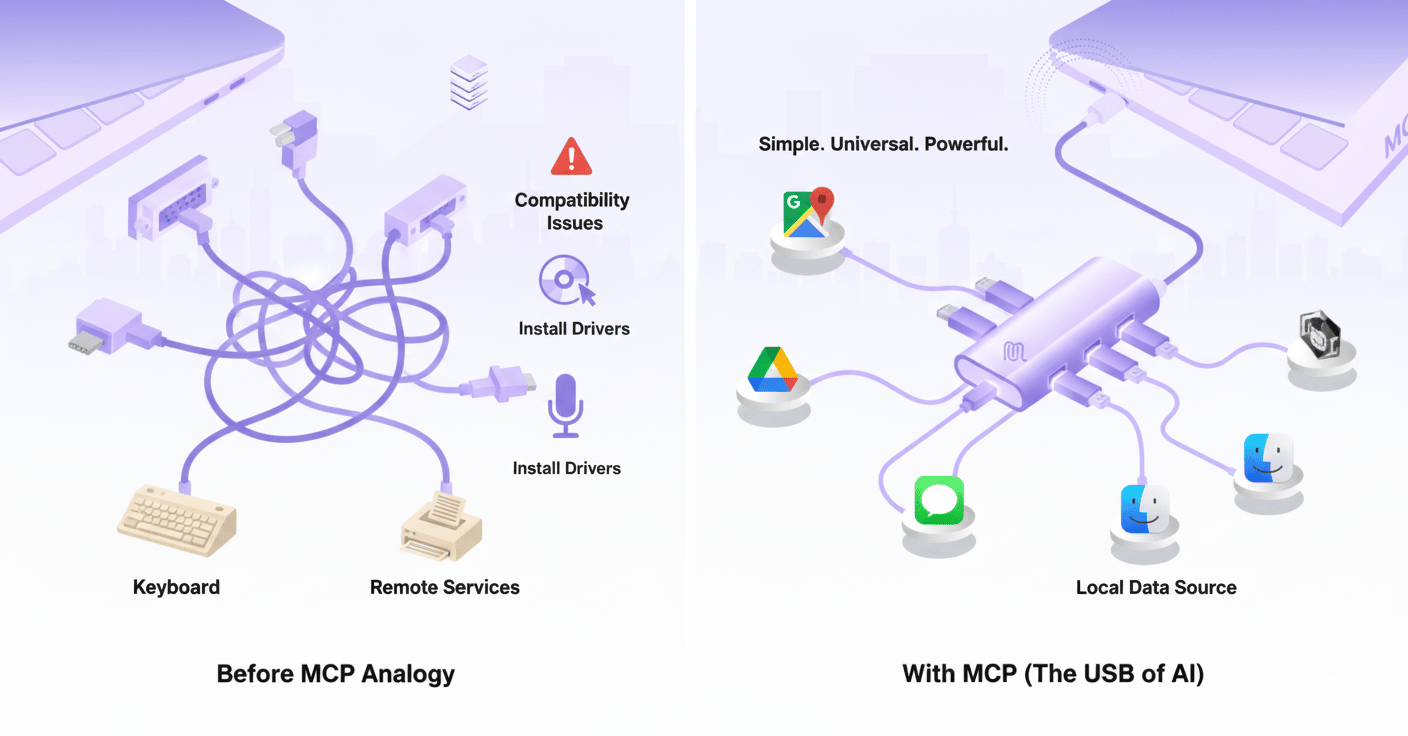

Before the Universal Serial Bus (USB) became the standard, connecting peripherals to computers was a frustrating nightmare:

Your keyboard, mouse, printer, microphone and webcam likely all had different, clunky, proprietary ports (Serial, Parallel, PS/2, etc.).

You needed a tangled mess of specialized cables and adapters for each device. Compatibility was a constant headache.

Software drivers had to be written and installed specifically for each unique connection type. Adding a new device often meant navigating a confusing installation process.

Then came USB. It introduced one standardized port that allowed virtually any device to connect seamlessly to any computer. It was simple, elegant and utterly transformative, unleashing a wave of innovation in hardware.

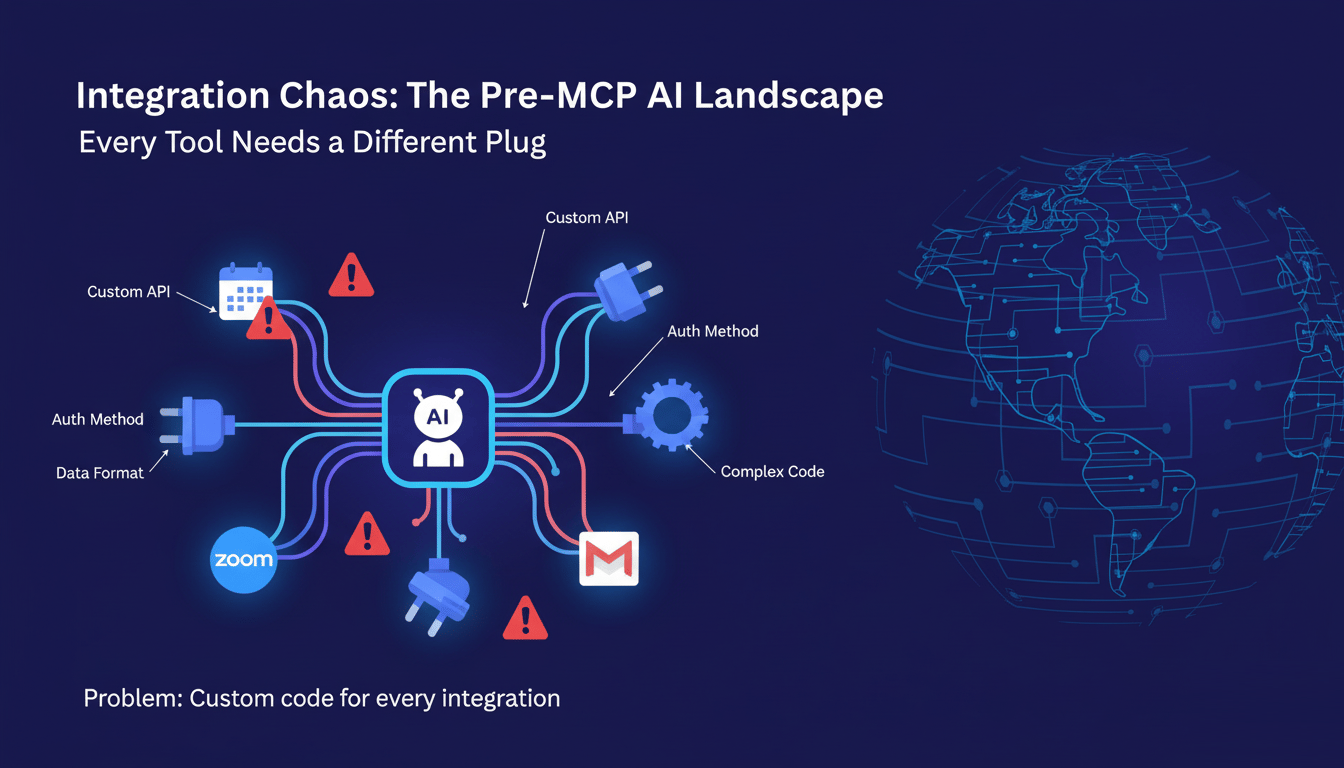

The Pre-MCP AI Landscape: Integration Chaos

Before MCP appeared, building AI agents that could interact with external tools and data sources created a similar kind of chaos, just in the software realm.

The Problem: Imagine building an AI scheduling agent. To be truly useful, it needs access to a variety of external systems:

Calendar Systems: Google Calendar, Microsoft Outlook Calendar and Apple Calendar.

Email Platforms: Gmail, Outlook, ProtonMail.

Video Conferencing: Zoom, Google Meet, Microsoft Teams.

The Pain: Every single one of these tools has its own unique Application Programming Interface (API). Each API has specific authentication methods (how the AI proves its identity), different data formats (how information is structured) and distinct interaction patterns (the specific commands it understands).

Building integrations meant writing complex custom code for every single tool your AI needed to talk to. This was time-consuming, expensive and error-prone, which made truly capable AI agents hard to build. It was the digital equivalent of needing a different plug for every single appliance in your house.

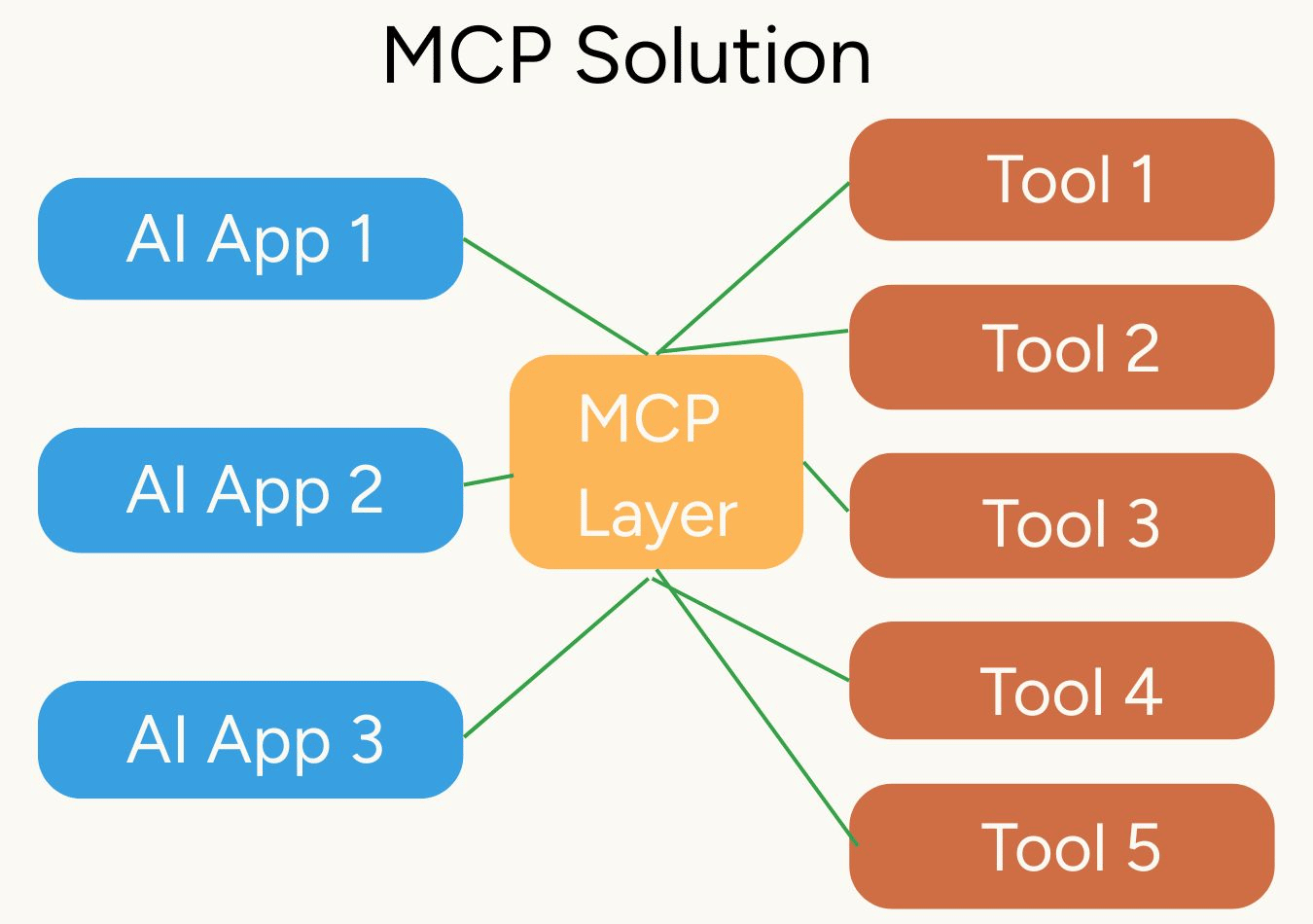

The MCP Solution: Standardization Brings Order

MCP brings order to this chaos by standardizing how AI applications (the "hosts") interact with external tools and data sources (exposed via "MCP servers"). With MCP, the whole system changes dramatically:

✅ Write Once, Use Everywhere: Developers create an MCP server for a specific tool (like Gmail) once. Any MCP-compatible AI application can then instantly use that server without needing custom code.

✅ Plug-and-Play Simplicity: Connecting new tools to your AI becomes as simple as adding a pre-built MCP server configuration. No more wrestling with complex API documentation for every new integration.

✅ Exponential Ecosystem Growth: The power of standardization is evident. Within just a few months of MCP's launch, the community has already created over 20,000 pre-built MCP servers, covering a vast range of applications and services. This rapid growth is just like the explosion of USB devices.

✅ Universal Compatibility: Any AI application that supports the MCP standard can seamlessly communicate with any available MCP server.

The result is a huge leap in efficiency. Instead of countless developers writing easily broken, custom integrations for every possible tool-application combination, the community now works together on building powerful, reusable MCP servers that work across the entire ecosystem. It's a classic case of standardization helping innovation and accessibility.
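To put rough numbers on that efficiency claim, here is a small back-of-the-envelope sketch. It assumes the simplest possible model: without a shared protocol every application needs its own adapter for every tool, while with MCP each application needs one client and each tool needs one server. The function names and the counts (4 applications, 9 tools) are made up for illustration.

```python
def custom_integrations(hosts: int, tools: int) -> int:
    """Point-to-point: every host needs its own adapter for every tool."""
    return hosts * tools


def mcp_components(hosts: int, tools: int) -> int:
    """Shared protocol: one client per host plus one server per tool."""
    return hosts + tools


# Hypothetical numbers: 4 AI applications and 9 external tools.
print(custom_integrations(4, 9))  # 36
print(mcp_components(4, 9))       # 13
```

The gap widens as either side grows, which is the whole argument for standardizing the connection instead of the individual integrations.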

Part 2: Using MCP Servers (The Easy Part)

The beauty of MCP lies in its simplicity for the end-user or application builder. Using existing MCP servers requires almost no technical effort, allowing you to instantly grant your AI new superpowers.

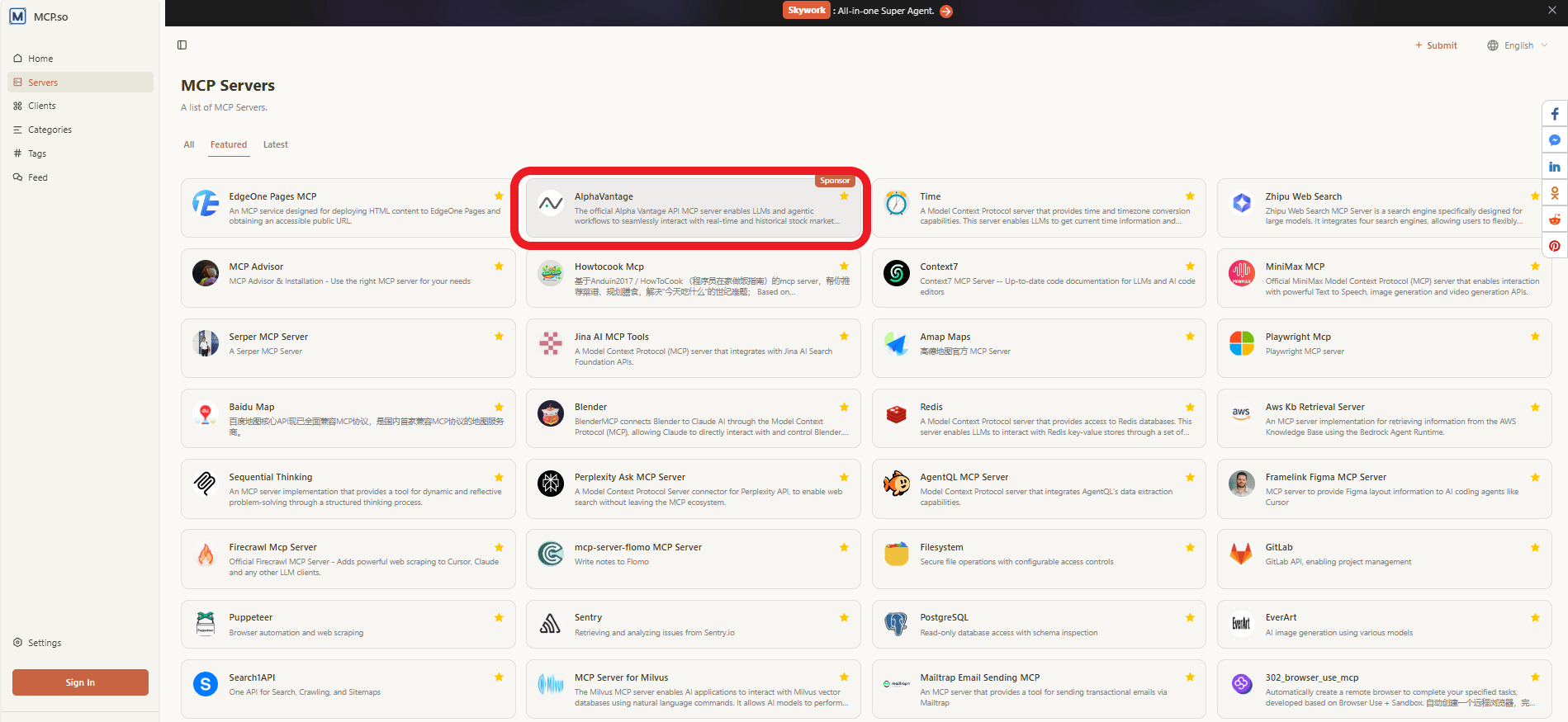

The MCP Server Marketplace: A Universe of Capabilities

Thousands of pre-built MCP servers are now available; you can find them in community directories or directly within compatible AI applications. These servers cover virtually every use case imaginable, turning your AI into a multitasking digital assistant. Here's a small sample of the categories available:

Financial & Market Data:

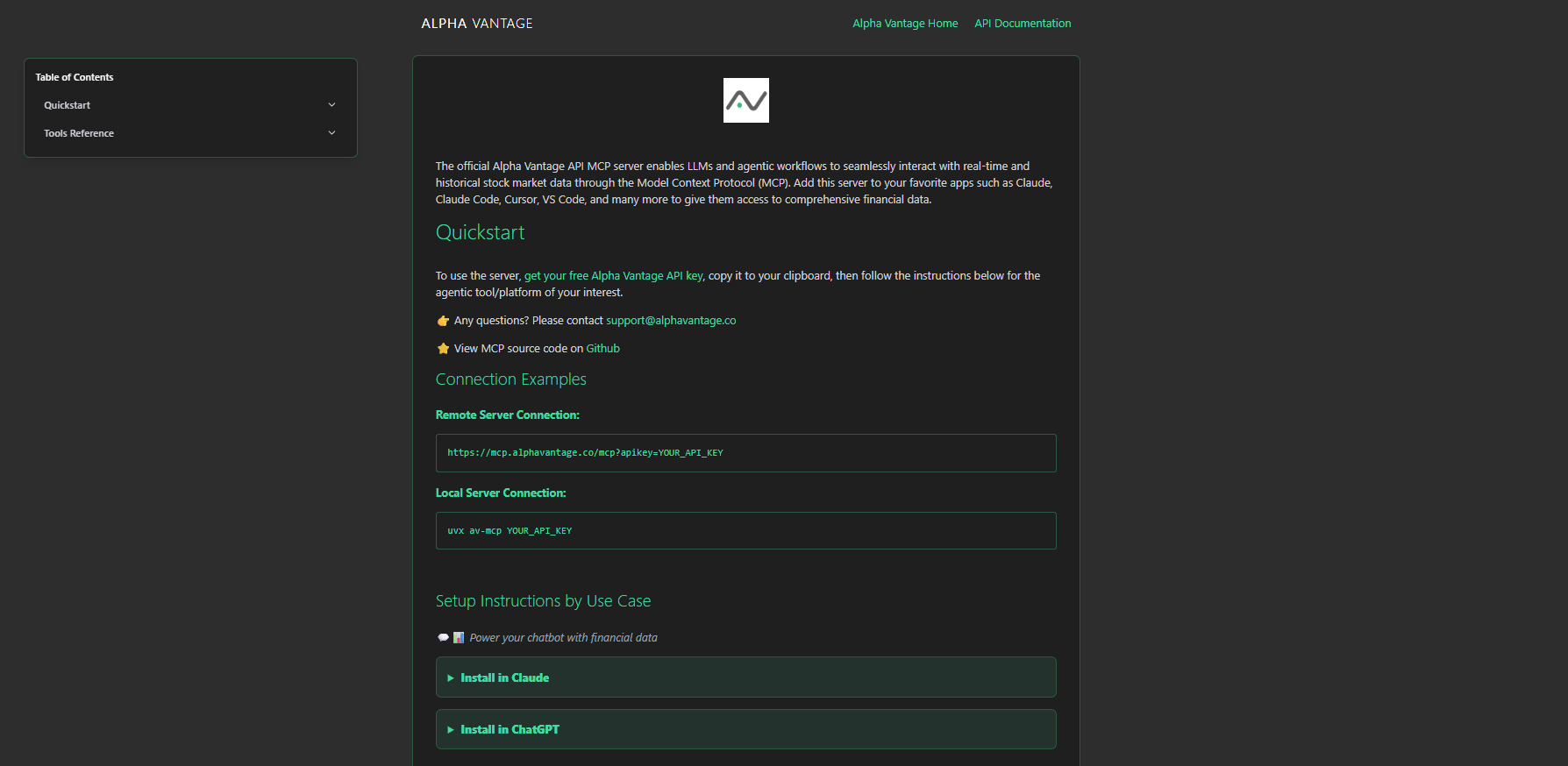

Alpha Vantage: Real-time and historical stock market data.

Crypto APIs: Cryptocurrency prices, market caps and blockchain data.

Financial Modeling Prep: Company financials, analyst ratings.

Productivity & Collaboration:

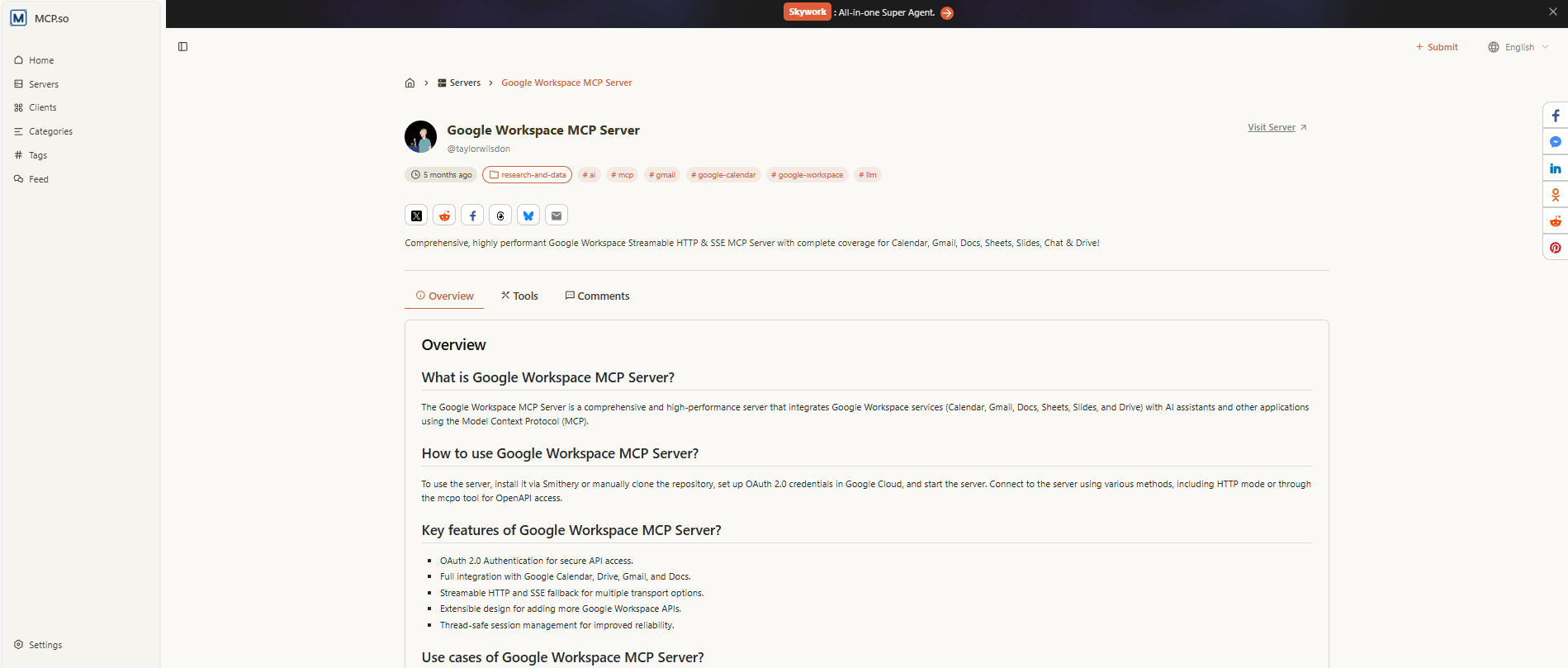

Google Workspace: Deep integration with Gmail, Calendar, Drive and Sheets.

Microsoft 365: Access to Outlook, OneDrive and Teams.

Notion/Obsidian: Database access, page creation and content management within your notes.

Slack/Discord: Send messages, read channels and manage communication platforms.

Development & Infrastructure:

GitHub/GitLab: Manage repositories, issues and pull requests.

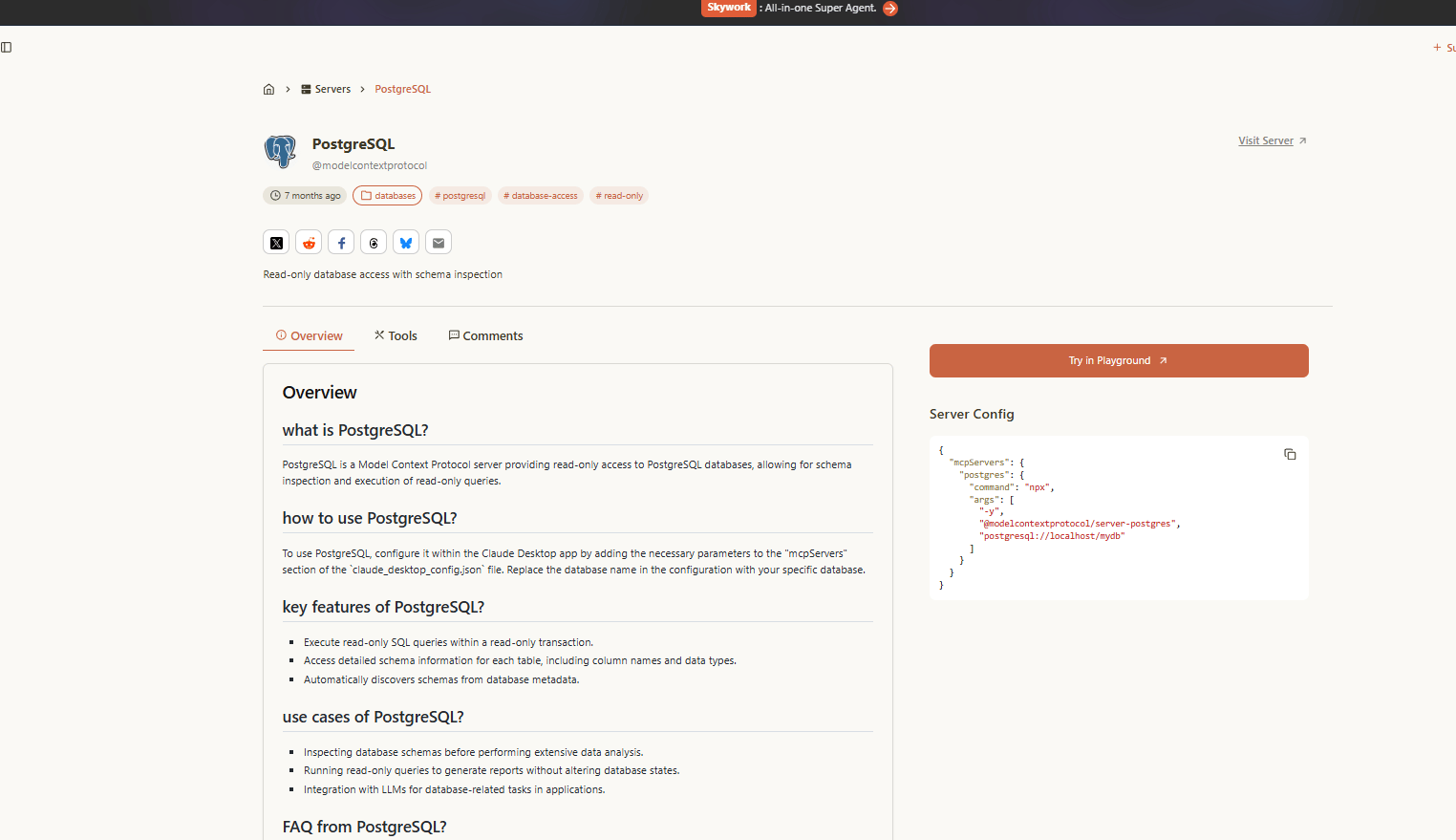

PostgreSQL/MySQL/MongoDB: Directly query and interact with databases.

Redis: Manage caches and fast data storage.

AWS/GCP/Azure: Interact with cloud infrastructure services.

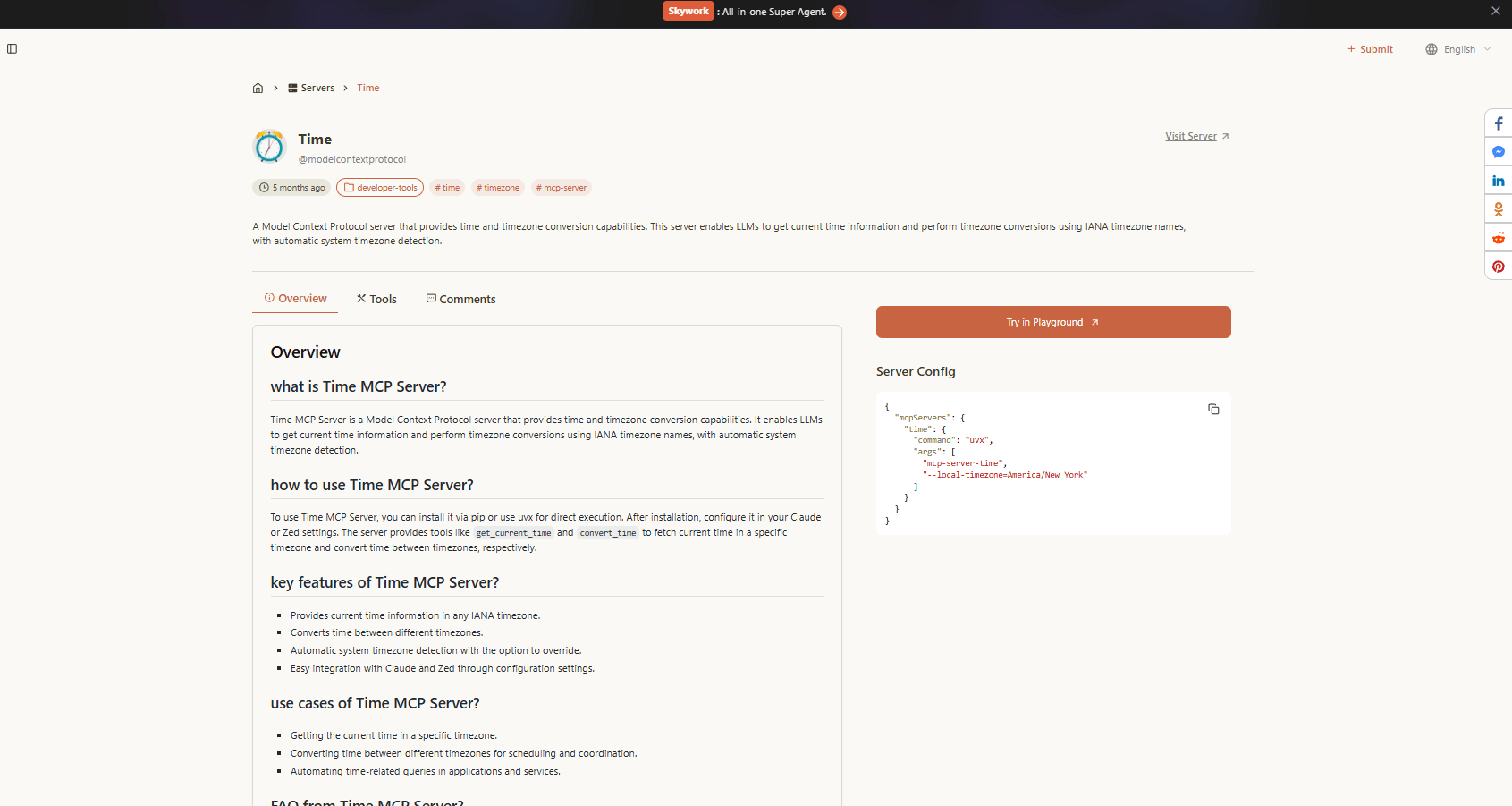

Time, Location & Utilities:

Time Zone APIs: Accurate time conversion and global zone information.

Weather Services: Current conditions and forecasts.

Mapping Services: Location data, directions and place information.

File Systems: Interact with local or remote file storage.

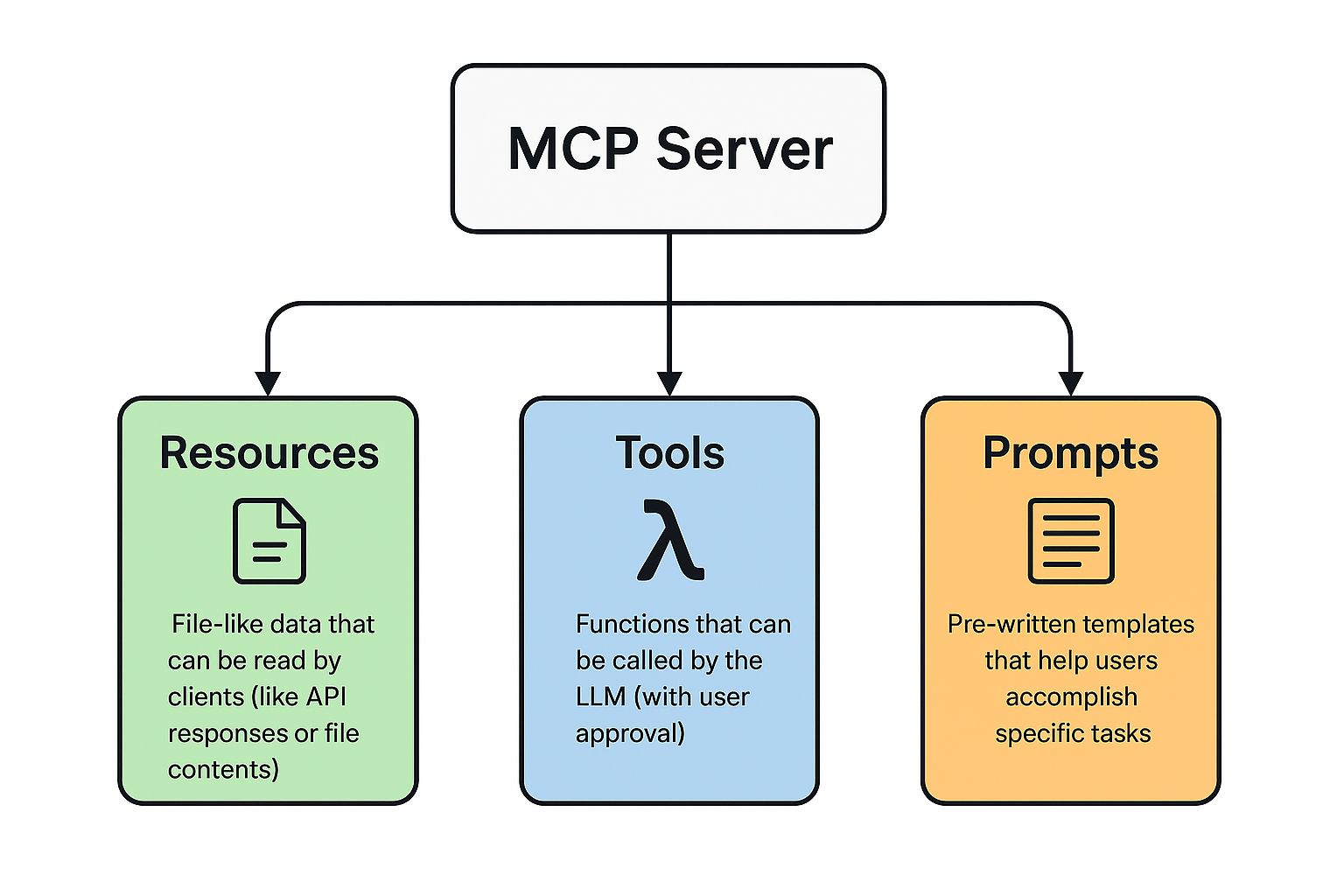

Real-World Example: Adding Stock Market Data in Minutes

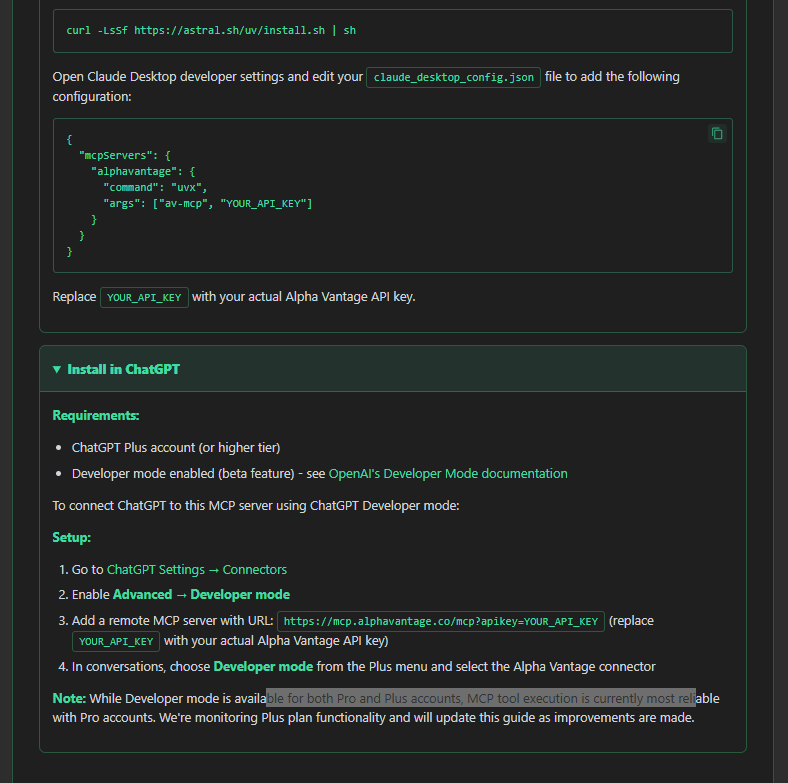

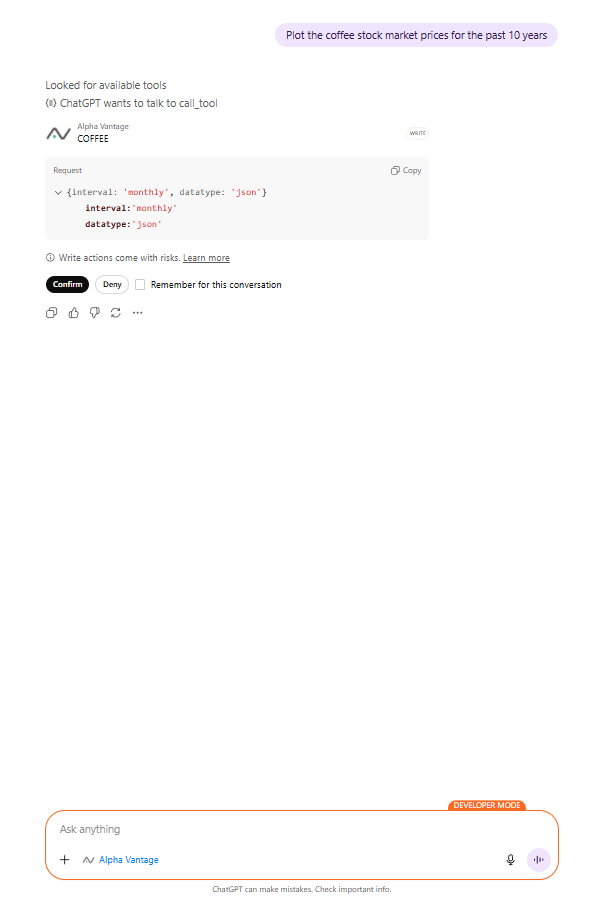

Let's walk through a practical example: adding real-time stock market data to an AI assistant like ChatGPT using the pre-built Alpha Vantage MCP server.

Step 1: Find the Server: Browse an MCP server directory (or check ChatGPT's built-in options) and locate the Alpha Vantage server, noting its function: providing real-time and historical stock market data via MCP.

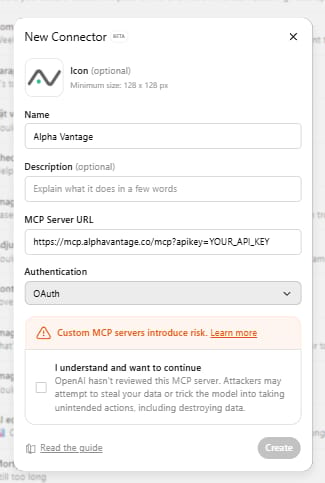

Step 2: Copy Configuration: Copy the server configuration snippet provided. This is usually just a URL containing the server's address and necessary details.

Step 3: Add it to Your AI Application: Open your AI application's configuration file (e.g., ChatGPT's config file accessible through its developer mode) and paste the copied URL configuration into the appropriate section.

Step 4: Use Immediately: Now, you can simply prompt your AI with a relevant request: "Plot the coffee stock market prices for the past 10 years".

The Result: What happens next is seamless:

ChatGPT recognizes the question needs stock data and identifies the newly added Alpha Vantage tool.

It requests your permission to access this external tool (a very important security step).

After you approve, ChatGPT's MCP client communicates with the Alpha Vantage MCP server.

The server retrieves the historical coffee price data.

ChatGPT receives the data and uses its internal skills to create an interactive chart, plotting the prices over the last decade.

Time Investment: This entire process, from finding the server to getting a working visualization, typically takes under 2 minutes.

The Magic: You didn't write a single line of code. You didn't need to sign up for an Alpha Vantage API key or study their complex documentation. You simply connected a standardized MCP server to a compatible application using a small configuration snippet. This plug-and-play simplicity is the core promise of MCP.

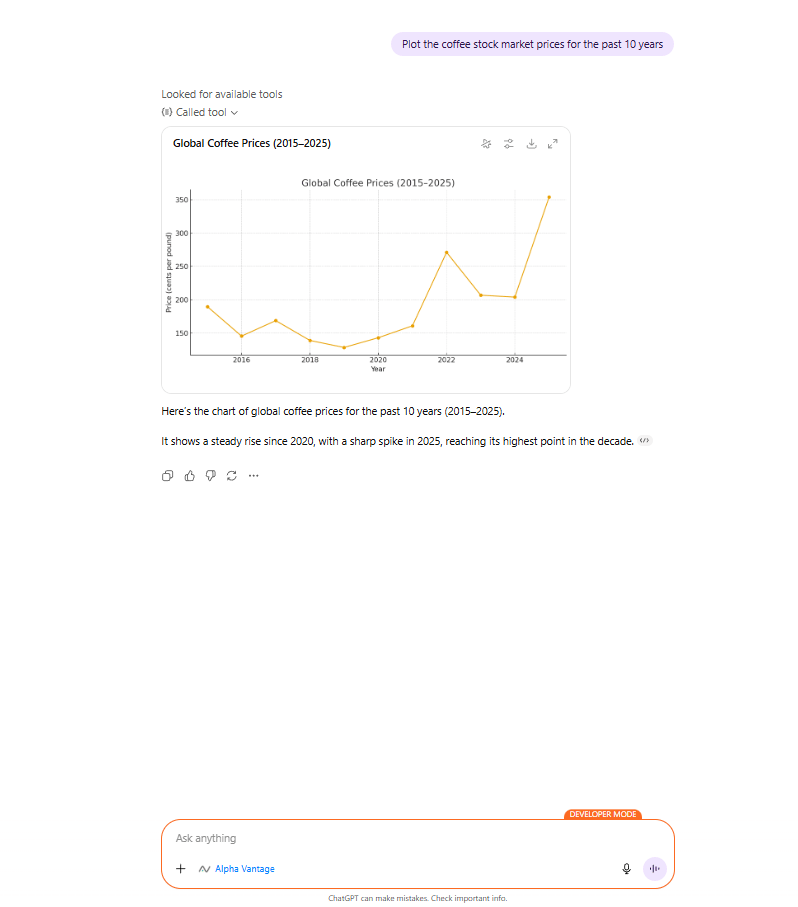

Switching Hosts Seamlessly: The Power of Portability

One of MCP's most powerful yet often overlooked features is host portability. Because the protocol is standardized, the same MCP server can be used by multiple different AI applications (hosts) without any changes to the server itself.

Imagine you built or configured an MCP server for managing your Google Calendar. You can use that exact same server with:

Claude Desktop: Your general-purpose AI assistant.

n8n: Your complex workflow automation platform.

Custom AI Applications: Web or mobile apps you build yourself.

IDE Extensions: AI coding assistants within your development environment (like VS Code).

The same MCP server provides its capabilities consistently across different host applications. You configure the connection on the host side but the server remains unchanged. This dramatically reduces the work of connecting tools and increases the reusability of your AI tools.

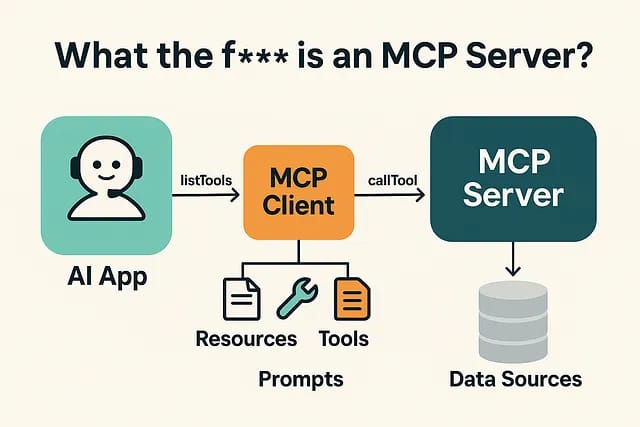

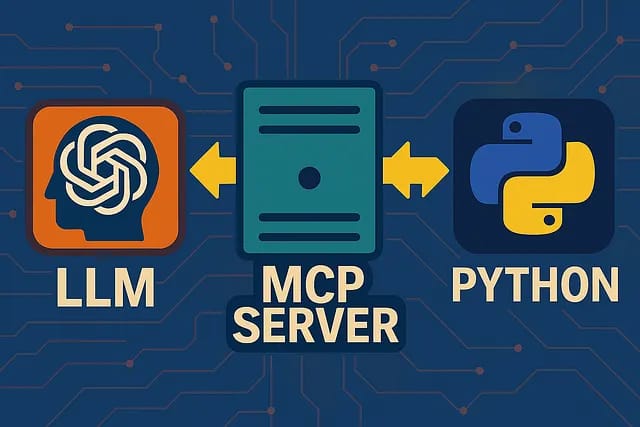

Part 3: MCP Architecture Fundamentals

To use, build and debug MCP connections, you need to understand the core architecture and its terminology. It's simpler than it might first appear.

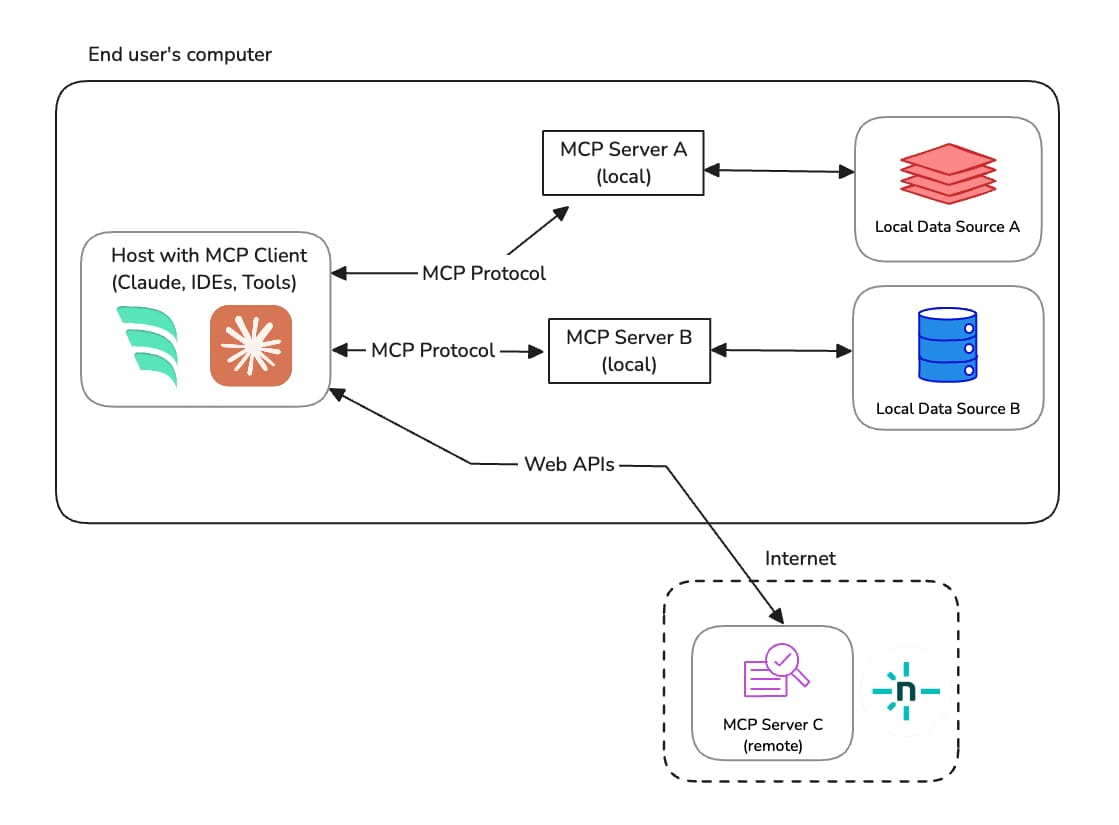

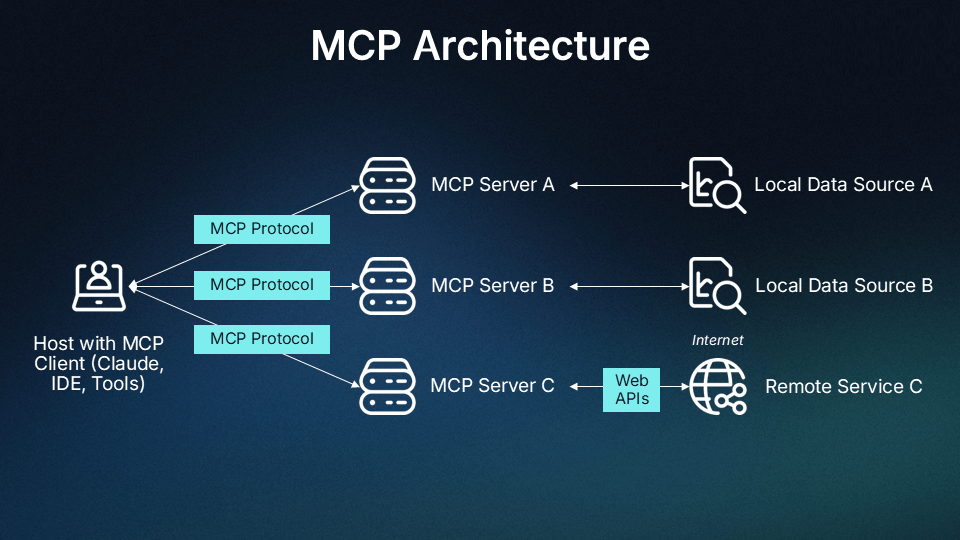

The Three Components: Host, Client, Server (HCS)

Every MCP setup involves three different parts working together in a clear order. Remembering HCS helps visualize the flow.

The Host (H): Your AI Application

Definition: The primary LLM application that wants access to external tools and data sources. This is the application the end-user interacts with.

Examples: Claude Desktop, n8n, Cursor, VS Code with an AI extension or any custom LLM-powered application you build.

Role: It holds the MCP Client and starts connections to one or more MCP Servers based on user requests or workflow logic.

The Client (C): The Protocol Messenger

Definition: Specialized software that lives inside the Host application. Its sole job is to implement the MCP protocol and manage communication with MCP Servers.

Key Characteristic: Maintains distinct, one-to-one connections with each MCP Server it needs to talk to, strictly following the standard MCP communication rules.

Think of it as: The Host's multilingual translator and messenger, fluent in the universal language of MCP, handling all the technical details of sending requests and receiving responses.

Important Note: As a user or even a typical developer, you usually don't interact directly with the MCP Client. It's automatically managed by the Host application (like Claude Desktop or n8n). You just tell the Host what you want and the Client handles the MCP communication.

The Server (S): The Capability Provider

Definition: Lightweight programs (often simple scripts or small applications) designed to expose specific capabilities - like accessing an API, querying a database or providing structured data - through the MCP protocol.

Examples: The Alpha Vantage Server, a Time Zone Server, a PostgreSQL Server, a custom Gmail Server or even a server exposing functions from your company's internal tools.

Role: Provides specific functionality that Host applications can invoke through their MCP Clients. It acts as the standardized gateway to an underlying tool or data source.

The Relationship Flow: Visualizing HCS

    +-----------------------+
    |         HOST          |   (e.g., Claude Desktop, n8n)
    |                       |
    |   +---------------+   |
    |   |  MCP CLIENT   |   |   (Handles MCP Communication)
    |   +---------------+   |
    +-----------|-----------+
                |
                |   (MCP Protocol - JSON-RPC 2.0 / HTTP)
                |
    +-----------↓-----------+
    |      MCP SERVER       |   (e.g., Alpha Vantage Server, Your Custom Server)
    | (Exposes Capabilities)|
    +-----------|-----------+
                |
                |   (Server interacts with underlying service)
                |
    +-----------↓-----------+
    |   EXTERNAL SERVICE    |   (e.g., Alpha Vantage API, Database, Gmail API)
    +-----------------------+
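The same relationship can be sketched in a few lines of Python. This is only a toy model of the Host, Client and Server roles, not how any real MCP SDK is structured: the class names, the `connect` and `use` methods and the two example servers are all invented for illustration. The one detail it tries to capture faithfully is that the Host keeps a separate Client for each Server.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    tools: dict  # tool name -> callable

    def handle(self, tool: str, arguments: dict):
        return self.tools[tool](**arguments)


@dataclass
class Client:
    """One client instance per server connection (one-to-one)."""
    server: Server

    def call(self, tool: str, **arguments):
        return self.server.handle(tool, arguments)


@dataclass
class Host:
    clients: dict = field(default_factory=dict)  # server name -> Client

    def connect(self, server: Server) -> None:
        self.clients[server.name] = Client(server)

    def use(self, server_name: str, tool: str, **arguments):
        return self.clients[server_name].call(tool, **arguments)


host = Host()
host.connect(Server("time", {"utc_offset": lambda zone: {"UTC": 0}.get(zone)}))
host.connect(Server("math", {"add": lambda a, b: a + b}))
print(host.use("math", "add", a=2, b=3))  # 5
```

Notice that the user-facing code only ever talks to `host`; the clients stay hidden inside it, which mirrors how you rarely touch the MCP Client directly.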

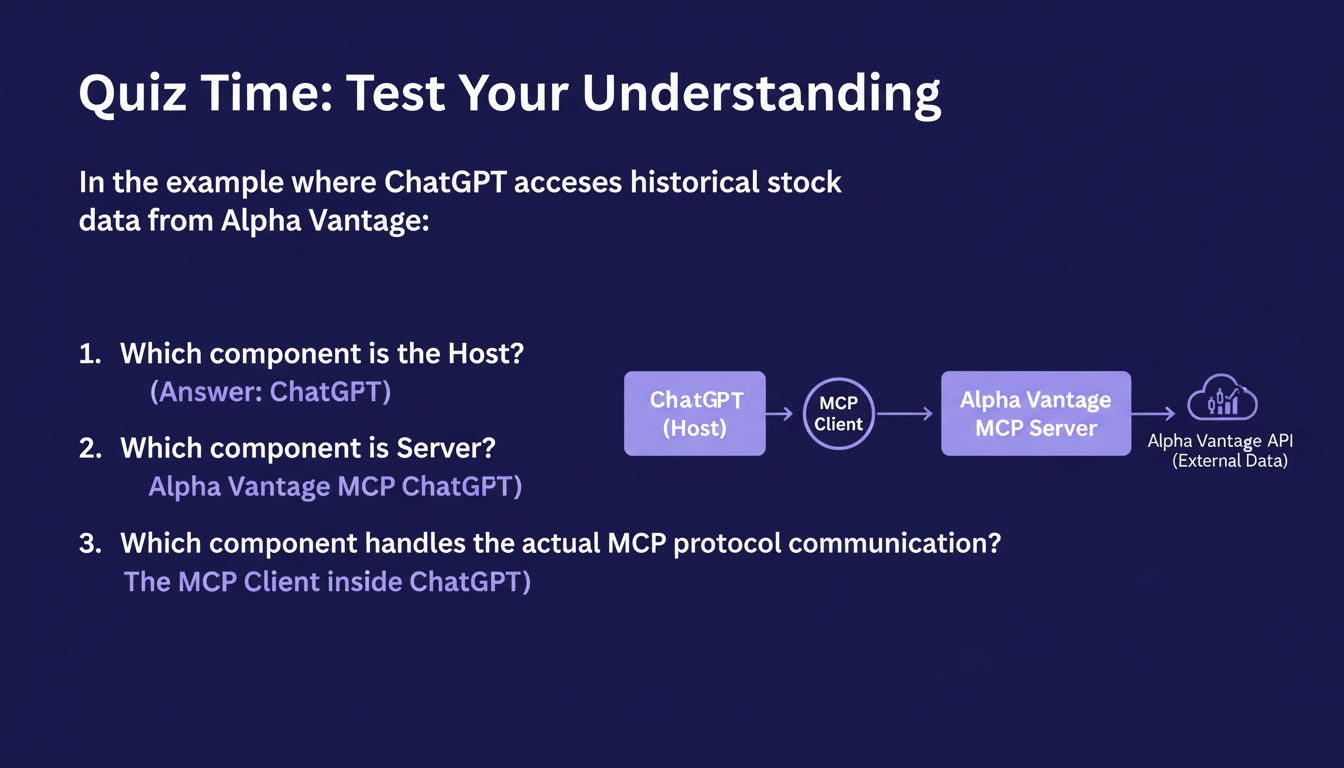

Real-World Example Breakdown

Let's revisit the stock market data example:

Host: ChatGPT (the application you are chatting with).

Client: ChatGPT's built-in MCP Client (silently handling the MCP communication).

Server: The Alpha Vantage MCP Server (providing the stock market data access).

Quiz Time: Test Your Understanding

In the example where ChatGPT accesses historical stock data from Alpha Vantage:

Which component is the Host? (Answer: ChatGPT)

Which component is the Server? (Answer: Alpha Vantage MCP Server)

Which component handles the actual MCP protocol communication? (Answer: The MCP Client inside ChatGPT)

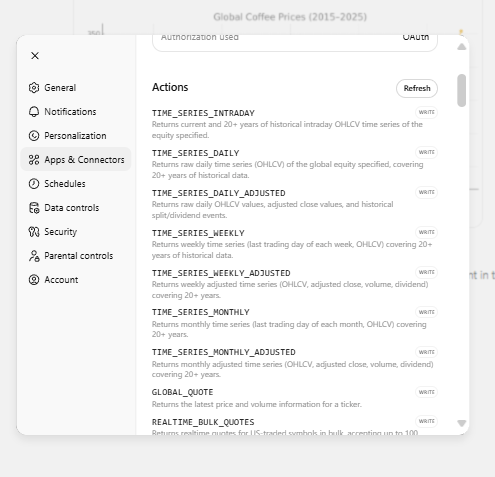

Part 4: What's Inside an MCP Server?

An MCP server isn't just a simple API wrapper. It can expose three distinct types of capabilities, often remembered by the acronym TRP: Tools, Resources and Prompt Templates. Understanding these allows you to design and utilize MCP servers much more effectively.

1. Tools: Functions AI Can Invoke

These are the most common capabilities: executable functions that the AI client can call to perform specific actions or retrieve dynamic data.

Definition: Active functions that perform operations, often interacting with external APIs or systems.

Examples from Various Servers:

Alpha Vantage Tools: `time_series_intraday`, `time_series_daily`, `symbol_search`, `global_quote`. Each performs a specific data retrieval action.

Gmail Tools: `send_message`, `read_messages`, `create_draft`. These tools perform actions within the Gmail service.

Database Tools: `execute_query`, `update_record`, `delete_record`. These tools interact directly with a database.

Key Characteristic: Tools are active - they do things. They can change the state of external systems (like sending an email or updating a database) or fetch real-time, dynamic information.
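Under the hood, calling a tool is just a JSON-RPC 2.0 message. The sketch below builds one by hand so you can see its shape. The `tools/call` method name and the `name`/`arguments` parameters follow the MCP specification; the tool description, its `symbol` argument and the helper function names are assumptions made up for this example, not Alpha Vantage's actual definitions.

```python
import json

# Hypothetical tool description, shaped like an entry a server might
# return when a client lists its tools.
global_quote_tool = {
    "name": "global_quote",
    "description": "Return the latest quote for a ticker symbol.",
    "inputSchema": {
        "type": "object",
        "properties": {"symbol": {"type": "string"}},
        "required": ["symbol"],
    },
}


def build_tool_call(request_id: int, name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request that asks a server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def missing_arguments(tool: dict, arguments: dict) -> list:
    """List required arguments the caller forgot to supply."""
    return [k for k in tool["inputSchema"]["required"] if k not in arguments]


request = build_tool_call(1, "global_quote", {"symbol": "AAPL"})
print(json.dumps(request, indent=2))
print(missing_arguments(global_quote_tool, {}))  # ['symbol']
```

The input schema is what lets the AI model work out which arguments to supply without anyone hand-writing glue code for that tool.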

2. Resources: Read-Only Data

Not all information needs to be fetched dynamically via a tool call. MCP servers can also expose Resources, which provide read-only access to data.

Definition: Data exposed by the server that clients can query or read but cannot directly modify through the resource itself. (Modification might happen via a separate Tool).

Why Resources Matter: Accessing data as a Resource can be much more efficient than repeatedly calling a Tool. Imagine needing historical weather data from the last month. Calling the `get_weather` tool 30 times would be slow and potentially expensive. Accessing a `historical_weather_log` resource once is far better.

Examples:

Markdown Notes: Server-side documentation or reference materials.

Log Files: Historical records of tool usage (e.g., all weather API calls made in the past week).

Database Records: Static information like signed contracts, meeting recordings or reference datasets.

Configuration Files: Settings or parameters that might inform how Tools should be used.

Use Case Example (Weather Server):

Tool: `get_current_weather(location)` - Makes a live API call to an external weather service.

Resource: `historical_weather_log` - Stores all previously retrieved weather data in a log file on the server.

The Efficiency Gain: An AI wanting to create a temperature visualization for the past week can read the `historical_weather_log` Resource once, instead of making seven separate calls to the `get_current_weather` Tool.
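Here is a minimal sketch of that weather server, counting how many times the external API gets hit. Everything in it is a stand-in: the `WeatherServer` class, the `_fetch` placeholder and the temperature value are invented, and no real weather service is contacted.

```python
class WeatherServer:
    def __init__(self) -> None:
        self.api_calls = 0
        self.log = []  # backs the historical_weather_log resource

    def _fetch(self, location: str) -> dict:
        """Placeholder for a live API request; the reading is made up."""
        self.api_calls += 1
        return {"location": location, "temp_c": 20.0}

    def get_current_weather(self, location: str) -> dict:
        """Tool: triggers a live lookup and appends the result to the log."""
        reading = self._fetch(location)
        self.log.append(reading)
        return reading

    def read_historical_weather_log(self) -> list:
        """Resource: returns stored data without touching the external API."""
        return list(self.log)


server = WeatherServer()
for _ in range(7):                      # a week of earlier tool calls
    server.get_current_weather("Lisbon")

calls_before = server.api_calls
week = server.read_historical_weather_log()   # one read, no new API calls
print(len(week), server.api_calls - calls_before)  # 7 0
```

Reading the resource returns all seven stored readings while the API call counter stays put, which is exactly the saving described above.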

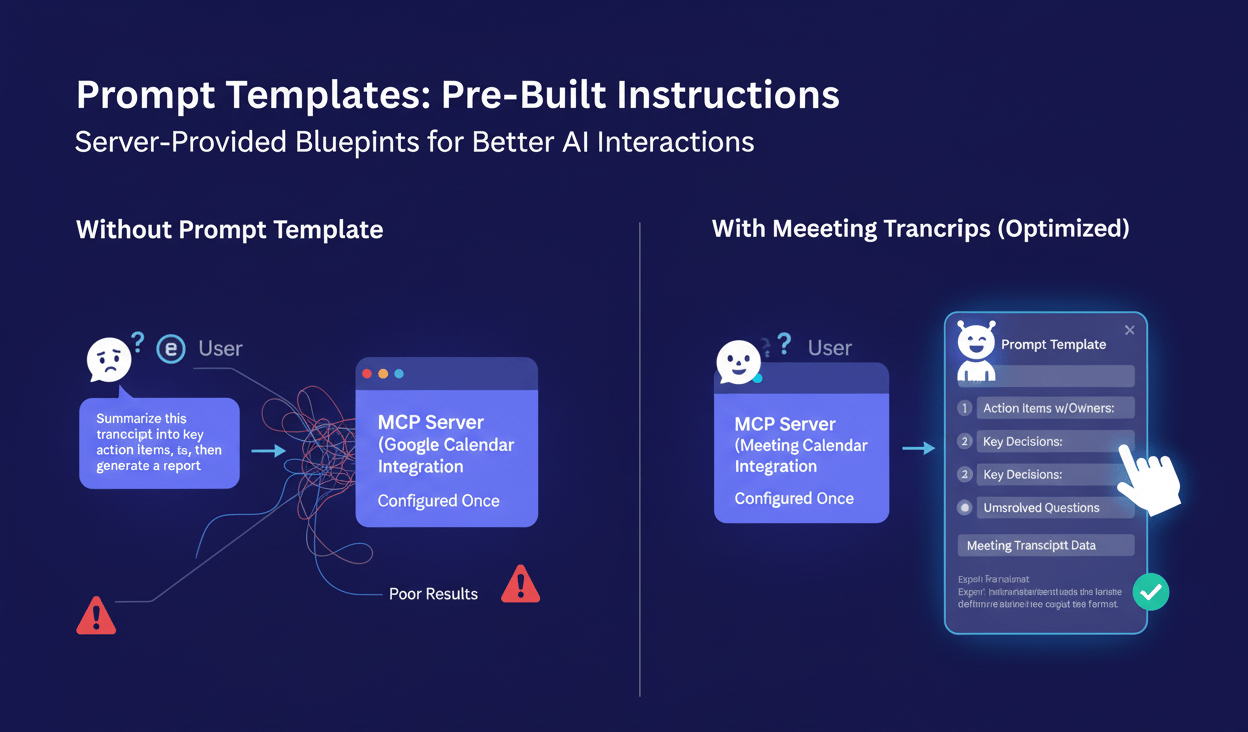

3. Prompt Templates: Pre-Built Instructions

This capability addresses a common challenge: users often don't know how to write effective prompts to get the best results from complex tools or analyses.

Definition: Structured prompt blueprints or templates, created by the MCP server developer, that guide users (or the AI itself) on how to best use the server's skills.

The Problem They Solve: Users might provide unclear or poorly structured prompts, leading to bad results from the server's tools.

The Solution: Server creators put expert-level prompt templates directly into the server. Users simply fill in the specific details and the template ensures the underlying AI or tool receives a perfectly structured, optimized request.

Example Scenario (Meeting Transcript Analysis Server):

Without Prompt Template (User's basic attempt): "Summarize this transcript into key action items, then generate a report". (Vague, likely yields poor results).

With Prompt Template (Server creator's optimized version): The user selects the "Analyze Meeting Transcript" template, which contains a pre-defined structure like:

Analyze the following meeting transcript using these criteria:

[1] Identify explicit action items with owners/due dates, [2] Extract key decisions with reasoning, [3] Note unresolved questions, [4] Classify topics by priority, [5] Generate an executive summary emphasizing business impact.

Format output as: Executive Summary, Decisions, Action Items, Open Questions, Next Steps.

The Benefit: Users input only the meeting-specific details into the template. The MCP server then handles the complex analysis based on the expert-level structure provided by the template, ensuring high-quality results without requiring the user to be a prompt engineering guru.
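A prompt template is, at heart, a string with slots. The sketch below uses Python's built-in `string.Template` to hold the meeting-analysis wording quoted above and expose a single slot for the transcript. The `$transcript` placeholder, the `render_prompt` helper and the sample dialogue are assumptions for illustration; a real MCP server would register the template through its SDK rather than a bare function.

```python
from string import Template

ANALYZE_MEETING_TRANSCRIPT = Template(
    "Analyze the following meeting transcript using these criteria:\n"
    "[1] Identify explicit action items with owners/due dates, "
    "[2] Extract key decisions with reasoning, "
    "[3] Note unresolved questions, "
    "[4] Classify topics by priority, "
    "[5] Generate an executive summary emphasizing business impact.\n"
    "Format output as: Executive Summary, Decisions, Action Items, "
    "Open Questions, Next Steps.\n\n"
    "Transcript:\n$transcript"
)


def render_prompt(transcript: str) -> str:
    """Fill the only user-supplied slot; the expert structure stays fixed."""
    return ANALYZE_MEETING_TRANSCRIPT.substitute(transcript=transcript.strip())


prompt = render_prompt("  Dana: ship on Friday?\nLee: yes, if QA signs off.  ")
print(prompt)
```

The user only supplies the transcript; the carefully worded instructions travel with the server and stay identical for every caller.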

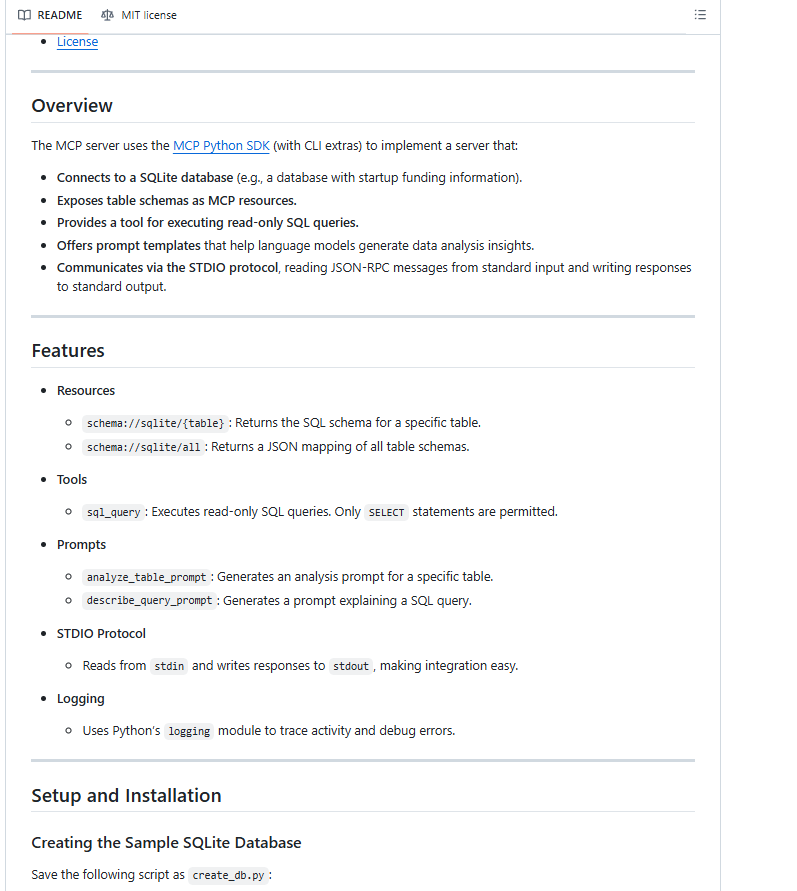

Complete Example: SQLite MCP Server

Let's examine how a hypothetical MCP server for managing a SQLite database might combine all three capabilities (TRP):

Tools (Actions):

`sql_query`: Executes read-only SQL queries.

`insert_record`: Add new rows.

`update_record`: Modify existing rows.

`delete_record`: Remove rows.

`create_table`: Define new tables.

Resources (Read-only Data):

`schema://sqlite/{table}`: Returns the SQL schema for a specific table.

`database_schema`: The structure and definitions of all tables in the database.

`query_history`: A log of previous queries executed through the server.

Prompt Templates (Optimized Interaction):

`analyze_data_template`: Generates an analysis prompt for a specific table.

`describe_query_prompt`: Generates a prompt explaining a SQL query.

`backup_template`: Offers a systematic template for initiating database backups and recovery procedures.

The Power: This combination gives users a complete, intelligent database management system accessible via MCP, without requiring deep SQL knowledge or familiarity with database administration best practices. The server guides them through expert-level interactions.
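To make the TRP split concrete, here is a rough sketch of a few of those capabilities backed by Python's built-in `sqlite3` module. It is plain Python, not an MCP server: the `SQLiteServerSketch` class and the `orders` table are invented, and the read-only guard is a deliberately naive prefix check that a production server would need to replace with something stricter.

```python
import sqlite3


class SQLiteServerSketch:
    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    # Tool: sql_query (read-only, enforced by a naive prefix check)
    def sql_query(self, sql: str) -> list:
        if not sql.lstrip().lower().startswith("select"):
            raise ValueError("sql_query only accepts SELECT statements")
        return self.conn.execute(sql).fetchall()

    # Tool: insert_record
    def insert_record(self, table: str, values: dict) -> None:
        columns = ", ".join(values)
        marks = ", ".join("?" for _ in values)
        # Table/column names are trusted here; a real server must validate them.
        self.conn.execute(
            f"INSERT INTO {table} ({columns}) VALUES ({marks})",
            tuple(values.values()),
        )

    # Resource: schema://sqlite/{table}
    def read_schema(self, table: str) -> str:
        row = self.conn.execute(
            "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?",
            (table,),
        ).fetchone()
        if row is None:
            raise KeyError(table)
        return row[0]

    # Prompt template: analyze_data_template
    def analyze_data_template(self, table: str) -> str:
        return (f"Given this schema:\n{self.read_schema(table)}\n"
                f"Suggest three useful analyses of the '{table}' table.")


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
server = SQLiteServerSketch(conn)
server.insert_record("orders", {"total": 12.5})
print(server.sql_query("SELECT id, total FROM orders"))  # [(1, 12.5)]
print(server.read_schema("orders"))
```

The tools change or fetch data, the resource exposes the schema for reading, and the prompt template reuses that resource to give the model better context.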

Assessment Question

An MCP server for a customer service system provides:

Tools to send messages, escalate tickets and search the knowledge base.

Resources containing previous conversation transcripts and an FAQ database.

Prompt templates for handling common customer scenarios like refunds or complaints.

Which capability would you use to efficiently access a record of last month's customer conversations for analysis? (Answer: Resources, specifically the conversation transcripts).

Part 5: Communication Lifecycle & Transport

Understanding how MCP clients and servers establish connections, exchange messages and shut down is key to building reliable integrations, especially once you move beyond simple local setups.

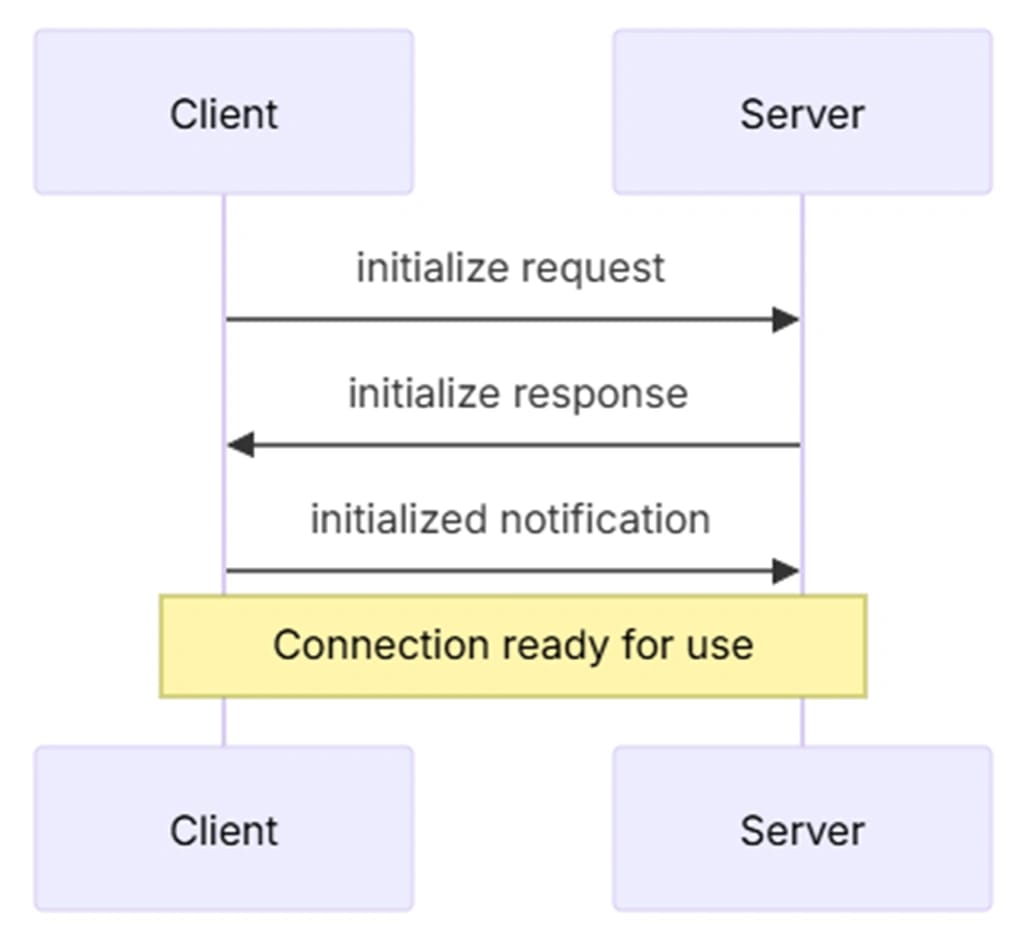

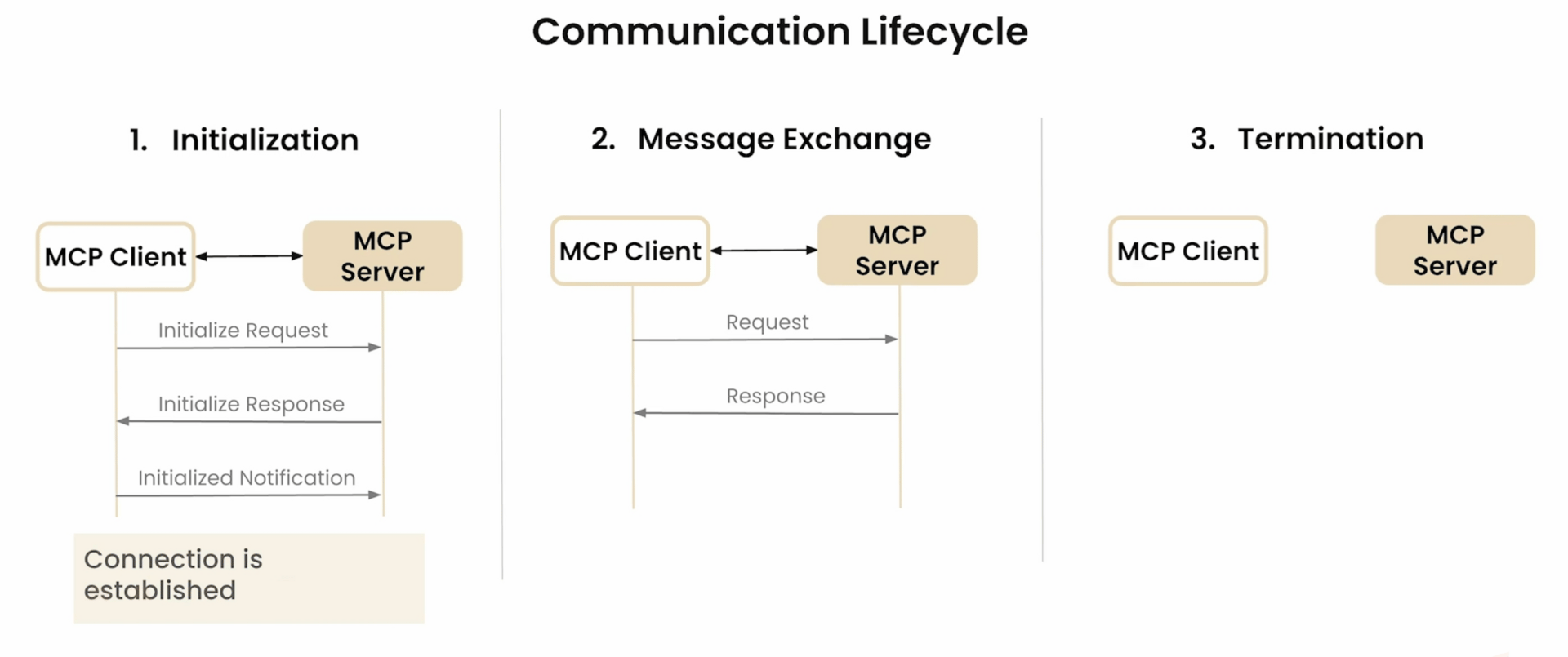

The Three-Phase Communication Lifecycle

Every interaction between an MCP client and server follows a predictable three-phase lifecycle:

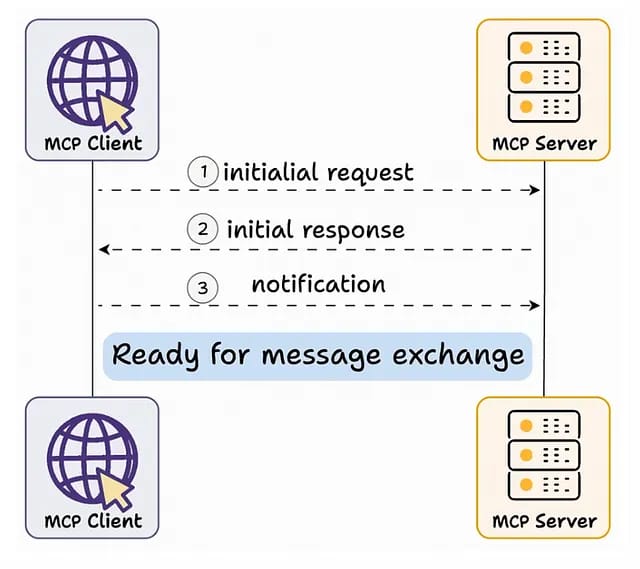

Phase 1: Initialization (The Handshake)

What Happens: The MCP Client (inside the Host application) initiates contact with the MCP Server to establish a connection.

The Handshake Process:

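The Client announces the protocol version it supports along with its own capabilities.

The Server responds with the protocol version it will use and the capability categories it supports (Tools, Resources, Prompt Templates). The Client can then request the detailed list of each.

Both sides agree on the protocol version. The communication method (transport) is already fixed by how the Client connected, so it is not negotiated here.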

Analogy: It's like two professionals meeting for the first time, exchanging business cards and agreeing on how they will work together before starting any actual work.
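The handshake can be sketched as a pair of JSON-RPC messages. The field names (`protocolVersion`, `capabilities`, `clientInfo`, `serverInfo`) and the `initialize` and `notifications/initialized` method names follow the published MCP specification. The supported-version list, the client and server names and the helper functions are assumptions for illustration only.

```python
SERVER_SUPPORTED_VERSIONS = ["2024-11-05", "2025-03-26"]  # assumed for the sketch


def initialize_request(request_id: int, version: str) -> dict:
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": version,
            "capabilities": {},
            "clientInfo": {"name": "example-host", "version": "0.1.0"},
        },
    }


def handle_initialize(request: dict) -> dict:
    """Echo the client's version if supported, else offer the server's latest."""
    wanted = request["params"]["protocolVersion"]
    if wanted in SERVER_SUPPORTED_VERSIONS:
        agreed = wanted
    else:
        agreed = SERVER_SUPPORTED_VERSIONS[-1]
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "protocolVersion": agreed,
            "capabilities": {"tools": {}, "resources": {}, "prompts": {}},
            "serverInfo": {"name": "example-server", "version": "0.1.0"},
        },
    }


response = handle_initialize(initialize_request(1, "2025-03-26"))
# After a successful response the client confirms with a notification (no id).
initialized = {"jsonrpc": "2.0", "method": "notifications/initialized"}
print(response["result"]["protocolVersion"])  # 2025-03-26
```

If the client asks for a version the server does not know, the server answers with one it does support and the client decides whether to continue.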

Phase 2: Message Exchange (The Conversation)

What Happens: This is the core interaction phase where the Client makes requests to use the Server's capabilities and the Server responds with results or performs actions.

Request-Response Pattern:

Client Request: "I need to use the `get_stock_price` tool with the argument `symbol='AAPL'`".

Server Response: The server processes the request (e.g., calls the Alpha Vantage API) and returns the current Apple stock price data.

Ongoing Interaction: This phase continues with potentially many back-and-forth message exchanges as the AI application uses different server skills to complete the user's overall goal.
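On the server side, each message in this phase boils down to "look at the method, find the tool, reply with the same id". The sketch below shows that routing in miniature. It is a simplification: the result payload is flattened compared with what a real MCP server returns, the `get_stock_price` body is a placeholder that returns no real price, and `handle_request` is a hypothetical helper. The error codes are the standard JSON-RPC 2.0 ones.

```python
def get_stock_price(symbol: str) -> dict:
    """Placeholder tool: a real server would call a market-data API here."""
    return {"symbol": symbol, "price": None, "note": "placeholder, no live data"}


TOOLS = {"get_stock_price": get_stock_price}


def handle_request(request: dict) -> dict:
    """Route one JSON-RPC request and reply with the same id."""
    base = {"jsonrpc": "2.0", "id": request.get("id")}
    if request.get("method") != "tools/call":
        return {**base, "error": {"code": -32601, "message": "Method not found"}}
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return {**base, "error": {"code": -32602, "message": "Unknown tool"}}
    return {**base, "result": tool(**params.get("arguments", {}))}


ok = handle_request({
    "jsonrpc": "2.0", "id": 7, "method": "tools/call",
    "params": {"name": "get_stock_price", "arguments": {"symbol": "AAPL"}},
})
bad = handle_request({"jsonrpc": "2.0", "id": 8, "method": "nope"})
print(ok["id"], ok["result"]["symbol"])   # 7 AAPL
print(bad["error"]["code"])               # -32601
```

Matching ids are what let a client keep several requests in flight and still pair each response with the question that triggered it.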

Phase 3: Termination (The Goodbye)

What Happens: Once the interaction is complete or if the connection is no longer needed, the Client and Server end their connection gracefully.

Why It Matters: Proper termination ensures that all resources are released cleanly, network connections are closed and no data is lost or left in an inconsistent state. It's the polite way to end the digital conversation.
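One common way to guarantee that cleanup happens, even when something fails mid-conversation, is a context manager. The sketch below is a generic Python pattern, not part of MCP itself; the `Connection` class and `session` helper are invented for illustration.

```python
from contextlib import contextmanager


class Connection:
    def __init__(self) -> None:
        self.open = True

    def close(self) -> None:
        self.open = False


@contextmanager
def session(connection: Connection):
    """Yield the connection and close it even if the body raises."""
    try:
        yield connection
    finally:
        connection.close()


conn = Connection()
try:
    with session(conn) as active:
        raise RuntimeError("tool call failed midway")
except RuntimeError:
    pass
print(conn.open)  # False
```

The connection ends up closed whether the work inside the `with` block succeeds or blows up.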

Transport Methods: How Messages Travel

The "transport" defines the underlying technical mechanism used for sending messages between the Client and the Server. The choice of transport primarily depends on where the Server is located relative to the Host application.

Local Servers (Standard I/O Transport)

Scenario: The MCP Server program and the Host application (containing the MCP Client) are running on the same physical machine.

Transport Method: Standard Input/Output (stdin/stdout). The Host essentially starts the Server process and communicates with it directly through the operating system's standard input and output streams.

The Analogy: Imagine you and a friend cooking together in the same kitchen at home.

You are the chef (MCP Server), preparing food.

Your friend is the diner (MCP Client), requesting dishes.

You communicate by simply talking or passing notes directly (standard I/O). There's no need for phones or complex delivery systems.

Characteristics:

✅ Fastest Communication: Very little slowdown as there's no network involved.

✅ Simplest Setup: Easiest to configure for local development.

✅ Most Secure: No external network access is required, making it naturally more secure.

❌ Limitation: Strictly limited to running on a single machine.

Use Cases: Ideal for development environments, personal AI assistants running locally or local automation tools where performance and privacy are most important.
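Here is a stripped-down sketch of the stdio idea: a parent process (playing the Host) starts a child process (playing the Server) and the two exchange one JSON message per line over stdin and stdout. The echo behavior, the `call_over_stdio` helper and the inline child script are assumptions made up for the demo; a real MCP server would implement the full protocol rather than echo the method name.

```python
import json
import subprocess
import sys

# Child process: reads one JSON-RPC message per line on stdin,
# writes one reply per line on stdout.
SERVER_SOURCE = r'''
import json, sys
for line in sys.stdin:
    request = json.loads(line)
    reply = {"jsonrpc": "2.0", "id": request["id"],
             "result": {"echo": request["method"]}}
    sys.stdout.write(json.dumps(reply) + "\n")
    sys.stdout.flush()
'''


def call_over_stdio(method: str) -> dict:
    """Spawn the server, send one request, read one reply, then shut down."""
    proc = subprocess.Popen(
        [sys.executable, "-c", SERVER_SOURCE],
        stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True,
    )
    try:
        request = {"jsonrpc": "2.0", "id": 1, "method": method}
        proc.stdin.write(json.dumps(request) + "\n")
        proc.stdin.flush()
        return json.loads(proc.stdout.readline())
    finally:
        proc.stdin.close()   # EOF ends the child's read loop
        proc.wait(timeout=5)
        proc.stdout.close()


reply = call_over_stdio("ping")
print(reply)  # {'jsonrpc': '2.0', 'id': 1, 'result': {'echo': 'ping'}}
```

No ports, no URLs, no network: the operating system's pipes are the entire transport, which is why this mode is limited to a single machine.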

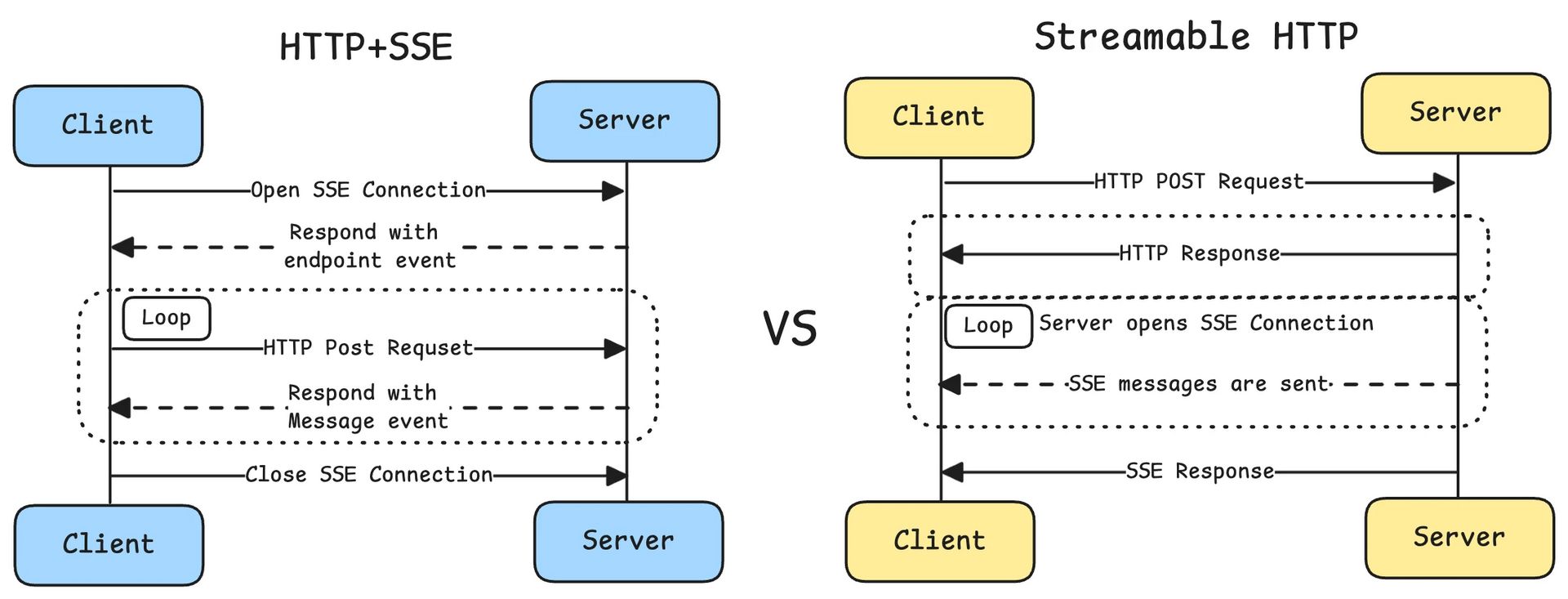

Remote Servers (HTTP-Based Transport)

Scenario: The MCP Server runs on a different machine, potentially in the cloud, as a web service or on another computer within a network.

Transport Method: Communication happens over a network, typically using HTTP-based protocols. There are two main approaches:

HTTP + SSE (Server-Sent Events) - Stateful

Analogy: A traditional sit-down restaurant experience.

You (Client) sit at a table and establish a connection with your waiter (Server).

The waiter remembers your entire order and conversation history throughout the meal (maintains state).

You can reference previous orders ("I'll have the same drink again"). The Server maintains context.

Characteristics:

Stateful: The Server remembers the context of the interaction across multiple requests within the same connection.

Persistent Connection: Uses Server-Sent Events (SSE) to maintain an open connection, allowing the Server to push updates to the Client in real-time.

Complex: Requires more server resources and complex state management to handle multiple stateful connections at the same time.

Use Cases: Interactive AI applications requiring real-time updates, collaborative tools or systems needing continuous monitoring where maintaining conversation history is crucial.

Streamable HTTP - Stateless (Preferred)

Analogy: A fast-food restaurant experience.

You (Client) place an order at the counter (make a request).

The cashier (Server) processes that single order.

If you want something else, you must place a completely new, separate order. The Server has no memory of your previous interaction.

Characteristics:

Stateless: Each request from the Client is treated independently by the Server; there is no memory kept between requests.

Scalable: Much easier to spread across multiple servers (load balancing) because no state needs to be shared.

Simple: Less complex state management required on the server side.

Important Note: Streamable HTTP is the preferred remote transport. Newer versions of the MCP specification adopt it in place of the older HTTP + SSE transport. While naturally stateless, it can also support stateful interactions if the application layers (Host and Server) manage the state themselves, and it can still stream responses over SSE when needed. This provides maximum flexibility.

Use Cases: Suitable for simple questions, one-off tool calls, high-scale API services and applications where state can be managed effectively by the Host.
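The restaurant analogies translate into code fairly directly. In the sketch below, one handler forgets everything between requests while the other looks up a session id from a header and remembers past orders. No real HTTP server is started; the handlers are plain functions and the "orders" are made up. The `Mcp-Session-Id` header name follows the MCP specification's Streamable HTTP transport; the rest is an assumption for illustration.

```python
import uuid

SESSIONS = {}  # session id -> list of past orders (server-side state)


def handle_stateless(headers: dict, order: str) -> dict:
    """Every request stands alone; nothing is remembered."""
    return {"served": order}


def handle_with_session(headers: dict, order: str) -> tuple:
    """Look up (or create) a session from a header and remember the order."""
    session_id = headers.get("Mcp-Session-Id") or uuid.uuid4().hex
    history = SESSIONS.setdefault(session_id, [])
    if order == "same again" and history:
        order = history[-1]
    history.append(order)
    return {"Mcp-Session-Id": session_id}, {"served": order}


reply_headers, first = handle_with_session({}, "espresso")
_, second = handle_with_session(reply_headers, "same again")
print(second)                                   # {'served': 'espresso'}
print(handle_stateless({}, "same again"))       # {'served': 'same again'}
```

The stateless handler can run on any machine behind a load balancer, while the session-aware one needs every request for a session to reach the same stored history.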

Why Understanding Transport Matters

While often handled automatically by frameworks, understanding transport methods becomes critical when:

Building Your Own MCP Servers: You must choose and implement the appropriate transport mechanism based on your server's intended use and deployment environment.

Deploying at Scale: Remote servers require different setup planning (networking, load balancing, security) than local servers.

Debugging Connection Issues: Knowing the transport method helps pinpoint problems (e.g., network errors vs. local process failures).

Optimizing Performance: Different transports have very different speeds and resource requirements.

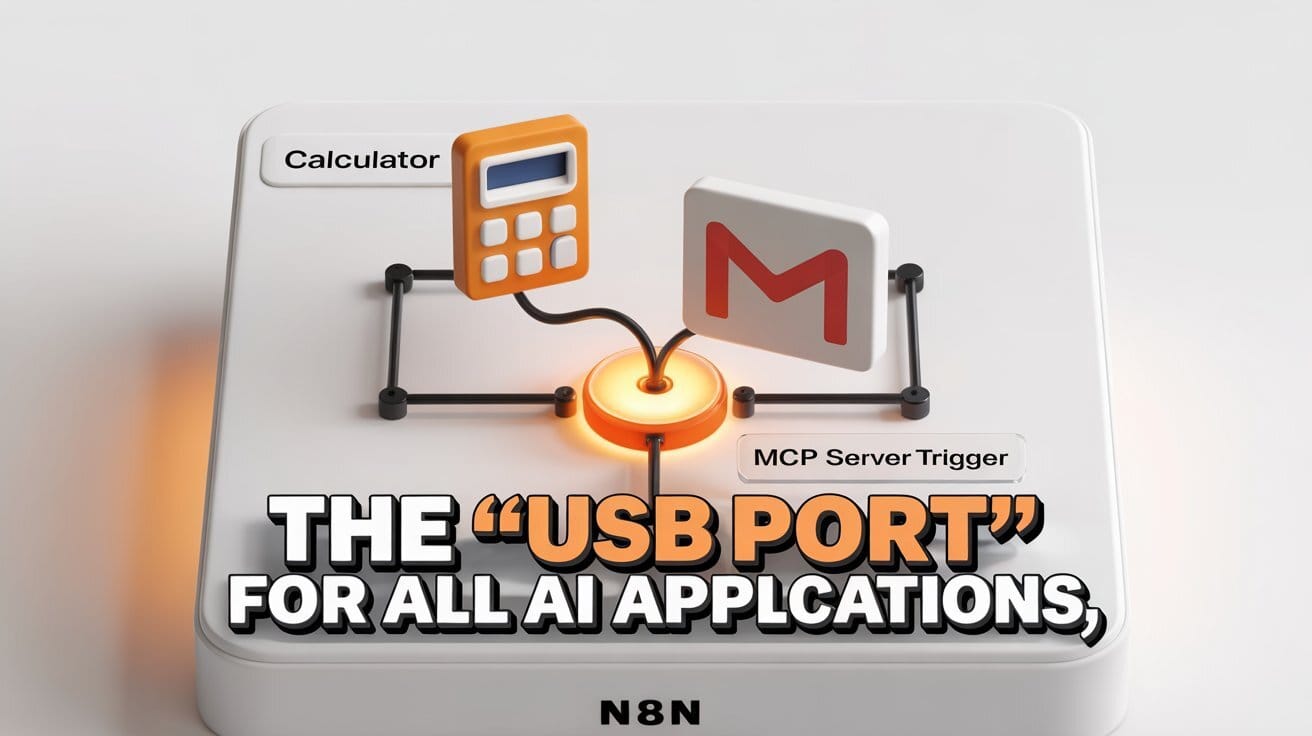

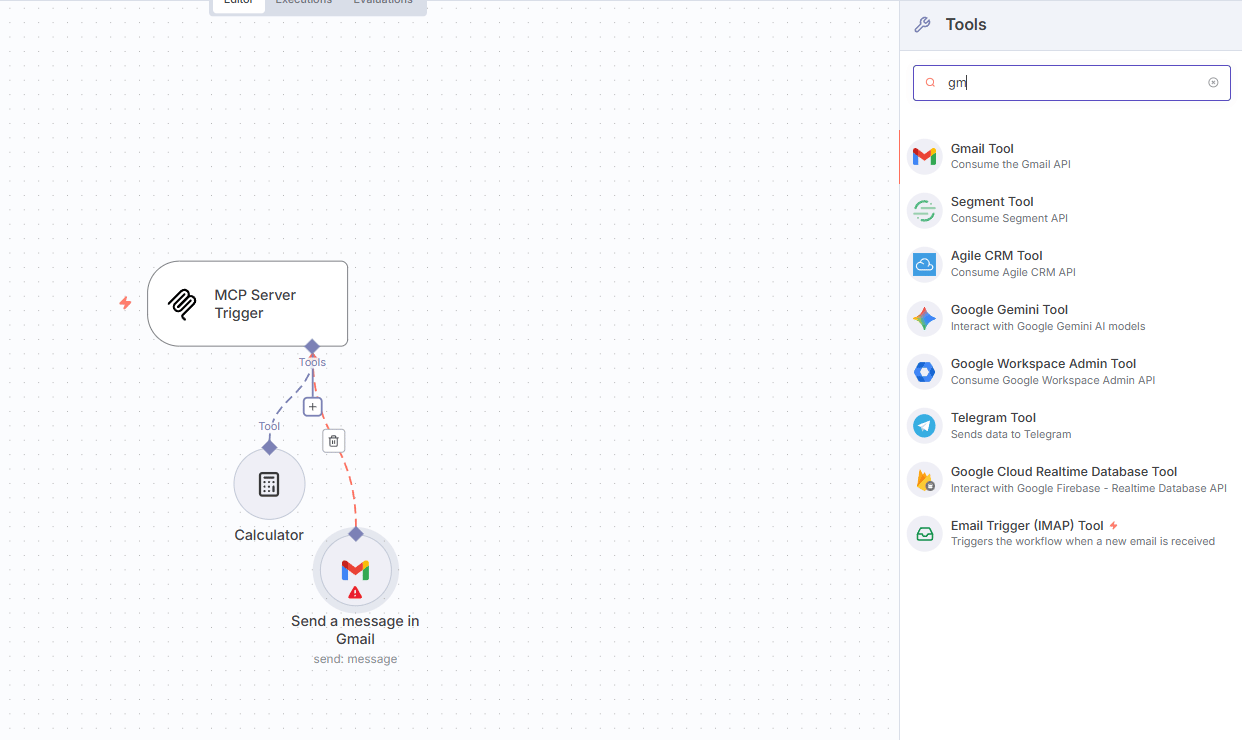

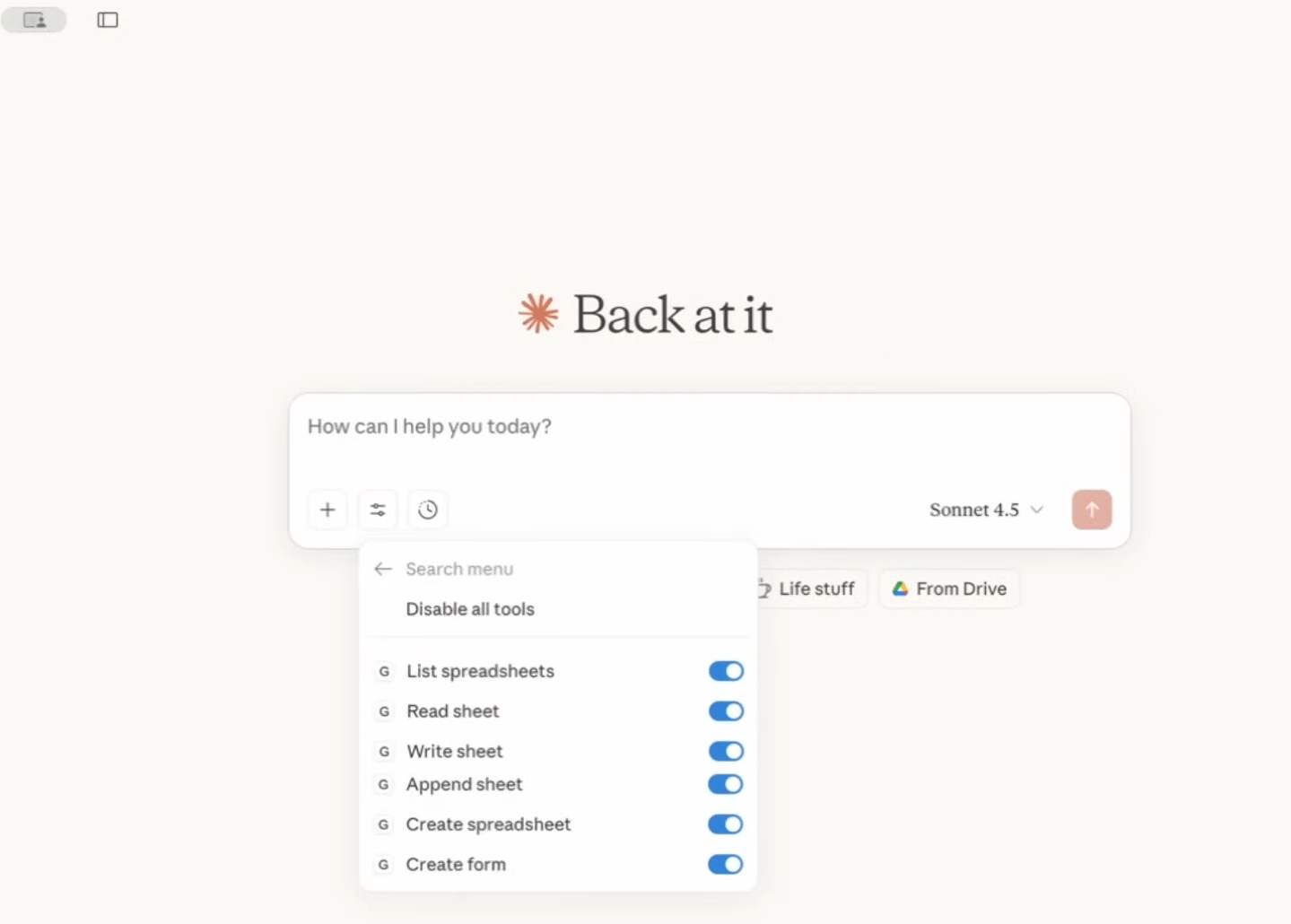

Part 6: Building MCP Servers (No-Code with n8n)

One of the most accessible ways to start building MCP servers is by using a visual workflow automation platform like n8n. This approach allows you to create functional MCP servers without writing a single line of code.

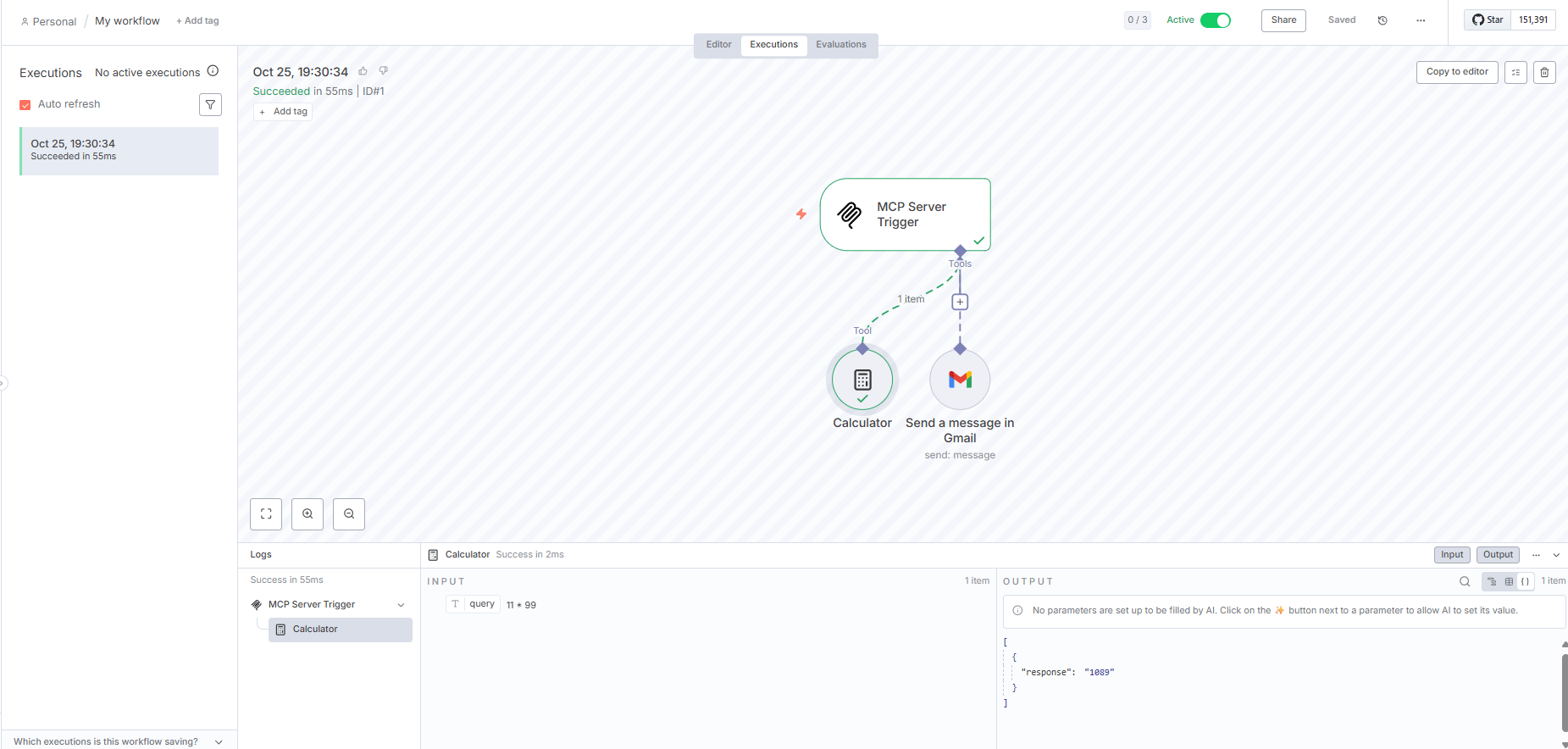

What We'll Build

Let’s build a simple yet practical MCP server using n8n that provides two distinct tools to AI applications:

Calculator: Performs basic mathematical operations.

Gmail Sender: Sends emails automatically based on AI instructions.

Step-by-Step Implementation

Create New n8n Workflow: Start with a fresh, blank workflow in your n8n instance. n8n provides an easy-to-use, visual drag-and-drop interface for designing automation sequences.

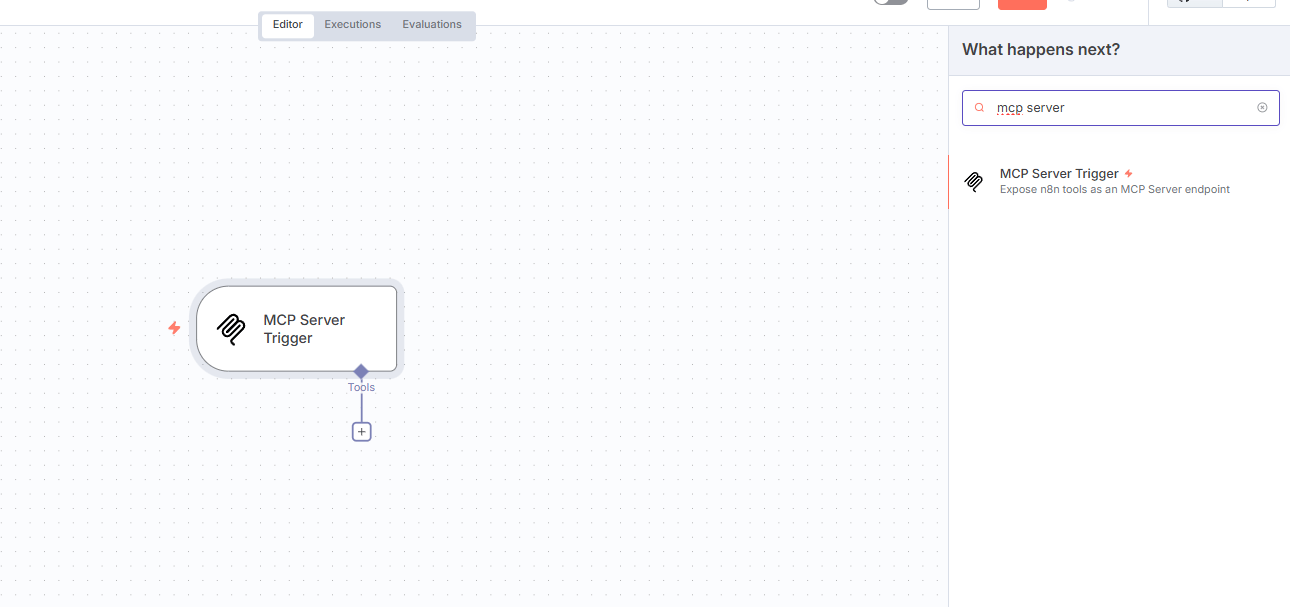

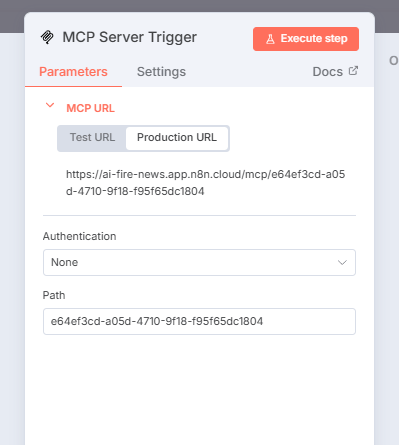

Add the MCP Server Trigger: Search for and add the "MCP Server" trigger node to your workflow. This special node acts as the entry point and exposes your entire n8n workflow as a functional MCP server that other applications can discover and connect to.

Add Your Tool Nodes: Now, add the specific functionalities (tools) you want your server to offer.

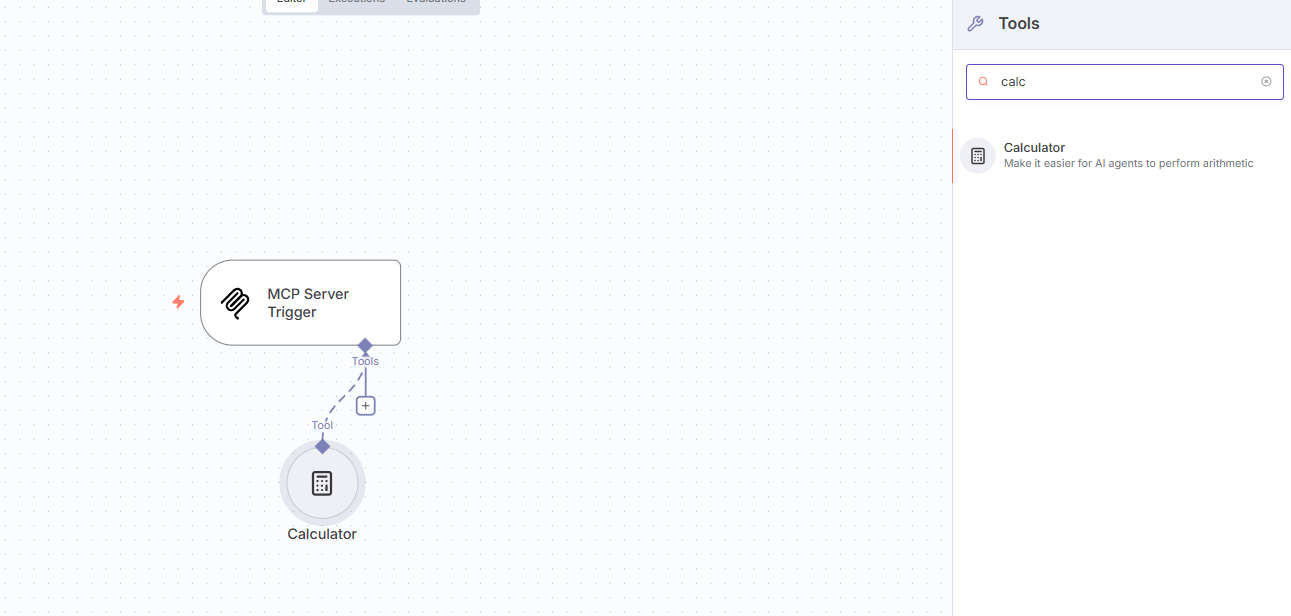

Add Calculator Tool:

Drag the standard "Calculator" node onto your workflow canvas.

Configure it to accept mathematical expressions as input (likely using n8n's expression editor to pull data from the trigger).

Set the output format to deliver the numerical result.

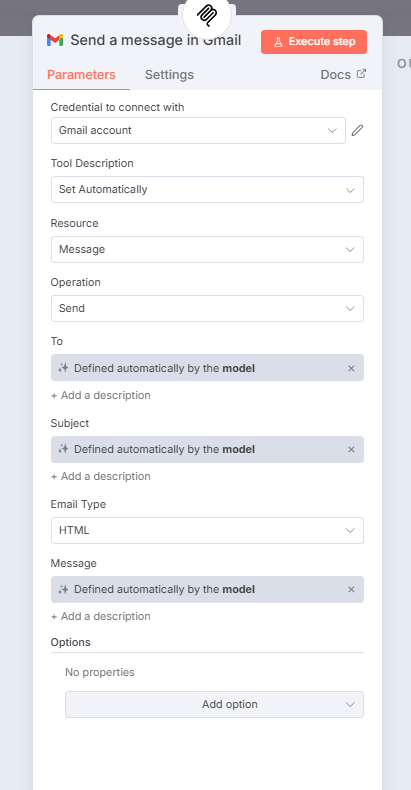

Add Gmail Tool:

Drag the "Gmail" node onto the canvas.

Crucially: Click "Create New Credential" and sign into the Google account you want the AI to send emails from. This securely authorizes n8n to access Gmail on your behalf.

Select the operation: "Send".

Configure Dynamic Parameters: This is key for AI integration. For fields like "To" (recipient), "Subject" and "Message", toggle the option to "Let Model Decide". This allows the calling AI model (like Claude or ChatGPT) to dynamically determine these values based on the user's request, rather than you hard-coding them. Set the email type to HTML for potentially richer formatting.

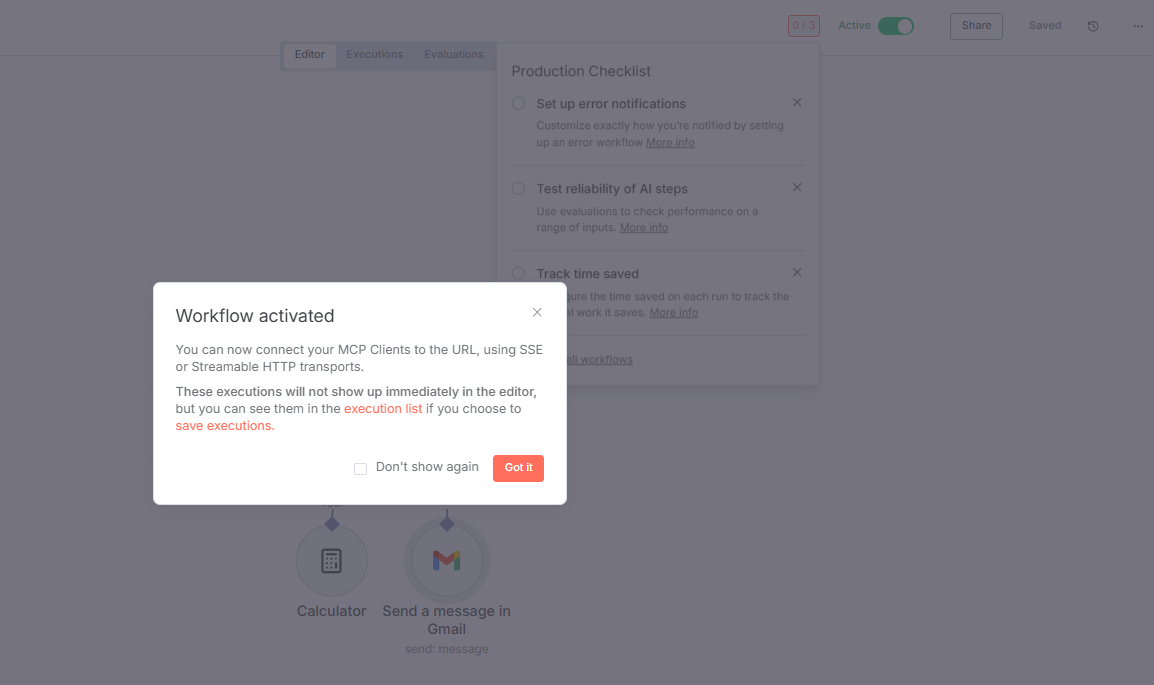

Activate and Deploy: Your workflow now defines your MCP server's capabilities.

Save your n8n workflow.

Toggle its status to "Active". This makes the server live.

Go back to the MCP Server trigger node and copy the "Production URL". This URL is the unique endpoint address for your newly created MCP server.

The Magic: In just a few minutes, with zero code, your MCP server is now live and ready for any compatible AI application (Host) to connect to and utilize its Calculator and Gmail Sender tools.

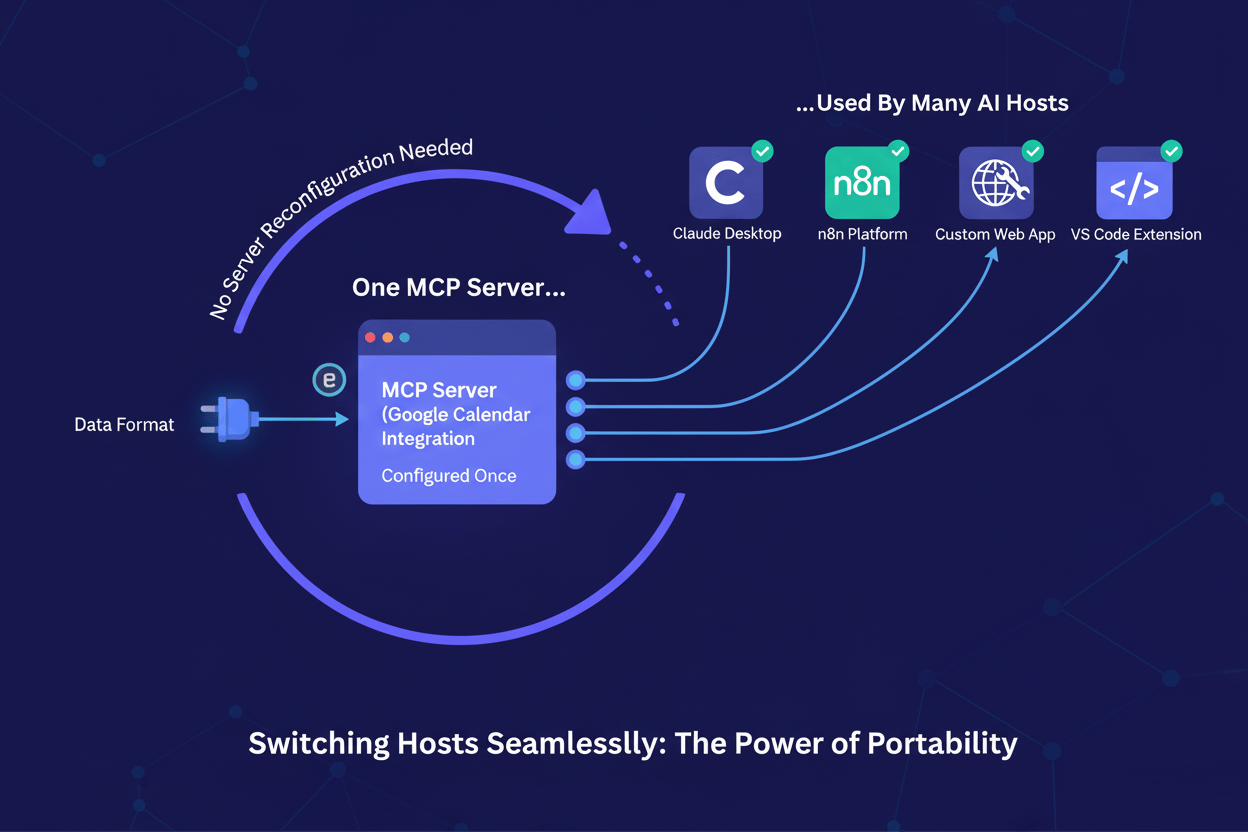

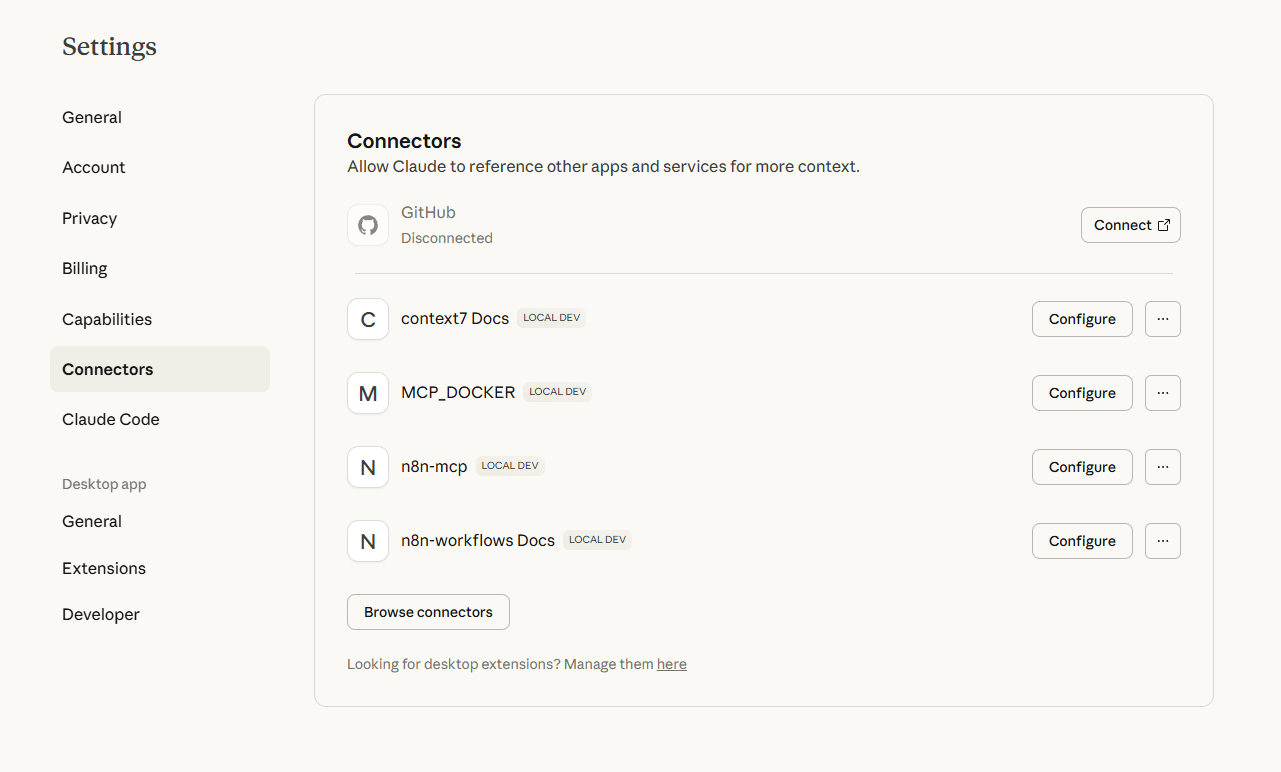

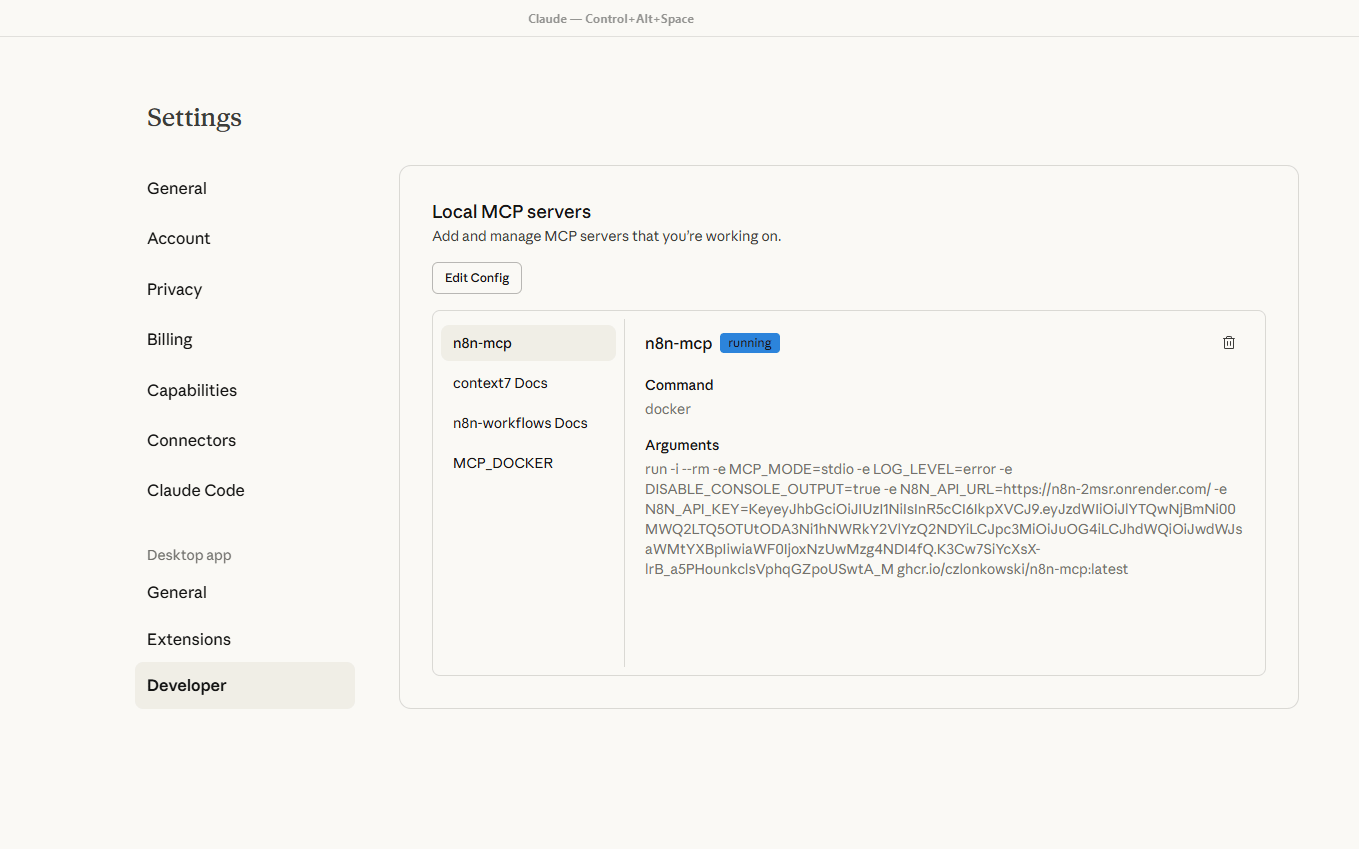

Connecting to Claude Desktop (Example Host)

Let's connect this n8n-based MCP server to the Claude Desktop application.

Access Developer Mode in Claude Desktop:

Open Claude Desktop.

Navigate to the Help menu.

Type "developer" and select "Switch to developer mode".

Find and open the "Claude Desktop config file" (this is where you add external servers).

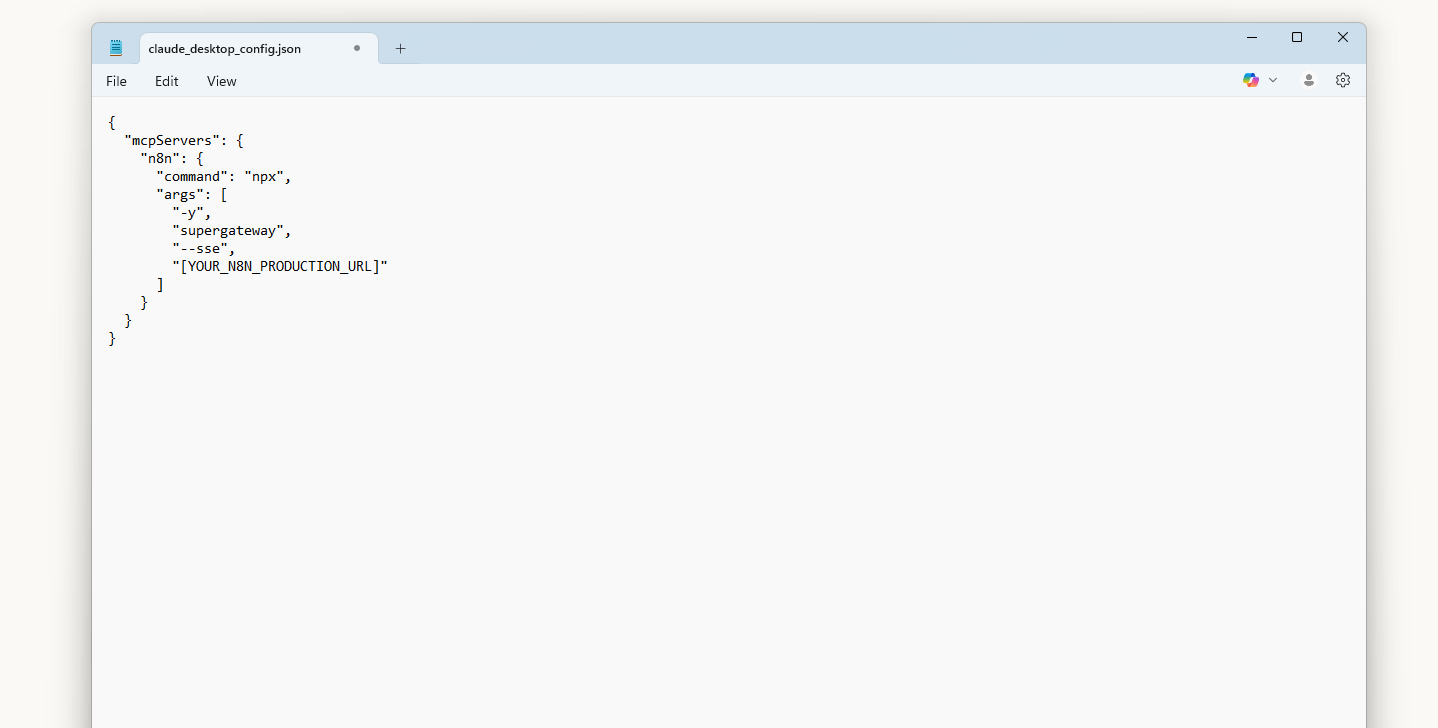

Configure the MCP Server in Claude: Add the following configuration block to your config file, replacing `[YOUR_N8N_PRODUCTION_URL]` with the URL you copied from n8n:

    {
      "mcpServers": {
        "n8n": {
          "command": "npx",
          "args": [
            "-y",
            "supergateway",
            "--sse",
            "[YOUR_N8N_PRODUCTION_URL]"
          ]
        }
      }
    }

Important: For n8n MCP servers, the correct transport setting is typically sse (Server-Sent Events).
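If you would rather generate that block than hand-edit JSON, a few lines of Python will do it. This sketch simply reproduces the structure shown above for a given URL. The `claude_config_for` helper and the example URL are made up, and the script only prints the JSON; it does not locate or modify your actual config file.

```python
import json


def claude_config_for(url: str, name: str = "n8n") -> dict:
    """Build the mcpServers entry shown above for one SSE endpoint."""
    if not url.startswith(("http://", "https://")):
        raise ValueError("expected an http(s) URL")
    return {
        "mcpServers": {
            name: {
                "command": "npx",
                "args": ["-y", "supergateway", "--sse", url],
            }
        }
    }


# Placeholder URL; paste the Production URL from your own MCP Server trigger.
config = claude_config_for("https://example.invalid/mcp/placeholder/sse")
print(json.dumps(config, indent=2))
```

Generating the block this way avoids the most common failure: a stray comma or missing bracket that stops the host from loading the config file.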

Restart and Verify:

Save the configuration file.

Restart Claude Desktop completely.

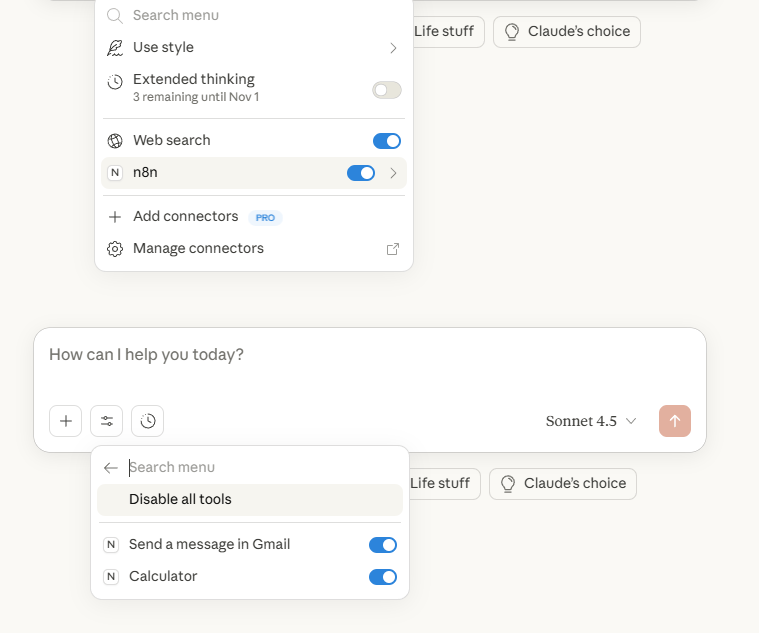

Check the available tools within Claude (often in a specific tools menu or sidebar). You should now see "Calculator" and "Send message in Gmail" listed as available actions, sourced from your n8n server.

Testing Your MCP Server

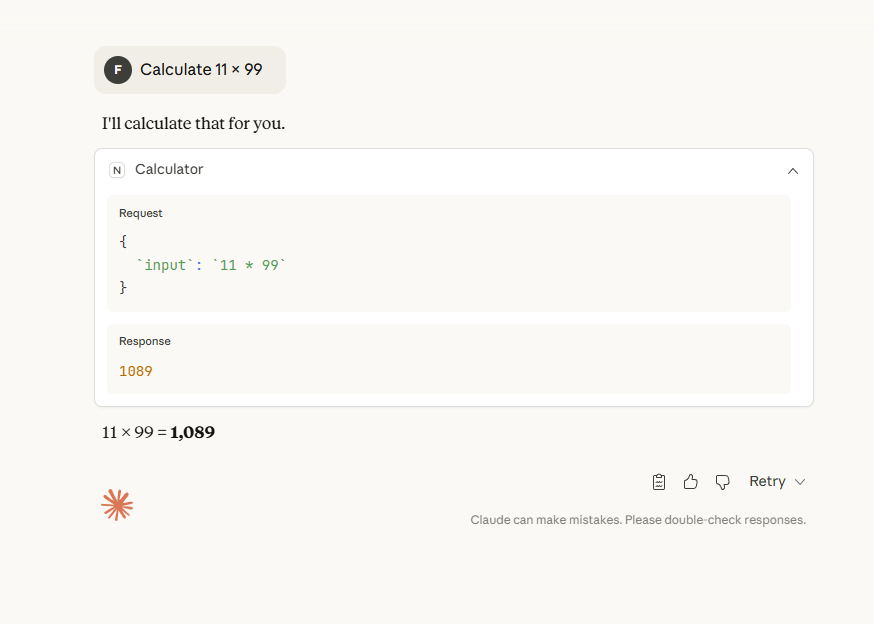

Now, test the integration with natural language prompts in Claude.

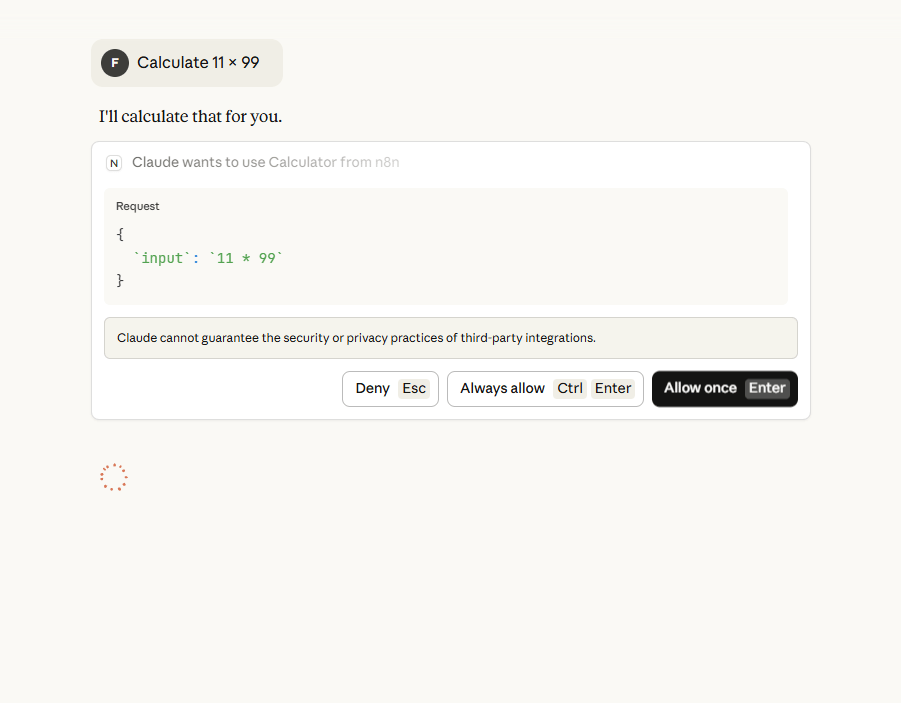

Test 1: Calculator

Prompt Claude: "Calculate 11 × 99"

What Happens:

Claude recognizes the need for calculation and identifies the "Calculator" tool provided by your n8n MCP server.

It accesses its internal MCP Client.

The Client calls your n8n MCP server's calculator tool via the production URL.

n8n executes the calculation node.

n8n sends the result (1089) back to Claude's Client.

Claude responds to you: "The result of 11 multiplied by 99 is 1,089".
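For a feel of what a calculator tool does once the request arrives, here is a small stand-alone sketch. It is not n8n's Calculator node; it is an assumed, minimal evaluator that supports only `+`, `-`, `*` and `/`, and walks the parsed expression instead of calling `eval()` so arbitrary code cannot sneak in.

```python
import ast
import operator

OPERATORS = {
    ast.Add: operator.add, ast.Sub: operator.sub,
    ast.Mult: operator.mul, ast.Div: operator.truediv,
}


def calculate(expression: str) -> float:
    """Evaluate +, -, *, / on numbers without using eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in OPERATORS:
            return OPERATORS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("unsupported expression")

    # Accept the "×" sign used in the prompt as multiplication.
    tree = ast.parse(expression.replace("×", "*"), mode="eval")
    return walk(tree.body)


print(calculate("11 × 99"))  # 1089
```

Handing arithmetic to a tool like this gives a deterministic answer, whereas a language model asked to multiply on its own can occasionally slip.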

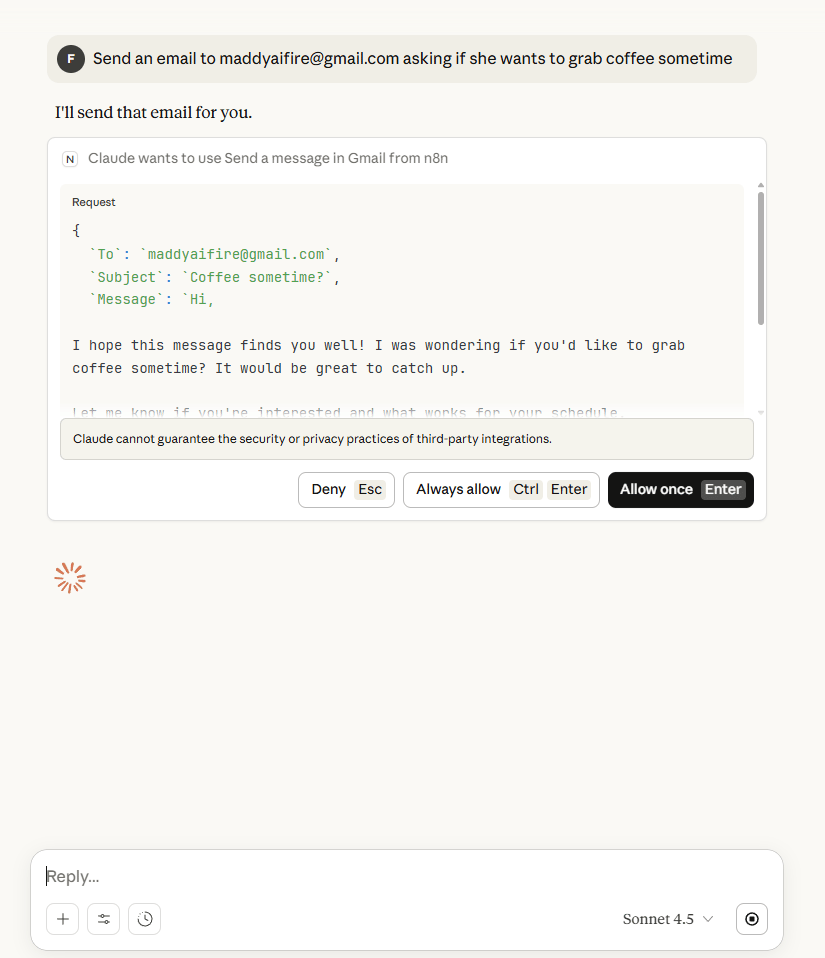

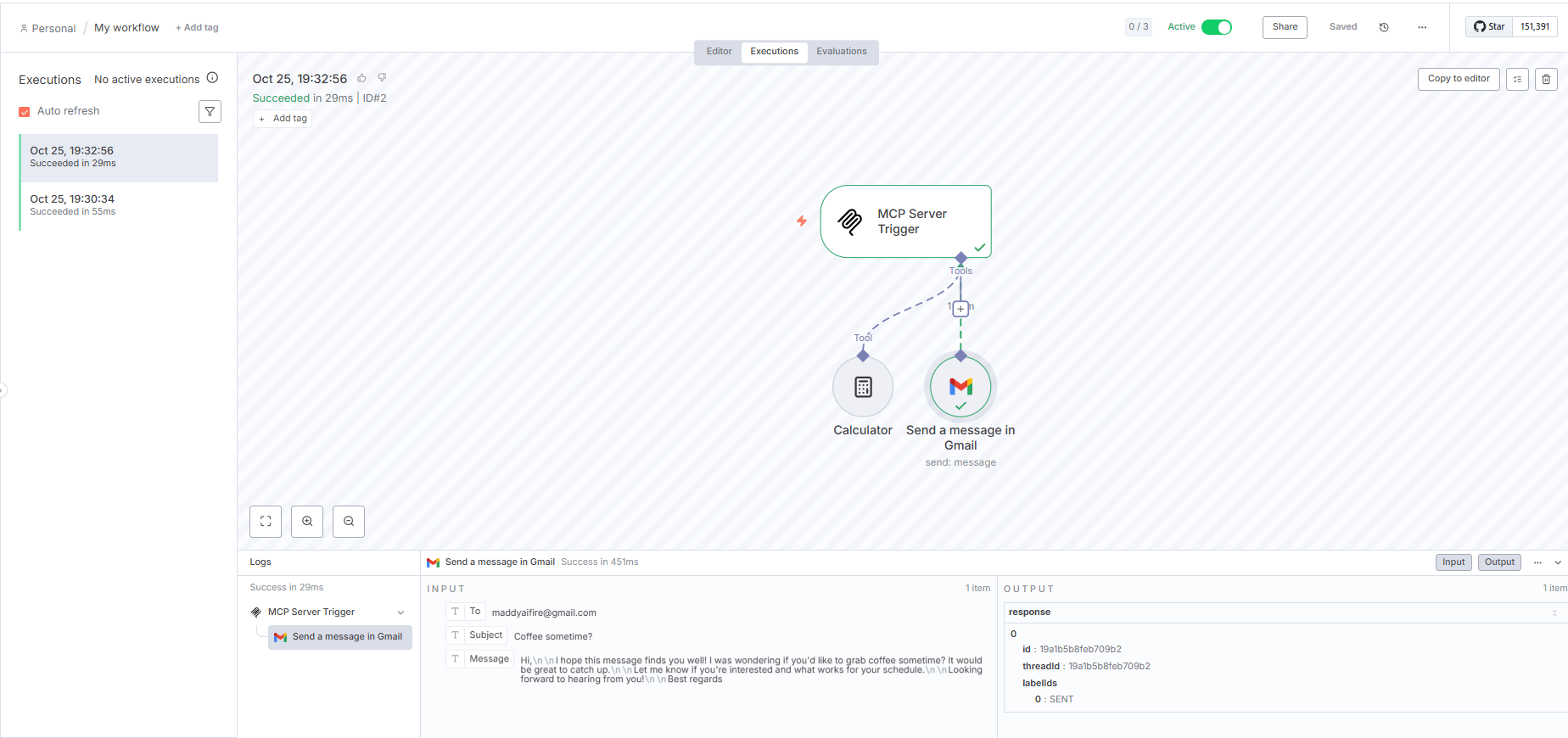

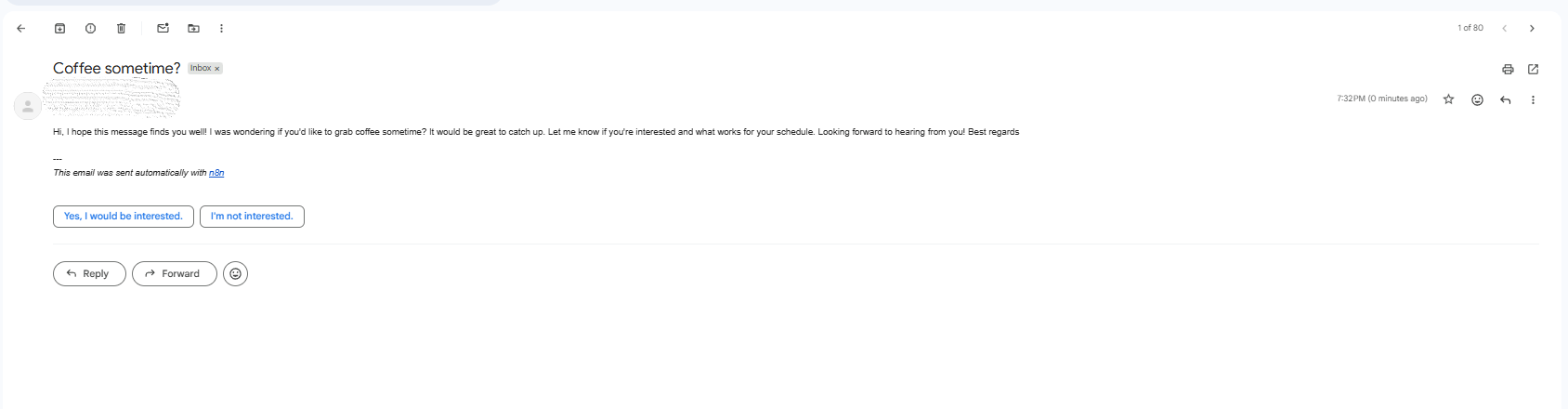

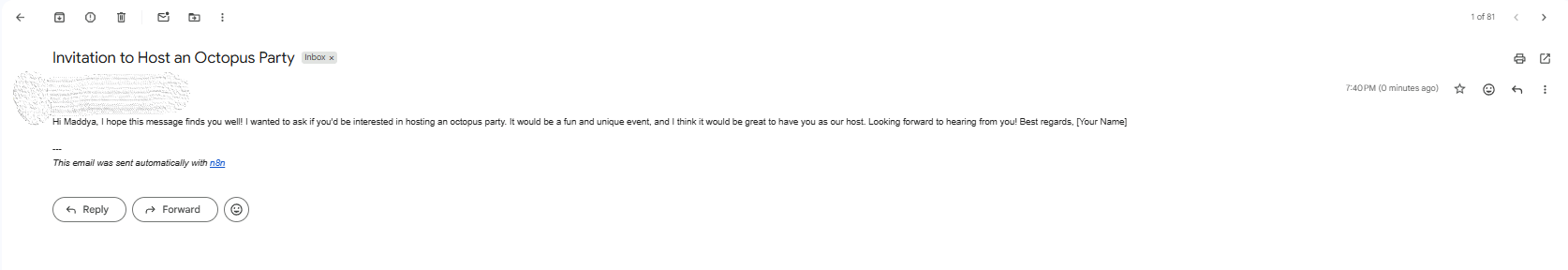

Test 2: Email Sender

Prompt Claude: "Send an email to [email protected] asking if she wants to grab coffee sometime"

What Happens:

Claude identifies the need to send an email and selects the "Send message in Gmail" tool from your n8n server.

It requests your permission to use this external tool (click "Allow once").

Claude's MCP Client sends the request (recipient, inferred subject/message) to your n8n server.

n8n's Gmail node composes a professional email using the dynamic data provided by Claude.

The email is automatically sent via the authorized Gmail account.

Email Result (Example):

Hi, I hope this message finds you well! I was wondering if you'd like to grab coffee sometime? It would be great to catch up. Let me know if you're interested and what works for your schedule. Looking forward to hearing from you! Best regards

---

This email was sent automatically with n8n

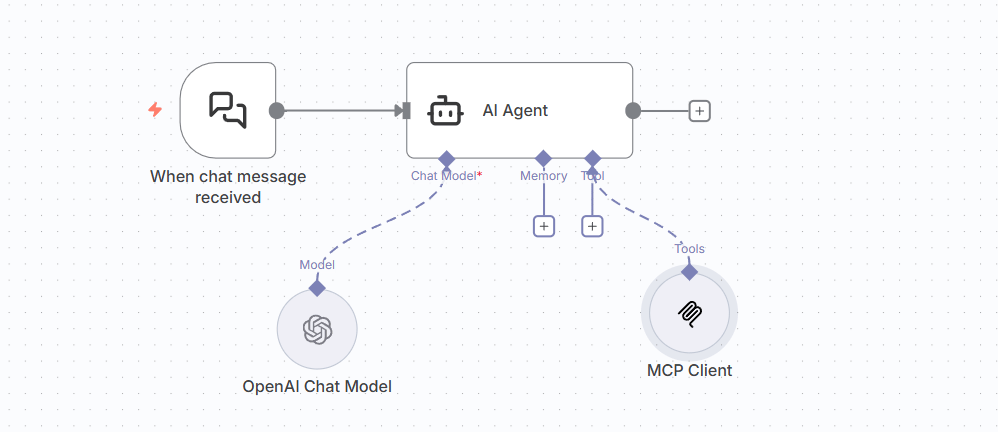

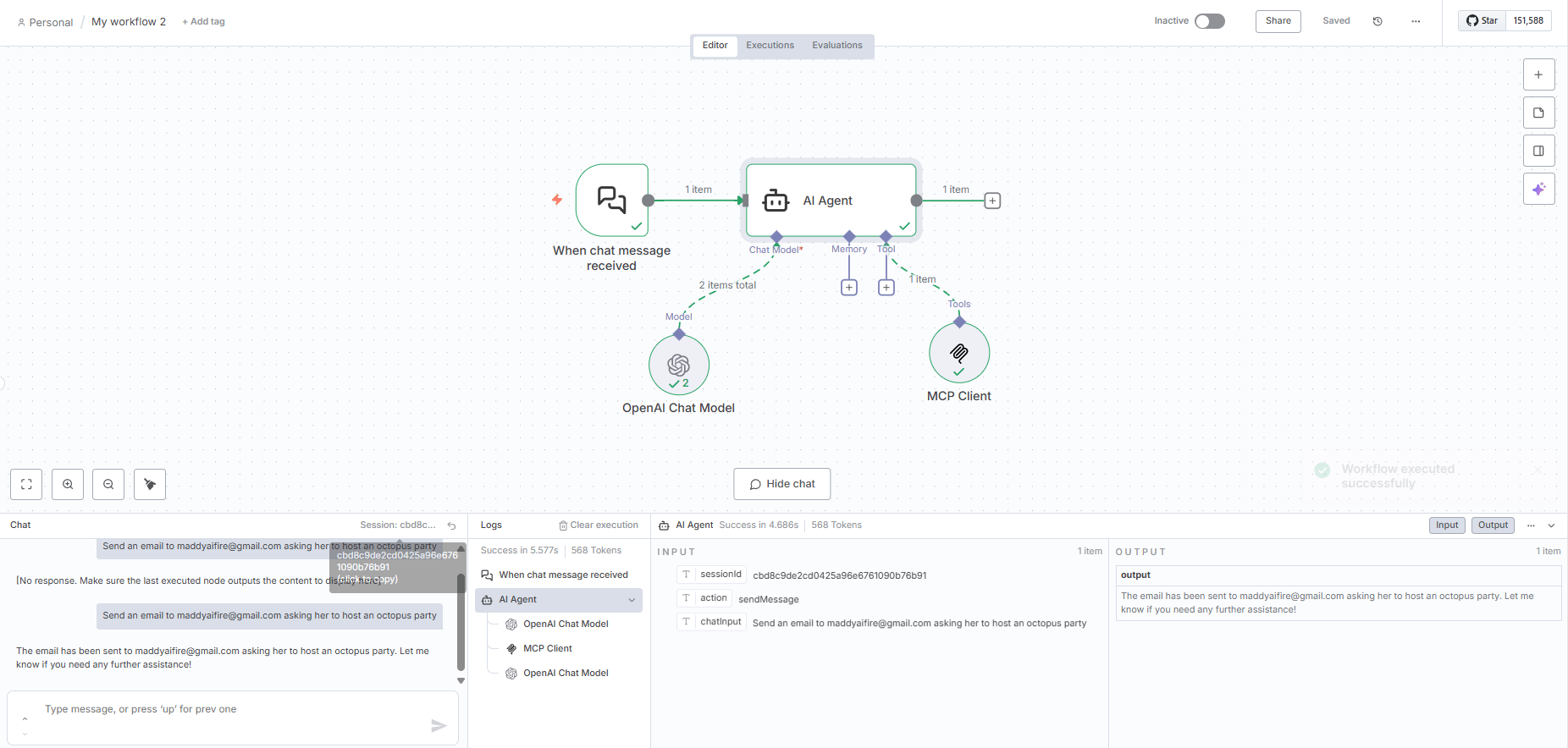

Switching Hosts: Using the Same Server with an n8n AI Agent

To demonstrate MCP's portability, you can use the exact same n8n MCP server (the one you just built) within another n8n workflow using its AI Agent node.

Create a new n8n workflow and add an AI Agent node.

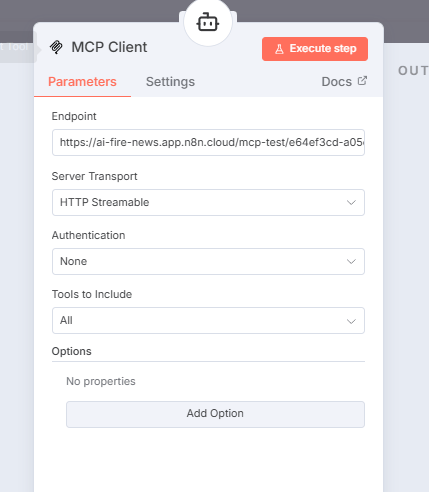

Add the MCP Client tool to this agent node.

Configure the endpoint:

Paste your first n8n workflow's production URL (the MCP server endpoint).

Select the transport method: "HTTP Streamable" (this is often the preferred method when n8n acts as the client).

Test: Prompt the AI agent within this second workflow: "Send an email to [email protected] asking her to host an octopus party".

Result: The second workflow’s AI Agent uses its MCP Client tool to call the first workflow’s MCP Server, successfully sending the email. This perfectly demonstrates MCP's core principle: the same server, providing the same capabilities, accessed seamlessly by different host applications.

No-Code Limitations

While incredibly accessible, building MCP servers with no-code tools like n8n has limitations compared to coding them directly:

❌ Cannot add custom Resources: You are generally limited to exposing Tools (actions), not read-only data Resources.

❌ Cannot define Prompt Templates: You cannot embed expert-level prompt structures directly into the server.

❌ Limited to Pre-built Tool Nodes: You can only expose functionalities that already exist as nodes within the no-code platform (though n8n's HTTP Request node offers significant flexibility).

✅ Perfect for simple tool integration: Ideal for quickly exposing existing API functionalities.

✅ Great for learning MCP concepts: An excellent way to understand the HCS architecture hands-on.

✅ Fast prototyping and deployment: Get functional servers running in minutes.

Part 7: Building MCP Servers (With Code)

For maximum flexibility and control, building MCP servers directly with code using official SDKs unlocks the full potential of the protocol, including Tools, Resources and Prompt Templates.

What We'll Build

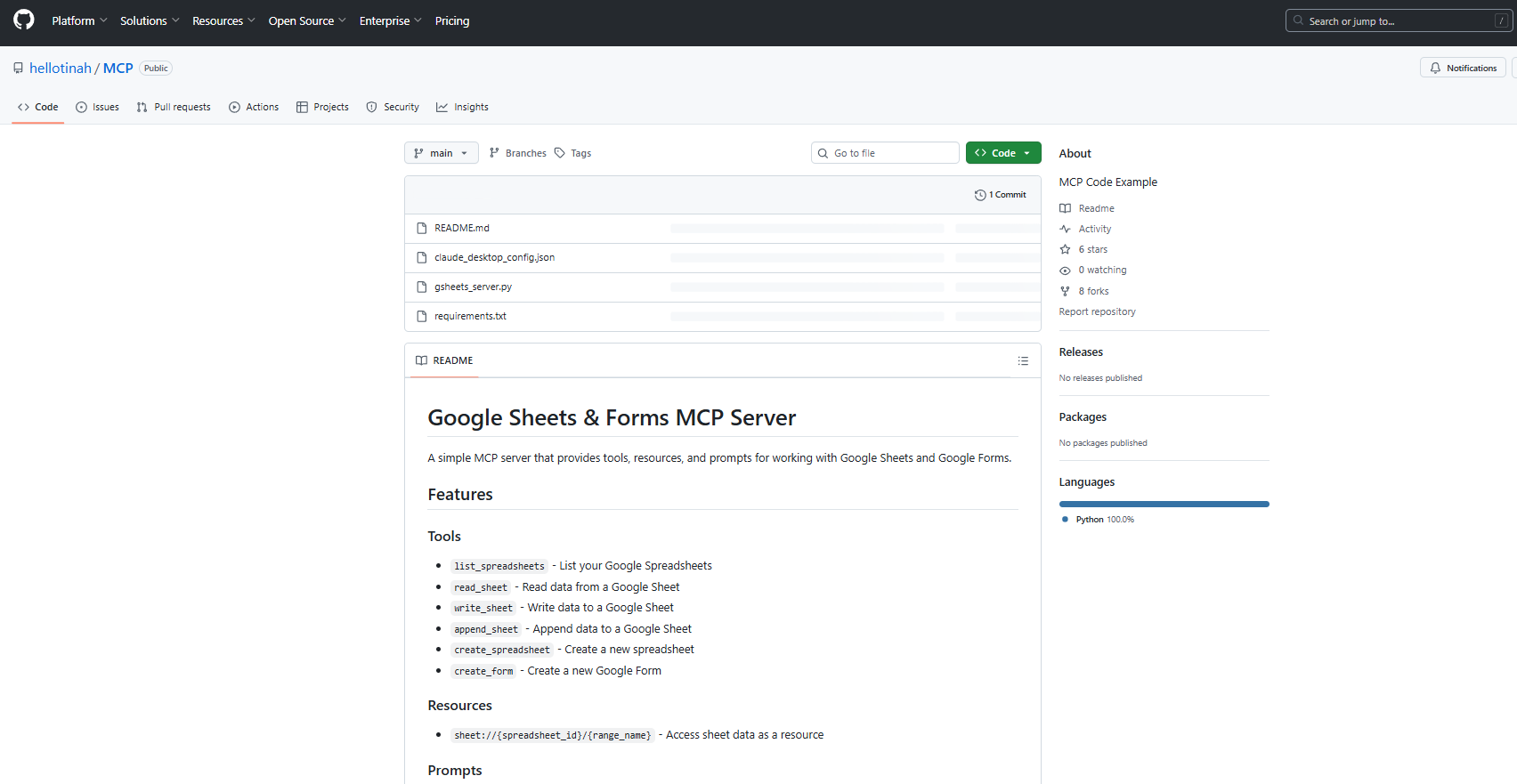

Let's outline how to build a more sophisticated Google Sheets MCP server using Python, showcasing all three TRP capabilities. This server will provide AI agents with comprehensive abilities to interact with Google Sheets.

The Architecture

Programming Language: Python is a popular choice due to readily available MCP SDKs and extensive libraries for interacting with services like Google Sheets.

Key Components:

Server Initialization: Setting up the basic MCP server framework and handling authentication with Google Sheets API.

Tool Definitions: Creating Python functions, marked with decorators, to be offered as MCP Tools (e.g., read, write, append).

Resource Definitions: Defining functions to expose sheet data as read-only MCP Resources.

Prompt Template Definitions: Crafting expert-level prompt structures for common Sheets-related tasks like analysis or reporting.

Transport Configuration: Specifying how clients (Host applications) will connect to this server (e.g., Standard I/O for local use, HTTP for remote access).

Code Overview (High-Level)

While the full code involves specific library imports and authentication flows, the core structure for defining capabilities uses simple decorators provided by the MCP SDK.

Tool Definition Example

Key Pattern: The @mcp_tool decorator tells the MCP framework that the decorated Python function should be offered as a usable Tool, and the framework handles the protocol communication for you. The function's docstring often serves as the natural language description for the AI.
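Here is a minimal sketch of that pattern. To keep it runnable with the standard library alone, `mcp_tool`, `TOOLS` and `call_tool` are hypothetical stand-ins written for this example, and the spreadsheet is an in-memory list rather than a real Google Sheet. In the official Python SDK, the equivalent decorator is `@mcp.tool()` on a `FastMCP` instance.

```python
import inspect
from typing import Callable, Dict

# Hypothetical stand-in for an SDK registry: tool name -> metadata.
TOOLS: Dict[str, dict] = {}


def mcp_tool(func: Callable) -> Callable:
    """Register func as a tool, using its docstring as the description."""
    signature = inspect.signature(func)
    TOOLS[func.__name__] = {
        "description": inspect.getdoc(func) or "",
        "parameters": list(signature.parameters),
        "handler": func,
    }
    return func  # the function itself is left unchanged


# In-memory stand-in for a spreadsheet, so no Google credentials are needed.
SHEET = [["name", "score"], ["Ada", "9"]]


@mcp_tool
def append_row(values: list) -> int:
    """Append a row to the sheet and return the new row count."""
    SHEET.append(list(values))
    return len(SHEET)


def call_tool(name: str, **arguments):
    """Dispatch by tool name, as a server would for an incoming request."""
    return TOOLS[name]["handler"](**arguments)


new_count = call_tool("append_row", values=["Grace", "10"])
print(TOOLS["append_row"]["description"], new_count)
```

The decorator only records metadata; the function stays an ordinary Python function, which makes it easy to unit-test without any MCP machinery.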

Resource Definition Example

The Difference: Resources (@mcp_resource) are designed for efficient, read-only access to potentially large datasets. The server might cache this data, avoiding repeated, expensive API calls needed by Tools.
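The caching idea can be sketched as follows. Everything here is an assumption for illustration: `mcp_resource` is a stand-in decorator, the `sheets://feedback/headers` URI and the column names are made up, and the 60-second freshness window is arbitrary. The official Python SDK offers a comparable `@mcp.resource(uri)` decorator, but caching is something you would add yourself.

```python
import time
from typing import Callable, Dict, Tuple

RESOURCES: Dict[str, Callable[[], list]] = {}
_CACHE: Dict[str, Tuple[float, list]] = {}
CACHE_TTL_SECONDS = 60.0  # assumed freshness window
STATS = {"api_calls": 0}  # counts simulated upstream requests


def mcp_resource(uri: str):
    """Register a zero-argument reader under a resource URI."""
    def decorator(func):
        RESOURCES[uri] = func
        return func
    return decorator


@mcp_resource("sheets://feedback/headers")
def feedback_headers() -> list:
    """Pretend to fetch column headers from the Google Sheets API."""
    STATS["api_calls"] += 1
    return ["timestamp", "rating", "comment"]


def read_resource(uri: str) -> list:
    """Serve from cache while the entry is fresh; otherwise call the reader."""
    now = time.monotonic()
    cached = _CACHE.get(uri)
    if cached is not None and now - cached[0] < CACHE_TTL_SECONDS:
        return cached[1]
    data = RESOURCES[uri]()
    _CACHE[uri] = (now, data)
    return data


first = read_resource("sheets://feedback/headers")
second = read_resource("sheets://feedback/headers")
print(first, STATS["api_calls"])
```

Two reads trigger only one simulated API call, which is the saving the paragraph above describes.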

Prompt Template Example

The Value: By providing these expert-crafted templates, the server ensures that users (or AI agents) can perform sophisticated tasks like data analysis consistently and effectively, without needing to be prompt engineering experts themselves.
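A prompt template is, at its core, a function that returns carefully structured text. The sketch below shows one way that could look. The `mcp_prompt` decorator and the wording of the template are assumptions written for this example; in the official Python SDK the comparable decorator is `@mcp.prompt()`.

```python
from string import Template
from typing import Callable, Dict

# Hypothetical stand-in registry: prompt name -> function that renders it.
PROMPTS: Dict[str, Callable[..., str]] = {}


def mcp_prompt(func):
    """Register func as a named prompt template."""
    PROMPTS[func.__name__] = func
    return func


# Illustrative wording only; a real template would encode your own expertise.
_ANALYSIS = Template(
    "You are a careful data analyst.\n"
    "Analyze the spreadsheet '$sheet' over range $cell_range.\n"
    "1. Report summary statistics.\n"
    "2. List the key themes.\n"
    "3. Suggest follow-up actions."
)


@mcp_prompt
def analyze_sheet_data(sheet: str, cell_range: str) -> str:
    """Fill the analysis template with the user's specifics."""
    return _ANALYSIS.substitute(sheet=sheet, cell_range=cell_range)


prompt = PROMPTS["analyze_sheet_data"]("Anonymous feedback", "A1:G50")
print(prompt)
```

Because the structure lives on the server, every Host that connects gets the same instructions, and the user only supplies the sheet name and range.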

Real-World Usage Demo

Once your code-based Google Sheets MCP server is running, you can connect it to a host application like Claude Desktop.

Connecting to Claude Desktop:

Configure the server's endpoint and transport method in the Claude Desktop config file.

Restart Claude.

Verify the connection by checking if the new "Google Sheets" tools and templates appear in Claude's available actions.

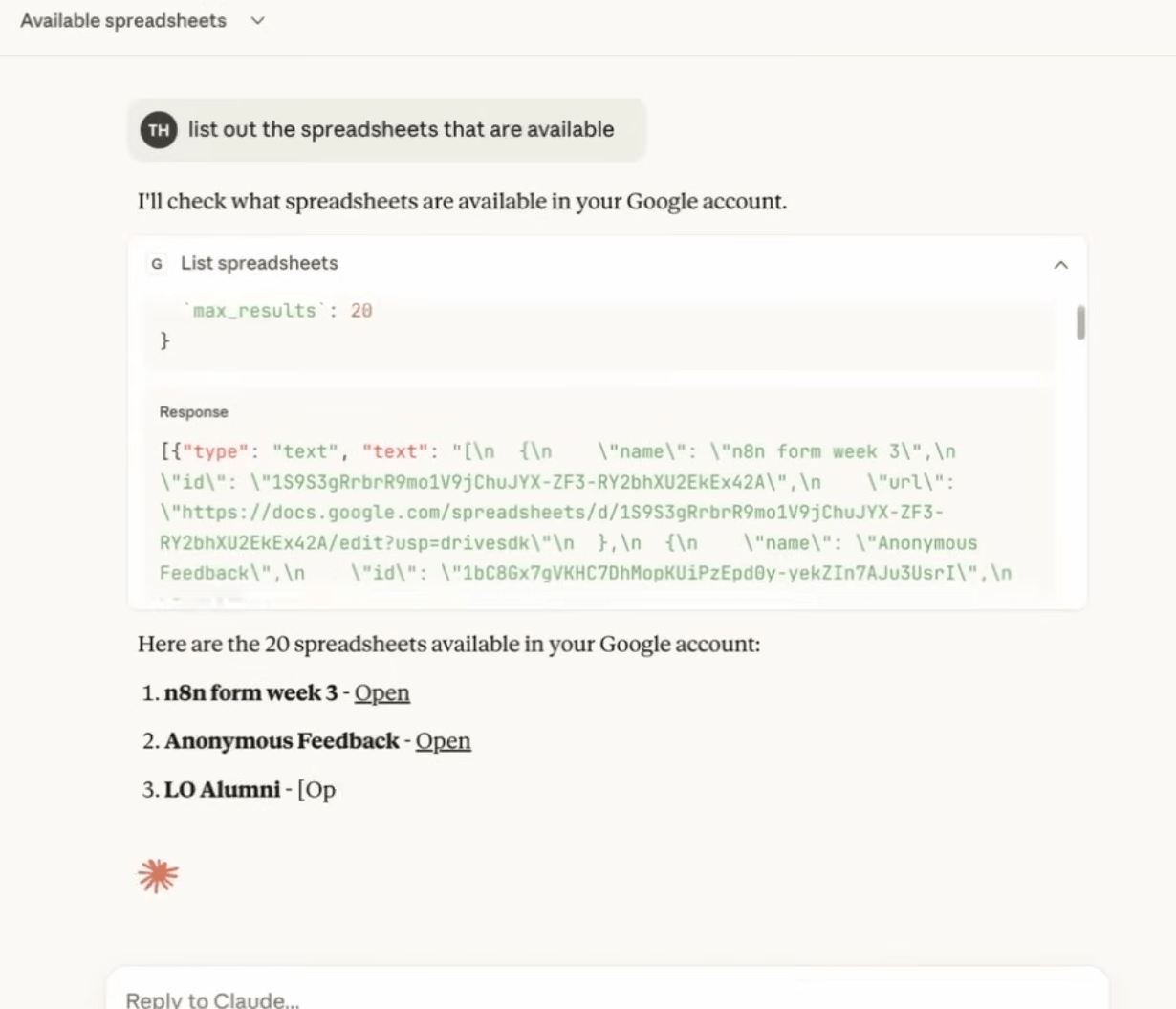

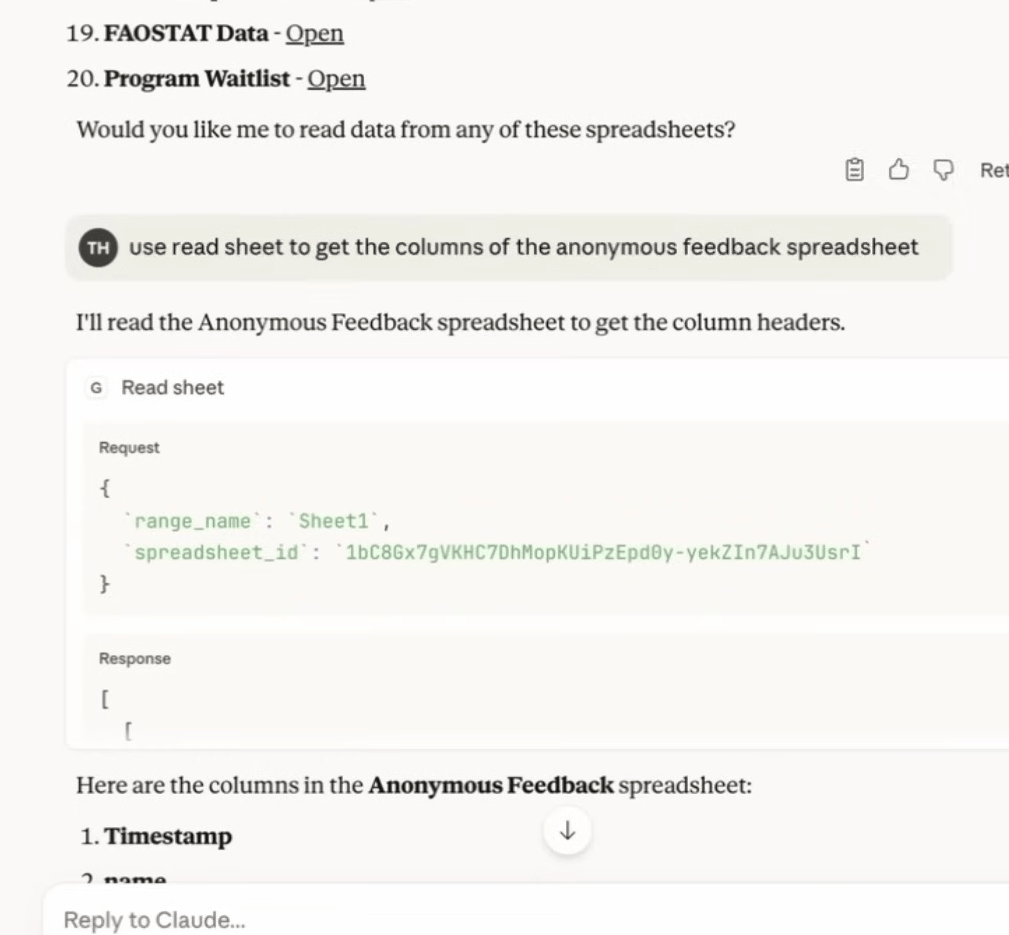

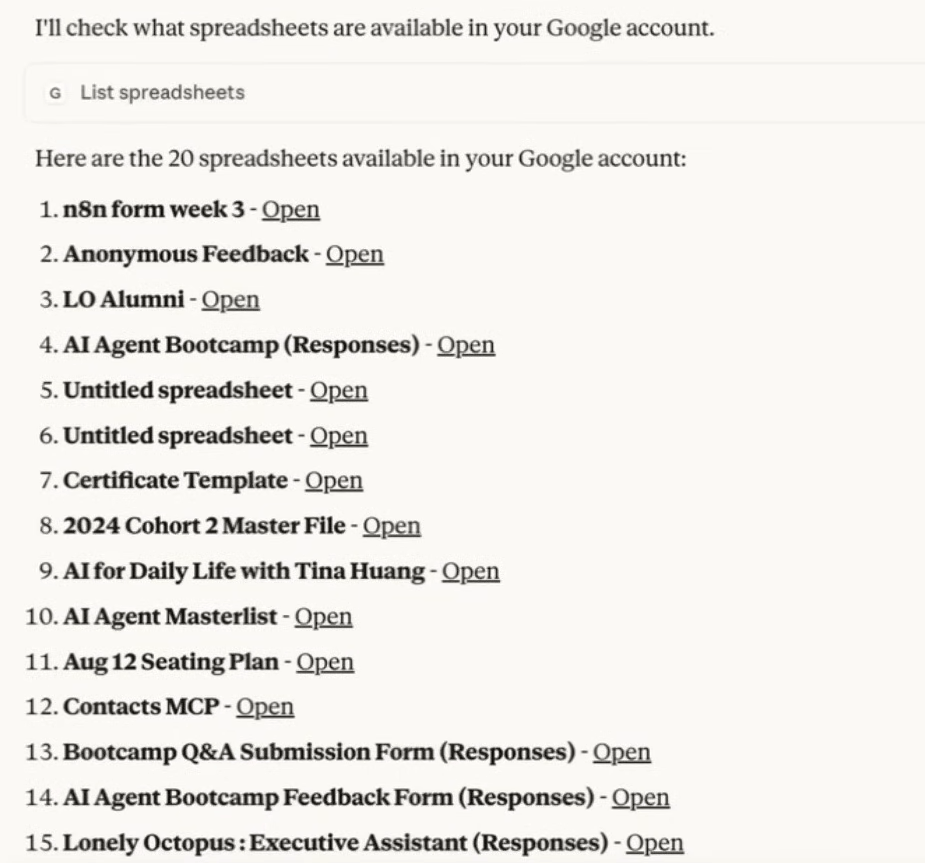

Example Interaction 1: List Spreadsheets (Using a Tool)

Prompt: "List out the spreadsheets that are available via the Google Sheets server".

What Happens: Claude uses the list_spreadsheets tool on your MCP server, which calls the Google Sheets API and returns a list like: "20 spreadsheets found: 'n8n form week 3', 'Anonymous Feedback', 'LO Alumni', [and 17 more...]"

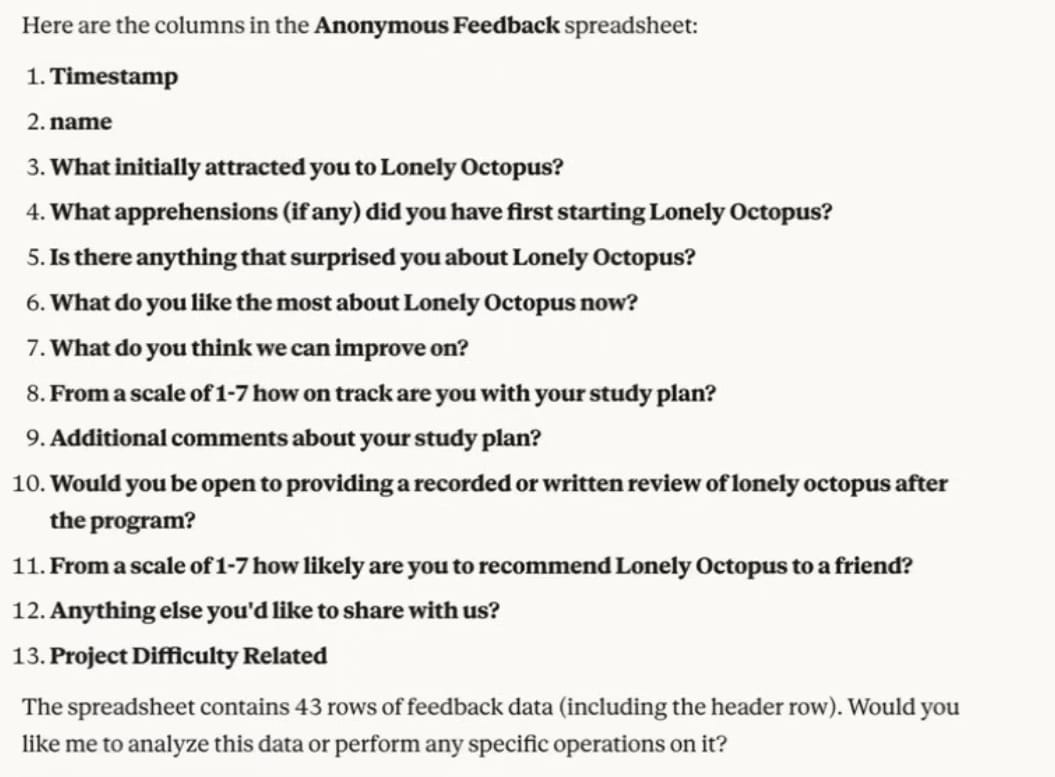

Example Interaction 2: Read Sheet Data (Using a Resource)

Prompt: "Use the Google Sheets resource to get the column headers of the 'Anonymous feedback' spreadsheet".

What Happens: Claude accesses the sheet data exposed as a Resource. This might retrieve cached data directly from the server without making a live API call to Google, resulting in a much faster response with the column names.

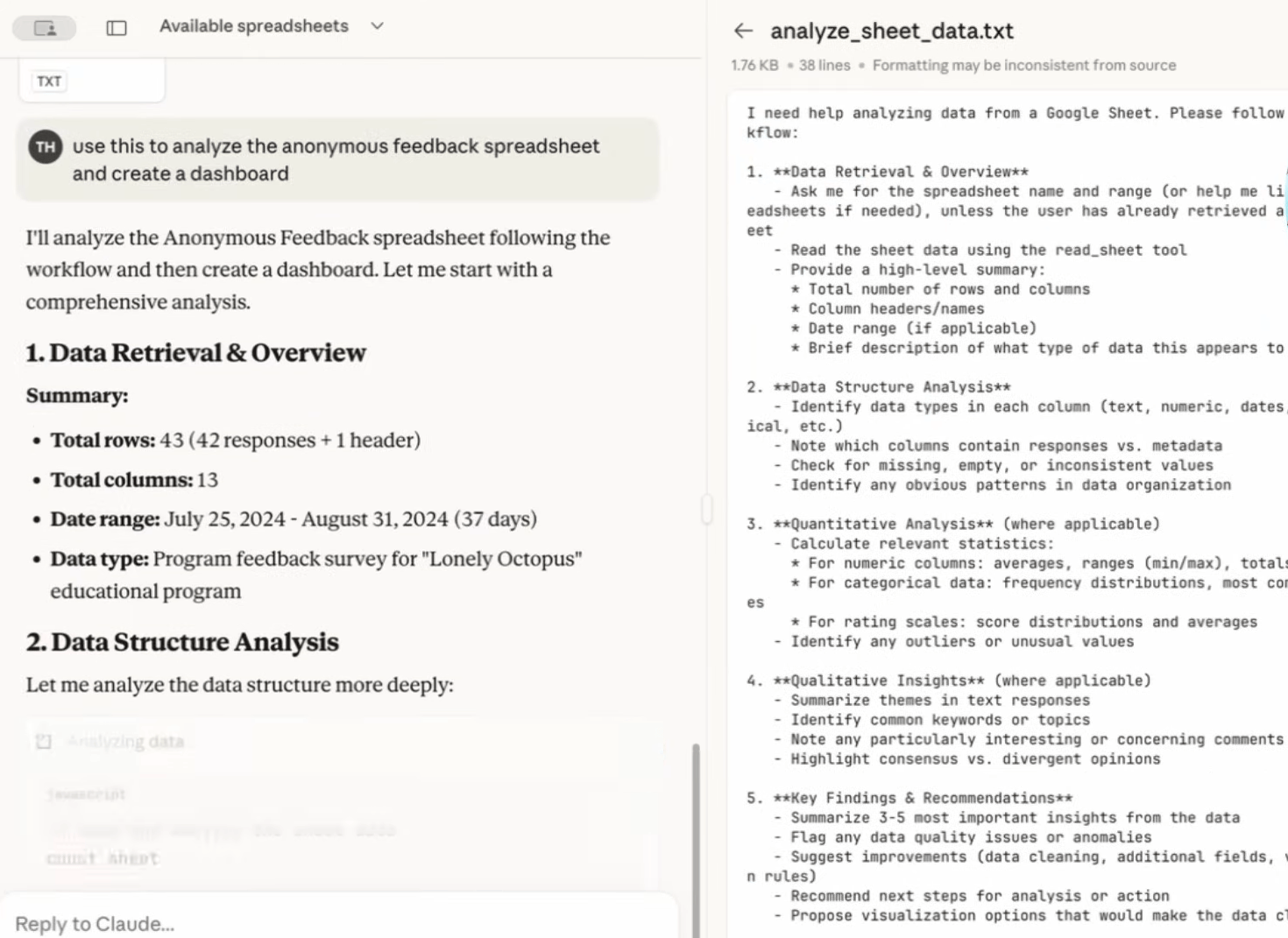

Example Interaction 3: Using a Prompt Template

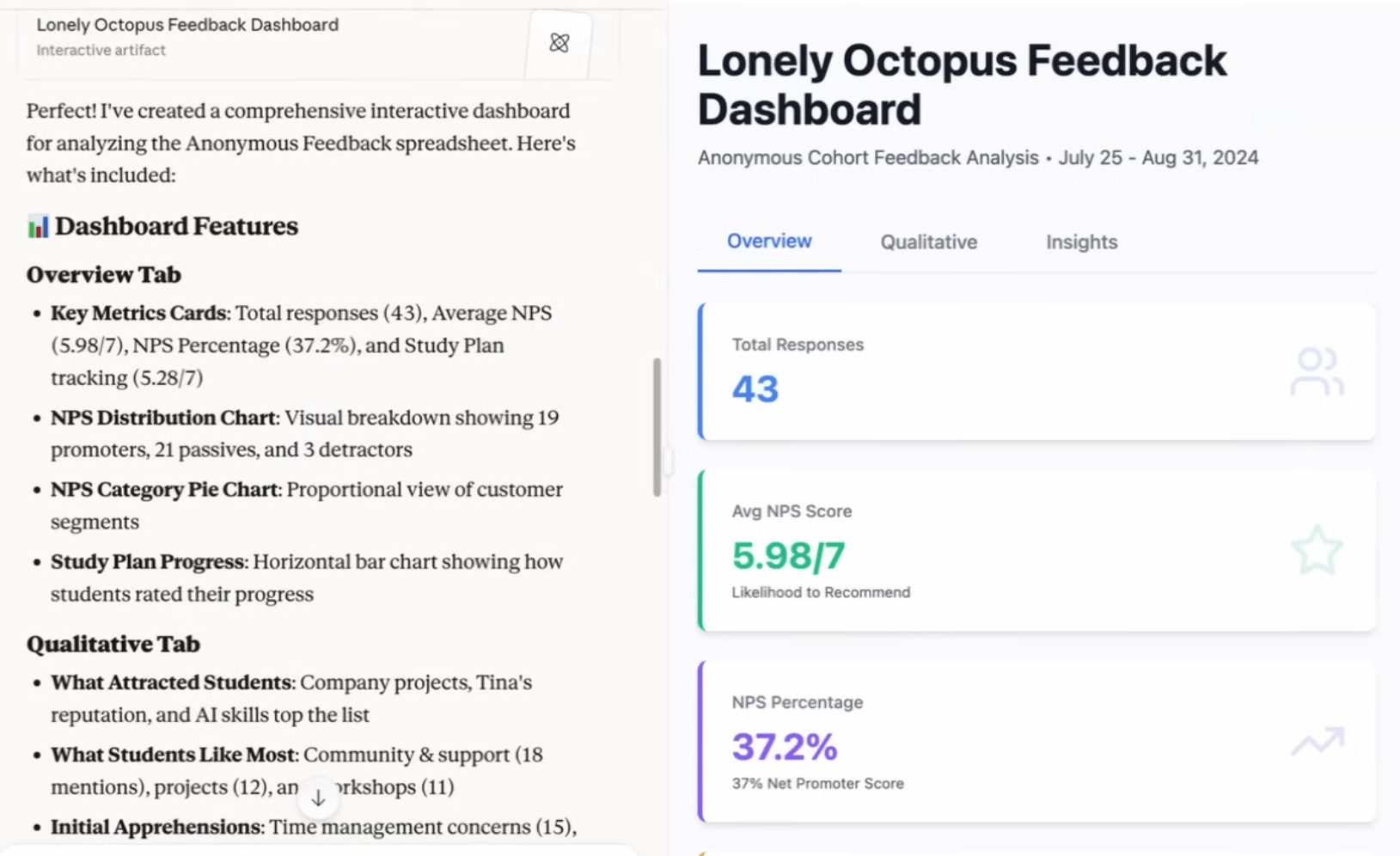

Prompt (using Claude's interface to select the template): "Add from sheets → Analyze sheet data template". Then fill in the specifics: "Use this to analyze the 'Anonymous feedback' spreadsheet (range A1:G50) and create a dashboard summarizing the findings".

What Happens:

Claude uses the pre-built "analyze_sheet_data" prompt template from your MCP server.

It sends the template, filled with your specifics, along with the relevant sheet data (potentially accessed via the Resource) to its underlying LLM.

The LLM performs a comprehensive analysis guided by the expert template structure.

Claude presents the results, potentially including summary statistics (Total responses: 43, Avg NPS: 4.98/5), key feedback themes and even suggestions formatted as a mini-dashboard.

The Power: The prompt template ensures a consistent, high-quality analysis every time, transforming a simple request into an expert-level output without requiring complex user prompting.

Running Your Own Code-Based MCP Server

Getting your custom server running involves standard software development steps.

Setup Requirements: A Python environment (3.10 or newer, which the official MCP Python SDK requires), installation of the MCP SDK (pip install mcp) and Google Sheets API credentials.

Configuration Steps: Clone the server code repository, install dependencies (pip install -r requirements.txt), configure your Google API authentication credentials securely, then run the server script locally (python server.py) or deploy it to a cloud platform. Finally, add the server's endpoint address to your Host application's config file (like Claude Desktop).
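For a server that runs locally, the last step usually means adding an entry that tells the Host which command to launch. The sketch below adds such an entry to a config file without overwriting servers that are already registered. The `add_server` helper and the `google-sheets` name are assumptions, and the demo writes to a temporary file rather than your real Claude Desktop config; in practice you would typically pass an absolute path to server.py.

```python
import json
import tempfile
from pathlib import Path


def add_server(config_path: Path, name: str, command: str, args: list) -> dict:
    """Add or replace one entry under "mcpServers", keeping the others."""
    config = {}
    if config_path.exists():
        config = json.loads(config_path.read_text(encoding="utf-8"))
    servers = config.setdefault("mcpServers", {})
    servers[name] = {"command": command, "args": list(args)}
    config_path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config


# Demo against a throwaway file that already contains one server entry.
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "claude_desktop_config.json"
    existing = {"mcpServers": {"n8n": {"command": "npx", "args": []}}}
    path.write_text(json.dumps(existing), encoding="utf-8")
    updated = add_server(path, "google-sheets", "python", ["server.py"])
    saved = json.loads(path.read_text(encoding="utf-8"))

print(sorted(saved["mcpServers"]))
```

Merging instead of rewriting the whole file keeps the n8n server from the earlier part working alongside the new one.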

Code vs. No-Code Decision Matrix

When should you use n8n versus writing code for your MCP server?

Choose No-Code (n8n) When:

✅ You primarily need simple Tool integration (exposing existing APIs).

✅ You prioritize rapid prototyping and quick deployment.

✅ You are not a developer or prefer visual building.

✅ You're building solutions for personal use or small teams.

Choose Code When:

✅ You need the full power of Resources and Prompt Templates.

✅ You're building for production at scale and need optimal performance.

✅ You require custom business logic not available in no-code nodes.

✅ You plan to distribute or sell your MCP server to others.

The Reality: Many successful projects follow a hybrid path. Developers might start with a no-code prototype in n8n to quickly validate an idea, then build a strong, feature-complete code version for production deployment once the concept is proven.

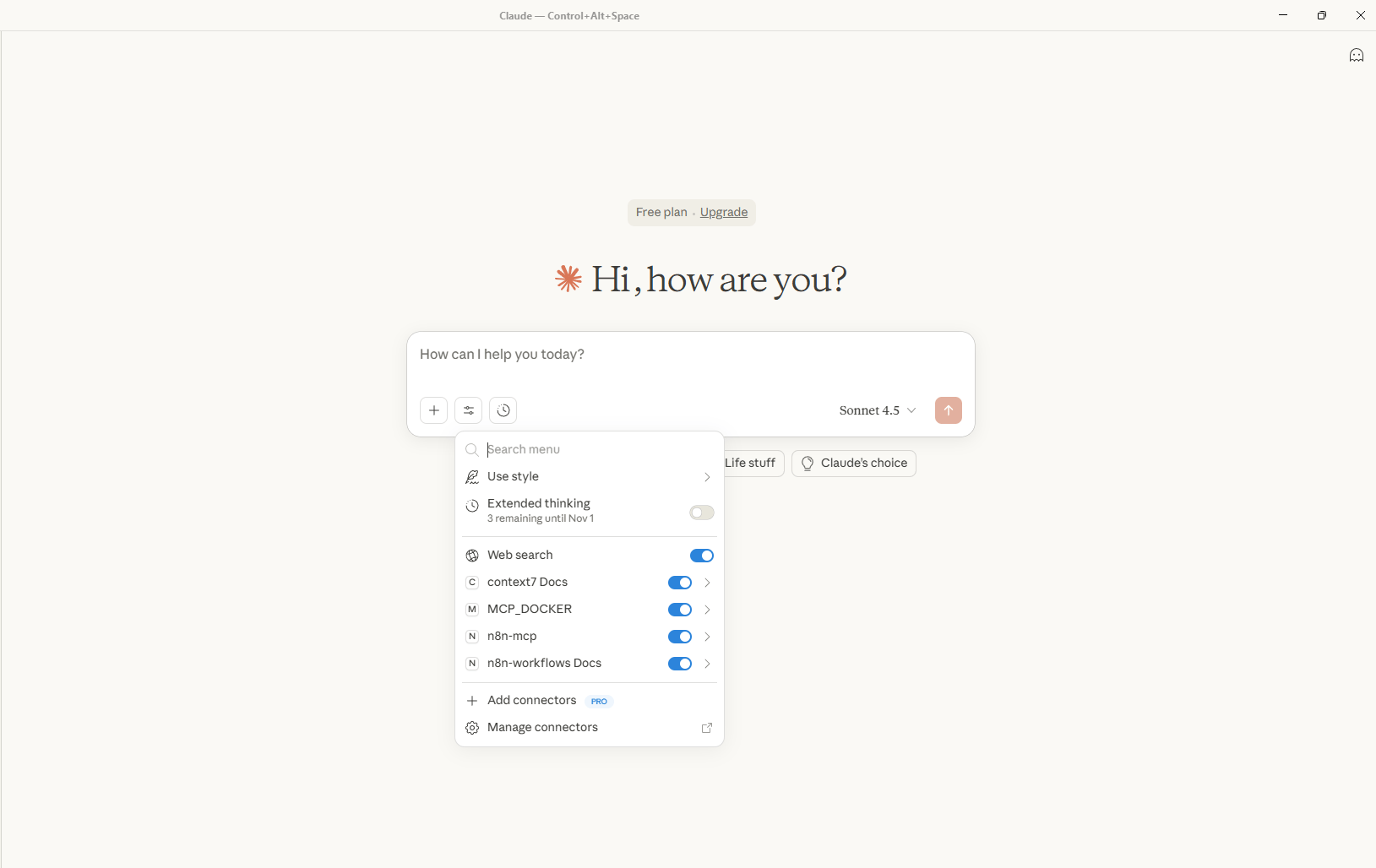

Part 8: The MCP Ecosystem & Future

MCP is more than just a technical protocol; it represents a foundational layer for the future of interconnected AI applications. Its long-term significance cannot be overstated.

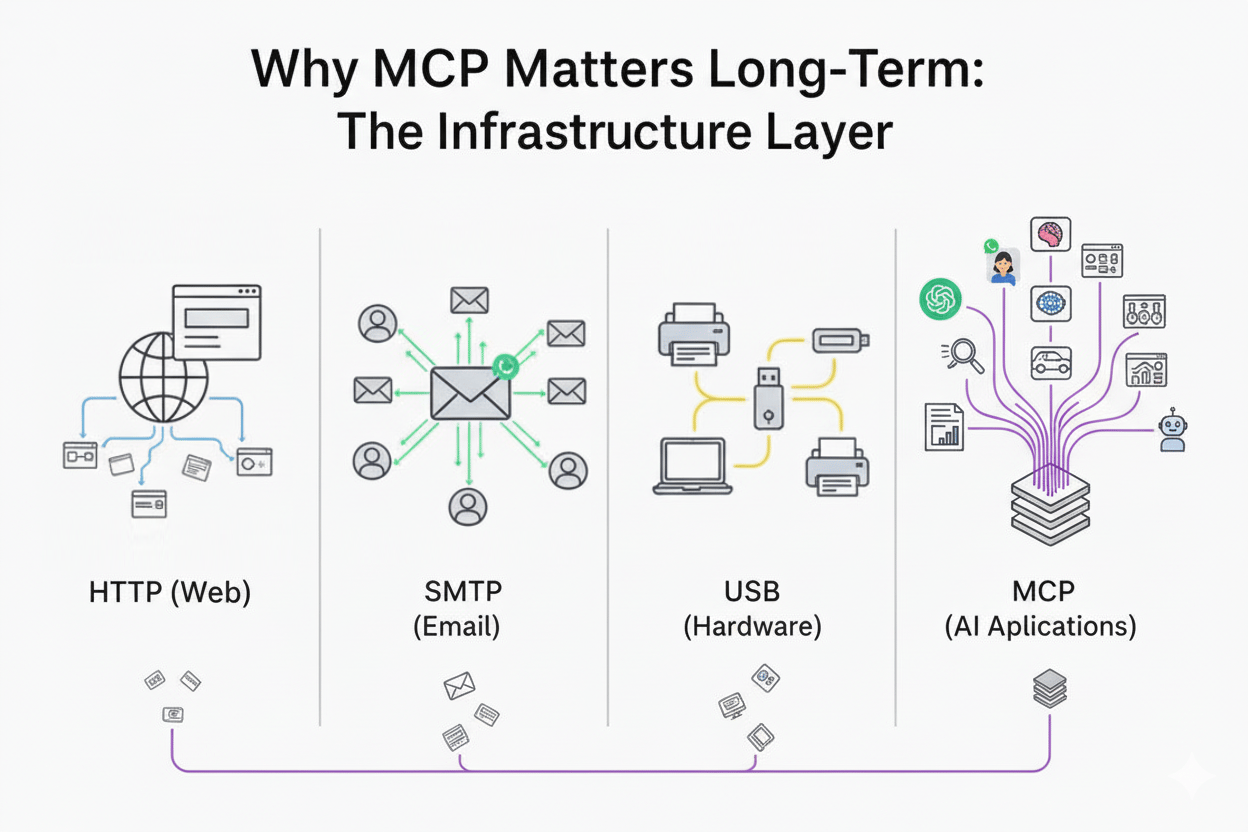

Why MCP Matters Long-Term: The Infrastructure Layer

MCP represents infrastructure-level innovation in the AI tooling landscape. Just as standardized protocols like HTTP (for the web), SMTP (for email) and USB (for hardware) created stable foundations that enabled massive ecosystems and innovation to grow on top, MCP is creating that same standardized foundation for AI applications to communicate and collaborate.

Current Trajectory: Explosive Growth

The rapid adoption rate highlights MCP’s potential:

Launch: November 2024.

3 Months Later: Over 20,000 MCP servers created by the community.

Growth Rate: Continuing to expand rapidly as more developers and companies adopt the standard.

Industry Support: Major AI companies and tool providers are actively building MCP-compatible products and integrations.

Monetization Opportunities

The standardization created by MCP opens up significant business opportunities for developers and entrepreneurs.

Building MCP Servers as a Business:

Create specialized MCP servers tailored for specific industries (e.g., healthcare compliance, financial data analysis).

Offer premium versions with enhanced features, higher rate limits or dedicated support.

Sell niche MCP servers on emerging marketplaces.

Provide consulting and custom MCP server development services.

Why It Works: Standardization means developers can build an MCP server once and potentially reach every MCP-compatible AI application, a massive potential market that requires far less integration work. It's like developing one USB device that works with billions of computers.

Conclusion: Your MCP Action Plan

MCP is the new universal standard for AI integration, replacing the chaos of custom APIs. It's the "USB for AI".

It works via a Host-Client-Server (HCS) model and servers can offer Tools, Resources or Prompt Templates (TRP).

Your Next Steps

Beginner: Use existing MCP servers. Explore the marketplace and plug pre-built tools into apps like Claude Desktop.

Intermediate: Build a simple server using n8n (no-code) for basic tool integration.

Advanced: Master the system. Use the code-based SDK (e.g., Python) to build powerful, custom servers with full capabilities (Tools, Resources and Templates).

The ecosystem is exploding now. Mastering MCP gives you a massive advantage. You're now equipped to build with it.
