🖥️ Why Physical AI is the Next Frontier to Dominate in 2026 & 4 Big Predictions

AI finally has a body. I’ll break down how chips, math, and robot teams are pushing AI into cars, factories, and daily life much faster than most people realize.

TL;DR

Physical AI is the shift of intelligence from screens into real environments, where machines can see, reason and act in the world around us. In 2026, this is no longer a "future" concept; it is being deployed at scale in automotive, manufacturing and logistics.

Key shifts include Predictive Math, which allows robots to simulate consequences before moving; Collaborative Learning, where fleets teach each other; and Vertical AI, task-specific systems that replace generic tools. Economically, the automotive chip market is on track to hit $121 billion by 2031 and "robot data" is becoming a tradable asset. But there’s a real downside: routine factory and retail employment could shrink by 10-15% globally, while demand for highly specialized engineering roles explodes.

Key Points

Physical vs. Digital: Unlike ChatGPT, Physical AI must survive the real world; mistakes in navigation or hardware movement have immediate, expensive physical consequences.

Vertical AI dominance: "Generalist" robots are losing ground; pre-trained "Vertical" systems for welding, picking and assembly are becoming the default.

The Data Economy: Performance data (error logs, sensor readings) is being anonymized and sold to developers to fuel smarter industrial AI.

Critical Insight

The real bottleneck for Physical AI isn't hardware strength, it's Predictive Math and Edge Compute. The machines that "win" in 2026 are those that can anticipate the next 500 milliseconds of reality on-device without needing the cloud.

I. Introduction: The Moment Robots Actually Wake Up

You’re probably thinking: “Great, another hype term for robots that don’t exist yet.” It’s totally fair to think like that. The AI world has been throwing buzzwords at you for years.

But this one, Physical AI, is not about sci-fi trailers or humanoid demo videos that fall on stage. It’s already running factories, warehouses and even parts of your car, and it’s getting a lot smarter in 2026.

Right now, Nvidia, ARM and major automakers are building AI systems that don’t just think. They see, move and react on their own.

Imagine cars that learn from billions of miles of driving data, robotic arms that copy a human worker after watching just once and machines that fix problems before anything breaks.

The wild part? Most people still think this is 10 years away. It’s not.

In this post, I’ll walk you through what’s actually coming in 2026 and beyond, based on what’s already live in labs, factories and CES demo floors. This will define how you move, work and live in the very near future.

II. What Is Physical AI? Why Is Everyone Suddenly Talking About It?

Physical AI is intelligence embedded in machines that interact with the real world. It uses sensors, cameras and onboard chips to make fast decisions. Unlike screen-based AI, it controls motion and physical force. Mistakes have real consequences.

Key takeaways

Uses cameras, sensors and actuators

Runs decisions locally, not just in the cloud

Errors have real-world consequences

Requires real-time reliability

Once AI controls motion, reliability matters more than creativity.

To understand this revolution, you first have to understand the difference between the AI you use today and Physical AI.

1. The Quick Definition

Physical AI is the moment when AI stops living inside screens and starts shaping the real world. So, instead of generating text or images, these systems see, move and interact with their surroundings.

They use cameras, sensors, onboard chips and precise actuators to:

Understand what’s happening in front of them.

Make fast decisions with incomplete information.

Move arms, wheels or joints with accuracy.

Complete tasks without waiting for cloud servers.

If digital AI thinks, Physical AI acts. It is the “brain” inside robots, self-driving cars, warehouse fleets, factory assistants and any machine that needs real-world awareness.

2. Digital AI vs. Physical AI

Most of us are used to "Digital AI" like ChatGPT. It lives on a server, processes text and makes zero physical contact with the world. If ChatGPT "hallucinates" and gives you the wrong recipe for lasagna, nobody gets hurt.

Physical AI is different. Like I said, it can see, hear, think and touch physical things. If a self-driving car or a factory robot hallucinates, the consequences are immediate and expensive.

That means Physical AI has to:

Process sensor data in real time.

Make fast decisions with incomplete information.

Coordinate multiple moving parts at once.

Recover when things don’t go as planned.

Keep humans safe at all times.

All of this is a very different problem from generating text or images. While Digital AI can hallucinate, Physical AI must survive the real world.

3. The $121 Billion "Brain" Race

Here’s the part most people miss: This is a chip war, not just a robotics trend.

This is why companies like Nvidia and ARM are fighting for the center of it. They are racing to build the “brains” that power Physical AI devices.

Allied Market Research projects the automotive chip market alone at $121 billion by 2031, roughly a 143% increase over 11 years.

Source: Allied Market Research.

That’s why every booth at CES 2026 was showing some version of the same story:

Cars that can drive without you watching.

Humanoid robots stepping onto factory floors.

Vision systems picking and sorting at insane speed.

Machines that hand off control between human and AI without chaos.

All of them need one thing: massive on-device compute, running in real time, inside the machine itself.
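To put the market figure quoted above in perspective, here is a quick back-of-the-envelope sketch. It only uses the two numbers from this post ($121 billion, a 143% increase over 11 years) and assumes smooth compound growth, which real markets rarely follow. The function name is my own.

```python
def implied_annual_growth(total_increase_pct, years):
    """Compound annual growth rate implied by a total percentage increase."""
    growth_multiple = 1 + total_increase_pct / 100  # a 143% increase is 2.43x
    return growth_multiple ** (1 / years) - 1


target_billion = 121        # projected market size quoted in this post
total_increase_pct = 143    # total increase quoted in this post
years = 11

annual_rate = implied_annual_growth(total_increase_pct, years)
implied_start_billion = target_billion / (1 + total_increase_pct / 100)

print(f"Implied growth: {annual_rate:.1%} per year")
print(f"Implied starting market: ${implied_start_billion:.1f}B")
```

Under those assumptions the market grows a bit over 8% per year from a base just under $50 billion. That is steady compounding rather than an overnight explosion, and it is exactly why chipmakers are positioning themselves now.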

Physical AI has moved out of the lab and into production. The robots are already here and the question is what they’ll be capable of next.

I’ve seen this shift firsthand at CES and in factory demos. The most impressive systems weren’t the flashiest robots; they were the ones quietly predicting failure before it happened.

Now let's look at the four trends that will define where Physical AI goes in 2026 and beyond.

III. Prediction #1: Math Will Make Robots Smarter Than New Hardware

Most people think the next robot breakthrough will come from better motors or stronger arms. Nope, the real upgrade is math.

1. How Robots Work Today vs. Tomorrow

Right now, robots are reactive. You tell them what to do, they do it. They adapt in real time based on sensors but they don't think ahead. When something unexpected occurs, they stop and wait for human intervention.

Future robots will be different. A recent MIT study shows that AI models can now predict what will happen before a robot even moves, and the next generation of robots will be built to do exactly that.

Source: MIT News.

This comes from math tools like dual numbers and jets, which let software compute exactly how an outcome changes when an input changes. These used to live in academic papers. Now they’re practical enough to run inside real robots. Robots equipped with them will anticipate outcomes before they move. Instead of reacting, they’ll simulate possibilities.
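If "dual numbers" sounds abstract, here is a minimal sketch of the idea. A dual number carries a value and its derivative together, so one pass through a formula tells you both where the arm ends up and how that position changes if a joint moves. Jets extend the same trick to higher-order derivatives. The two-link arm, the `Dual` class and `tip_x` are my own illustrative assumptions, not the method used in the MIT work.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A dual number: a value plus its derivative with respect to one chosen input."""
    value: float
    deriv: float

    def __add__(self, other):
        other = lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = lift(other)
        # Product rule: (u * v)' = u * v' + u' * v
        return Dual(self.value * other.value,
                    self.value * other.deriv + self.deriv * other.value)

    __rmul__ = __mul__


def lift(x):
    """Treat a plain number as a constant (derivative 0)."""
    return x if isinstance(x, Dual) else Dual(float(x), 0.0)


def cos(d):
    # Chain rule: cos(u)' = -sin(u) * u'
    return Dual(math.cos(d.value), -math.sin(d.value) * d.deriv)


def tip_x(shoulder, elbow, upper_len=1.0, fore_len=1.0):
    """Hypothetical two-link planar arm: x-coordinate of the tip, in meters."""
    return upper_len * cos(shoulder) + fore_len * cos(shoulder + elbow)


# Seed the shoulder with derivative 1 to ask: "what if the shoulder moves?"
shoulder = Dual(0.0, 1.0)
elbow = Dual(math.pi / 2, 0.0)

tip = tip_x(shoulder, elbow)
print(tip.value, tip.deriv)
```

Here `tip.value` is the current x-position of the tip and `tip.deriv` is how fast that position changes per radian of shoulder rotation. The robot gets the "what happens if I move?" answer without actually moving, which is the whole point of predictive math.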

2. What This Means in Practice

Imagine a robotic arm in a factory. Today, it picks up a part, moves it and places it. If something goes wrong, it stops and waits for human help.

Robot Learning from Demonstration in Robotic Assembly. Source: MDPI.

With predictive math, that same robot could:

Simulate five different paths before choosing the best one

Forecast if a slight adjustment will cause a collision

Optimize movements in milliseconds, not seconds
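Here is a toy sketch of the first two bullets: simulate five candidate paths, throw out any that would hit something and keep the shortest safe one. Everything in it (the 2D workspace, the circular obstacle, the waypoints) is a made-up assumption for illustration. Real planners work in joint space with far richer models.

```python
import math


def path_length(path):
    """Total length of a path given as a list of (x, y) waypoints."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def collides(path, obstacle_center, obstacle_radius, samples_per_segment=20):
    """Sample points along each segment and test them against a circular obstacle."""
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        for i in range(samples_per_segment + 1):
            t = i / samples_per_segment
            point = (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
            if math.dist(point, obstacle_center) < obstacle_radius:
                return True
    return False


def choose_path(candidates, obstacle_center, obstacle_radius):
    """Return the shortest collision-free candidate, or None if every path collides."""
    safe = [p for p in candidates
            if not collides(p, obstacle_center, obstacle_radius)]
    return min(safe, key=path_length) if safe else None


start, goal = (0.0, 0.0), (4.0, 0.0)
obstacle_center, obstacle_radius = (2.0, 0.0), 0.5

candidates = [
    [start, goal],               # straight line, passes through the obstacle
    [start, (2.0, 0.4), goal],   # tiny detour, still clips the obstacle
    [start, (2.0, 0.8), goal],   # small detour that clears it
    [start, (2.0, 1.5), goal],   # wide detour
    [start, (2.0, -2.0), goal],  # very wide detour on the other side
]

best = choose_path(candidates, obstacle_center, obstacle_radius)
print(best)
```

The robot never touches the part until a path has passed the simulated collision check. Swap the simple length cost for time or energy and you get the "optimize movements" bullet as well.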

This isn't theoretical. Research teams are already testing these methods. The question isn't if this will become standard but who will get there first.

The impact is obvious.

Faster optimization means less downtime. Better predictions mean fewer mistakes. And adaptive control that "feels intuitive" means robots that are easier to work with, not harder.

The next generation of automation will be defined by smarter machines, instead of stronger ones.

IV. Prediction #2: The Death of the Solo Robot

Right now, most robots work alone. They follow a program written by a human and ignore everything else around them.

That's about to change.

1. From Solo Units to Learning Teams

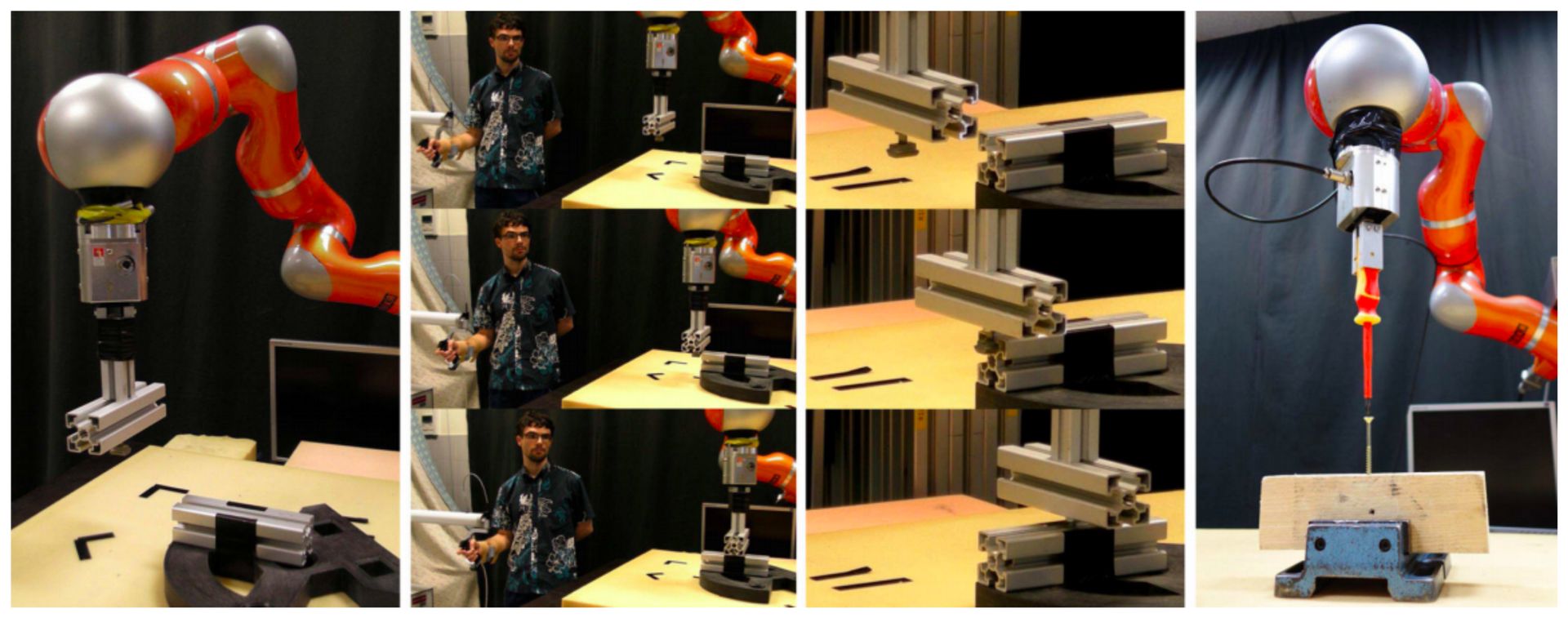

The next wave of robotics is built on imitation learning. Robots will watch humans and other robots, then copy what works, refine it and share it across the group. Instead of being programmed one by one, they learn as teams.

This isn’t science fiction. Industrial robotics companies already run multi-arm systems and synced robot fleets.

But true peer-to-peer learning, where robots teach each other without human programming, is just starting to arrive.

Robot built on imitation learning. Source: arXiv.

In 2026, you’ll see robots that:

Watch a human do a task once, then replicate it.

Study a “master robot” and match its speed.

Share what they learn across an entire team without rigid scripts.
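To make "watch once, then share" concrete, here is a deliberately tiny sketch of behavioral cloning, the simplest form of imitation learning. One robot fits a policy to a single demonstration, then the learned parameters are copied to the rest of the team. The one-dimensional state, the linear policy and the `Robot` class are all simplifying assumptions of mine. Production systems learn from camera feeds with neural networks.

```python
def fit_linear_policy(states, actions):
    """Least-squares fit of action = w * state + b to demonstration data."""
    n = len(states)
    mean_s = sum(states) / n
    mean_a = sum(actions) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in zip(states, actions))
    var = sum((s - mean_s) ** 2 for s in states)
    w = cov / var
    b = mean_a - w * mean_s
    return w, b


class Robot:
    """Hypothetical robot that acts according to a shared (w, b) policy."""

    def __init__(self, name):
        self.name = name
        self.policy = None

    def act(self, state):
        w, b = self.policy
        return w * state + b


# One demonstration: part offset in cm -> corrective move made by the human.
demo_states = [0.0, 1.0, 2.0, 3.0]
demo_actions = [0.5, -0.5, -1.5, -2.5]

# The "student" robot learns from the demonstration...
learned_policy = fit_linear_policy(demo_states, demo_actions)

# ...and the whole team receives the same policy without being reprogrammed.
fleet = [Robot(f"arm-{i}") for i in range(3)]
for robot in fleet:
    robot.policy = learned_policy

print(fleet[2].act(4.0))
```

Notice that the last robot handles an offset of 4.0, a situation nobody demonstrated. That generalization, plus copying the policy instead of rewriting code per robot, is what makes team learning faster than one-by-one programming.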

2. What Changes in Real Life

In real operations, this changes everything.

Faster setup: Instead of spending days writing code, you just show the task to one robot and the whole team learns it.

More adaptable: When conditions change (like a part arriving in the wrong position) robots adapt together instead of waiting for a software update.

Better teamwork: Humans and robots work side by side more smoothly because robots intuitively follow human intent.

The barriers that once made this impossible are falling. Safety rules, communication standards and orchestration tools are finally catching up.

Companies like Universal Robots and others already run coordinated multi-arm systems. Robots that can self-organize and keep learning are arriving much sooner than people expect.

Once robots can learn together, the next question becomes obvious: should they learn everything or just one job perfectly?

V. Prediction #3: Vertical AI Crushes Generic Tools

For the last year, everyone tried to build "general" AI that could do everything.

In 2026, manufacturers have realized that a tool that does everything usually does nothing well. They want systems that do one job extremely well.

That’s where vertical AI comes in.

Source: AI World Journal and IBM.

1. Task-Specific AI Is Taking Over

Instead of buying a generic robot and spending months customizing it, companies are switching to AI systems that show up already trained for one job:

AI welding

AI finishing

AI assembly

AI inspection

And these aren’t vague promises. They’re shipping right now.

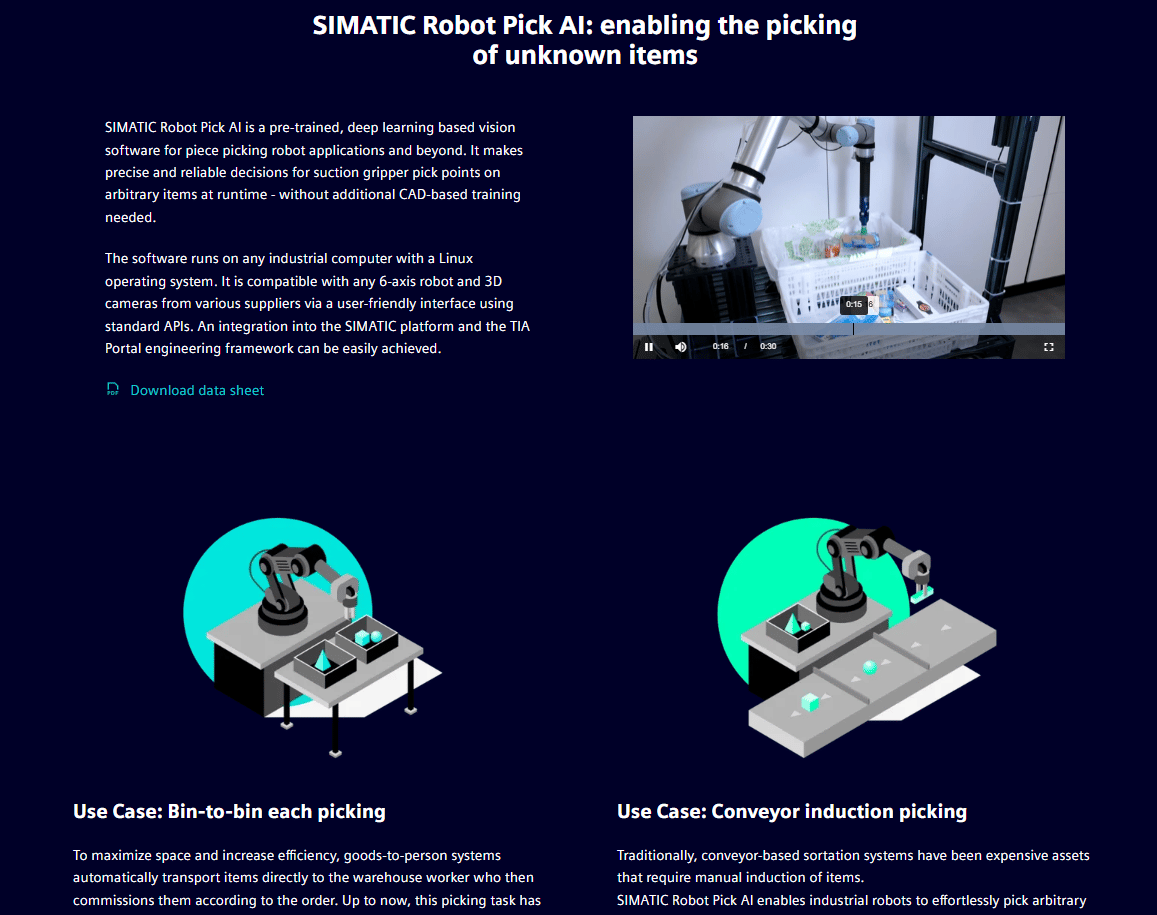

Siemens' SIMATIC Robot Pick AI, for example, uses deep learning to handle pick-and-place tasks that used to require human workers. It works with robots from Universal Robots and comes ready to deploy.

Source: Siemens.

2. Why This Is a Big Deal

Welding is one of the clearest examples. It used to require a skilled human because seams shift, materials vary and conditions change. AI vision can now track seams in real time and machine learning adjusts welding parameters on the fly. That cuts setup time.

And it doesn’t stop there. Complex assembly, fastening and delicate handling, jobs that were "too variable" to automate, are now getting AI solutions.

Amazon Robotics in warehouses. Source: Amazon.

You’ve seen this in logistics already: AI-powered robots now handle pick, stow and sorting at scale in warehouses. In 2026, expect retail to follow: robots stocking shelves, tracking inventory and taking over basic customer tasks.

3. What This Means for Buyers

For buyers, the benefit is immediate. AI that is already trained for a specific job reduces mistakes from day one. Quality improves without weeks of tuning. You don’t need a large engineering team to make it work.

For industries like automotive, logistics and retail, this is a step change. Automation is no longer a luxury for companies with massive R&D budgets. It's becoming an off-the-shelf solution.

VI. Prediction #4: Robot Data Will Become a Tradable Asset

Most people don't think about what happens to the data robots generate (how much force they use and what their cameras see). But that's about to become a big business.

1. The Data Problem Today

Right now, robots collect massive amounts of information: sensor readings, vision data, force feedback, error logs. Most of that data stays locked inside the customer's facility. It's great for privacy and speed but it creates a problem.

Robots collect data. Source: N-iX.

AI developers need real-world data to build better models. But they can't access it: the best data on the planet is locked away at the edge.

2. The Solution: Secure Data Exchanges

The shift comes with secure data exchanges.

Robot companies are creating systems where they can safely share anonymous data to help other AIs learn faster. With customer permission, anonymized performance data will be bundled and sold as training sets for better industrial AI.

Here's how it could work:

Welding robots share de-identified seam quality metrics.

Sanding cobots contribute surface-finish data.

Assembly robots contribute mistake and correction logs.

This data fuels smarter AI for defect detection, predictive maintenance and adaptive control.
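What might "de-identified" look like in practice? Here is a minimal sketch, assuming a simple log record. It drops the fields that name the customer and replaces the robot's ID with a salted hash, so records from the same machine can still be grouped. The field names and the `deidentify` helper are hypothetical, and a salted hash on its own is pseudonymization rather than a complete privacy guarantee.

```python
import hashlib

# Hypothetical field names; a real log schema will differ.
IDENTIFYING_FIELDS = {"customer", "site", "operator"}


def deidentify(record, salt):
    """Return a copy of the record that is safer to share.

    Drops fields that identify the customer and replaces the robot ID
    with a salted hash, so logs from one robot remain linkable.
    """
    shared = {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}
    robot_id = shared.pop("robot_id")
    digest = hashlib.sha256((salt + robot_id).encode("utf-8")).hexdigest()
    shared["robot_token"] = digest[:12]
    return shared


raw = {
    "customer": "Example Motors",
    "site": "Plant 7",
    "operator": "j.doe",
    "robot_id": "weld-cell-03",
    "seam_quality": 0.97,
    "arc_voltage": 23.5,
}

shared = deidentify(raw, salt="keep-this-secret")
print(shared)
```

The performance numbers survive untouched, which is what a model developer actually wants, while the "who" and "where" never leave the facility. The salt stays with the data owner, so outsiders can't rebuild the original IDs by hashing guesses.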

3. Everyone Wins

Why is this a win for everyone? Let me explain:

For manufacturers: New revenue streams and continuous improvement of their own robots.

For customers: Access to better AI tools trained on real-world conditions, without compromising confidentiality.

For developers: High-quality training data that's structured, privacy-preserved and representative of actual factory conditions.

The result is a virtuous cycle. Every robot deployed makes every future robot smarter.

VII. Why Does Physical AI Matter for Cars, Factories and Daily Life?

Cars are the biggest driver of Physical AI investment. Factories are next. The same systems power both.

Key takeaways

Hands-free driving systems are already announced

Humanoid robots enter factories in 2026

Compute inside machines is exploding

Cars, robots and chips form one system

These industries are converging faster than people expect.

One more point: “Physical AI” isn’t a cute buzzword. It’s a way to understand how multiple industries are colliding at once, and you should know how to navigate this shift without making expensive mistakes.

1. Cars Are the Biggest Domino

The automotive chip market is projected to hit $121B by 2031. Nvidia, ARM and every major automaker are loading up for this.

At CES 2026, the announcements were nonstop:

Ford will sell a hands-free driving system by 2028.

Sony and Honda's Afeela will drive itself in most situations (date TBD).

Nvidia is supplying chips for Chinese automaker Geely's autonomous driving system.

Mercedes-Benz will debut a hands-off system in the US this year, eventually capable of driving from home to work without help.

Source: CES 2026.

As Jensen Huang put it at CES: “This is already a giant business for us.” If you don’t want to be left behind, it’s one you should care about.

2. Robots Are Spreading Beyond Warehouses

The same shift is happening in factories. Google DeepMind, Boston Dynamics and Hyundai all confirmed that humanoid robots will hit factory floors in the coming months to do actual production work.

Source: Boston Dynamics.

Physical AI is the upgrade that makes this possible: robots that can see, understand, reason and act in messy real environments.

So don’t be surprised if one day you see robots handling cleaning, maintenance or inspection tasks you never imagined automating before.

3. The Real Fuel: Computing Power

All of this only works because the compute inside cars and robots is exploding. Modern vehicles and robots need onboard systems that are dramatically more powerful than anything before. Chipmakers know this.

Mark Wakefield from AlixPartners described it perfectly: “The central brain of the vehicle will now be quantum leaps bigger, hundreds of times as big and that’s what they’re selling into.”

Source: Mexico Business News.

What looks like separate trends (cars, robots, chips) is really one system coming together. And it’s already reshaping daily life.

VIII. Conclusion: The Future is Not a Script

The future of Physical AI will be defined by four forces working together: advanced math, cooperative learning, vertical AI applications and a new data economy.

As Nvidia CEO Jensen Huang said at CES, self-driving cars and physical AI are already a giant business. We are moving away from machines that just follow orders. We are moving toward partners that can learn and predict the future.

This isn't a distant future. The pieces are already falling into place. In 2026, these ideas move beyond pilots and into everyday operations.

Physical AI is here and it's coming for your car, your factory and the products you buy every day.

The real question isn’t whether Physical AI arrives. It’s whether you’re ready when it does.
