🤫 Advanced Prompt Engineering: 10 Private & Secret Methods from Google, OpenAI & Anthropic

I spent months analyzing OpenAI research papers. Here are the 10 internal tactics they use to guarantee accurate, high-quality responses instantly.

TL;DR

Advanced Prompt Engineering relies on ten specific internal techniques used by major AI labs to guarantee high accuracy. It transforms generic outputs into precise results by treating models as simulators rather than chatbots.

This guide explains methods like Persona Adoption and Chain-of-Verification to eliminate errors. You will learn to force the AI to verify its own answers and use negative examples to avoid bad habits.

The text details how to apply structured thinking for complex logic and set strict context boundaries. These strategies stop hallucinations and ensure the model follows your exact rules without deviation.

Key points

Google uses Confidence-Weighted Prompting to have the model rate how reliable its own answer is.

Instead of asking "What do you think?", assign a specific expert role.

Use Meta-Prompting to let the AI write its own optimized instructions.

Critical insight

Defining specific hard constraints before the actual task allows the model to plan its output architecture more effectively than adding rules at the end.

If you want to truly understand Advanced Prompt Engineering, you need to stop treating AI like a magic 8-ball. I have spent the last six months testing different ways to talk to AI models. At first, I was just like everyone else. I would ask a simple question and get a boring, generic answer. It was frustrating.

But then I dug deeper. I looked at how the big labs like OpenAI, Anthropic, and Google research their own tools. I found that the difference between a bad answer and a perfect answer is not the AI model you use. It is how you talk to it.

Before we dive into the hard skills, have you seen the recent viral trend? People are asking ChatGPT to "analyze my chat history and tell me who I am." The trend has already racked up 1.5 million views. It is fun, but it is just the tip of the iceberg. To get real work done, you need more than fun trends. You need a system.

In this guide, I will act as your teacher. I will break down 10 techniques for Advanced Prompt Engineering that I use every day. These are simple to learn but very powerful.

Part 1: What Is The Core Mindset Behind Advanced Prompt Engineering?

Most people mistakenly treat AI like a human with opinions, asking questions like "What do you think?" The core mindset shift is to treat AI as a "simulator" rather than a person. You must stop asking for its opinion and instead direct it to simulate a specific expert persona, just as a movie director guides an actor.

Key takeaways

Fact: AI models do not have brains or souls; they predict text based on training data.

Comparison: Asking a random stranger vs. directing a master mechanic yields vastly different results.

Detail: Never start a prompt without setting the stage or defining who is answering.

Action: Replace "What do you think?" with "Act as an expert in [Field]."

The AI gives boring answers because it defaults to the "average" of the internet unless you tell it which specific "mask" to wear.

Once you understand this mindset, everything else becomes easier. The quality of your output is not about how smart the model is, but about how clearly you define the role it must simulate.

Here is the truth: The AI does not "think." It does not have a brain or a soul. It is a simulator.

Think of it this way. If you ask a random stranger on the street, "How do I fix my car?", they might give you a bad guess. But if you act like a movie director and say, "You are now a master mechanic with 20 years of experience. Tell me how to fix my car," you will get a much better answer.

However, treating the AI as a simulator is only the first step. A great prompt sets the stage for one brilliant interaction, but how do you ensure the AI doesn't break character after the tenth message? This is where many engineers get stuck.

To truly master the machine, you must understand the architectural difference between giving an instruction and building an environment. This is exactly what I unpack in my guide on prompt vs context engineering, which explains why prompting gets you the first good output, but context ensures the 1000th is still great.

Why This Matters

The AI has read the whole internet. It knows how a 5-year-old speaks, and it knows how a PhD professor speaks. If you don't tell it which "mask" to wear, it will give you a boring, average answer.

My Advice

Never start a prompt without setting the stage. Don't ask questions directly. Think about who needs to answer that question first.

Part 2: How Does Persona Adoption Improve Advanced Prompt Engineering?

"Persona Adoption" works because specific roles trigger specific subsets of the AI's training data. Instead of a vague instruction like "You are a coder," you should use a detailed persona like "Senior Data Engineer with 10 years of experience." This specificity forces the model to switch its vocabulary, tone, and logic from "average" to "expert."

Key takeaways

Contrast: "Write about coffee" produces generic text; "Act as an Italian barista" produces rich detail.

Detail: Specificity in the persona (e.g., years of experience) improves the quality of the output.

Fact: Persona adoption changes the model's vocabulary and logical framework.

Action: Always define the role before defining the task.

When the AI adopts a character, it stops being a robot and accesses the specific knowledge associated with that expert.

The first technique is the most famous, but most people do it wrong. This is called Persona Adoption. As I mentioned, the AI is a simulator.

In Advanced Prompt Engineering, we don't just say "You are an expert." That is too vague. We need to be specific.

1. The Difference Between Average And Specific

If you say "You are a coder," the AI will write average code. If you say "You are a Senior Data Engineer with 10 years of experience in Python," the AI changes its entire vocabulary. It uses better logic. It explains things differently.

2. Example Of This Technique In Action

Bad Prompt: "Write a blog post about coffee."

Good Prompt (Using Advanced Prompt Engineering):

"Act as a world-class barista and coffee roaster with 10 years of experience running a cafe in Italy. Write a blog post about the art of making espresso. Focus on the smell and the crema."

3. Why It Works

When I use this, the AI stops being a robot and starts acting like a character. It accesses specific parts of its training data that relate to that expert character.
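If you call a model from code instead of a chat window, the persona usually goes in a system message so it applies to every turn. Below is a minimal sketch that turns the barista prompt above into the role/content message list that most chat APIs accept. The function `build_persona_messages` and its parameters are hypothetical, and nothing is sent to any API.

```python
def build_persona_messages(role: str, years: int, setting: str, task: str) -> list[dict[str, str]]:
    """Define a specific persona first (system message), then the task (user message)."""
    # Concrete details (role, years of experience, setting) are what pull the
    # output away from the "average internet" voice.
    persona = f"Act as a {role} with {years} years of experience {setting}."
    return [
        {"role": "system", "content": persona},  # who is answering
        {"role": "user", "content": task},       # what they must do
    ]


messages = build_persona_messages(
    role="world-class barista and coffee roaster",
    years=10,
    setting="running a cafe in Italy",
    task="Write a blog post about the art of making espresso. Focus on the smell and the crema.",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Keeping the persona separate from the task means you can reuse the same expert for many different requests.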

Part 3: Can Chain-Of-Verification Solve Errors In Advanced Prompt Engineering?

AI models are prone to "hallucinations" (making things up), but "Chain-of-Verification" (CoVe) helps fix this by forcing the model to double-check itself. The process involves generating an initial answer, creating verification questions to test that answer, and then rewriting the final response based on those checks. This self-correction loop significantly improves factual accuracy.

Key takeaways

Process: Generate Answer → Verify Facts → Correct Answer.

Detail: You can automate this entire loop in a single prompt.

Fact: This technique mimics how human researchers double-check their work.

Action: Use this for historical facts, citations, or data-heavy requests.

Forcing the AI to "audit" its own response before showing it to you filters out most errors.

This is a key part of Advanced Prompt Engineering because it forces the AI to double-check its own work before showing it to you.

While Meta AI researchers introduced CoVe to fix factual errors, they aren't the only ones trying to solve the 'hallucination' problem. Chain-of-Verification is powerful, but it is just one part of a larger puzzle. To truly master accuracy, you should combine it with insights from other top institutions.

I have compiled the most effective methods in Quit Using AI Like Google: 5 Stanford Hacks For 10x Better Answers. These additional techniques go beyond simple verification to help you capture a unique, human-like voice in every answer.

1. How The Process Works

The model generates an answer, then it generates questions to test that answer, answers them, and finally fixes the original answer. It sounds like a lot of steps, but you can do it in one prompt.

2. The Template

Task: [Your Question]

Step 1: Provide your initial answer.

Step 2: Generate 5 verification questions that would find errors in your answer.

Step 3: Answer each verification question strictly.

Step 4: Provide your final, corrected answer based on the verification.

I used this when I asked about a specific historical event.

Task: Explain the fall of the Roman Empire.

Step 1: The AI gave a generic answer.

Step 2 (Verification): It asked itself, "Did I mention economic inflation? Did I mention the Visigoths correctly?"

Step 3: It answered those checks.

Step 4: The final answer was much more accurate and detailed.
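The single-prompt template works, but the CoVe paper's "factored" variant answers each verification question in a separate request, so the model cannot just copy the mistakes in its draft. Here is a minimal Python sketch of that loop. It assumes a hypothetical `ask(prompt) -> str` function wrapping whatever model you use; `fake_ask` returns canned text so the control flow can run without any API.

```python
from typing import Callable

# Hypothetical: any function that sends a prompt to your model and returns its reply.
Ask = Callable[[str], str]


def chain_of_verification(question: str, ask: Ask, n_checks: int = 5) -> str:
    # Step 1: initial answer.
    draft = ask(f"Task: {question}\nProvide your initial answer.")

    # Step 2: verification questions aimed at the draft's weak spots.
    plan = ask(
        f"Task: {question}\nDraft answer:\n{draft}\n"
        f"Generate {n_checks} verification questions that would find errors "
        "in the draft. Put one question per line."
    )
    checks = [line.strip() for line in plan.splitlines() if line.strip()][:n_checks]

    # Step 3: answer each check on its own, without the draft in view,
    # so the model cannot simply repeat its earlier mistakes.
    findings = [f"Q: {q}\nA: {ask(q)}" for q in checks]

    # Step 4: rewrite the draft using the verification results.
    return ask(
        f"Task: {question}\nDraft answer:\n{draft}\n"
        "Verification results:\n" + "\n".join(findings) + "\n"
        "Provide your final, corrected answer based on the verification."
    )


def fake_ask(prompt: str) -> str:
    """Canned replies standing in for a real model, so the flow can run offline."""
    if "verification questions" in prompt:
        return "Did I mention economic inflation?\nDid I mention the Visigoths correctly?"
    if "final, corrected" in prompt:
        return "FINAL ANSWER"
    if prompt.startswith("Task:"):
        return "DRAFT ANSWER"
    return "CHECKED"


print(chain_of_verification("Explain the fall of the Roman Empire.", fake_ask))  # FINAL ANSWER
```

The trade-off is cost: this version makes one request per verification question plus three more, while the single-prompt template makes one.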

Part 4: Why Are Negative Examples Important For Advanced Prompt Engineering?

Anthropic discovered something interesting. Showing the AI what not to do is just as important as showing it what to do.

In Advanced Prompt Engineering, we call this "Few-Shot with Negative Examples."

1. The Concept Of "Bad Examples"

Usually, we give examples like "Here is a good email." But the AI might still make mistakes. If you show it a bad email and explain why it is bad, the AI learns the boundaries.

2. The Template

I need you to write catchy headlines.

GOOD Example: "5 Ways to Save Money Today"

BAD Example: "SAVE MONEY NOW!!! URGENT!!!"

Why it's bad: It uses all caps and sounds like spam.

Now complete: Write 3 headlines for a gardening shop.

Why This Template Is Genius (Technical Analysis)

This prompt structure leverages three elements that shape how the AI responds:

A. The "Negative Constraint" Technique

Instead of just telling the AI to "do a good job" (which is very vague), you show it exactly what "bad" looks like.

When you provide the BAD Example: "SAVE MONEY NOW!!! URGENT!!!", you are essentially setting up an electric fence. The AI learns to steer clear of false urgency, all caps, and spammy tones.

B. Providing Logic (Chain Of Thought)

The most crucial line in your prompt is actually this one: "Why it's bad: It uses all caps and sounds like spam."

This is where you teach the AI how to think. You aren't just giving an example; you are explaining the principle. Thanks to this line, the AI understands: "Ah, the user hates all-caps and hates spammy tones."

Even if you later switch topics to Real Estate or Finance in the same conversation, it can apply this core principle and avoid making the same mistakes.

C. Creating Contrast

Placing the GOOD Example right next to the BAD Example creates a distinct contrast. Since AI is a probability-based language model, seeing two extremes helps it "calibrate" its response to the exact sweet spot you want: Engaging, but not over-the-top.
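If you reuse this pattern across many tasks, it helps to store the examples as data and render the prompt from them, so each example keeps its label and its explanation together. A minimal sketch; the `Example` class and the `few_shot_with_negatives` function are hypothetical names that reproduce the headline template above.

```python
from dataclasses import dataclass


@dataclass
class Example:
    text: str
    good: bool
    why: str = ""  # the explanation is what teaches the principle


def few_shot_with_negatives(instruction: str, examples: list[Example], task: str) -> str:
    lines = [instruction, ""]
    for ex in examples:
        label = "GOOD" if ex.good else "BAD"
        lines.append(f'{label} Example: "{ex.text}"')
        if ex.why:
            lines.append(f"Why it's {'good' if ex.good else 'bad'}: {ex.why}")
    lines += ["", f"Now complete: {task}"]
    return "\n".join(lines)


prompt = few_shot_with_negatives(
    "I need you to write catchy headlines.",
    [
        Example("5 Ways to Save Money Today", good=True),
        Example("SAVE MONEY NOW!!! URGENT!!!", good=False,
                why="It uses all caps and sounds like spam."),
    ],
    "Write 3 headlines for a gardening shop.",
)
print(prompt)
```

The GOOD example is rendered directly before the BAD one, preserving the side-by-side contrast described above.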

Part 5: How Does Structured Thinking Help With Advanced Prompt Engineering?

OpenAI uses a method called the "Structured Thinking Protocol." This is great for complex math or logic problems.

If you rush the AI, it will guess. Advanced Prompt Engineering teaches us to slow the AI down.

1. Thinking In Layers

You must force the model to think in steps. You want it to understand the problem, analyze it, strategize, and only then execute the answer.

2. The Template

Before answering, complete these steps:

[UNDERSTAND]

Restate the problem in your own words.

[ANALYZE]

Break down the problem.

List assumptions.

[STRATEGIZE]

Outline 2 ways to solve this.

[EXECUTE]

Provide your final answer.

I use this when I have a difficult business decision. For example, "Should I buy a house or rent?" The AI breaks down the costs, the market risks, and my personal situation before giving a number. The answer is always deeper than a standard chat response.
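A small helper can keep the four stages in a fixed order while you swap the problem in. This is a sketch under stated assumptions: the function name and the `Problem:` line it prepends are illustrative additions, not part of the template above.

```python
STAGES = {
    "UNDERSTAND": ["Restate the problem in your own words."],
    "ANALYZE": ["Break down the problem.", "List assumptions."],
    "STRATEGIZE": ["Outline 2 ways to solve this."],
    "EXECUTE": ["Provide your final answer."],
}


def structured_thinking_prompt(problem: str, stages: dict[str, list[str]] = STAGES) -> str:
    # Stages are emitted in insertion order, so the model must understand,
    # analyze, and plan before it reaches the final answer.
    parts = [f"Problem: {problem}", "", "Before answering, complete these steps:"]
    for name, steps in stages.items():
        parts.append(f"\n[{name}]")
        parts.extend(f"- {s}" for s in steps)
    return "\n".join(parts)


print(structured_thinking_prompt("Should I buy a house or rent?"))
```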

Part 6: Does Confidence-Weighted Prompting Fix Advanced Prompt Engineering Accuracy?

Google DeepMind uses a technique where they ask the model how confident it is.

The problem with standard prompting is that the AI always sounds 100% sure, even when it is wrong. Advanced Prompt Engineering allows us to see the doubt.

1. Asking For A Percentage

You can ask the AI to rate its confidence from 0% to 100%.

2. The Template

Answer this question: [Your Question]

For your answer, provide:

Your primary answer

Confidence level (0-100%)

Key assumptions you are making

Alternative answer if you are less than 80% confident

3. Why This Is A Game Changer

If I ask, "Will it rain in London on May 5th next year?", a basic prompt might simply guess "Yes." With this technique, the AI answers more like this: "I can’t know for sure. The best answer is: maybe. In London, early May often has a real chance of rain, roughly one day in three."
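Because the template asks for an explicit percentage, you can also read that number in code and send low-confidence answers to a human or a second pass. A minimal sketch, assuming the model sticks to the "Confidence level: NN%" wording; the regex, the function names, and the 80% cut-off are illustrative choices. Keep in mind the score is the model's own estimate, not a measured probability.

```python
import re
from typing import Optional

# Matches e.g. "Confidence level: 35%" or "confidence: 92 %" (case-insensitive).
CONFIDENCE_RE = re.compile(r"confidence(?: level)?\s*[:=]?\s*(\d{1,3})\s*%", re.IGNORECASE)


def confidence_prompt(question: str, threshold: int = 80) -> str:
    return (
        f"Answer this question: {question}\n\n"
        "For your answer, provide:\n"
        "- Your primary answer\n"
        "- Confidence level (0-100%)\n"
        "- Key assumptions you are making\n"
        f"- Alternative answer if you are less than {threshold}% confident"
    )


def read_confidence(reply: str) -> Optional[int]:
    """Pull the self-reported confidence out of a reply, or None if it is missing."""
    match = CONFIDENCE_RE.search(reply)
    if match is None:
        return None
    return min(int(match.group(1)), 100)


def needs_review(reply: str, threshold: int = 80) -> bool:
    # A missing score counts as low confidence: the model ignored the format.
    score = read_confidence(reply)
    return score is None or score < threshold


reply = "Primary answer: maybe.\nConfidence level: 35%\nKey assumptions: typical May weather."
print(read_confidence(reply), needs_review(reply))  # 35 True
```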

Part 7: How Do Boundaries Affect Advanced Prompt Engineering Results?

This technique comes from the engineers at Anthropic. It is called "Context Injection with Boundaries."

Sometimes, you want the AI to only use the information you give it. You don't want it to use its outside knowledge from the internet. Advanced Prompt Engineering is about control.

1. Setting The Fence

You paste your document (like a resume or a manual) and tell the AI: "If the answer is not in this text, say you don't know."

2. The Template

[CONTEXT]

[Paste your text here]

[FOCUS]

Only use information from CONTEXT to answer. If the answer is not there, say "Insufficient information."

[TASK]

[Your Question]

3. Real-World Application

Imagine you are a customer support agent. You have a manual for a new product. You paste that manual into Claude or ChatGPT.

If a customer asks a question, you want the answer to come strictly from the manual. You don't want the AI to guess based on other products. This technique keeps the AI tied to your document instead of letting it fill gaps with outside knowledge.
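In a support tool, you would fill this template from code and check for the refusal phrase before showing anything to a customer. A minimal sketch: `bounded_prompt`, `is_refusal`, and the sample "X200 kettle" manual text are all hypothetical.

```python
REFUSAL = "Insufficient information."


def bounded_prompt(context: str, question: str) -> str:
    # Labeled sections make it unambiguous which text counts as allowed knowledge.
    return (
        "[CONTEXT]\n"
        f"{context.strip()}\n\n"
        "[FOCUS]\n"
        "Only use information from CONTEXT to answer. "
        f'If the answer is not there, say "{REFUSAL}"\n\n'
        "[TASK]\n"
        f"{question.strip()}"
    )


def is_refusal(answer: str) -> bool:
    # Lets calling code detect the "not in the manual" case and escalate it.
    return answer.strip().strip('"').lower().startswith("insufficient information")


# Hypothetical manual excerpt, for illustration only.
manual = "The X200 kettle holds 1.7 litres. Descale it every 4 weeks."
print(bounded_prompt(manual, "How often should I descale the kettle?"))
```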

This technique of locking the AI to a specific document is the most basic form of a powerful discipline. However, simply pasting text isn't enough for complex systems; you must know how to structure that data so the AI retrieves it perfectly.

If you are ready to move from simple 'context injection' to building robust knowledge bases, read my deep dive: Stop Guessing, Start Building: A Guide To Context Engineering. It is the definitive guide to providing perfect information for superior results.

Part 8: Why Is Iterative Refinement Vital For Advanced Prompt Engineering?

No writer writes a perfect book in the first draft. The same is true for AI. OpenAI researchers use "Iterative Refinement."

In Advanced Prompt Engineering, we accept that the first result is just a draft.

1. The Loop

You ask the AI to write something. Then you ask it to critique itself. Then you ask it to rewrite it.

2. The Template

[ITERATION 1]

Create a draft of a sales email.

[ITERATION 2]

Review the output above. Find 3 weaknesses or boring parts.

[ITERATION 3]

Rewrite the output fixing all the weaknesses.

I used to spend hours editing AI writing. Now, I just use this prompt. The jump in quality from Iteration 1 to Iteration 3 is huge. The writing becomes sharper and more human. It is like having an editor inside the chat.
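Through an API, the same draft, critique, rewrite loop can run as separate requests, and you can repeat the last two steps more than once. A minimal sketch, assuming a hypothetical `ask(prompt) -> str` model wrapper; `numbered_reply` is a stub that just numbers its calls so the flow is visible.

```python
from typing import Callable

# Hypothetical: any function that sends a prompt to your model and returns its reply.
Ask = Callable[[str], str]


def refine(task: str, ask: Ask, rounds: int = 1, n_weaknesses: int = 3) -> str:
    draft = ask(task)  # Iteration 1: first draft
    for _ in range(rounds):
        # Iteration 2: self-critique of the current draft
        critique = ask(
            f"Review the draft below. Find {n_weaknesses} weaknesses or boring parts.\n\n{draft}"
        )
        # Iteration 3: rewrite the draft using that critique
        draft = ask(
            "Rewrite the text fixing all the weaknesses listed.\n\n"
            f"TEXT:\n{draft}\n\nWEAKNESSES:\n{critique}"
        )
    return draft


log: list[str] = []


def numbered_reply(prompt: str) -> str:
    """Stub model: records each prompt and replies with its call number."""
    log.append(prompt)
    return f"<reply {len(log)}>"


print(refine("Create a draft of a sales email.", numbered_reply))  # <reply 3>
```

With `rounds=1` this matches the three-iteration template; higher values trade extra requests for further polishing.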

Part 9: How Does Constraint-First Prompting Change Advanced Prompt Engineering?

Google Brain researchers found that if you put constraints (rules) before the task, the AI follows them better.

In standard prompting, we often put the rules at the end. "Write a story, make it short, don't use the letter E." By the time the AI reads the end, it has already started thinking about the story. Advanced Prompt Engineering flips this.

1. Hard Vs. Soft Constraints

You should clearly list what must happen (Hard Constraints) and what you want to happen (Soft Preferences).

2. The Template

HARD CONSTRAINTS (Cannot be violated):

Must be under 200 words.

Must not use the word "Delve."

SOFT PREFERENCES (Try your best):

Use a funny tone.

TASK: Write a product description for a new soap.

Confirm you understand the constraints before starting.

It creates a "box" for the AI to work inside. When the AI knows the rules first, it plans its output to fit those rules. I find this very useful for coding or writing specific formats like tweets or meta descriptions.
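Hard constraints are also the ones you can check mechanically once the output comes back. A minimal sketch that builds the prompt in constraint-first order and then verifies the two hard rules from the template. The function names, the whitespace-based word count, and the whole-word match for banned words are assumptions.

```python
import re


def constraint_first_prompt(hard: list[str], soft: list[str], task: str) -> str:
    # Rules come BEFORE the task so the model can plan around them.
    lines = ["HARD CONSTRAINTS (Cannot be violated):"]
    lines += [f"- {c}" for c in hard]
    lines += ["", "SOFT PREFERENCES (Try your best):"]
    lines += [f"- {p}" for p in soft]
    lines += ["", f"TASK: {task}", "", "Confirm you understand the constraints before starting."]
    return "\n".join(lines)


def check_hard_constraints(text: str, max_words: int, banned: list[str]) -> list[str]:
    """Return the hard constraints the output broke (an empty list means all passed)."""
    problems = []
    n_words = len(text.split())
    if n_words >= max_words:  # the rule is "under" the limit
        problems.append(f"has {n_words} words (limit: under {max_words})")
    for word in banned:
        if re.search(rf"\b{re.escape(word)}\b", text, re.IGNORECASE):
            problems.append(f'uses banned word "{word}"')
    return problems


prompt = constraint_first_prompt(
    ["Must be under 200 words.", 'Must not use the word "Delve."'],
    ["Use a funny tone."],
    "Write a product description for a new soap.",
)
print(prompt)
print(check_hard_constraints("Let us delve into bubbles.", max_words=200, banned=["Delve"]))
```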

Part 10: Can Multi-Perspective Prompting Improve Advanced Prompt Engineering?

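Anthropic's "Constitutional AI" method has the model critique and revise its own answers against a written set of principles to make its responses less harmful. Multi-perspective prompting borrows the spirit of that idea: checking an answer from several angles before settling on it.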

Advanced Prompt Engineering uses this to get a balanced view. Instead of one answer, you ask for three perspectives.

1. Wearing Multiple Hats

You ask the AI to analyze a problem from different viewpoints: Technical, Business, and Emotional.

2. The Template

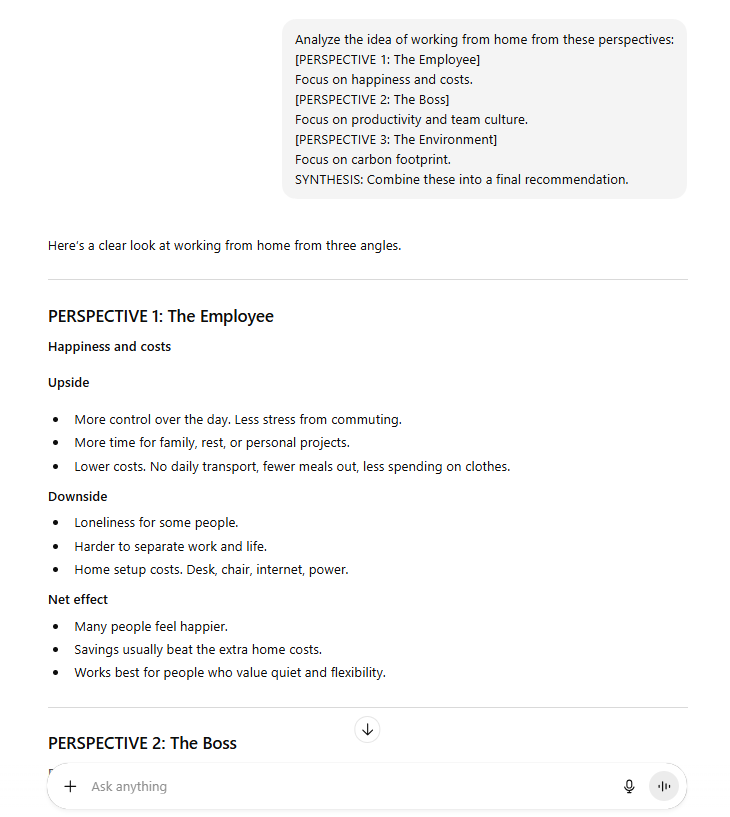

Analyze the idea of working from home from these perspectives:

[PERSPECTIVE 1: The Employee]

Focus on happiness and costs.

[PERSPECTIVE 2: The Boss]

Focus on productivity and team culture.

[PERSPECTIVE 3: The Environment]

Focus on carbon footprint.

SYNTHESIS: Combine these into a final recommendation.

This gives you a much smarter answer. It stops the AI from being one-sided. I use this when I am trying to understand a complex news topic or make a fair decision for my team.
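Keeping the perspectives in a dictionary makes it easy to swap in the Technical / Business / Emotional set or any other lenses. A minimal sketch; `multi_perspective_prompt` is a hypothetical helper that reproduces the template's layout.

```python
def multi_perspective_prompt(topic: str, perspectives: dict[str, str]) -> str:
    lines = [f"Analyze {topic} from these perspectives:"]
    # Each viewpoint gets its own numbered section and focus instruction.
    for i, (who, focus) in enumerate(perspectives.items(), start=1):
        lines += ["", f"[PERSPECTIVE {i}: {who}]", focus]
    lines += ["", "SYNTHESIS: Combine these into a final recommendation."]
    return "\n".join(lines)


print(multi_perspective_prompt(
    "the idea of working from home",
    {
        "The Employee": "Focus on happiness and costs.",
        "The Boss": "Focus on productivity and team culture.",
        "The Environment": "Focus on carbon footprint.",
    },
))
```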

Part 11: Is Meta-Prompting The Ultimate Advanced Prompt Engineering Trick?

This is the "Nuclear Option." It is what OpenAI's red team uses.

Meta-Prompting means asking the AI to write the prompt for you. Sometimes, we are not good at explaining what we want. But the AI knows exactly what it needs to hear to give a good result.

Instead of struggling to write a long prompt, just ask the AI to help.

The Template

I need to accomplish: [Your Goal]

Your Task:

Analyze what would make the PERFECT prompt for this goal.

Write that perfect prompt.

Then execute it.

Why I Love This

I had to write a complex legal disclaimer (I am not a lawyer). I didn't know the right words. I used Meta-Prompting. I told the AI: "Write a prompt that will make you act like a lawyer and write a disclaimer."

The AI wrote a prompt that was 10 times better than mine. Then it used that prompt to write the disclaimer. It is like hiring a prompt engineer for free.
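The template above does everything in one message. If you work through an API, a variant is to split it into two calls, which lets you read, save, or edit the generated prompt before it runs. This two-call split is an adaptation, not part of the template above. The sketch assumes a hypothetical `ask(prompt) -> str` wrapper, and `canned` is a stub for illustration.

```python
from typing import Callable

# Hypothetical: any function that sends a prompt to your model and returns its reply.
Ask = Callable[[str], str]


def meta_prompt(goal: str, ask: Ask) -> tuple[str, str]:
    # Call 1: the model designs the prompt instead of answering.
    generated = ask(
        f"I need to accomplish: {goal}\n\n"
        "Analyze what would make the PERFECT prompt for this goal. "
        "Reply with only that prompt, nothing else."
    ).strip()
    # Call 2: run the generated prompt as a fresh request.
    result = ask(generated)
    return generated, result  # keep the prompt so you can reuse or edit it


def canned(prompt: str) -> str:
    # Stub model for illustration only.
    if prompt.startswith("I need to accomplish:"):
        return "Act as an experienced lawyer. Write a short legal disclaimer for a blog."
    return "(disclaimer text would appear here)"


generated, result = meta_prompt("a legal disclaimer for my blog", canned)
print(generated)
print(result)
```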

Summary And Next Steps

These 10 techniques are the foundation of Advanced Prompt Engineering.

You do not need to be a coder or a genius to use them. You just need to change your habit. Stop asking simple questions. Start building simulators.

Here is a quick recap of what to do next:

Stop using "you" questions (e.g., "What do you think?").

Start assigning Personas (e.g., "Act as an expert").

Use the Chain-of-Verification to check facts.

Give negative examples to fix tone.

Try Meta-Prompting when you are stuck.

The gap between a beginner and an expert is simply knowing how to talk to the machine. Now, you have the manual. Go try one of these techniques right now.
