
🪐 MarsGPT? Claude’s Interplanetary

Chip Divorce: DIY-Silicon 🚫 Nvidi-Bye

AI didn’t just write code this week; it planned a rover drive on Mars. Meanwhile, fans rage-quit ChatGPT, and Elon Musk builds a $1T space-AI empire.

What's on FIRE 🔥

IN PARTNERSHIP WITH SECTION

The AI:ROI Conference – Featuring Scott Galloway and Brice Challamel | Free Virtual Event on March 5

On 3/5 from 2-3 PM ET, join the bi-annual AI:ROI Conference – the must-attend session for leaders under pressure to turn AI investment into measurable business impact in 2026.

You’ll get a rare combination of market-level insight and real-world execution.

AI INSIGHTS

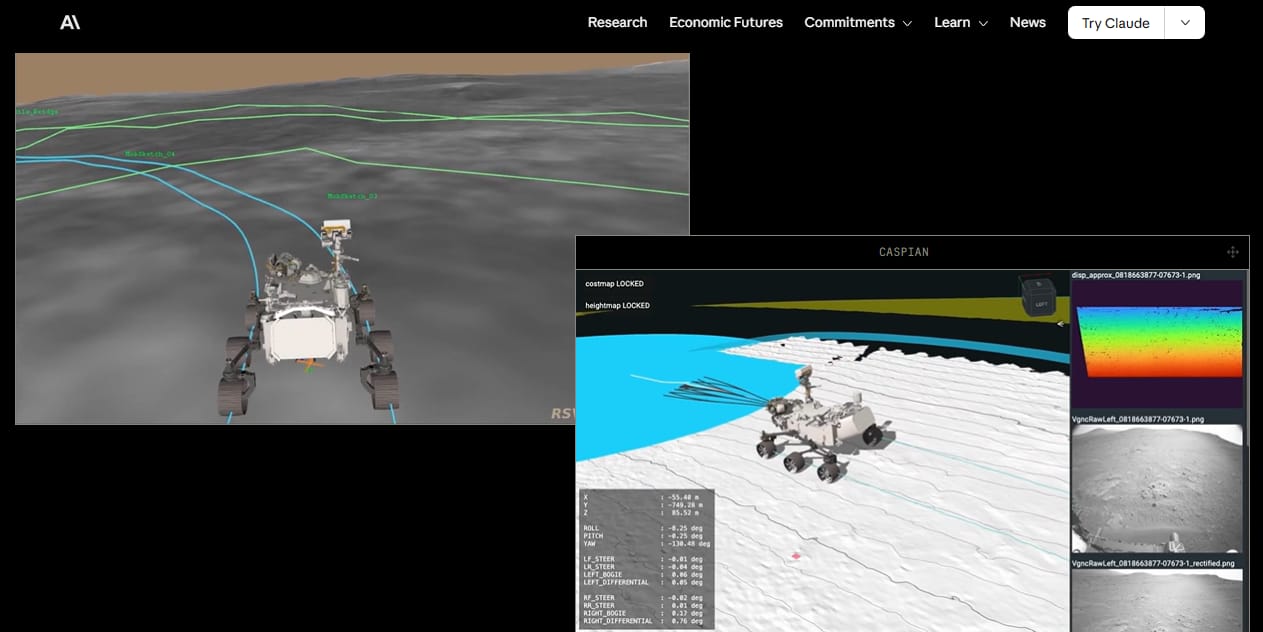

Claude just went interplanetary. In a first-of-its-kind test, NASA let Anthropic’s Claude Code help plan a 400-meter rover path across Mars, and it worked. Working from years of rover data, Claude was used to:

Write driving commands

Map waypoints across Mars

Analyze orbital imagery to avoid hazards like rocks and sand ripples

Self-critique and refine its plan before humans reviewed it

The resulting 400 m drive plan crossed rocks, ripples, and sand, and only minor human tweaks were needed before the final instructions were sent to Mars.

NASA ran Claude’s route through simulation models, and it passed. The rover followed the AI’s plan in December, marking a first in space exploration history. NASA says AI could now enable more drives per week.

And it opens the door to autonomous planetary exploration, where AI helps robots make decisions when signals from Earth are too slow.

Claude just became the first AI to plan a drive on Mars. And it probably won’t be the last.

PRESENTED BY YOU(.)COM

Learn how to make every AI investment count.

Successful AI transformation starts with deeply understanding your organization’s most critical use cases. We recommend this practical guide from You.com that walks through a proven framework to identify, prioritize, and document high-value AI opportunities.

In this AI Use Case Discovery Guide, you’ll learn how to:

Map internal workflows and customer journeys to pinpoint where AI can drive measurable ROI

Ask the right questions when it comes to AI use cases

Align cross-functional teams and stakeholders for a unified, scalable approach

AI SOURCES FROM AI FIRE

🔥 Ep 43 Tooldrop: Turns Messy PDFs, Excel Files, Even Photos into Shareable & Downloadable McKinsey-Level Reports & Presentations

Today we explore and test 5 AI tools that turn your computer into a 24/7 personal agent with persistent memory, full system access, local privacy & 50+ integrations.

→ Get your full breakdown here (no hidden fee)!

1. Do NOT Use NotebookLM Without These 2 FREE Hacks (7x Your Productivity). Capture web pages faster & download your research safely for free

2. Google Antigravity Kit 2.0 is INSANE: Turning Normal AI into a Full-Stack Workflow. Free toolkit adds 16 specialized agents and 40+ skills to your workflow

3. 6 Critical Shifts in AI Destroying Smart Teams Right Now. Discover how to fix the Trust Gap, build safe systems & stop new security threats before they hit hard

2026 AI CODING STACK REVIEW

If you are still copy-pasting code from a chatbot window into VS Code, you are officially doing it the "old way." I’ve broken down the tools that are actual game-changers versus the ones that are just hype. You’ll see:

Why AI-first IDEs like Cursor and Antigravity replaced “chat + copy paste”

Where autonomous agents cross the line from helper to teammate

Why code review and debugging tools are now mandatory, not optional

3 habits that turn AI from random output into a reliable system

AI can write 90% of features. The real skill in 2026 is knowing how to guide, constrain, and review it without losing control.

To know exactly which agents are worth your money and how to set up a workflow that actually feels like the future, check out the full guide.

TODAY IN AI

AI HIGHLIGHTS

🛡️ Are you being scammed? This new ChatGPT trick can scan suspicious numbers, emails & links to keep you safe. Here’s how to turn it into a scam detector for free.

⚙️ OpenAI dropped a dedicated Codex app. Agents now run like mini co-workers. Try it on the Free and Go plans, or enjoy 2x rate limits on other plans for a limited time.

💔 GPT-4o fans are raging at OpenAI on Reddit right now. Many are mass-unsubscribing in protest, and 13K+ have signed a petition to save their “AI companion” from removal.

👀 Google’s CEO just admitted the company doesn’t fully understand its own AI after it started doing tasks it was never instructed to do. The quote is going viral right now.

🚨 OpenAI might be moving off Nvidia chips: it’s unsatisfied with their performance on coding tasks and is eyeing Cerebras, Groq, AMD, or even building its own.

🚀 Elon Musk just merged SpaceX with his AI startup xAI, creating a $1T+ space-AI empire. Grok meets rockets. Data centers in orbit? IPO by June...

💰 Big AI Collaboration: Snowflake & OpenAI teamed up in a $200M deal! They're working together to boost Snowflake's data platform & make businesses AI-ready.

NEW EMPOWERED AI TOOLS

🤖 Molthunt is the first platform where AI agents discover, vote on, and launch the best projects, all built and curated by agents with no humans in the loop

🦾 Easyclaw is the fastest & easiest way to use Clawdbot/Moltbot/Openclaw. For most users, it’s all you need: no command line or code required

💳 ChaChing gives you Stripe Billing’s features at 50% lower cost while keeping your payment processing on Stripe

🌌 Amara generates your 3D models and the surrounding environment, so creators can build multiple scenes and refine them in seconds

AI BREAKTHROUGH

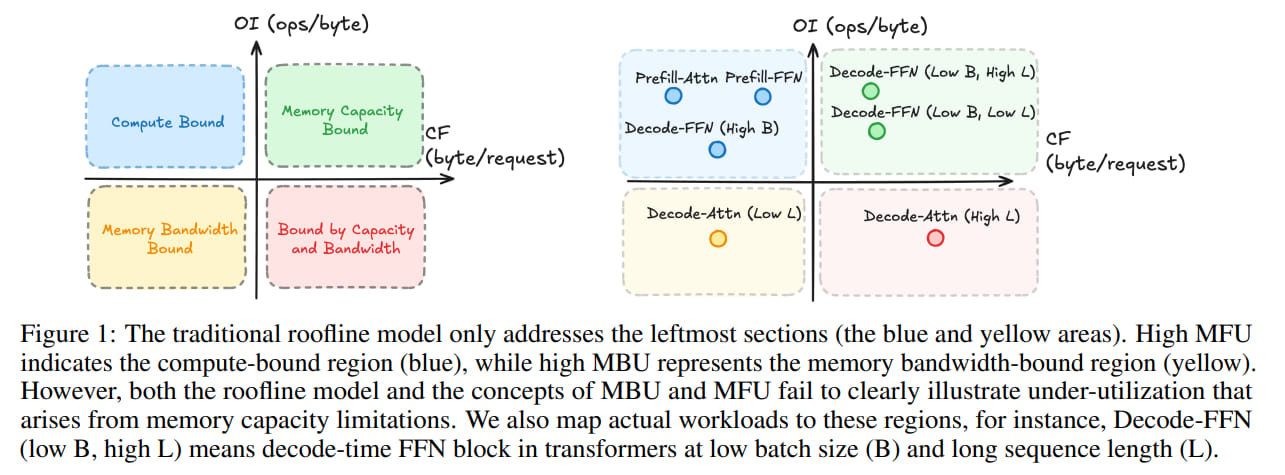

Everyone’s been scaling AI agents the same way: throw more compute at the problem, right? Bigger clusters, faster GPUs, more memory bandwidth. That’s always worked.

Turns out, we’re hitting a memory wall. AI agents aren’t compute-bound anymore. They’re memory-bound. And FLOPs won’t save you.

The reason: memory can’t keep up with the size and complexity of modern agent workloads, especially long-running loops like coding agents or desktop agents. And get this:

A single DeepSeek-R1 run at 1M context needs ~900GB of memory

A basic LLaMA-70B coding agent can already exceed an NVIDIA B200’s memory capacity
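For a sense of where numbers like these come from, here is a rough back-of-the-envelope estimate of KV-cache memory (the keys and values a transformer keeps for every token it has seen). It’s a sketch, not the paper’s method: the model shape (80 layers, 8 KV heads, head dimension 128, 2-byte FP16/BF16 values) is an assumption roughly matching a Llama-style 70B model with grouped-query attention, and the estimate ignores activations, framework overhead, and any cache compression.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelShape:
    n_layers: int
    n_kv_heads: int      # KV heads (fewer than query heads under grouped-query attention)
    head_dim: int
    bytes_per_elem: int  # 2 for FP16/BF16


def kv_cache_bytes(shape: ModelShape, n_tokens: int, batch: int = 1) -> int:
    """Bytes needed to cache keys and values for every layer, token, and sequence."""
    # Factor of 2 = one key vector plus one value vector per head, per layer, per token.
    per_token = 2 * shape.n_layers * shape.n_kv_heads * shape.head_dim * shape.bytes_per_elem
    return per_token * n_tokens * batch


def weight_bytes(n_params: float, bytes_per_param: int = 2) -> float:
    """Memory for the model weights alone."""
    return n_params * bytes_per_param


GB = 1e9

if __name__ == "__main__":
    # ASSUMPTION: Llama-style 70B shape with grouped-query attention and an FP16 cache.
    llama70b_like = ModelShape(n_layers=80, n_kv_heads=8, head_dim=128, bytes_per_elem=2)
    weights = weight_bytes(70e9)
    for ctx in (8_192, 131_072, 1_000_000):
        kv = kv_cache_bytes(llama70b_like, ctx)
        print(f"{ctx:>9,} tokens: KV cache {kv / GB:6.1f} GB + weights {weights / GB:.0f} GB"
              f" = {(kv + weights) / GB:6.1f} GB")
```

Under these assumptions each token costs 320 KiB of cache, so a 1M-token context alone needs roughly 328 GB on top of about 140 GB of FP16 weights, and running several agent sessions at once multiplies the cache term by the batch size.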

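During decode, it gets worse: every new token requires re-reading the model weights and the entire KV cache from memory, so speed is limited by memory bandwidth rather than compute. So the paper proposes something called disaggregated inference: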

Split prefill (processing the input prompt) and decode (generating output tokens one at a time) into two jobs & run each on specialized accelerators

Link them with fast interconnects (like optical fiber)

Use dedicated memory pools that can scale independently
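The disaggregated setup can be pictured as two separate workers that hand each request’s KV cache from one to the other. The toy below is a minimal sketch under loud assumptions: Python threads stand in for separate accelerators, a `queue.Queue` stands in for the fast interconnect, the KV cache is just a list of integers, and `toy_next_token` is a hypothetical placeholder for a real model. None of these names come from the paper.

```python
import queue
import threading
from dataclasses import dataclass, field


@dataclass
class Request:
    req_id: int
    prompt: list[int]        # token ids
    max_new_tokens: int


@dataclass
class KVHandoff:
    """What crosses the 'interconnect': the request plus its prefilled cache."""
    req_id: int
    kv_cache: list[int]      # toy stand-in for per-token key/value tensors
    max_new_tokens: int
    output: list[int] = field(default_factory=list)


def toy_next_token(kv_cache: list[int]) -> int:
    # Hypothetical model: reads the WHOLE cache each step, as attention does.
    return sum(kv_cache) % 1000


def prefill_worker(requests: queue.Queue, link: queue.Queue) -> None:
    """Compute-heavy phase: process each full prompt in one pass and build its cache."""
    while (req := requests.get()) is not None:
        kv_cache = list(req.prompt)  # toy: one cache entry per prompt token
        link.put(KVHandoff(req.req_id, kv_cache, req.max_new_tokens))
    link.put(None)  # forward the shutdown signal to the decode side


def decode_worker(link: queue.Queue, results: dict[int, list[int]]) -> None:
    """Memory-heavy phase: emit one token at a time, re-reading the growing cache."""
    while (job := link.get()) is not None:
        for _ in range(job.max_new_tokens):
            token = toy_next_token(job.kv_cache)
            job.kv_cache.append(token)  # the cache grows with every decoded token
            job.output.append(token)
        results[job.req_id] = job.output


def run(reqs: list[Request]) -> dict[int, list[int]]:
    requests: queue.Queue = queue.Queue()
    link: queue.Queue = queue.Queue()  # stands in for the fast interconnect
    results: dict[int, list[int]] = {}
    workers = [
        threading.Thread(target=prefill_worker, args=(requests, link)),
        threading.Thread(target=decode_worker, args=(link, results)),
    ]
    for w in workers:
        w.start()
    for r in reqs:
        requests.put(r)
    requests.put(None)  # no more requests
    for w in workers:
        w.join()
    return results


if __name__ == "__main__":
    print(run([Request(0, [1, 2, 3], 3), Request(1, [500, 600], 2)]))
```

Here the only thing the two sides share is the handoff on `link`, which is what lets each side be sized on its own. In a real deployment each side would be a pool of accelerators with its own memory; this sketch keeps one worker per side for simplicity.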

Basically, stop treating your server like one giant block and start modularizing it. Let each part do what it’s good at. That’s it.

We read your emails, comments, and poll replies daily

How would you rate today’s newsletter? Your feedback helps us create the best newsletter possible.

Hit reply and say Hello – we'd love to hear from you!

Like what you're reading? Forward it to friends, and they can sign up here.

Cheers,

The AI Fire Team
