- AI Fire

🧄 GPT-5.3 “Garlic”: The First Leaked Comprehensive Preview. What to Expect?

The bodybuilder era of AI is over. The gymnast era has begun and it explains why GPT-5.3 wins on cost, speed, and reasoning.

TL;DR BOX

As of early 2026, the AI landscape is watching one release very closely: GPT-5.3 "Garlic", OpenAI's answer to the challenge from Gemini 3 and Claude Opus 4.5. Unlike previous iterations, "Garlic" marks a strategic pivot toward Cognitive Density: building smaller, ultra-efficient models that prioritize "GPT-6 level" reasoning over raw scale.

The headline feature is a 400,000-token context window with reported "Perfect Recall", meaning the model should not lose details even in very long documents. Early leaked benchmarks suggest it outperforms competitors in coding and reasoning while running cheaper and faster than previous versions.

Key points

- Fact: GPT-5.3 "Garlic" is the result of merging the stability-focused Shallotpeat project with the experimental Garlic compression project.
- Mistake: Assuming a larger context window (like Gemini's 2M) is always better. "Garlic" focuses on Recall Precision, ensuring it doesn't "forget" information buried in large datasets.
- Action: Organize your high-density data (codebases, legal docs) now to take advantage of the 400K context window when the beta drops in late January/February 2026.

Critical insight

The release of "Garlic" signifies the end of the "Brute Force" AI era. The competition has moved from "who can build the biggest model" to "who can provide the most intelligence per dollar".

I. Introduction: When the CEO Says ‘Code Red’, Listen

Do you remember what happened at OpenAI headquarters last month? Yes, I’m talking about the “Code Red” event announced by Sam Altman that made everyone pay attention.

It wasn’t about servers crashing or hacks. This was about OpenAI losing ground to Google’s Gemini 3 and Anthropic’s Claude Opus 4.5.

I thought GPT-5.2 was OpenAI's answer. But no, it was just a temporary fix.

The real response is GPT-5.3, codenamed “Garlic.” A strange name but a smart one. A small clove can change an entire dish. This model does the same: compact, efficient and powerful.

This is OpenAI throwing a punch to get back on top, and if early reports are right, this one could land hard.

Here’s what GPT-5.3 Garlic actually does, how it stacks up and why you should care now.

II. The Leak That Started Everything

On December 2, 2025, The Information published a report that got everyone's attention. Mark Chen, OpenAI's Chief Research Officer, had shared internal details about a new model called GPT-5.3 "Garlic".

Source: The Information.

According to the report, Garlic was destroying internal benchmarks for coding and reasoning tasks. It was outperforming both Gemini 3 and Claude Opus 4.5 in critical areas.

But here's what makes this different from typical AI hype: developers are already testing this thing. Internal validation is happening right now. Beta previews are expected in early Q1 2026, which is basically now.

I don’t know about you but this got me excited.

III. What Exactly Is GPT-5.3 or ‘Garlic’?

Garlic represents a strategic shift. Instead of scaling size, OpenAI focused on density. The model compresses reasoning paths early in training.

It combines stability work with experimental compression research.

Key takeaways

- Merge of Shallotpeat and Garlic projects
- Focus on reasoning efficiency
- Smaller physical footprint
- Higher intelligence per parameter

This is the first time OpenAI has stopped trying to win by just using more power.

GPT-5.3 represents a fundamental shift in strategy. Instead of just building bigger models, OpenAI is building denser ones.

Think of it like the difference between a bodybuilder and a gymnast. The bodybuilder is massive but the gymnast is more efficient and can do things the bodybuilder can't even attempt.

This model is the result of merging two research tracks:

- Shallotpeat: An incremental GPT-5.x update focused on stability and bug fixes.
- Garlic (experimental branch): An experimental project to compress how AI thinks.

By teaching the model to discard redundant neural pathways early in the training process, OpenAI has created a system with "GPT-6 level" reasoning inside a much faster, physically smaller architecture.

I have spent months tracking these updates and this is a huge shift. Garlic is the gymnast (smaller and more efficient).

IV. The Features That Make Garlic Different

At first look, Garlic looks like just another big model announcement. Then you look closer and realize it plays by different rules.

1. The 400,000-Token Context Window (With Perfect Recall)

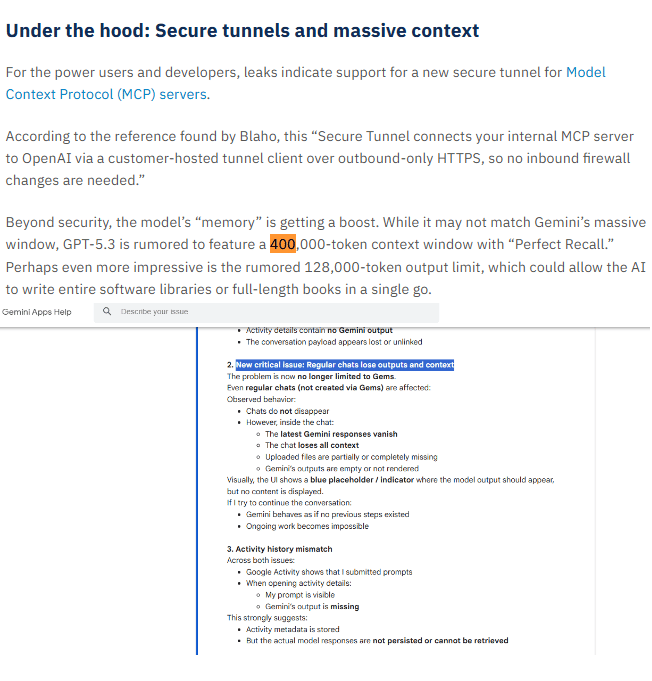

Garlic can handle 400,000 tokens. That's not a typo, four hundred thousand.

You could feed it entire books, massive codebases, weeks of chat history or your entire company's documentation and it can retain far more of that information than previous models.

Source: eWEEK and Google Support.

Now, you might think: "But doesn't Gemini 3 have a 2 million token context window?" Yes. But here's the problem: Gemini suffers from what's called "middle-of-the-context loss". The model forgets information buried in the middle of a massive context.

Garlic, however, reportedly uses a new attention mechanism that provides "Perfect Recall" across its entire 400K window. It actually remembers the information rather than merely storing it.
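If you want to check a recall claim like this yourself, the usual trick is a needle-in-a-haystack test: hide one fact at different depths of a long context and see whether the model can retrieve it. Here is a minimal sketch of that idea. Everything in it is an assumption for illustration: `ask_model` is a hypothetical placeholder for whatever model call you use, and `forgetful_model` is a toy stand-in that only "reads" the edges of its context, not the behavior of any real model.

```python
from typing import Callable, Dict, List


def build_haystack(filler: str, needle: str, n_sentences: int, depth: float) -> str:
    """Place `needle` at a relative depth (0.0 = start, 1.0 = end) inside filler text."""
    sentences = [filler] * n_sentences
    sentences.insert(int(depth * n_sentences), needle)
    return " ".join(sentences)


def recall_by_depth(
    ask_model: Callable[[str, str], str],
    needle: str,
    answer: str,
    question: str,
    depths: List[float],
    n_sentences: int = 1000,
) -> Dict[float, bool]:
    """Return {depth: True/False} for whether the model's reply contains the answer."""
    filler = "The sky was clear and the market opened without incident."
    results = {}
    for depth in depths:
        context = build_haystack(filler, needle, n_sentences, depth)
        reply = ask_model(context, question)
        results[depth] = answer.lower() in reply.lower()
    return results


def forgetful_model(context: str, question: str) -> str:
    """Toy stand-in (hypothetical): sees only the first and last 20% of the context."""
    edge = len(context) // 5
    visible = context[:edge] + context[-edge:]
    return "The code is 7421." if "7421" in visible else "I don't know."


if __name__ == "__main__":
    scores = recall_by_depth(
        forgetful_model,
        needle="The vault code is 7421.",
        answer="7421",
        question="What is the vault code?",
        depths=[0.0, 0.25, 0.5, 0.75, 1.0],
    )
    for depth, found in scores.items():
        print(f"depth={depth:.2f} recalled={found}")
```

Swap `forgetful_model` for a real model call and scale `n_sentences` up toward the window size. A model with genuinely uniform recall should find the needle at every depth, while middle-of-the-context loss shows up as misses around the center.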

2. The 128,000-Token Output Limit

Garlic can reportedly output 128,000 tokens in a single response. That means no chunking, no typing “continue from where you left off,” and no broken flow.

You can get a full app, a long legal brief, a software library or even a book-length draft in one coherent output. For complex work, this removes one of the biggest friction points in AI today.

3. Native Agent Capabilities

Usually, when you ask an AI model to act as an agent on a complex task, it fails most of the time. But Garlic isn’t pretending to be an agent. It is one.

Tool use is built in. It understands file systems, edits multiple files, runs tests, makes API calls, queries databases and debugs systems the way a real developer would.

Early agents = "LLMs + wrappers for tools, prompt chains, external scripts". Source: DEV Community.

APIs, execution and tools aren’t add-ons. They’re part of how the model thinks.

This is the shift from chatbot to worker.
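To make the "LLM + wrappers" pattern concrete, here is a rough sketch of what that older style looks like: the application, not the model, parses each reply, runs the requested tool, and feeds the result back in a loop. This is only an illustration of the wrapper approach. The tool names, the JSON message shape, and `scripted_model` are all hypothetical, and nothing here reflects how Garlic works internally.

```python
import json
from typing import Callable, Dict, List


def read_file(path: str) -> str:
    """Hypothetical tool: reads from a tiny in-memory 'file system'."""
    fake_fs = {"app.py": "print('hello')"}
    return fake_fs.get(path, "")


def run_tests(target: str) -> str:
    """Hypothetical tool: pretends to run a test suite."""
    return f"1 passed in {target}"


TOOLS: Dict[str, Callable[[str], str]] = {"read_file": read_file, "run_tests": run_tests}


def run_agent(model: Callable[[List[dict]], str], task: str, max_steps: int = 5) -> str:
    """Wrapper loop: ask the model, execute any tool it requests, feed the result back."""
    messages = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = json.loads(model(messages))
        if reply["type"] == "final":
            return reply["content"]
        result = TOOLS[reply["tool"]](reply["argument"])
        messages.append({"role": "tool", "name": reply["tool"], "content": result})
    return "stopped: step limit reached"


def scripted_model(messages: List[dict]) -> str:
    """Stand-in for a real model: reads a file, runs tests, then answers."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if len(tool_results) == 0:
        return json.dumps({"type": "tool", "tool": "read_file", "argument": "app.py"})
    if len(tool_results) == 1:
        return json.dumps({"type": "tool", "tool": "run_tests", "argument": "app.py"})
    summary = f"Done. Test output: {tool_results[-1]['content']}"
    return json.dumps({"type": "final", "content": summary})


if __name__ == "__main__":
    print(run_agent(scripted_model, "Check app.py"))
```

Every piece of glue in `run_agent` (parsing, dispatch, the step limit) is something the wrapper author has to get right, which is where this style tends to break.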

4. Self-Checking Before Answering

One of the biggest pain points with current AI models is hallucination: they confidently give you completely wrong information.

Garlic introduces a self-checking stage before it responds.

AI hallucination. Source: OpenAI.

Before answering, it pauses to verify. It checks whether it knows or whether it’s guessing. If it isn’t sure, it reassesses.

The result is far fewer hallucinations and fewer confident but wrong answers.

For developers, researchers, lawyers and anyone who needs correctness, this matters more than raw intelligence.

5. High-Density Pre-Training

The most important change in Garlic is what OpenAI is calling Enhanced Pre-Training Efficiency (EPTE).

Traditional models learn by seeing massive amounts of data and creating sprawling networks of associations.

Garlic's training process involves a "pruning" phase where the model actively condenses information.

The result is a model that’s smaller, faster, cheaper to run and still deeply capable. Concentrated intelligence without massive overhead.

That’s the garlic metaphor in action: fewer cloves, stronger flavor.

Source: Vertu.

V. How Garlic Compares to the Competition

This is my favorite part and it’s the reason Garlic was born. It exists to compete directly with the two giants dominating right now: Google's Gemini 3 and Anthropic's Claude Opus 4.5.

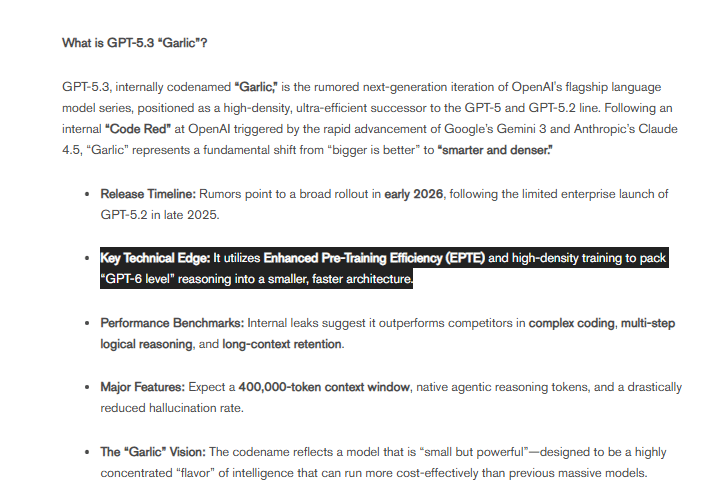

Here's how they compare based on leaked internal benchmarks.

1. GPT-5.3 ‘Garlic’ vs. Google Gemini 3

The Battle: Scale vs. Density

| Feature | GPT-5.3 "Garlic" | Google Gemini 3 |
|---|---|---|
| Context Window | 400K tokens (perfect recall) | 2M tokens (middle-loss issues) |
| Multimodal | Text-focused | Video, audio, native image gen |
| Reasoning (GDP-Val) | 70.9% | 53.3% |
| Coding (HumanEval+) | 94.2% | 89.1% |
| Best For | Pure text, code, complex reasoning | Messy real-world multimodal data |

The Verdict: If you need to analyze a 3-hour video, use Gemini. If you need to write the backend for a banking app, use Garlic.

2. GPT-5.3 ‘Garlic’ vs. Claude Opus 4.5

The Battle: For the Developer's Soul

| Feature | GPT-5.3 "Garlic" | Claude Opus 4.5 |
|---|---|---|
| Context Window | 400K tokens | 200K tokens |
| Reasoning | 70.9% | 59.6% |
| Coding | 94.2% | 91.5% |
| Speed | Ultra-fast | Fast (but slower) |
| Cost | ~0.5x Opus pricing | Premium pricing |
| Vibe | Efficient, direct | Warm, human-readable |

The Verdict: Claude won developers' hearts with its warmth and clean, readable code. But it's expensive and slow. Garlic aims to match Opus's coding proficiency at 2x the speed and 0.5x the cost. If it delivers, the market will shift overnight.
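It helps to run the "2x the speed, 0.5x the cost" claim against your own numbers before getting excited. The sketch below scales a measured baseline by those rumored ratios and then corrects for failed attempts, since a cheaper model that needs more retries is not automatically cheaper. The baseline figures are placeholders I made up for the example, not real pricing for any model.

```python
def projected(baseline_cost: float, baseline_seconds: float,
              cost_ratio: float = 0.5, speed_ratio: float = 2.0) -> tuple:
    """Scale a measured baseline by the rumored ratios (0.5x cost, 2x speed)."""
    return baseline_cost * cost_ratio, baseline_seconds / speed_ratio


def cost_per_success(cost_per_task: float, success_rate: float) -> float:
    """Effective cost per usable result once failed attempts are counted."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_task / success_rate


if __name__ == "__main__":
    # Placeholder baseline: replace with numbers measured on your own workload.
    cost, seconds = projected(baseline_cost=0.40, baseline_seconds=30.0)
    print(f"projected: ${cost:.2f} per task, {seconds:.0f}s per task")
    print(f"baseline at 90% success: ${cost_per_success(0.40, 0.90):.3f}")
    print(f"rumored model at 60% success: ${cost_per_success(cost, 0.60):.3f}")
```

The takeaway from the arithmetic: at half the price per attempt, the new model stays cheaper per usable result only while its success rate is above half of the baseline's.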

GPT-5.3 “Garlic” vs Google Gemini 3 vs. Claude Opus 4.5. Source: CometAPI.

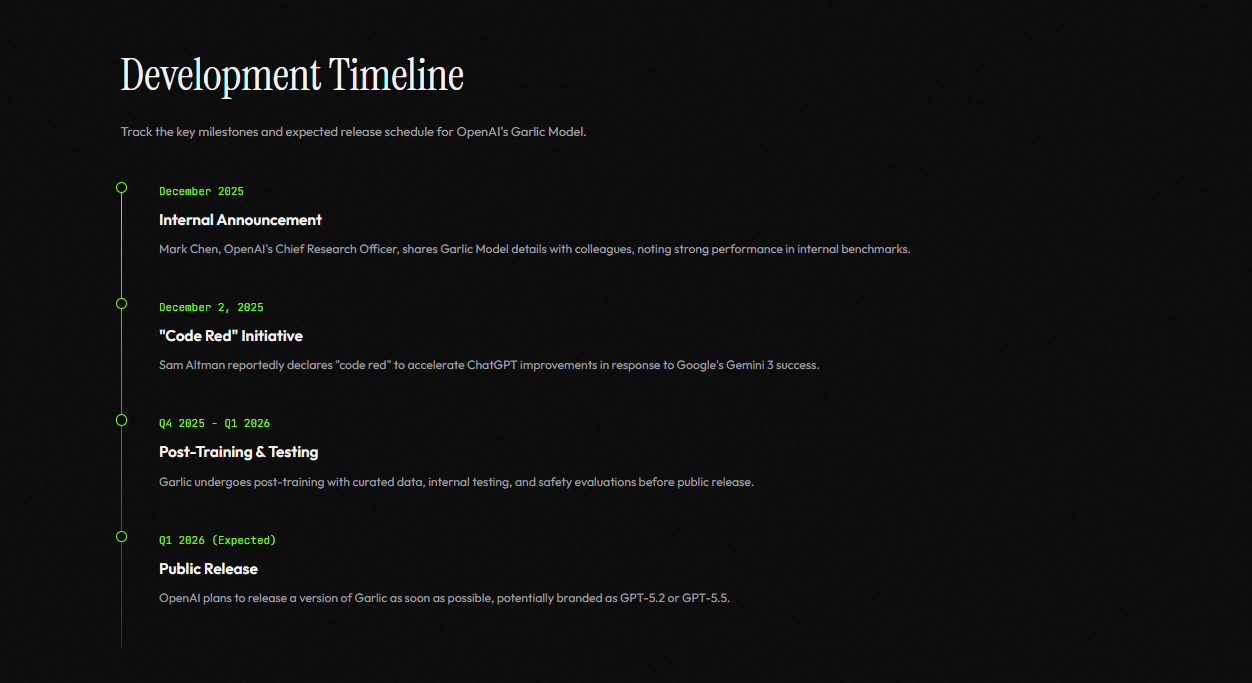

VI. When Can You Actually Use This?

Based on the convergence of leaks, vendor updates and the "Code Red" timeline, here's the most likely release schedule:

Source: Garlic Model.

- Late January 2026 (Imminent):
  - Preview release to select partners and ChatGPT Pro users.
  - Possibly labeled as "GPT-5.3 (Preview)" or quietly rolled out under the existing GPT-5 branding.
- February 2026:
  - Full API availability for developers.
  - Pricing structure announced (expected to be significantly cheaper than GPT-4.5).
- March 2026:
  - Integration into the free tier of ChatGPT (with limited queries).
  - This is OpenAI's counter to Gemini's free accessibility.

The "Code Red" was declared in December 2025 with a directive to ship "as soon as possible". Given that the model is already in final validation, we're talking weeks, not months.

VII. What This Means for You

Garlic removes context limits and agent friction. Developers automate more. Businesses reduce costs. Creators scale output without cleanup.

Workflows become simpler and faster.

Key takeaways

- Less context loss
- Fewer API calls
- More reliable outputs
- Real automation

Efficiency compounds across teams. Let's talk about how this actually plays out once you stop reading and start using it.

| Audience | What They Can Do | Why It Matters |
|---|---|---|
| Developers | - Refactor entire codebases without losing context. - Automate CI/CD with agentic workflows. - Debug complex systems faster. - Generate full test suites instantly. - Build autonomous coding agents. | Less context loss. Fewer manual steps. Real automation instead of brittle scripts. |
| Businesses | - Speed up internal workflows. - Lower cost per task. - Do more with fewer API calls. - Reduce hallucinations in customer-facing use cases. | Higher reliability. Lower spend. Safer AI in production. |
| Entrepreneurs Building AI Products | - Scale without blowing the budget. - Build agents that execute multi-step workflows. - Ship higher-quality AI products. | Easier scaling. Stronger differentiation. Better unit economics. |
| Content Creators & Marketers | - Generate full content calendars at once. - Create long-form content without chunking. - Build assistants that follow complex brand rules. - Automate research and analysis. | Faster output. Better consistency. Less manual cleanup. |

If this model delivers, many of today’s limitations disappear overnight and your work will be faster than ever.

VIII. What This Means for the AI Arms Race

AI has hit an inflection point. Competition between OpenAI, Google and Anthropic is no longer about who builds the biggest model; it’s about who builds the most efficient one.

Garlic is OpenAI’s bet on a different strategy: win with cognitive density instead of raw scale.

The idea is simple: intelligence per dollar matters more than model size. Let me give you three possible scenarios:

- If Garlic succeeds: It shifts the entire industry toward smaller, denser models and pushes API costs down.
- If Garlic disappoints: Google and Anthropic extend their lead and OpenAI is forced into another fast pivot, maybe an even more aggressive "Code Red 2.0" in 6 months.
- If Garlic is just competitive: The three-way race continues, which means rapid iteration, falling prices and better tools for all of us.

No matter the outcome, users win: faster progress, better tools and falling prices.

X. So… Is This All Just Hype?

Anyone paying attention has seen this movie before. We’ve all seen big AI promises that didn’t deliver. So let's address the skepticism head-on.

There are reasons to doubt the noise:

- Benchmarks don't always match real-world use.
- "Code Red" could be marketing theater to generate hype.
- Leaked benchmarks aren't public benchmarks; we haven't seen independent testing.
- OpenAI has a history of overpromising.

But there’s also reason to take this seriously.

- The Information is a reputable source, not some random blog.
- Mark Chen (Chief Research Officer) doesn't share nonsense with employees.
- Developers are already seeing Garlic in internal logs; this is not vaporware.
- The "Code Red" response is unusual; companies don't pause projects lightly.
- The timing makes sense; OpenAI needs a response to Gemini 3 and Claude Opus 4.5 now.

Even if Garlic is only half as good as the rumors say, it's still a major leap forward. You have already seen how capable GPT-5.2 is; even I thought it was the real “Garlic”.

And in a space moving this fast, being six months late is the same as being irrelevant. So whether you believe the hype or not, you need to pay attention.

XI. Conclusion: Are You Ready?

Here's the uncomfortable truth: Someone else is already preparing for this and they’re going to beat you to market if you wait.

So you need to start moving now. If you want to be ready before launch, here’s a short Garlic Readiness Checklist:

- Organize your data: If you want to use that 400K memory, get your documents and codebases cleaned up and ready to upload.
- Build agentic workflows: Start using current models to map out tasks. When Garlic drops, you can plug those maps into a brain that actually knows how to follow them.
- Test your current stack: Benchmark what you are doing now so you can see if Garlic actually saves you money and time.
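For the first item on the checklist, a quick way to start is to estimate how much of your material would even fit in a 400K window. The script below is a rough sketch, not an exact count. It assumes about 4 characters per token, which is only a ballpark for English text (real tokenizers vary, and code often tokenizes less efficiently), and the `reserve` for the prompt and reply is a number I picked for illustration.

```python
import os
from typing import Dict, Iterable

CONTEXT_BUDGET = 400_000  # window size reported in the leak
CHARS_PER_TOKEN = 4       # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Approximate the token count from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def scan(root: str, extensions: Iterable[str] = (".py", ".md", ".txt")) -> Dict[str, int]:
    """Map each matching file under `root` to its estimated token count."""
    exts = tuple(extensions)
    counts = {}
    for folder, _dirs, files in os.walk(root):
        for name in files:
            if name.endswith(exts):
                path = os.path.join(folder, name)
                with open(path, encoding="utf-8", errors="ignore") as handle:
                    counts[path] = estimate_tokens(handle.read())
    return counts


def fits(counts: Dict[str, int], budget: int = CONTEXT_BUDGET, reserve: int = 20_000) -> bool:
    """True if all files plus a reserve for the prompt and reply fit in the budget."""
    return sum(counts.values()) + reserve <= budget


if __name__ == "__main__":
    counts = scan(".")
    total = sum(counts.values())
    print(f"{len(counts)} files, ~{total:,} tokens, fits in window: {fits(counts)}")
    # Show the largest files first: these are the candidates for trimming or splitting.
    for path, tokens in sorted(counts.items(), key=lambda item: item[1], reverse=True)[:5]:
        print(f"{tokens:>8,}  {path}")
```

Run it from the root of a project or document folder. If the total lands well over the budget, the list of largest files tells you where cleanup will pay off first.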

Whether GPT-5.3 "Garlic" ends up being called Garlic, GPT-5.2, GPT-5.5 or something else entirely, the time for big, slow AI is over. The time for smart, efficient AI has arrived.

The only question is: will you be sitting on the sidelines or will you be ready to build? Because when Garlic drops, and it will drop, the people who are ready will have a massive advantage.
